This post has been republished via RSS; it originally appeared at: SQL Server Support articles.

First published on MSDN on Feb 06, 2018Workloads that rely on auto-commit (each operation like an INSERT has an implied, corresponding begin and commit transaction) and perform a large number of small transactions may take significantly longer to complete when run against availability group databases configured for synchronous commit. Aside from an issue with resource latency (IO, CPU or network) this performance impact may be expected.

Synchronous Commit Emphasizes Minimizing Data Loss Over Performance

Synchronous commit availability mode is desirable when minimizing data loss is paramount for your high availability solution and prioritizes Recovery Point Objective over performance.

In the following link, it says this about synchronous commit.

Synchronous-commit mode emphasizes high availability over performance, at the cost of increased transaction latency. Under synchronous-commit mode, transactions wait to send the transaction confirmation to the client until the secondary replica has hardened the log to disk.

This blog will demonstrate how to measure the performance impact on a high transaction rate workload that is dependent on auto-commit behavior in SQL Server and how explicitly defining transactions in that same workload can mitigate the impact and provide better performance.

The Transaction Must be Written at the Primary and The Synchronous Secondary To Commit

To illustrate the impact of synchronous commit, take a simple INSERT command initiated on a database at the primary replica. That insert must be hardened on the transaction log file of the primary replica and of the transaction log file on the secondary replica and then acknowledgement must arrive from both replicas before SQL Server can commit the transaction. When performing hundreds of these commands a second in a workload, the impact performance can be significant.

For more internal details on how synchronous commit works, see the following blog:

AlwaysON – HADRON Learning Series: How does AlwaysON Process a Synchronous Commit Request

Demo – Measure Workload Performance in Synchronous Commit

Let’s demonstrate the impact of auto-commit mode when executing a workload against an availability group database configured for synchronous commit, and how to measure the throughput.

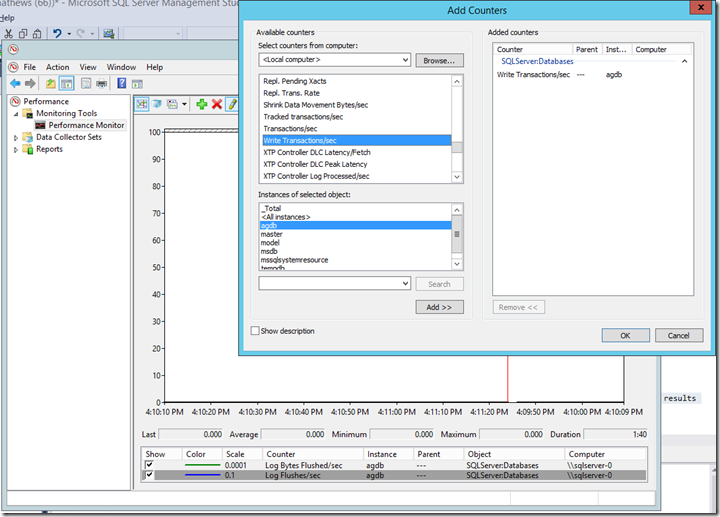

Enable the following counters in performance monitor on the primary replica:

For availability group database agdb in our example:

SQL Server:Databases:Log Bytes Flushed/sec

SQL Server:Databases:Log Flushes/sec

SQL Server:Databases:Write Transactions/sec

SQL Server:Database Replica:Transaction Delay

The following workload inserts 10,000 rows 10 times, and uses auto-commit behavior, which will result in 100,000 transactions. It will report the average time it took to insert 10,000 rows in seconds and the average time it took to insert a single row in milliseconds.

use agdb

godeclare @y int=0

declare @x int=0

declare @starttime datetime

declare @endtime datetime

create table test (col int)

create table results (duration float)while @x<10

begin

Set @starttime =getdate()

while @y<10000begin

insert test (col)

select @y

set @y=@y+1

end

set @endtime =getdate()

insert results (duration)

select datediff(millisecond,@starttime, @endtime)/1000.0

set @x=@x+1

set @y=0

endselect * from results

select avg(duration) as 'avg duration in sec for 10,000 INSERTs', avg(duration)/10 as 'avg duration in ms for each INSERT' from resultsdrop table results

drop table test

After we create our availability group and database, and configure it for synchronous commit with a single secondary replica, we run our workload. The report is that our average INSERT took 4.4 ms to complete the round trip, getting hardened on the log file at the primary and secondary replicas. The batch took 7 minutes 50 seconds to complete.

HADR_SYNC_COMMIT wait type measures the latency when performing transactions in an availability group database when configured for synchronous commit. To reset the instance’s waittype counter values (they accumulate over time), execute the following to clear the data:

DBCC SQLPERF('sys.dm_os_wait_stats' , CLEAR)

Test to demonstrate the severity of this waittype on the instance. There is a large number of these waits reported.

Let’s review the performance monitor counters collected during the batch. Log Bytes Flushed/sec for the database is pretty low, less than 1 mb/sec.

Compare Log Flushes/sec to Write Transactions/sec For every write transaction performed, there is a flush to disk. This is what we would expect in auto-commit mode. Here we see 211 transactions are completing every second and for each transaction there is a corresponding flush to disk, on the database log file at the primary replica and on the database log file at the secondary replica.

|

|

The workload reported ‘avg duration for each transaction in MS’ as 4.4 ms. We can use performance monitor to make a second confirmation calculation that this is accurate: divide one second by an average of 211 Write Transactions performed in a second, which is 1/211=.0047 seconds or 4.7 ms. This number is very similar to the number that our workload results reported. This is the time it took to capture the log change, deliver it to the secondary, write it to the log file on the primary and secondary and report back to the client that it is complete.

Do not Depend on Auto-Commit - Define Explicit Transactions

The batch has been modified with an explicit transaction in the workload in such a way that 10,000 INSERT operations will be committed together. This is much more efficient considering the requirements of synchronous commit, that each transaction requires the hardening of the operation to the log files of each replica and the network round trip needed to accomplish that work. Note the BEGIN TRAN and COMMIT TRAN commands added to the batch and commented.

Run the workload using explicit transactions, observe execution time, estimate transaction performanceuse agdb

godeclare @y int=0

declare @x int=0

declare @starttime datetime

declare @endtime datetime

create table test (col int)

create table results (duration float)while @x<10

begin

Set @starttime =getdate()

begin tran --BEGIN 10000 row transaction

while @y<10000begin

insert test (col)

select @y

set @y=@y+1

end

commit tran --COMMIT 10000 row transaction

set @endtime =getdate()

insert results (duration)

select datediff(millisecond,@starttime, @endtime)/1000.0

set @x=@x+1

set @y=0

endselect * from results

select avg(duration) as 'avg duration in sec for 10,000 INSERTs', avg(duration)/10 as 'avg duration in ms for each INSERT' from resultsdrop table results

drop table test

Our results are almost instantaneous. The results completed in a couple seconds!

Having reset the SQL Server wait counters using DBCC SQLPERF (described earlier) we see HADR_SYNC_COMMIT waits are significantly lower.

Reviewing performance monitor, Log Bytes Flushed/sec spiked to 10.4 mb/sec versus our earlier test in which it averaged less than 1 mb/sec. This shows how much more throughput SQL Server is able to accomplish when not committing a few bytes for individually small transactions.

When comparing Log Flushes/sec to Write Transactions/sec the correlation is not as clear. During batch execution, Log Flushes/sec spike to 181, and Write Transactions are much lower, since the workload itself performed only 10 explicit transactions instead of 100,000.

|

|

Conclusion

Synchronous commit can introduce latency to your high transaction workloads, this can be especially impactful in environments that must complete large ETL batches on the weekends or overnight in order to prepare the data for the next business day. Using explicit transactions can optimize workload performance and still take advantage of the strengths synchronous commit offers (eliminate data loss).