This post has been republished via RSS; it originally appeared at: Running SAP Applications on the Microsoft Platform articles.

First published on MSDN on Oct 27, 2016

Introduction

Over the last year Microsoft has been working with various Global System Integrators to move large global multinational companies from end-of-life proprietary UNIX platforms running Oracle or DB2 to Windows, SQL Server & Azure.

In some of those cases we migrated complex SAP landscapes, sometimes with databases of up to 9TB, from the customers' own datacenters or hosting partners to Azure. Some of the migrated systems represent the largest Production SAP on Public Cloud deployments worldwide as measured by SAPS, number of dialog steps per day and overall landscape size and complexity.

To reduce administration costs, improve support and lower complexity, the Operating System was changed from UNIX to Windows and the Database was changed from DB2 or Oracle to SQL Server 2014.

After migrating to SQL Server 2014 and converting to Column Store, the BW database size was reduced from nearly 9TB to less than 3TB for one customer.

The customers we were working with usually run complex SAP landscapes that would be commonly seen in large global multi-national companies with components like:

SAP ECC, SAP EP, SAP BW, SAP Business Objects, SAP Content Server, SAP SCM, SCM LiveCache and various other SAP and non-SAP standalone engines

1. Start Planning, System Survey & Patching Early

Log on to the Production systems and capture screenshots to establish the peak throughput, patch levels, CPU, Disk & Memory consumption and database size.

In transaction ST03 identify the performance trend (such as month end spikes) and peak dialog steps per day. Take screenshots showing the typical and peak values per hour, day, week and month.

The performance data should be broken out by each task type, in particular Dialog, Background, Update, RFC and http(s)
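As a rough illustration of the survey step, the sketch below summarizes hourly workload figures per task type. The sample rows, their layout and the function name are hypothetical; in practice the numbers would be copied from the ST03 screens by whatever means is convenient.

```python
from collections import defaultdict

# Hypothetical sample: (task_type, hour_label, steps) copied from ST03.
SAMPLES = [
    ("Dialog", "2016-09-30 10:00", 120_000),
    ("Dialog", "2016-09-30 11:00", 185_000),
    ("Background", "2016-09-30 02:00", 40_000),
    ("RFC", "2016-09-30 11:00", 95_000),
    ("RFC", "2016-09-30 12:00", 60_000),
]


def summarize_by_task_type(samples):
    """Return {task_type: {"peak", "peak_hour", "typical"}} for hourly samples."""
    grouped = defaultdict(list)
    for task_type, hour, steps in samples:
        grouped[task_type].append((steps, hour))

    summary = {}
    for task_type, values in grouped.items():
        peak_steps, peak_hour = max(values)  # highest step count wins
        typical = sum(steps for steps, _ in values) / len(values)  # plain mean
        summary[task_type] = {
            "peak": peak_steps,
            "peak_hour": peak_hour,
            "typical": typical,
        }
    return summary


if __name__ == "__main__":
    for task_type, stats in summarize_by_task_type(SAMPLES).items():
        print(task_type, stats)
```

Keeping the typical and peak values side by side per task type makes it easier to spot spikes such as month end processing when sizing the target system.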

Collate an inventory of the SAP landscape and collect the SAP Application, version, patch levels, Database sizes and sizes of the largest tables.

SAP Note 706478 - Preventing Basis tables from increasing considerably should be reviewed to determine if system or log tables can be archived.

Identify unusually large tables as such tables can impact export and import times.

Careful attention should be paid to the non-Netweaver SAP applications such as TREX, Content Server, LiveCache and other standalone engines.

If required, plan early to apply support packs to allow modern releases of SQL Server or another DBMS to be used. At the same time the kernels on the source system should be updated so that they are the same as or similar to the kernel planned for the target system. For example, if the ECC 6.0 system is on an old deprecated 7.40 kernel, update the kernel to the latest 7.45 series kernel on the old UNIX system, thereby avoiding a large change to the kernel layer during the migration weekend.

After compiling an inventory of the current SAP landscape build a PowerPoint summarizing the current state, remediation actions needed such as kernels or support packs and highlight any issues (such as the customer running a desupported SAP application).

This PowerPoint should be used as the starting point for the target system sizing and solution design.

Do not rely on "calculated SAPS" (based on CPU models) or the theoretical SAPS of the Hardware platform. The current Hardware platform may be either oversized (a lot of excess capacity) or undersized (current hardware is over-utilized).

Start developing a naming convention and review other blogs on this blog site discussing sizing. The 2-tier and 3-tier SAPS for Azure VMs can be found here:

SAP Applications on Azure: Supported Products and Azure VM types

2. Use Intel Based Servers to Export UNIX Systems

When it is time to start the first test migrations use Intel based servers to export the databases.

The performance capabilities of UNIX servers are far below that of modern Intel servers, particularly with respect to the very important SAPS per thread metric. SAP Note 1612283 has more information

Running R3load on UNIX servers will significantly slow down the OS/DB Migration. It is recommended to deploy a number of two socket Intel E5v4 2667 (8 core / 3.2GHz) servers as close to the source UNIX servers as possible. Ideally the Intel servers and the UNIX servers should be on the same network switch.

Because of the very high network utilization, VMware is not recommended where it can be avoided; physical servers will provide the best performance. A 10 gigabit network is recommended if this is supported on the UNIX server.

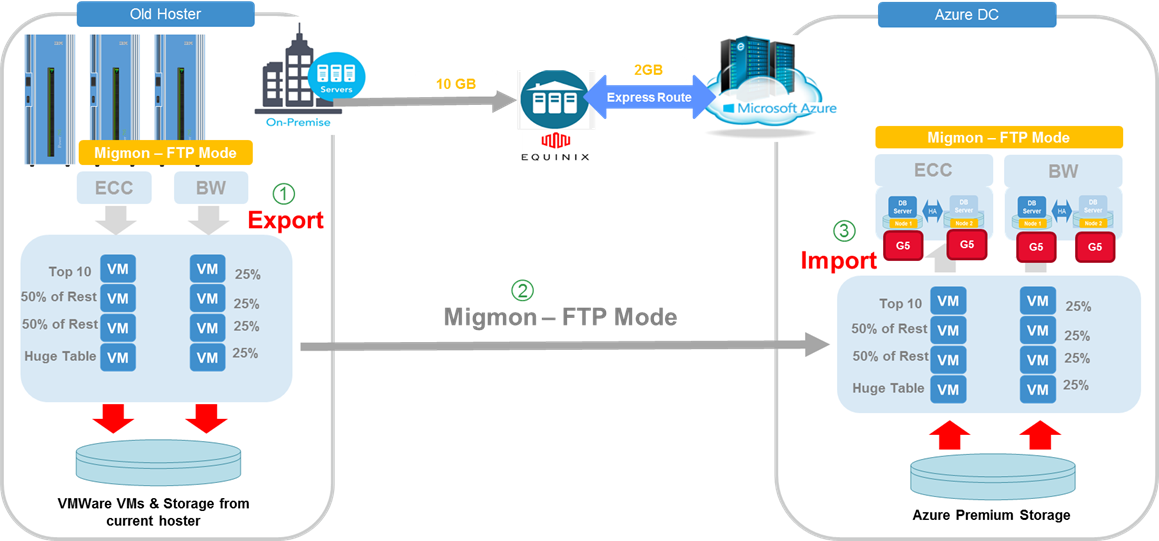

Either Robocopy or the built-in Migmon FTP client can be used to transfer dump files from the source R3load servers at the existing datacenter into Azure.
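Because the dump files have to cross the link into Azure inside the downtime window, it can help to estimate the upload time before the first test cycle. The following is a back-of-the-envelope sketch only; the function name and the `efficiency` factor (the fraction of nominal bandwidth actually achieved) are assumptions, not measured values.

```python
def transfer_hours(dump_size_tb, link_mbit_per_s, efficiency=0.7):
    """Estimate hours needed to copy R3load dump files over a network link.

    dump_size_tb     total size of the dump files in decimal terabytes
    link_mbit_per_s  nominal link bandwidth in megabits per second
    efficiency       assumed fraction of the nominal bandwidth achieved
    """
    if link_mbit_per_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("bandwidth must be positive and efficiency in (0, 1]")
    size_bits = dump_size_tb * 1e12 * 8  # terabytes to bits
    effective_bits_per_s = link_mbit_per_s * 1e6 * efficiency
    return size_bits / effective_bits_per_s / 3600


if __name__ == "__main__":
    # Hypothetical case: 2 TB of dump files over a 1 Gbit/s link.
    print(round(transfer_hours(2, 1000), 1), "hours")
```

The real figure should always be taken from a test migration, since compression, parallel copy streams and latency all change the achieved throughput.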

3. Carefully Document & Plan HA/DR Solution

The Azure platform and SQL Server AlwaysOn provide many built-in High Availability and Disaster Recovery solutions and features. These features are integrated into SAP solutions, and documentation is provided on how to deploy them.

Table showing the HA and DR technology for each SAP component:

| SAP Component | HA Technology | DR Technology | Comment |
| --- | --- | --- | --- |
| SAP AppServer | - | Azure Site Recovery | SAP app server is not a Single Point of Failure and does not need HA |
| SAP ASCS | SIOS | SIOS + Windows Geocluster | SIOS is a shared disk solution that creates a shared disk for sapmnt. See Note 1634991 for Geocluster |
| SQL Server | AlwaysOn | AlwaysOn | Local HA uses AlwaysOn in Synchronous mode. DR uses AlwaysOn in Async mode |
| Standalone Engine File Based | Scale out – no SPOF | Azure Site Recovery | Azure Site Recovery takes a file system consistent clone |
| Standalone Engine DBMS Based | DBMS level replication | DBMS level replication | LiveCache Warm Log Shipping |

4. Use Azure Site Recovery

The Azure platform includes a built in Disaster Recovery solution called Azure Site Recovery. Azure Site Recovery can replicate VMs from on-premises to Azure and also from Azure to Azure.

The Azure to Azure Disaster Recovery scenario is in development as of October 2016 and will be released in the near future.

Azure Site Recovery is discussed in this blog and this site

5. Ensure SMIGR_CREATE_DDL is Updated & Note 888210 is Reviewed Regularly

SAP Note 888210 - NW 7.**: System copy (supplementary note) is the "master" note for heterogeneous system copy.

The program SMIGR_CREATE_DDL is used to handle non-standard tables such as those found on SAP BW systems and is frequently updated. It is critically important to ensure all notes referenced in 888210 are applied on the source system before the export happens.

The OS/DB Migration process for SAP BW systems will not function correctly if SMIGR_CREATE_DDL is not up to date

Review Note 888210 throughout the project and implement all required notes in the source systems (UNIX/DB2 or Oracle). Regularly check this note for changes.

Also review Note 1593998 - SMIGR_CREATE_DDL for MSSQL

6. Use Azure Resource Manager & D Series v2 VMs

All new SAP on Azure deployments should be based on Azure Resource Manager and not the old ASM model

New faster Azure D-Series v2 VMs are now available in most Azure regions with significantly faster 2.4 GHz Intel Xeon E5-2673v3 (Haswell) processors.

These VM types are very beneficial for SAP applications because they have high SAPS/thread

New SAP SD 2-Tier and 3-Tier benchmarks for D-Series v2 are published on the SD Benchmark website

Large database servers can run on Azure G-Series VMs, including the GS5 with 32 CPUs and 448GB of memory, which supports 64 Premium Storage disks, each 1TB in size and with 5,000 IOPS.

The new DS15v2 also supports Accelerated Networking. This is of great benefit during a migration and for busy DB server VMs.

7. Use Premium Storage for Development, QAS and Production

Our general guidance is that all customers should use Premium Storage for the Production DBMS servers and for non-production systems.

Premium Storage should also be used for Content Server, TREX, LiveCache, Business Objects and other IO intensive non-Netweaver file based or DBMS based applications

Premium Storage is of no benefit on SAP application servers

Standard Storage can be used for database backups or storing archive files or interface files

More information can be found in SAP Note 2367194 - Use of Azure Premium SSD Storage for SAP DBMS Instance

8. Use Latest Generally Available Windows Server Release & SQL Server Release

The Azure platform fully supports both Linux and Windows and multiple databases are supported such as Oracle, DB2, Sybase, MaxDB and Hana. Many customers prefer to move to SQL Server to reduce the number of support vendors to just two. The entire technology stack is only SAP and Microsoft, greatly reducing the support requirements

Microsoft has released a Database Trade In Program that allows customers to trade in DB2, Oracle or other DBMS and obtain SQL Server licenses free of charge (conditions apply).

The latest Magic Quadrant for Operational Database Management Systems places SQL Server in the lead.

Windows Server 2016 was launched by Microsoft at Ignite in September 2016.

SQL Server 2016 is already Generally Available and more information can be found in SAP Note 2201059 – Release planning for Microsoft SQL Server 2016

Windows 2012 R2 is released and fully supported by SAP for all modern Netweaver components and most standalone engines (such as LiveCache etc). See SAP Note 1732161 - SAP Systems on Windows Server 2012 (R2)

The list of supported OS/DB combinations for Azure is documented in SAP Note 1928533 - SAP Applications on Azure: Supported Products and Azure VM types

It is strongly recommended to use the latest available SQL Server Service Pack and CU. As a minimum, SQL Server 2014 SP1 CU8 or SQL Server 2016 CU2 is recommended.

9. Create "Flight Plan" for Migration Cutover Weekend

During the Dress Rehearsals establish the expected rate and duration for the export and import.

It is not sufficient just to establish the runtime of the migration. It is also important to plot the "Flight Plan" so that progress can be compared to a known expected value.

The Y Axis represents the number of packages completed successfully and the X Axis represents hours

If there is significant deviation away from the expected export or import progress, troubleshooting can begin very quickly rather than when the project has already exceeded the downtime window.
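A minimal sketch of how such a flight plan could be checked during cutover is shown below. The plan points, the 10% tolerance and the function names are hypothetical; the plan itself would come from the package completion times recorded in the dress rehearsals.

```python
def expected_at(plan, hour):
    """Linearly interpolate the expected completed-package count at `hour`.

    plan is a list of (hour, packages_completed) points from a dress
    rehearsal, sorted by strictly increasing hour.
    """
    if hour <= plan[0][0]:
        return float(plan[0][1])
    for (h0, p0), (h1, p1) in zip(plan, plan[1:]):
        if h0 <= hour <= h1:
            return p0 + (p1 - p0) * (hour - h0) / (h1 - h0)
    return float(plan[-1][1])  # past the end of the plan


def behind_plan(plan, hour, actual_completed, tolerance=0.10):
    """True when actual progress trails the plan by more than `tolerance`."""
    return actual_completed < expected_at(plan, hour) * (1 - tolerance)


# Hypothetical rehearsal data: X axis in hours, Y axis in completed packages.
FLIGHT_PLAN = [(0, 0), (4, 300), (8, 520), (12, 600)]

if __name__ == "__main__":
    print(expected_at(FLIGHT_PLAN, 6))       # expected packages after 6 hours
    print(behind_plan(FLIGHT_PLAN, 6, 350))  # is 350 completed packages too few?
```

Checking progress against the plan every hour or so gives an early signal that troubleshooting is needed while there is still time left in the downtime window.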

10. Use DFS-R for SAP Interface Directories

Windows Server includes a feature called Distributed File System Replication

DFS-R is a solution to provide High Availability for Interface File Systems on Azure.

DFS namespaces use the format \\DomainName\RootName and are typically too long for the SAP kernel to handle

An example might be \\corp.companyname.com\SAPInterface

To avoid this problem it is recommended to make a CNAME in Active Directory DNS

The CNAME maps the namespace to an alias that meets the maximum hostname length SAP supports

For example:

\\corp.companyname.com\SAPInterface -> \\sapdfs\SAPInterface
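The check can be sketched as a small helper that extracts the host part of a UNC path and compares its length with the limit. The 13 character default used here is an assumption based on the commonly cited SAP hostname restriction and should be verified against the current SAP notes; the function names are hypothetical.

```python
MAX_SAP_HOSTNAME_LENGTH = 13  # assumption: verify the limit in the SAP notes


def unc_host(unc_path):
    """Extract the host (or DFS namespace domain) part of a UNC path."""
    if not unc_path.startswith("\\\\"):
        raise ValueError("not a UNC path: " + unc_path)
    return unc_path[2:].split("\\", 1)[0]


def alias_is_usable(unc_path, max_length=MAX_SAP_HOSTNAME_LENGTH):
    """True when the host part is short enough for the assumed SAP limit."""
    return len(unc_host(unc_path)) <= max_length


if __name__ == "__main__":
    for path in (r"\\corp.companyname.com\SAPInterface", r"\\sapdfs\SAPInterface"):
        print(path, alias_is_usable(path))
```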

Additional points:

- Typically Full Mesh replication mode is used.
- DFS-R is not synchronous replication
- Test 3rd party backup utilities with DFS file systems
- Carefully test the security and ACLs on DFS namespaces and resources
- DFS-R should not be used for high performance or high IOPS operations

11. Ensure Azure Monitoring Agents Are Deployed

Azure monitoring agents must be installed to enable SAP support to view Azure Virtual Machine properties in ST06. This is a mandatory requirement for all Azure deployments including non-production deployments.

Details can be found in SAP Note 2015553 - SAP on Microsoft Azure: Support prerequisites

The following PowerShell cmdlets are used to set up the Azure monitoring extension:

Get-AzureRmVMAEMExtension

Remove-AzureRmVMAEMExtension

Set-AzureRmVMAEMExtension

Test-AzureRmVMAEMExtension

12. Start Performance Testing & Validation Early

Performance testing activities are broken into two main areas:

- Performance of the OS/DB Migration Export, upload to Azure and Import process
- Performance testing of the SAP applications

The SAP OS/DB Migration process is highly tunable and can be optimized extensively. Test cycles can be completed with different package and table split combinations and the export.html and import.html reviewed. Based on this large Global Multinational customer and other large customers we can say the following:

- Huge systems with multiple >8-10TB databases, many TB of R3load dump files and many complex non-SAP interfaces and 3rd party systems can be moved to Azure in about 72 hours
- Typical systems with multiple 3-7TB databases, 1-2 TB of R3load dump files and some interfaces and non-SAP applications can be moved in 48 hours
- Small to Medium sized customers with 1-3TB databases, 1TB of R3load dump files and 3-4 non-SAP applications can move to Azure in 24-36 hours

It is common to find Index Build tasks with very long runtimes. On larger migrations it is common to remove several index build packages from the downtime phase of the migration. The indexes can then be built during the post-processing phase of the migration (when RFCs are being changed, TMS is being set up etc).
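One simple way to decide which index builds to move out of the downtime phase is to apply a runtime budget to the timings measured in a dress rehearsal. The sketch below is illustrative only: the package names, runtimes and the 60 minute budget are hypothetical, and the actual removal of index tasks is done in the migration tooling, not by this code.

```python
def split_index_builds(index_runtimes, budget_minutes):
    """Split index build tasks into (in_downtime, deferred).

    index_runtimes maps a package name to its index build runtime in
    minutes, as measured in a dress rehearsal. Builds that run longer
    than budget_minutes are deferred to the post-processing phase.
    """
    in_downtime = sorted(
        name for name, minutes in index_runtimes.items() if minutes <= budget_minutes
    )
    deferred = sorted(
        name for name, minutes in index_runtimes.items() if minutes > budget_minutes
    )
    return in_downtime, deferred


if __name__ == "__main__":
    # Hypothetical rehearsal timings in minutes.
    rehearsal = {"COEP": 190, "BSEG": 240, "MARA": 12}
    print(split_index_builds(rehearsal, budget_minutes=60))
```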

Performance testing of the SAP applications is a more involved process for very large and complex migrations. Most customer migrations of 3-5TB sized databases do not require an extensive performance test.

Performance testing for large global multinational companies begins with collecting an inventory of the top 10-30 online reports, batch jobs, BW process chains, BW reports and interface uploads/downloads. During the migration project it is recommended to perform a "clone of production" migration (Production databases are copied to another set of servers and exported) or an actual export of production (a "Dry Run"). These full data volume copies of production can be used for performance testing. Customers with large BW landscapes should set up a clone of production BW and several source systems such as ECC.

13. Document OS/DB Migration Configuration & Design (Only Needed for >3TB Databases)

A comprehensive OS/DB Migration FAQ is published on this blog. Make sure to download the most recent version of this document.

Large and complex OS/DB migrations should have a "Migration Design Document". Typically this is a PowerPoint. As each Migration Test is performed the Export.html and Import.html should be added as an appendix so the impacts of tunings can be reviewed.

Errors, failed packages and other abnormal events should be documented in the appendix too

Recommended parameters to include in the Migration Design Document:

Package Splitting and Table Splitting Design – how many splits on large tables etc

Number of r3load processes for export and import

Resource Governor memory cap – typically around 5%

Number of SQL Server Datafiles and the distribution onto disks

Number and type of Premium Storage disks

Consider placing the SQL Server Transaction Log file on D: (temporary disk)

Supplementary Log file on additional P30(s)

Perfmon Logging recommended counter set:

- BCP rows/sec and BCP throughput kb/sec
- SQL Server Log Usage, Memory Usage
- Processor Information – CPU per each individual thread
- Disk – ms/read and ms/write for each disk

Collect every 45 seconds or 90 seconds and graph in Excel

Receiver Side Scaling – test setting this on and off on DB server

BCP Batch size – Review this blog and test values to determine best performance

Buffer Pool Extension setup and configuration – typically not used during OS/DB migration

Database Recovery Mode - SIMPLE

Transparent Data Encryption – we typically recommend importing into a TDE enabled database

SQL Server Settings – MAXDOP, Max/Min Memory size

Traceflags set during import

CPU consumption graph on r3load servers and database server during Export and Import

SMIGR CREATE DDL configuration (such as Column Store settings)
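For the Perfmon counter set listed above, a small script can produce the per-counter averages and maxima that go into the appendix, as an alternative to graphing by hand. This is a sketch under assumptions: it expects a CSV export whose first column is the timestamp and whose remaining columns are one counter each, and the counter names in the sample are hypothetical.

```python
import csv
import io


def summarize_counters(csv_text):
    """Return {counter_name: (average, maximum)} for a Perfmon-style CSV export.

    Assumes the first column holds the timestamp and every other column
    holds one counter; blank or non-numeric samples are skipped.
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    values = {name: [] for name in header[1:]}
    for row in reader:
        for name, cell in zip(header[1:], row[1:]):
            try:
                values[name].append(float(cell))
            except ValueError:
                continue  # missing sample in this interval
    return {
        name: (sum(samples) / len(samples), max(samples))
        for name, samples in values.items()
        if samples
    }


# Hypothetical export sampled every 45 seconds.
SAMPLE = '''"Time","Disk ms/read","Disk ms/write"
"10:00:00","4.0","2.0"
"10:00:45","6.0"," "
"10:01:30","20.0","4.0"
'''

if __name__ == "__main__":
    for counter, (average, maximum) in summarize_counters(SAMPLE).items():
        print(counter, "avg", average, "max", maximum)
```

Recording the same summary after every test cycle makes it easier to see whether a tuning change moved the bottleneck from disk to CPU or the other way round.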

14. Support Agreements and Azure Rapid Response

For very large OS/DB Migrations or Azure projects it is strongly recommended to have a Microsoft Premier Support agreement or additionally use Azure Rapid Response.

More information about Azure Support plans can be found here:

https://azure.microsoft.com/en-us/support/plans/

https://www.microsoft.com/en-us/microsoftservices/premier_support_microsoft_azure.aspx

Azure Rapid Response offers a 15 minute callback for urgent support topics.

15. Use SQL Server 2014 or 2016 to Replace Business Warehouse Accelerator (BWA)

A specific blog for SAP BW and the learnings out of this project will follow later.

SQL Server has had a built-in Column Store feature since SQL Server 2012. All customers moving to Azure have decommissioned SAP BWA and replaced these appliances with SQL Server Column Store.

Comprehensive documentation and blogs are available on SQL Server Column Store. The SQL Server Column Store implementation is designed for large scale data warehouse deployments.

16. Use SQL Server Transparent Data Encryption, Azure Advanced Disk Encryption & Network Security Groups to Secure Solution

SQL Server supports Transparent Data Encryption and this feature is frequently used by Cloud customers. SQL Server TDE integrates with Azure Key Vault natively in SQL Server 2016 and via a free utility on SQL Server 2014 and earlier.

TDE guarantees that database backups are secured in addition to protecting the "at rest" data.

SQL Server TDE supports common protocols for encryption. We generally recommend AES-256

Testing on customer systems has shown that it is faster to import directly into an empty already Encrypted database than to apply TDE after the database import.

The overhead of importing into a TDE database is approximately 5% CPU

Therefore it is recommended to follow this sequence:

- Ensure the Perform Volume Maintenance Tasks privilege is assigned to the SQL Server Service Account to allow Instant File Initialization (datafiles can be created quickly, but log files must still be written to and zeroed out)
- Create a database of the desired size (for example, for a 7.2TB database, a database of approximately 8TB would be created)
- Create a very large transaction log, as a lot of log space will be consumed during the import
- Configure Azure Key Vault and TDE, then monitor the database encryption status and percent complete. The status can be found in sys.dm_database_encryption_keys
- When the encryption status (encryption_state) = 3, the R3load import can start
- When the import and post-processing have finished, create a backup
- Restore the backup on the replica node(s) and configure AlwaysOn
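The wait for encryption to complete in the sequence above can be expressed as a simple gate. The sketch below only builds the status query and interprets its result; it does not connect to SQL Server, and the database name `PRD` and the function name are hypothetical. The rows would come from running the query through whichever database driver is in use.

```python
ENCRYPTED = 3  # encryption_state value that means "encrypted"

# Query against the DMV; column names are those of sys.dm_database_encryption_keys.
STATUS_QUERY = (
    "SELECT DB_NAME(database_id) AS database_name, "
    "encryption_state, percent_complete "
    "FROM sys.dm_database_encryption_keys"
)


def ready_for_import(rows, database_name):
    """Return True when the target database reports encryption_state = 3.

    rows is an iterable of (database_name, encryption_state, percent_complete)
    tuples, for example the result set of STATUS_QUERY.
    """
    for name, state, _percent_complete in rows:
        if name == database_name:
            return state == ENCRYPTED
    return False  # no key row for this database: TDE is not enabled yet


if __name__ == "__main__":
    # Hypothetical result sets while encryption is running and after it ends.
    print(ready_for_import([("PRD", 2, 41.5)], "PRD"))
    print(ready_for_import([("PRD", 3, 0.0)], "PRD"))
```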

The Azure platform also supports Disk Encryption. This technology is similar to Windows BitLocker and can be used to encrypt the VHDs that are used by a VM.

Note: it is not necessary or beneficial to use Azure Disk Encryption and SQL Server TDE at the same time. We recommend against storing SQL Server data and log files that have been encrypted with TDE on disks that have been encrypted with ADE. Using both SQL Server TDE and ADE can cause performance problems

The Azure networking platform supports creating ACLs on servers and subnets. Network Security Groups can be used to enforce rules to allow or disallow specific IP addresses and ports.
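To illustrate how such rules behave, the sketch below models priority-ordered evaluation in plain Python: rules are checked from the lowest priority number upwards and the first match decides. It is a simplified local model, not an Azure API; the rule set, subnets and port are hypothetical, and the final "deny" stands in for the platform's default rules.

```python
import ipaddress
from collections import namedtuple

# priority: lower number is evaluated first; source: CIDR range.
Rule = namedtuple("Rule", "priority source port allow")


def is_allowed(rules, source_ip, port):
    """Evaluate rules in priority order; the first matching rule decides."""
    address = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        if address in ipaddress.ip_network(rule.source) and port == rule.port:
            return rule.allow
    return False  # simplification: nothing matched, treat as denied


# Hypothetical rules: only the SAP application subnet may reach SQL Server.
RULES = [
    Rule(priority=200, source="10.0.0.0/16", port=1433, allow=False),
    Rule(priority=100, source="10.0.1.0/24", port=1433, allow=True),
]

if __name__ == "__main__":
    print(is_allowed(RULES, "10.0.1.5", 1433))  # application subnet
    print(is_allowed(RULES, "10.0.2.5", 1433))  # other subnet in the VNet
```

Placing the narrow allow rule at a lower priority number than the broad deny rule is what lets the application subnet through while the rest of the address space is blocked.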

Content from third party websites, SAP and other sources reproduced in accordance with Fair Use criticism, comment, news reporting, teaching, scholarship, and research