First published on Mar 20, 2017

We have listened to your wonderful feedback on EH Archive blob naming conventions and have now designed a simpler naming pattern, while at the same time capturing the essentials required for identifying the source of the archived blob.

So, what is the blob-naming pattern then?

In short, it could be any

name that Azure Storage

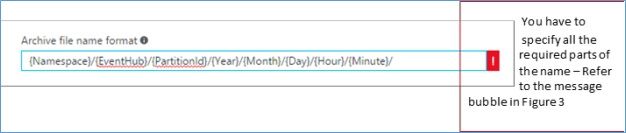

allows if it has the following required parameters – {namespace}, {EventHub}, {PartitionId}, {Year}, {Month},{Day},{Hour},{Minute} and {Second} in any order and with or without any delimiters support for Azure Storage Blob. We need all these parameters to ensure the uniqueness of the files created.

The portal now features a text box that checks that all of the required parameters are present as you compose an Azure Storage blob name pattern that fits your needs. As you type, it also evaluates the parameters against your event hub, namespace and partition ID, so that you see a realistic example of a blob name.
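To make the validation rule concrete, here is a minimal Python sketch of the kind of check described above. It is not the portal's actual implementation; the function names `missing_parameters` and `is_valid_pattern` are hypothetical, and the sketch only checks that the required parameters are present, not whether the rest of the name is acceptable to Azure Storage.

```python
import re

# Required placeholders as listed in this post; compared case-insensitively.
REQUIRED = ("namespace", "eventhub", "partitionid",
            "year", "month", "day", "hour", "minute", "second")


def missing_parameters(pattern):
    """Return the required parameters that do not appear in the pattern."""
    # Collect every {Name} placeholder and normalize it to lower case.
    found = {name.lower() for name in re.findall(r"\{(\w+)\}", pattern)}
    return [name for name in REQUIRED if name not in found]


def is_valid_pattern(pattern):
    """A pattern passes this check only if no required parameter is missing."""
    return not missing_parameters(pattern)
```

A pattern such as `{Namespace}/{EventHub}/{Year}` would fail this check, and `missing_parameters` would report the six placeholders that still need to be added.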

Figure 1: Select from the sample file format specified

Figure 2: Build your own custom file name

Figure 3: The message bubble guiding you to build the file name

Once you have chosen the format for your file names, a sample is displayed in the portal, as shown below:

Figure 4: The format you have chosen and an example

These mandatory parameters are case-insensitive and can appear in any order. Some file naming examples could be:

myuniqueblobpattern/{Namespace}/{EventHub}/{PartitionId}/{year}{month}{day}/{hour}{minute}{second}/

Or

{year}{month}{day}/{hour}{minute}{second}/myuniqueblobpattern/{Namespace}/{EventHub}/{PartitionId}
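To see how a pattern like these could turn into a concrete blob name, the following Python sketch substitutes sample values for each parameter. The function `render_blob_name`, the sample namespace and event hub names, and the zero-padded date and time formatting are all assumptions made for illustration; the service's actual formatting may differ.

```python
import re
from datetime import datetime


def render_blob_name(pattern, namespace, event_hub, partition_id, when):
    """Fill in the required parameters, matching names case-insensitively."""
    values = {
        "namespace": namespace,
        "eventhub": event_hub,
        "partitionid": str(partition_id),
        # Zero padding is an assumption of this sketch.
        "year": f"{when.year:04d}",
        "month": f"{when.month:02d}",
        "day": f"{when.day:02d}",
        "hour": f"{when.hour:02d}",
        "minute": f"{when.minute:02d}",
        "second": f"{when.second:02d}",
    }

    def substitute(match):
        # Unknown placeholders are left untouched rather than guessed at.
        return values.get(match.group(1).lower(), match.group(0))

    return re.sub(r"\{(\w+)\}", substitute, pattern)


example = render_blob_name(
    "myuniqueblobpattern/{Namespace}/{EventHub}/{PartitionId}/"
    "{year}{month}{day}/{hour}{minute}{second}/",
    namespace="mynamespace",      # hypothetical namespace name
    event_hub="myhub",            # hypothetical event hub name
    partition_id=0,
    when=datetime(2017, 3, 20, 14, 5, 9),
)
print(example)  # myuniqueblobpattern/mynamespace/myhub/0/20170320/140509/
```

Because the lookup lowercases each placeholder, `{Year}`, `{year}` and `{YEAR}` are all treated the same way, mirroring the case-insensitivity described above.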

Go ahead and try out different combinations in the text box!

We would love to hear your feedback on these improvements!