This post has been republished via RSS; it originally appeared at: Containers articles.

First published on TECHNET on May 05, 2016. Actual Author: Jason Messer

All of the technical documentation corresponding to this post is available here.

There is a lot of excitement and energy around the introduction of Windows containers and Microsoft’s partnership with Docker. For Windows Server Technical Preview 5, we invested heavily in the container network stack to better align with the Docker management experience, and we brought our own networking expertise to add new features and capabilities for Windows containers! This article describes the Windows container networking stack, how to attach your containers to a network using Docker, and how Microsoft is making containers first-class citizens in the modern datacenter with Microsoft Azure Stack.

Introduction

Windows containers can host all sorts of applications, from web servers running Node.js to databases and video streaming. These applications all require network connectivity to expose their services to external clients. So what does the network stack look like for Windows containers? How do we assign an IP address to a container or attach a container endpoint to a network? How do we apply advanced network policy, such as maximum bandwidth caps or access control list (ACL) rules, to a container?

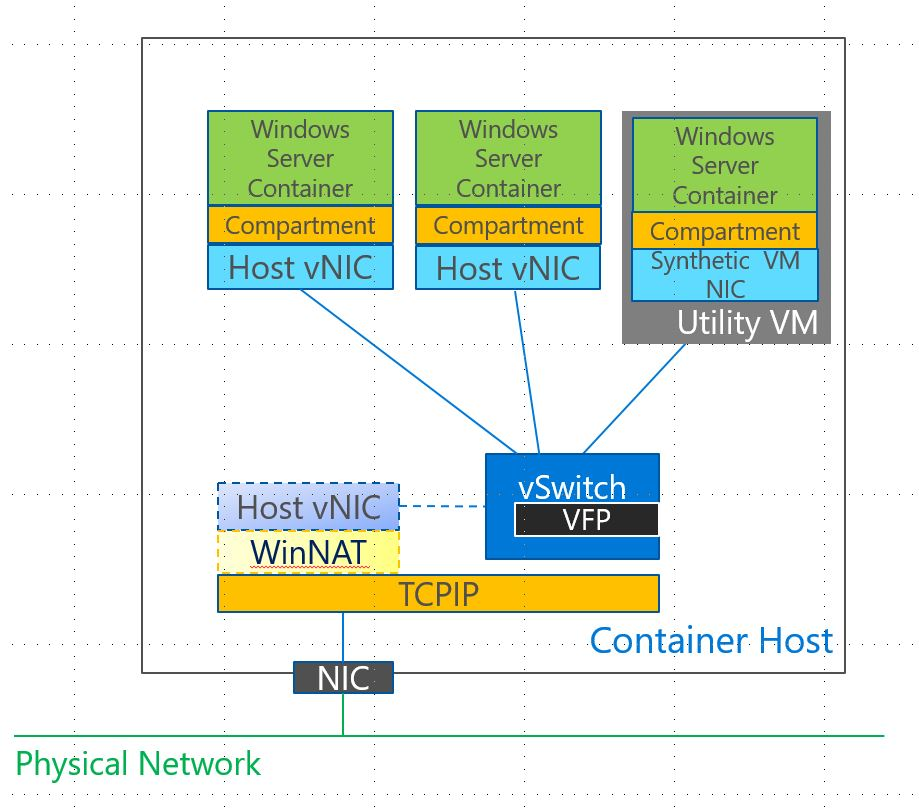

Let’s dive into this topic by first looking at a picture of the container’s network stack in Figure 1.

Figure 1 – Windows Container Network Stack

All containers run inside a container host, which could be a physical server, a Windows client, or a virtual machine. We assume the container host already has network connectivity through a physical NIC (Wi-Fi or Ethernet), which it needs to extend to the containers themselves. The container host uses a Hyper-V virtual switch (vSwitch) to provide this connectivity and connects each container to it using either a Host virtual NIC (Windows Server Containers) or a Synthetic VM NIC (Hyper-V Containers). Compare this with Linux containers, which use a bridge device instead of the Hyper-V virtual switch, and veth pairs instead of vNICs / vmNICs, to provide this basic Layer-2 (Ethernet) connectivity.
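As a compact summary of the Layer-2 attachment model just described, the sketch below records which switch and which per-container adapter each kind of container uses. It is a hypothetical lookup table written for illustration only (the `L2Attachment` class and the runtime labels are invented names), not an API exposed by Windows or Docker.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class L2Attachment:
    switch: str   # device providing Layer-2 switching on the container host
    adapter: str  # per-container virtual adapter plugged into that switch


# Hypothetical labels summarizing the attachment model described in the text.
ATTACHMENTS = {
    "windows-server-container": L2Attachment("Hyper-V virtual switch", "Host vNIC"),
    "hyper-v-container": L2Attachment("Hyper-V virtual switch", "Synthetic VM NIC"),
    "linux-container": L2Attachment("Linux bridge", "veth pair"),
}


def describe(kind: str) -> str:
    """Return a one-line description of how this kind of container gets Layer-2 connectivity."""
    a = ATTACHMENTS[kind]
    return f"{kind}: {a.adapter} -> {a.switch}"


for kind in ATTACHMENTS:
    print(describe(kind))
```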

The Hyper-V virtual switch alone does not make network services running in a container accessible from the outside world, however. We also need Layer-3 (IP) connectivity to correctly route packets to their intended destination. On top of IP, we need transport protocols such as TCP and UDP to address a specific service running in a container by port number (e.g. TCP port 80 is typically used to reach a web server). Additional Layer 4-7 services such as DNS, DHCP, HTTP, and SMB are also required for containers to be useful. All of these options and more are supported by Windows container networking.

Docker Network Configuration and Management Stack

New in Windows Server Technical Preview 5 (TP5) is the ability to set up container networking using the Docker client and the Docker engine’s RESTful API. Network configuration settings can be specified either when the container network is created or when the container is created, depending on the scope of the setting. Refer to the MSDN article for more information.
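To make the network-scope versus container-scope distinction concrete, here is a minimal sketch that only builds the JSON bodies for two Docker Engine API calls, `POST /networks/create` and `POST /containers/create`; it sends nothing. The field names (`Driver`, `IPAM`, `HostConfig`, `NetworkMode`, `PortBindings`, `ExposedPorts`) come from the Docker Engine API, while the network name, image name, subnet, and gateway are placeholders. Which of these fields the Windows drivers in TP5 actually honor is an assumption worth checking against the MSDN documentation.

```python
import json


def network_create_body(name, driver, subnet=None, gateway=None):
    """Body for POST /networks/create: settings scoped to the whole network."""
    body = {"Name": name, "Driver": driver}
    if subnet:
        ipam_config = {"Subnet": subnet}
        if gateway:
            ipam_config["Gateway"] = gateway
        body["IPAM"] = {"Config": [ipam_config]}
    return body


def container_create_body(image, network, container_port, host_port, proto="tcp"):
    """Body for POST /containers/create: settings scoped to one container."""
    key = f"{container_port}/{proto}"
    return {
        "Image": image,
        "ExposedPorts": {key: {}},
        "HostConfig": {
            "NetworkMode": network,  # attach to the network created above
            "PortBindings": {key: [{"HostPort": str(host_port)}]},
        },
    }


# Placeholder names and addresses, for illustration only.
net = network_create_body("MyNatNetwork", "nat", subnet="172.16.0.0/24", gateway="172.16.0.1")
ctr = container_create_body("example/web", "MyNatNetwork", 80, 8080)
print(json.dumps(net, indent=2))
print(json.dumps(ctr, indent=2))
```

The same split shows up on the command line: the driver and subnet are passed to `docker network create`, while port bindings are passed to `docker run`.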

The Windows container network management stack uses Docker as the management surface and the Windows Host Network Service (HNS) as a servicing layer to create the network “plumbing” underneath (e.g. vSwitch, WinNAT, etc.). The Docker engine communicates with HNS through a network plug-in (libnetwork). Refer to Figure 2 for the updated management stack.

Figure 2 – Management Stack

Because this Docker network plug-in interfaces with the Windows network stack through HNS, users no longer have to create their own static port mappings or custom Windows Firewall rules for NAT; these are created automatically.

Example: Create static Port Mapping through Docker

Note: the NetNatStaticMapping (and firewall rule) is created automatically.
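Before HNS handled this, publishing a container port meant creating the NAT mapping yourself. The sketch below translates a port spec in the simple `hostPort:containerPort[/proto]` form of Docker's `-p` option into a roughly equivalent `Add-NetNatStaticMapping` PowerShell command. The NAT name `ContainerNAT`, the container IP, and the `0.0.0.0` external address are illustrative assumptions; the firewall rule is not modeled, and Docker's other `-p` forms (host IP prefixes, port ranges) are deliberately rejected.

```python
import re

# Only the simple "hostPort:containerPort[/proto]" form is handled here.
PORT_SPEC = re.compile(r"^(\d+):(\d+)(?:/(tcp|udp))?$")


def parse_port_spec(spec):
    """Parse a docker -p value into (host_port, container_port, protocol)."""
    m = PORT_SPEC.match(spec)
    if not m:
        raise ValueError(f"unsupported port spec: {spec!r}")
    host, container, proto = m.groups()
    return int(host), int(container), (proto or "tcp")  # Docker defaults to TCP


def nat_mapping_command(nat_name, container_ip, spec):
    """Build the Add-NetNatStaticMapping command a user once had to run by hand."""
    host, container, proto = parse_port_spec(spec)
    return (
        f"Add-NetNatStaticMapping -NatName {nat_name} -Protocol {proto.upper()} "
        f"-ExternalIPAddress 0.0.0.0 -ExternalPort {host} "
        f"-InternalIPAddress {container_ip} -InternalPort {container}"
    )


print(nat_mapping_command("ContainerNAT", "172.16.0.2", "8080:80"))
```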

Networking Modes

Windows containers will attach to a container host network using one of four different network modes (or drivers). The networking mode used determines how the containers will be accessible to external clients, how IP addresses will be assigned, and how network policy will be enforced.

Each of these networking modes uses an internal or external VM Switch, created automatically by HNS, to connect containers to the container host’s physical (or virtual) network. Briefly, the four networking modes and their recommended usage are listed below. Please refer to the MSDN article (here) for more in-depth information about each mode:

- NAT – this is the default network mode and attaches containers to a private IP subnet. This mode is quick and easy to use in any environment.

- Transparent – this networking mode attaches containers directly to the physical network without performing any address translation. Use this mode with care as it can quickly cause problems in the physical network when too many containers are running on a particular host.

- L2 Bridge / L2 Tunnel – these networking modes should usually be reserved for private and public cloud deployments when containers are running on a tenant VM.
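One way to read the guidance above is as a small decision procedure. The sketch below encodes it; the `Mode` values are the Docker driver names used for these modes on Windows (`nat`, `transparent`, `l2bridge`, `l2tunnel`), but `pick_mode` and its parameters are hypothetical. Choosing L2 Tunnel when SDN policy is needed follows the SDN scenario described later in this post; defaulting to L2 Bridge otherwise is an assumption.

```python
from enum import Enum


class Mode(Enum):
    # Values are the Docker driver names for each mode on Windows.
    NAT = "nat"
    TRANSPARENT = "transparent"
    L2BRIDGE = "l2bridge"
    L2TUNNEL = "l2tunnel"


def pick_mode(on_tenant_vm: bool, needs_physical_network: bool, sdn_policy: bool = False) -> Mode:
    """Hypothetical helper encoding the recommended usage of each networking mode."""
    if on_tenant_vm:
        # Private/public cloud deployments on a tenant VM. L2 Tunnel forwards container
        # traffic to the physical server's vSwitch, where SDN policy can be enforced.
        return Mode.L2TUNNEL if sdn_policy else Mode.L2BRIDGE
    if needs_physical_network:
        # No address translation: containers appear directly on the physical network,
        # so use with care on hosts running many containers.
        return Mode.TRANSPARENT
    return Mode.NAT  # the default: a private subnet behind NAT


print(pick_mode(on_tenant_vm=False, needs_physical_network=False).value)
print(pick_mode(on_tenant_vm=True, needs_physical_network=False, sdn_policy=True).value)
```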

Note: The “NAT” VM Switch Type will no longer be available in Windows Server 2016 or Windows 10 client builds. NAT container networks can be created by specifying the “nat” driver in Docker or NAT Mode in PowerShell.

Example: Create Docker ‘nat’ network

Notice how the VM Switch and NetNat are created automatically.
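For a sense of what “created automatically” saves you, the following sketch lists PowerShell commands that approximate the plumbing behind a NAT network: an internal VM switch, a host-side gateway address, and a NetNat object covering the container subnet. The cmdlets (`New-VMSwitch`, `New-NetIPAddress`, `New-NetNat`) are real, but the switch name, the subnet, the choice of the first usable address as the gateway, and the `vEthernet (<switch>)` interface alias are assumptions; the exact steps HNS performs may differ.

```python
import ipaddress


def nat_plumbing_commands(switch_name, subnet):
    """Return PowerShell commands approximating the NAT plumbing HNS creates automatically."""
    net = ipaddress.ip_network(subnet)  # raises ValueError on a malformed prefix
    if not net.is_private:
        raise ValueError(f"{subnet} is not a private range; NAT networks should use one")
    # Assumption: the first usable address becomes the host-side gateway.
    gateway = next(net.hosts())
    return [
        f"New-VMSwitch -Name {switch_name} -SwitchType Internal",
        # Assumption: the host vNIC created with the switch is named "vEthernet (<switch>)".
        f"New-NetIPAddress -IPAddress {gateway} -PrefixLength {net.prefixlen} "
        f'-InterfaceAlias "vEthernet ({switch_name})"',
        f"New-NetNat -Name {switch_name} -InternalIPInterfaceAddressPrefix {net.with_prefixlen}",
    ]


for cmd in nat_plumbing_commands("ContainerNAT", "172.16.0.0/24"):
    print(cmd)
```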

Container Networking + Software Defined Networking (SDN)

Containers are increasingly becoming first-class citizens in the datacenter and enterprise alongside virtual machines. IaaS cloud tenants or enterprise business units need to be able to programmatically define network policy (e.g. ACLs, QoS, load balancing) for both VM network adapters and container endpoints. The Software Defined Networking (SDN) stack (TechNet topic) in Windows Server 2016 allows customers to do just that, creating network policy for a specific container endpoint through the Windows Network Controller using PowerShell scripts, SCVMM, or the new Azure Portal in Microsoft Azure Stack.

In a virtualized environment, the container host is a virtual machine running on a physical server. The Network Controller sends policy down to a Host Agent running on the physical server over standard southbound channels (e.g. OVSDB). The Host Agent then programs this policy into the VFP extension in the vSwitch on the physical server, where it is enforced. This network policy is specific to an IP address (i.e. a container endpoint), so even though multiple container endpoints are attached through a container host VM using a single VM network adapter, network policy can still be defined granularly.
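The point that policy is keyed by IP address rather than by VM network adapter can be illustrated with a toy lookup. In the hypothetical sketch below (the classes, addresses, default-deny behavior, and first-match rule semantics are all invented for illustration and are not VFP’s data model), two container endpoints sit behind the same VM NIC yet receive different ACLs because the table is indexed by destination IP.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AclRule:
    action: str    # "allow" or "deny"
    protocol: str  # "tcp" or "udp"
    port: int


@dataclass
class EndpointPolicy:
    acl: list = field(default_factory=list)  # rules evaluated in order; first match wins
    default_action: str = "deny"


# Hypothetical table: both endpoints sit behind the same container host VM NIC,
# but each has its own policy because the key is the container IP address.
POLICIES = {
    ipaddress.ip_address("10.0.1.5"): EndpointPolicy([AclRule("allow", "tcp", 80)]),
    ipaddress.ip_address("10.0.1.6"): EndpointPolicy([AclRule("allow", "tcp", 1433)]),
}


def evaluate(dst_ip: str, protocol: str, port: int) -> str:
    """Return the action applied to a packet destined for dst_ip:port."""
    policy = POLICIES.get(ipaddress.ip_address(dst_ip))
    if policy is None:
        return "deny"  # assumption: traffic to unknown endpoints is dropped
    for rule in policy.acl:
        if rule.protocol == protocol and rule.port == port:
            return rule.action
    return policy.default_action


print(evaluate("10.0.1.5", "tcp", 80))
print(evaluate("10.0.1.6", "tcp", 80))
```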

Using the L2 tunnel networking mode, all container network traffic from the container host VM is forwarded to the physical server’s vSwitch. The VFP forwarding extension in this vSwitch enforces the policy received from the Network Controller and from higher levels of the Azure Stack (e.g. Network Resource Provider, Azure Resource Manager, Azure Portal). Refer to Figure 3 to see how this stack looks.

Figure 3 – Containers attaching to SDN overlay virtual network

This allows containers to join overlay virtual networks (e.g. VxLAN) created by individual cloud tenants, so they can communicate across multi-node clusters and with other VMs, as well as receive network policy.
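The post does not describe the overlay encapsulation itself, but VxLAN is a published standard (RFC 7348), so its 8-byte header can be sketched directly: a flags byte with the “I” bit set, 24 reserved bits, a 24-bit VXLAN Network Identifier (VNI) that distinguishes tenant networks, and 8 more reserved bits. This sketch builds and parses only that header around an inner Ethernet frame; the outer UDP/IP/Ethernet headers are omitted and the VNI value is arbitrary. It illustrates the standard format, not the Windows implementation.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header from RFC 7348 for a 24-bit VNI."""
    if not 0 <= vni < (1 << 24):
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits). Word 2: VNI (24 bits) + reserved (8 bits).
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix a tenant's Ethernet frame with a VXLAN header (outer UDP/IP headers omitted)."""
    return vxlan_header(vni) + inner_frame


def decapsulate(udp_payload: bytes) -> tuple:
    """Split a VXLAN UDP payload back into (vni, inner_frame)."""
    if len(udp_payload) < 8:
        raise ValueError("payload shorter than a VXLAN header")
    flags_word, vni_word = struct.unpack("!II", udp_payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8, udp_payload[8:]


print(vxlan_header(5001).hex())  # 0800000000138900
```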

Future Goodness

We will continue to innovate in this space not only by adding code to the Windows OS but also by contributing code to the open source Docker project on GitHub. We want Windows container users to have full access to the rich set of network policy and be able to create this policy through the Docker client. We’re also looking at ways to apply network policy as close to the container endpoint as possible in order to shorten the data-path and thereby improve network throughput and decrease latency.

Please continue to offer your feedback and comments on how we can continue to improve Windows Containers!

~ Jason Messer