This post has been republished via RSS; it originally appeared at: Networking Blog articles.

Hi Everybody, Dan Cuomo back to talk about testing the Windows Time service for High Accuracy time.

The Windows Time service has grown considerably over our last couple of releases. In “the old days” time was merely an enabler for Active Directory; Kerberos tickets only required systems to be accurate to within 5 minutes. If you ask your friendly neighborhood metrology expert, this is outrageous by today’s standards. Modern applications need time accuracy measured in milliseconds (ms), microseconds (µs), or nanoseconds (ns). There are other applications, but for our areas of interest, we’ll stop at nanoseconds.

Unfortunately, there’s a lot of misinformation about the Windows Time service. Due to our past requirements, it’s often assumed that Windows can’t obtain high accuracy time on a modern operating system. While you may not be able to consistently hit the nanosecond range just yet, you can obtain accuracy on the order of microseconds as demonstrated here and validated by NIST (see the section on Clock Source Stability where we were validated at 41 microseconds!). However, it’s important to use proper testing methodologies; otherwise, you might create a self-fulfilling prophecy.

So here are a few things you SHOULD NOT do when testing the Windows Time service.

Don’t compare systems “Out of the Box”

I’ve heard from more than a few customers that they want to see the native time accuracy performance Windows can obtain. This is a bad idea for a few reasons.

First, Microsoft has a lot of customers. They range from our high-performance Azure Data Centers, to high accuracy customers in the Intelligent Edge, to your mom and dad’s computer they just bought at Best Buy.

Our time service must work in all of these cases. You can imagine that a home computer doesn’t (and shouldn’t) synchronize nearly as often as one of the aforementioned high accuracy systems. Doing so would waste system resources such as CPU time and, more importantly, power, considering these devices are mobile and often low powered.

Long story short, Windows is optimized to make the most common scenarios work as well as possible. “Common” in this case means the hundreds of millions of everyday users with basic network connectivity to the internet.

Secondly, the typical Out of the Box comparison attempts to compare Linux to Windows. The problem is that the tester downloaded and configured Linux packages (not updates) to better synchronize that system because “that’s how Linux does it.” Did you also download our software timestamping capability from the PowerShell gallery (Install-Module SoftwareTimestamping)?

Needless to say, this is not an apples-to-apples comparison and if you truly want to understand what the platforms can do natively, you need to only configure what comes with a specific operating system.

Finally, why? What other system in your datacenter do you not tweak or optimize?

If you run Active Directory, you set up your site links, change replication intervals, or configure group policies to meet your needs. If you’re running Hyper-V you upgrade drivers, enable advanced features like RDMA and VMMQ, and tweak live migration settings to squeeze out the best possible performance and reliability. So, if you’re serious about high-accuracy time, why not put your best foot forward?

To reach high accuracy (sub 1-second or sub 1-millisecond), you should modify your configuration using our high accuracy settings.

Don’t use different tools

I recently attended the 2019 WSTS conference in San Jose, CA – and I’m American. Everyone at the conference spoke English; however, it wasn’t everyone’s first language (in fact our friends from the United Kingdom would argue it wasn’t my primary language either). As a result, there were different interpretations of the same information and colloquialisms that were “lost in translation.”

Each tool is written by a developer and that tool compares the time it gets from an operating system with a reference clock. As a simple example, how long does that tool take to query the time on the system? This is up to the tool and APIs implemented and while this may seem insignificant, it’s crucial if you’re counting accuracy in the microseconds.
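To get a feel for how much the act of reading the clock can cost, here is a minimal sketch in Python. It is only an illustration and not how any particular time tool works: the function name `clock_read_overhead_ns` is made up for this example, and the figure it reports also includes the interpreter's loop overhead, so treat it as a rough upper bound for this one API on this one machine.

```python
import time


def clock_read_overhead_ns(iterations: int = 10_000) -> float:
    """Average cost in nanoseconds of one wall-clock read via time.time_ns().

    The result includes Python loop overhead, so it is an upper bound
    for this API in this process, not a property of the operating system.
    """
    start = time.perf_counter_ns()  # monotonic, high-resolution timer
    for _ in range(iterations):
        time.time_ns()  # the wall-clock query being measured
    elapsed = time.perf_counter_ns() - start
    return elapsed / iterations


if __name__ == "__main__":
    print(f"~{clock_read_overhead_ns():.0f} ns per clock read")
```

A different tool, language, or API will report a different number on the same machine, which is exactly why the comparison only holds when the tooling is identical.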

If you want to compare one thing to another, you need to use the same tooling and APIs to eliminate the variability in the data; the time service is no different. If you use w32tm.exe to assess the accuracy of Windows, you should use w32tm.exe to assess the accuracy of other systems. If you have a 3rd-party tool, then use that tool across Windows and any other system you’re testing.

This problem is exacerbated by the next problem…

Don’t test from the system you’re testing

“When you're good at something, you'll tell everyone. When you're great at something, they'll tell you.” – NFL Hall-of-Famer, Walter Payton

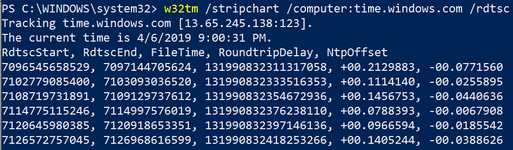

As previously mentioned, Windows has an inbox component called w32tm.exe. You can use this tool with the /stripchart parameter to query the clock on a remote machine. If this returns a positive value, the remote clock is ahead of your clock by the specified offset. If it returns a negative value, your system is ahead of the remote (reference) clock.
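If you capture the stripchart output to a file, a small script can apply that sign convention for you. The sketch below is written in Python and assumes the output was produced with the /dataonly switch and that each sample line looks like `10:15:00, +00.0006500s`; check that against your own capture, since the exact line shape is an assumption here. The helper names `parse_stripchart` and `describe_offset` are invented for this example.

```python
import re

# Assumed shape of one sample line from:
#   w32tm /stripchart /computer:<target> /dataonly
# e.g. "10:15:00, +00.0006500s". Header and error lines do not match.
_SAMPLE_LINE = re.compile(r"^\s*(\d{1,2}:\d{2}:\d{2}),\s*([+-]\d+\.\d+)s\s*$")


def parse_stripchart(text):
    """Return a list of (local_time_string, offset_seconds) tuples."""
    rows = []
    for line in text.splitlines():
        match = _SAMPLE_LINE.match(line)
        if match:
            rows.append((match.group(1), float(match.group(2))))
    return rows


def describe_offset(offset_s):
    """Positive offset: remote clock is ahead. Negative: local clock is ahead."""
    microseconds = abs(offset_s) * 1e6
    if offset_s > 0:
        return f"remote clock is ahead of the local clock by {microseconds:.1f} us"
    if offset_s < 0:
        return f"local clock is ahead of the remote clock by {microseconds:.1f} us"
    return "clocks agree to the printed resolution"
```

Lines that do not match the assumed shape (the header, or a sample that reported an error) are simply skipped.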

Because of the convenience of using the inbox tool, I’ve often seen customers set up a test that looks a lot like this:

They run w32tm.exe from the computer they’re comparing against the reference clock and conclude that their system’s offset is 650 microseconds.

Satisfied, you declare success. This seems all well and good until one of your snarky co-workers walks in sipping their morning cup o’ Joe and says, “But if the system knows its offset from the reference clock, why doesn’t it just correct the time?”

As you begin to eloquently explain to your co-worker why they don’t understand, your oversight dawns on you and you realize you’re going to need a double facepalm.

The very problem that causes your system to be offset from the reference clock is affecting the measurements you’re taking from the client. Put another way, you’re asking the system to self-verify its accuracy against the reference clock. That’s like telling the dentist, “trust me, I don’t have any cavities.” If that dentist doesn’t poke around your mouth for a bit, you may have more than one issue…

There’s a better way

From the numerous strata, to network hops, to operating system network stack latency, there’s a considerable amount of noise in the measurements. There are some good testing tools and methods available for assessing time accuracy, but I can assure you most are not free – trust me, I’ve looked (I’m also open to suggestions in the comments).

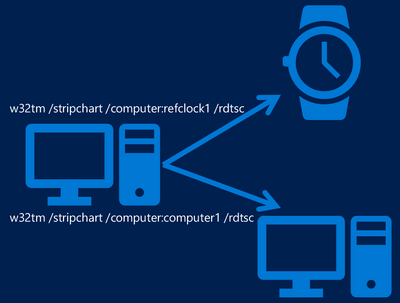

It would be better if you had another system verify your accuracy as compared to the reference clock in a way that could take into account the asymmetry in the network. The picture shows a measurement system that simultaneously takes stripcharts of the reference clock and the system under test. In this scenario, you would output each stripchart to its own CSV file and import both into Excel to compare them.

Note: It’s a little more complicated than that, but that’s the gist.

This approach has numerous benefits:

- By putting a measurement system in between the reference clock and the system under test, you get an external opinion about your system's accuracy.

- This enables you to use the same tooling from the measurement system to measure different operating systems. w32tm /stripchart only requires that the node respond to NTP requests.

- If you place your observation system equidistant from the reference clock and the system under test, with enough samples, you can statistically minimize (not eliminate) the effect of network asymmetry.
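If you would rather script the comparison than do it in a spreadsheet, the core idea fits in a few lines of Python. This is a sketch under assumptions: both stripcharts were taken from the measurement system, sample *i* in one was taken at roughly the same instant as sample *i* in the other, and offsets are in seconds with a positive value meaning the remote clock is ahead. The function name `sut_minus_reference` is made up for this example.

```python
from statistics import mean, median


def sut_minus_reference(ref_offsets, sut_offsets):
    """Offset of the system under test relative to the reference clock.

    ref_offsets[i] = reference clock - measurement system clock
    sut_offsets[i] = system under test - measurement system clock
    Subtracting the two cancels the measurement system's own clock error,
    but not any difference in network asymmetry between the two paths.
    """
    if len(ref_offsets) != len(sut_offsets):
        raise ValueError("both stripcharts need the same number of samples")
    return [sut - ref for ref, sut in zip(ref_offsets, sut_offsets)]


if __name__ == "__main__":
    ref = [0.0010, 0.0012, 0.0011]  # made-up values, in seconds
    sut = [0.0016, 0.0019, 0.0017]
    diffs = sut_minus_reference(ref, sut)
    print(f"mean {mean(diffs) * 1e6:.0f} us, median {median(diffs) * 1e6:.0f} us")
```

The subtraction is the reason the measurement system's own clock does not need to be perfect. What it cannot remove is a difference in path asymmetry, which is why placing the measurement system equidistant from both machines matters.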

While this is certainly not foolproof, this is a much-improved method of testing that begins to whittle down the variability affecting measurements.

Summary: Listen to Walter; get a second opinion

Don’t use 2012 R2 or Windows 8.1 (or below)

When Windows Server 2012 R2 and the corresponding clients were released, the only in-market time-dependent scenario we had was Active Directory. As previously mentioned, this required only sub-5 minutes of accuracy. If you’re interested in testing Windows for time accuracy, you need to upgrade to a modern operating system.

*sigh* I fully recognize that many customers are just not able to upgrade their systems to the latest and greatest operating system. There are many reasons why customers may choose not to upgrade, but ultimately, we just didn’t bake in all the high accuracy goodness that allows Windows to reach sub-millisecond accuracy into 2012 R2 (or Windows 8.1) and below.

If you want to see what Windows can do in time accuracy, you need to use at least Windows 10 version 1607 or Windows Server 2016. The best experience will be in Windows 10 version 1809 and Windows Server 2019 (or whatever the latest release is when you read this). Our high accuracy supportability guidelines outline all of this for you.

Don’t test “a little”

I’m thinking of a number between 1 and…

If you want to measure something, it’s important to obtain a statistically significant sample size. Time accuracy is no different. As you’re aware, there is a variable amount of latency and asymmetry as packets move throughout your network. This changes constantly and can be affected by a number of network related aspects like Head-of-Line blocking, prioritization of traffic (e.g. quality of service), and the sheer number of packets moving through some switches and not others.
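To see why asymmetry matters so much, it helps to look at the standard NTP on-wire calculation (RFC 5905), which assumes the request and the reply take equally long. The Python sketch below simulates a single exchange; `simulate_exchange` and its parameters are hypothetical helpers for illustration, and the server is assumed to reply instantly. Whether a given tool computes its offset in exactly this way is also an assumption.

```python
def ntp_offset_and_delay(t1, t2, t3, t4):
    """Standard NTP on-wire calculation.

    t1: request sent      (client clock)
    t2: request received  (server clock)
    t3: reply sent        (server clock)
    t4: reply received    (client clock)
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2
    delay = (t4 - t1) - (t3 - t2)
    return offset, delay


def simulate_exchange(true_offset, forward_latency, return_latency):
    """One exchange where the server clock is true_offset ahead of the client."""
    t1 = 0.0
    t2 = t1 + forward_latency + true_offset  # arrival, read on the server clock
    t3 = t2                                  # assumption: instant server reply
    t4 = (t3 - true_offset) + return_latency  # arrival, read on the client clock
    return ntp_offset_and_delay(t1, t2, t3, t4)


if __name__ == "__main__":
    # Clocks are perfectly aligned, but the return path is 2 ms slower.
    offset, delay = simulate_exchange(0.0, 0.001, 0.003)
    print(f"measured offset {offset * 1e3:+.1f} ms, delay {delay * 1e3:.1f} ms")
```

With perfectly aligned clocks, a 1 ms forward path and a 3 ms return path produce a measured offset of half the difference, about -1 ms. A single sample cannot tell that apart from a real clock error, which is the case for collecting many of them.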

As a result, we’d recommend using a large sample size. In a perfect world, you should take samples over a couple of days at least. Imagine you start a measurement at 8 AM and a surge of network traffic from logins and network profiles begins at 9 AM, or the backup job kicks off at 3 AM Sunday night, along with a maintenance window that live migrates a bunch of machines.

…needless to say, there are many irregularities that you know about and some you probably don’t. To get the best results, you should take samples over a couple of weeks to smooth out irregularities in network traffic.
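One way to make the sample-size point concrete is to summarize the same statistics over a short capture and a long one. The Python sketch below is illustrative only: the `summarize` helper and the choice of a nearest-rank 99th percentile are assumptions for this example, not a prescribed methodology, and the offsets are made-up values in seconds.

```python
from statistics import mean, pstdev


def summarize(offsets_s):
    """Basic statistics for a list of clock offsets in seconds."""
    if not offsets_s:
        raise ValueError("need at least one sample")
    abs_sorted = sorted(abs(x) for x in offsets_s)
    n = len(abs_sorted)
    rank = (99 * n + 99) // 100  # nearest-rank 99th percentile, integer ceil
    return {
        "samples": n,
        "mean": mean(offsets_s),
        "stdev": pstdev(offsets_s),
        "p99_abs": abs_sorted[rank - 1],
        "max_abs": abs_sorted[-1],
    }


if __name__ == "__main__":
    short_run = [0.0001] * 10                     # a quiet ten samples
    long_run = [0.0001] * 98 + [0.005, -0.004]    # same system, two busy moments
    print(summarize(short_run)["max_abs"])
    print(summarize(long_run)["max_abs"])
```

The short run looks excellent only because it never overlapped with the backup job or the login surge. The longer run reveals the worst case that your workload will actually experience.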

Summary: “Do or do not. There is no try.” - Yoda

Test the whole scenario

“How long is forever? Sometimes, just one second” – Lewis Carroll, Alice in Wonderland

While rare, leap seconds are a fact of life. If your business requires accuracy below 1-second you MUST handle leap seconds and handle them properly.

As discussed in some detail here, leap second smearing (spreading the additional second across the entire day) is not acceptable for regulated customers like the financial services industry for two major reasons:

- Leap second smearing is not traceable – Traceability (proof of your time accuracy back to a national reference timescale) is not possible according to the brainiacs that run the timing laboratories at NIST, NPL, etc.

- You’ve blown your accuracy target – Smearing works by carving up a second into smaller units and inserting those smaller units into the time scale throughout the day which means that by noon (12 PM), you’re approximately a ½ second offset from UTC.
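The arithmetic behind that second point is easy to check. The Python sketch below assumes a linear smear that starts at midnight and spreads one inserted second evenly across the following 24 hours; real smearing schemes differ in window length and shape, so the function `smear_offset_seconds` and its defaults are illustrative assumptions only.

```python
SECONDS_PER_DAY = 86_400


def smear_offset_seconds(seconds_since_smear_start, smear_window_s=SECONDS_PER_DAY):
    """Offset from UTC accumulated by a linear smear of one leap second.

    Assumes the smear spreads exactly one second evenly over smear_window_s.
    Times before the window clamp to 0 and times after it clamp to 1 second.
    """
    fraction = seconds_since_smear_start / smear_window_s
    fraction = min(max(fraction, 0.0), 1.0)
    return fraction * 1.0  # one leap second in total


if __name__ == "__main__":
    noon = 12 * 3600
    print(smear_offset_seconds(noon))
```

Under those assumptions the offset at noon is half a second, far outside a sub-millisecond (or even sub-second) accuracy target.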

If you need to keep time through a leap second, read this next. We also have some validation guides for you.

Summary: If you need to keep accurate time through leap seconds, make sure you test them.

Don’t test things you don’t care about

Some anecdotal information may be interesting but if you care about accuracy, then follow the recommendations in this article and test accuracy. Be careful not to put too much weight into things you don’t really care about (if you do care about things listed below, then as we recommended in the last section, have at it…). Here are a couple of examples of “rabbit holes” I’ve seen people spend far too much time on:

Startup convergence: How quickly a system becomes accurate is usually not too important for servers (I’m not aware of any client scenarios that are affected by this). This can be nice anecdotal information, but it should not be a primary metric you capture if your goal is to understand accuracy and stability. You certainly shouldn’t judge a system’s overall ability by how quickly it became accurate.

That said, it’s certainly a pain to have to wait around for a system to become accurate prior to enabling a workload on them. While this could be automated, it’s certainly not ideal.

The sweet spot is to make sure that you measure long enough to cover the period that actually matters to your workload. If your workload has a time dependency, is not redundantly available, and must be running within a few minutes of a reboot, then make sure you understand convergence. However, most modern workloads are built with availability in mind, which likely means that they can wait a bit before taking on the workload.
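If convergence does matter for your workload, one simple way to put a number on it is to find the point after which every later sample stays inside your accuracy target. The Python sketch below is one possible definition and not an official metric; the name `convergence_time` and the sample values are made up for illustration.

```python
def convergence_time(samples, threshold_s):
    """Elapsed time after which all remaining offsets stay within the threshold.

    samples: list of (elapsed_seconds_since_boot, offset_seconds), in time order.
    Returns the elapsed time of the first sample in the final in-threshold run,
    or None if the capture ends while still outside the threshold.
    """
    last_bad = -1
    for index, (_, offset) in enumerate(samples):
        if abs(offset) > threshold_s:
            last_bad = index
    if last_bad == len(samples) - 1:
        return None  # never settled (or there were no samples at all)
    return samples[last_bad + 1][0]


if __name__ == "__main__":
    capture = [(0, 0.5), (60, 0.02), (120, 0.0008),
               (180, 0.002), (240, 0.0006), (300, 0.0004)]
    print(convergence_time(capture, threshold_s=0.001))
```

Note that the sample at 120 seconds is already inside the 1 ms target, but the system drifts back out at 180 seconds, so this definition reports 240 seconds. Counting the first in-target sample instead would flatter the result.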

Bare-metal vs Virtual Machines

This one might be obvious, but your tests should reflect the actual profile of your target workload, which is to say, that if you’re using virtual machines, test virtual machines. If your systems are bare-metal, test bare-metal.

With virtual machines, receive traffic processing is designed to optimize host CPU performance, so packets may see a bit more variability in processing time. This affects the observed time accuracy at a granularity of microseconds.

Profiling can go a bit deeper than just bare-metal vs. virtual machines. Other things you might want to profile, as they can vary your time accuracy, are the network adapter driver, firmware, and settings (e.g. Interrupt Moderation), and OEM hardware (Dell vs HPE vs Lenovo vs…). Your mileage may vary, so test your scenarios!

Live Migration

Recently, I’ve heard an influx of comments about live migration. For most of us, live migration is a given; a basic function of a modern-day datacenter.

The challenge with time accuracy is that virtual machines typically get their time from their host. In turn, a virtual machine’s accuracy is reflective of its host. If Host A and Host B are not closely synchronized, then the virtual machine’s time will be offset when it reaches the new system. In addition, the virtual machine doesn’t know how long it was in transit to the new host. It has no point of reference for the time it was off. It only knows what the destination tells it when it wakes up.

However, not everyone actually cares about this. First, there’s a risk/reward trade-off with live migration that some customers and applications just don’t tolerate. I’ve even seen some customers require change requests to be filed before moving a virtual machine to a new host (it was exhausting…but those VMs were vital to their business goals).

Second, as previously mentioned, many customers with high accuracy requirements are using bare-metal systems but for simplicity are testing their accuracy with virtual machines.

The point is that if your systems don’t migrate, then you don’t need to worry about the accuracy after a live migration. If they do, then make sure you test those scenarios.

Summary

There are a lot of things to consider when testing something as fine-grained as time accuracy. This is an area that most of us just take for granted, where a few seconds here or there doesn’t affect our workload. If, however, your workload is time-sensitive, make sure you properly scope out the scenarios and perform as high-fidelity tests as possible.

Thanks for reading,

Dan Cuomo