This post has been republished via RSS; it originally appeared at: Networking Blog articles.

Hi folks,

Dan Cuomo back for our next installment in this blog series on synthetic accelerations. This time we’ll cover Windows Server 2012 R2. If you haven’t already read the first post on Server 2012, I’d recommend reviewing that before proceeding here. Following this post, we’ll tackle Windows Server 2016, and finally Windows Server 2019.

Background

Windows Server 2012 R2 added a few improvements to VMQ, including Dynamic VMQ, which allows the processor that handles network traffic destined for a virtual machine to be reassigned based on heuristics such as CPU load.

However, the most important feature introduced in 2012 R2 was vRSS, which is critical to understand as we move on to later operating systems like Windows Server 2016 and 2019. As you’ll see in this post, vRSS is the basis for how we exceed the bandwidth of a single CPU.

As such, we’ll focus this article on vRSS, then cover Dynamic VMQ so you can understand the journey when we complete our series with Windows Server 2016 and 2019.

Virtual Receive Side Scaling (vRSS)

Virtual Receive Side Scaling (vRSS) was introduced in Windows Server 2012 R2. vRSS improves performance over VMQ alone by taking on new responsibilities, both in the guest and on the host.

In the Guest

Virtual CPUs fill up just like any other CPU and can become a bottleneck just like CPUs on the host. If the virtual machine has multiple virtual CPUs, it can alleviate this chokepoint by splitting the processing for the received network traffic across each virtual CPU in the guest.

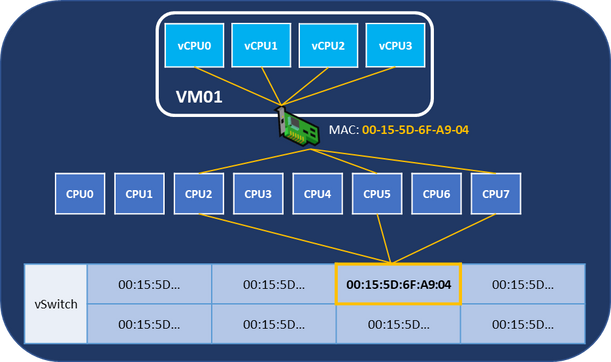

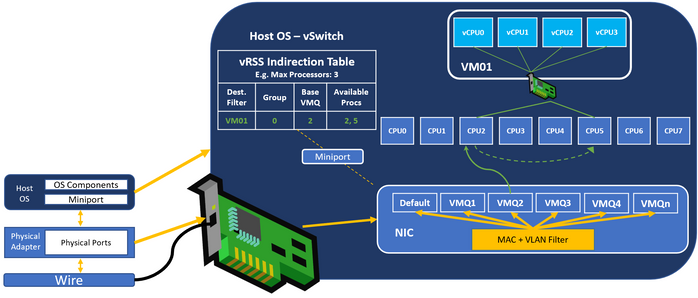

If you want to visualize this architecturally, a virtual NIC attaches to a “port” on the Hyper-V Virtual Switch (vSwitch). This is similar to a physical port on a physical switch, except that it’s all virtual. In the picture below, you can see that the vSwitch operates at layer 2 (Ethernet). If you remember from our first article, legacy VMQ works similarly, using a MAC + VLAN filter to separate traffic into different VMQs.
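To make the filter idea concrete, here’s a minimal Python sketch of MAC + VLAN classification: frames whose destination MAC and VLAN match a programmed filter go to that vmNIC’s VMQ, and everything else falls into the shared default queue. The `VmqFilterTable` class, queue numbers, and addresses are hypothetical; in a real system this matching happens in NIC hardware programmed by the driver, not in code like this.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

DEFAULT_QUEUE = 0  # hypothetical ID for the shared default queue


def normalize_mac(mac: str) -> str:
    # Treat "00-15-5D-7A-35-0C" and "00:15:5d:7a:35:0c" as the same address.
    return mac.replace("-", ":").lower()


@dataclass
class VmqFilterTable:
    """Hypothetical model of the MAC + VLAN filters that steer frames into VMQs."""
    filters: Dict[Tuple[str, Optional[int]], int] = field(default_factory=dict)

    def add_filter(self, mac: str, vlan: Optional[int], queue_id: int) -> None:
        self.filters[(normalize_mac(mac), vlan)] = queue_id

    def classify(self, dst_mac: str, vlan: Optional[int]) -> int:
        # A frame that matches no filter lands in the shared default queue.
        return self.filters.get((normalize_mac(dst_mac), vlan), DEFAULT_QUEUE)


table = VmqFilterTable()
table.add_filter("00-15-5D-7A-35-0C", vlan=10, queue_id=2)
table.add_filter("00-15-5D-01-02-03", vlan=10, queue_id=3)

print(table.classify("00:15:5d:7a:35:0c", 10))  # 2
print(table.classify("00:15:5d:7a:35:0c", 20))  # 0: VLAN doesn't match, so default queue
print(table.classify("00:15:5d:aa:bb:cc", 10))  # 0: unknown MAC, so default queue
```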

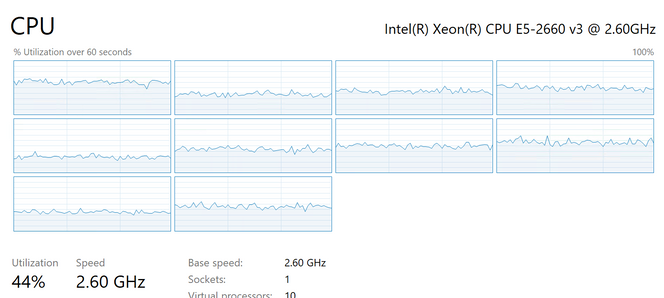

Once the traffic reaches the vmNIC, it needs to traverse the network stack and be processed by the guest’s vCPUs. Thanks to vRSS, you’ll see the network processing distributed across the vCPUs in the guest’s Task Manager, similar to this:

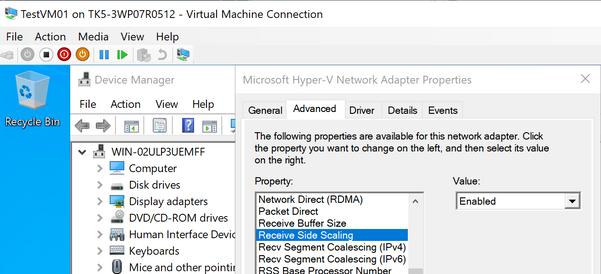

This works by using RSS inside the guest, hence the intuitive name vRSS. You can enable or disable it by modifying the Receive Side Scaling property on the vNIC inside the guest.
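Here’s a rough Python sketch of what RSS does with each received flow. It computes a Toeplitz hash over the TCP/IPv4 4-tuple and uses the low-order bits of the hash to pick an entry in an indirection table that maps to vCPUs, so every packet of a flow lands on the same vCPU while different flows spread out. The key is the widely published sample RSS key, and the 128-entry table with a round-robin fill is an assumption for illustration; the guest’s actual key and table are chosen by the OS and driver.

```python
import ipaddress
import struct
from collections import Counter
from typing import List

# Widely published 40-byte sample RSS key; a real NIC or OS may use a different key.
RSS_KEY = bytes([
    0x6D, 0x5A, 0x56, 0xDA, 0x25, 0x5B, 0x0E, 0xC2,
    0x41, 0x67, 0x25, 0x3D, 0x43, 0xA3, 0x8F, 0xB0,
    0xD0, 0xCA, 0x2B, 0xCB, 0xAE, 0x7B, 0x30, 0xB4,
    0x77, 0xCB, 0x2D, 0xA3, 0x80, 0x30, 0xF2, 0x0C,
    0x6A, 0x42, 0xB7, 0x3B, 0xBE, 0xAC, 0x01, 0xFA,
])


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """32-bit Toeplitz hash: for every set input bit, XOR in the 32-bit key window starting at that bit."""
    key_bits = len(key) * 8
    if len(data) * 8 + 31 > key_bits:
        raise ValueError("key is too short for this input")
    key_int = int.from_bytes(key, "big")
    result = 0
    for byte_index, byte in enumerate(data):
        for bit in range(8):
            if byte & (0x80 >> bit):
                offset = byte_index * 8 + bit
                result ^= (key_int >> (key_bits - 32 - offset)) & 0xFFFFFFFF
    return result


def tcp4_hash(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> int:
    # Hash input: source address, destination address, source port, destination port (network byte order).
    data = (ipaddress.IPv4Address(src_ip).packed + ipaddress.IPv4Address(dst_ip).packed
            + struct.pack("!HH", src_port, dst_port))
    return toeplitz_hash(RSS_KEY, data)


def build_indirection_table(vcpus: List[int], size: int = 128) -> List[int]:
    # Spread the guest's vCPUs round-robin across the table (size must be a power of two).
    return [vcpus[i % len(vcpus)] for i in range(size)]


def vcpu_for_flow(indirection_table: List[int], flow_hash: int) -> int:
    # The low-order bits of the hash select a table entry.
    return indirection_table[flow_hash & (len(indirection_table) - 1)]


guest_table = build_indirection_table([0, 1, 2, 3])
flows = [("10.0.0.5", "10.0.0.10", port, 5001) for port in range(49152, 49252)]
print(Counter(vcpu_for_flow(guest_table, tcp4_hash(*f)) for f in flows))
```

Because the hash depends only on the flow’s addresses and ports, a given TCP connection always maps to the same vCPU, which keeps its packets in order.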

On the Host

But vRSS ain’t no one-trick pony! It has responsibilities on the host as well. vRSS’s core responsibilities there are:

- Creating the mapping of VMQs to Processors (known as the indirection table)

- Packet distribution onto processors

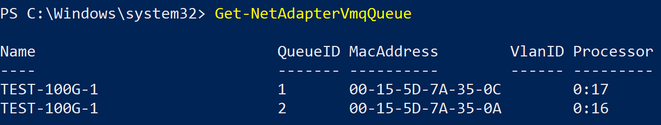

When VMQ interrupts a processor, it always interrupts the “base processor” for a specific VMQ. In the example below, the VM with the MAC Address ending in 7A-35-0C always interrupts processor 17.

Next, we use RSS technology to spread the flows across other available processors in the processor array. While this is a bit of work for the system, the benefit is that the throughput can surpass the bandwidth of a single VMQ. Remember, the base processor must always receive the packets first, but the heavy lifting is performed by other available processors.
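The split between “who gets interrupted” and “who does the work” can be sketched like this. It’s a simplified Python model, not the vSwitch’s actual code: every packet for the VMQ interrupts the base processor first (17, matching the example above), and a per-flow hash then picks which processor in the table processes it. `build_host_table`, `steer`, the 16–23 processor array, and the use of `zlib.crc32` in place of the real RSS hash are all assumptions made for illustration.

```python
import zlib
from collections import Counter
from typing import List, Tuple

Flow = Tuple[str, str, int, int]  # (source IP, destination IP, source port, destination port)


def build_host_table(base_processor: int, processor_array: List[int], max_processors: int) -> List[int]:
    """Hypothetical layout: the base processor plus other processors from the RSS
    processor array, never more than max_processors in total."""
    helpers = [p for p in processor_array if p != base_processor]
    return [base_processor] + helpers[: max_processors - 1]


def steer(flow: Flow, host_table: List[int]) -> int:
    # zlib.crc32 stands in for the real RSS (Toeplitz) hash; it keeps each flow on one processor.
    return host_table[zlib.crc32(repr(flow).encode()) % len(host_table)]


def receive(packets: List[Flow], base_processor: int, host_table: List[int]):
    interrupts: Counter = Counter()
    processing: Counter = Counter()
    for flow in packets:
        interrupts[base_processor] += 1           # the VMQ always interrupts its base processor first
        processing[steer(flow, host_table)] += 1  # the packet's real work may happen elsewhere
    return interrupts, processing


host_table = build_host_table(17, list(range(16, 24)), max_processors=4)
packets = [("10.0.0.5", "10.0.0.20", port, 445) for port in range(50000, 50050)] * 10
interrupts, processing = receive(packets, 17, host_table)
print(host_table)        # [17, 16, 18, 19]
print(dict(interrupts))  # {17: 500}
print(processing)
```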

Note: You may recognize that vRSS and VMQ are working together. CPU spreading with vRSS requires VMQ.

Now a VM can receive bandwidth roughly proportional to the number of processors engaged to do its bidding. Typically, the default maximum is 8 processors. Excellent…

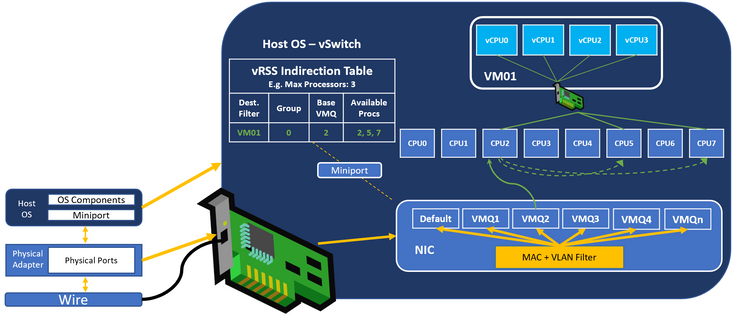

The diagram above shows the packet flow. In it:

- All packets destined for VM01 are received by VMQ2 in the physical NIC

- VMQ2 interrupts CPU2 (the base processor)

- The virtual switch will use CPU2 to process as many packets as it can

- If required to keep up with the inbound workload, the virtual switch will “hand-out” packets to the other processors (in this case CPU5). It will engage no more than the value of MaxProcessors (in this case 3) set in the vRSS indirection table
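The “use the base CPU first, then hand out” behavior in the steps above can be modeled roughly as follows. This is a toy Python model with a made-up per-CPU capacity of 1,000,000 packets/sec (an assumption, not a measured figure); CPU2 and CPU5 come from the diagram, while CPU7 is a hypothetical third entry to reach MaxProcessors = 3. It also ignores the cost the base CPU pays to hand packets out.

```python
from typing import Dict, List, Tuple


def distribute(offered_pps: int, host_table: List[int], cpu_capacity_pps: int) -> Tuple[Dict[int, int], int]:
    """Toy model: the base CPU (host_table[0]) keeps as many packets as it can; the overflow is
    handed to the next processor in the table, and no more than len(host_table) CPUs are engaged."""
    per_cpu: Dict[int, int] = {}
    remaining = offered_pps
    for cpu in host_table:
        if remaining <= 0:
            break  # engage only the processors the load actually requires
        taken = min(remaining, cpu_capacity_pps)
        per_cpu[cpu] = taken
        remaining -= taken
    return per_cpu, remaining  # remaining > 0 means the engaged CPUs can't keep up


table_max3 = [2, 5, 7]  # base CPU2, then CPU5 as in the diagram; CPU7 is hypothetical
print(distribute(800_000, table_max3, 1_000_000))    # ({2: 800000}, 0)
print(distribute(1_500_000, table_max3, 1_000_000))  # ({2: 1000000, 5: 500000}, 0)
print(distribute(3_500_000, table_max3, 1_000_000))  # ({2: 1000000, 5: 1000000, 7: 1000000}, 500000)
```

At light load only the base CPU is touched; the third processor is engaged only once the first two are full, and nothing beyond MaxProcessors is ever used.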

Note: In the picture above, the virtual switch is labeled as the vRSS indirection table; however, this is the same feature, since vRSS is a function of the virtual switch.

The Base CPU

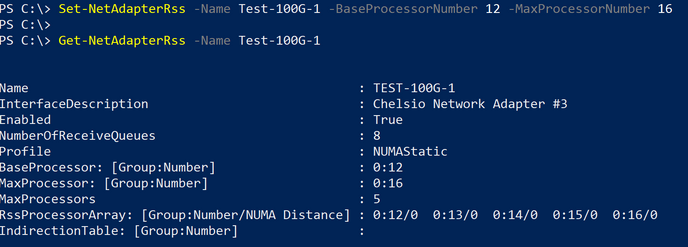

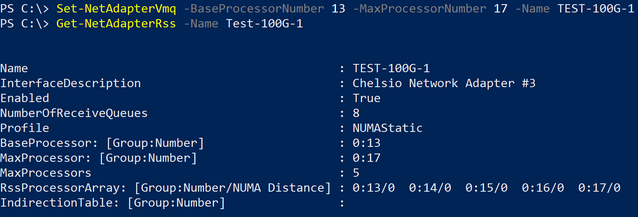

The base CPU is chosen from the available processors in the RSS processor array on the system. In the screenshot below, we constrain the processor array to processors 12 – 16. You can see that the physical adapter (which is configured with a virtual switch) updates its RSSProcessorArray property accordingly.
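Here’s a small Python sketch of the constraint shown in the screenshot: the processor array is the range from BaseProcessorNumber to MaxProcessorNumber, and the base VMQ processor is picked from inside that range. The function names and the least-loaded selection policy are assumptions for illustration; this post doesn’t describe the exact selection heuristic Windows uses.

```python
from typing import Dict, List


def rss_processor_array(base_processor_number: int, max_processor_number: int,
                        logical_processors: int) -> List[int]:
    # Processors an adapter may use: BaseProcessorNumber..MaxProcessorNumber, clipped to what exists.
    highest = min(max_processor_number, logical_processors - 1)
    return list(range(base_processor_number, highest + 1))


def choose_base_processor(processor_array: List[int], load: Dict[int, float]) -> int:
    # Assumed policy for illustration: least-loaded processor in the array, lowest number on ties.
    return min(processor_array, key=lambda p: (load.get(p, 0.0), p))


array = rss_processor_array(12, 16, logical_processors=24)
print(array)  # [12, 13, 14, 15, 16]
print(choose_base_processor(array, {12: 0.6, 13: 0.2, 14: 0.2, 15: 0.9, 16: 0.4}))  # 13
```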

Note: There is no RSS indirection table shown because a virtual switch is attached and TCP/IP is no longer bound to this adapter. Instead, the virtual switch manages the indirection table.

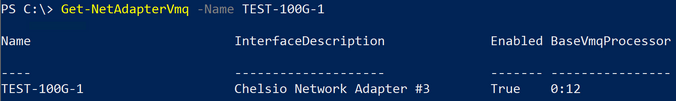

Next, if we look at Get-NetAdapterVMQ, you can see that the BaseVmqProcessor for the adapter has been updated to one in the RSSProcessorArray.

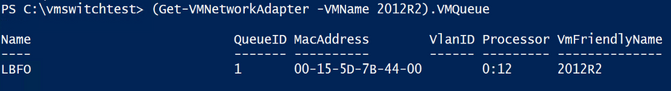

The processors listed for each VM are likewise constrained to 12 – 16, as we set earlier.

Note: In this example, we use one possible command to get the VMQ assigned for a vNIC. You could also use Get-NetAdapterVMQQueue.

Setting the Groundwork for 2016 and Beyond

As you can see, we did not need to use the Set-NetAdapterVMQ cmdlets from the previous blog post (although they would have worked as well); constraining the RSS processor array was enough.

As we move forward to the next article on Windows Server 2016, you’ll begin to understand how integral RSS is to VMQ. In Windows Server 2012, the two technologies were mutually exclusive (per adapter). In Windows Server 2012 R2, RSS isn’t yet the peanut butter to VMQ’s jelly, but you can see a budding friendship forming… In the 2012 R2 days, probably closer to orange mocha frappuccinos…

High and Low Throughput

vRSS aims to engage the fewest processors necessary to process the received traffic. This is for efficiency, as there is a tax to engage and spread across each additional CPU. At low throughput, this may mean that only the base CPU is engaged. In high-throughput environments, however, the number of CPUs that can be engaged is capped at the value of MaxProcessors.

At maximum throughput, the base CPU may be receiving so many packets that it’s only able to process a minimal number of packets itself. Instead, it hands out packets to the others in the processor array.

In the example shown above, this VM will receive throughput equivalent to what approximately 2 – 3 processors can crank out (because MaxProcessors is limited to 3). As mentioned before, this is because most of the base CPU’s work is simply handing packets out to other processors. So despite engaging three processors, you get a bit less than three processors’ worth of throughput.

In fact, you may find that despite setting MaxProcessors to 8, only a few processors are engaged. This is likely because your base processor is pegged and cannot hand out packets to any more processors.
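The effect described above, where the base CPU spends its time handing out packets and that caps how many helpers it can feed, can be captured in a toy Python model. The key assumption (not a documented figure) is that handing out one CPU’s worth of packets costs the base CPU 30% of its own capacity; all capacities are normalized to 1.0 per CPU.

```python
import math
from dataclasses import dataclass


@dataclass
class SpreadEstimate:
    helpers_engaged: int     # CPUs other than the base CPU that receive handed-out packets
    effective_cpus: float    # total packet processing, in "CPUs' worth" of work
    base_local_share: float  # share of the base CPU left to process packets itself


def estimate_spread(max_processors: int, handoff_cost: float) -> SpreadEstimate:
    """Toy model (assumption): each CPU does at most 1.0 unit of packet work, and handing
    one unit of work to a helper costs the base CPU `handoff_cost` units of its own time."""
    if not 0 < handoff_cost < 1:
        raise ValueError("handoff_cost must be between 0 and 1")
    # Hand out as much as the helpers can take, but the base CPU can't spend more than 100% handing out.
    handed_out = min(max_processors - 1, 1.0 / handoff_cost)
    base_local = max(0.0, 1.0 - handoff_cost * handed_out)
    return SpreadEstimate(math.ceil(handed_out), base_local + handed_out, base_local)


print(estimate_spread(3, 0.3))  # 2 helpers; about 2.4 CPUs' worth of work
print(estimate_spread(8, 0.3))  # base CPU saturated: 4 helpers, about 3.3 CPUs' worth
```

With MaxProcessors = 3 the model lands at about 2.4 CPUs’ worth of work, in line with the “2 – 3 processors” above; with MaxProcessors = 8, the base CPU saturates after feeding four helpers, so three of the eight allowed processors sit idle. Multiplying about 3.3 CPUs by a per-core figure of roughly 5 Gbps gives a number in the same ballpark as the ~15 Gbps discussed in this post, but the 0.3 handoff cost was picked for illustration, not measured.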

Note: The defaults are specific to your NIC, however in most cases, MaxProcessors defaults to 8 and was changed in this example only for visualization purposes.

As you can imagine, this is far better than VMQ alone. A single VM could receive approximately 15 Gbps using this mechanism – a nearly 3x improvement! In the next post on Windows Server 2016 we’ll explain how VMMQ enhances this to get even better throughput and lower the CPU cost, improving your system’s density.

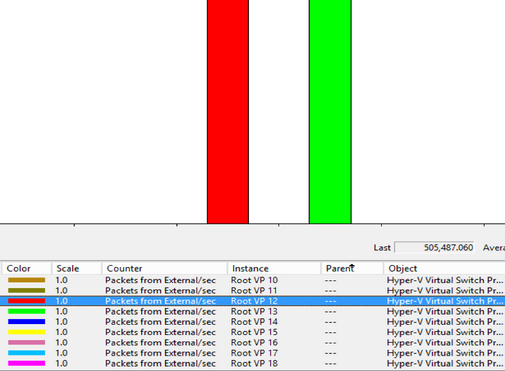

You can see which processors are being engaged for virtual traffic by opening perfmon, changing to the histogram view, and adding the Packets from External/sec counter from the Hyper-V Virtual Switch Processor object. Next, use NTTTCP or CTSTraffic to send traffic from another machine to your VM.

In the picture above, you can see that two processors are engaged: CPU12 (shown in red) and CPU13 (shown in green). Each CPU that is processing packets is represented by a bar, and the height of the bar indicates the number of packets being processed by that specific CPU.

Dynamic VMQ

The next major improvement in 2012 R2 was Dynamic VMQ. To make better use of VMQ and available system resources, Windows Server 2012 R2 allows a VMQ-to-processor assignment to be updated dynamically based on CPU load.

If you refer back to the article on 2012, you can see an obvious challenge: multiple VMs using the same processor can easily starve one another. After all, the maximum throughput of a single CPU core stays at around 5 or 6 Gbps no matter how many VMs share it.

Imagine three VMs, each with its own VMQ but all assigned to the same processor, and that processor can process a total of 6 Gbps. Each VM could potentially receive 2 Gbps if the traffic were distributed evenly.

However, network traffic isn’t that predictable. It’s just as likely that 5 Gbps goes to VM1 and the remaining processing power is split among the other VMs. When the other VMs suddenly need more throughput, it’s not available for them because VM1 is already dominating the core used to process the data.

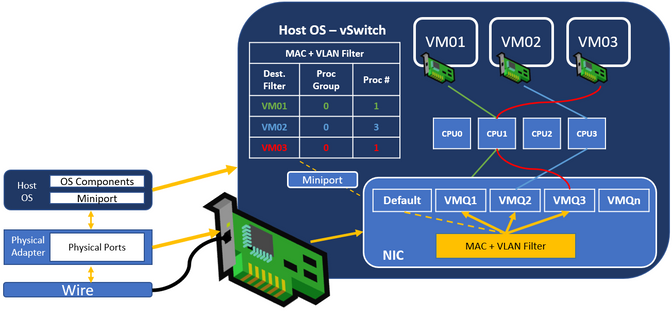

In this picture, VM03 is assigned to the same core as VM01, so VM01 and VM03 compete for that CPU core. Each VM’s performance is limited by the other VMs sharing that processor.

With Dynamic VMQ however, if CPU1 is not able to process the incoming packets, one of the queues can be reassigned to interrupt a different processor if one is able to sustain the workload.

The measurements that determine whether a queue should be reassigned are made in Windows (this is another one of vRSS’s responsibilities). Since the queues can now move, and they exist in the NIC, you should pay special attention to keeping your NIC’s driver and firmware up to date.

Dynamic VMQ is all about enabling your workloads to have consistent performance. If you have a night-owl for a CEO, and he tries to log in at night when the backups are running, you want him to have the same performance as when he works during the day. Otherwise, you might have to work the same hours!
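A Dynamic VMQ-style rebalance can be sketched as: when a processor’s queues demand more than it can process, move a queue to a less-loaded processor that has room. This Python version is a hypothetical heuristic for illustration only; Windows’ actual measurements and thresholds aren’t described in this post. The numbers reuse the 6 Gbps-per-core scenario from above, with VM01 and VM03 sharing CPU1.

```python
from typing import Dict, List


def rebalance(assignment: Dict[str, int], demand_gbps: Dict[str, float],
              processors: List[int], capacity_gbps: float) -> Dict[str, int]:
    """Hypothetical heuristic: while a processor is overloaded, move its smallest queue to the
    least-loaded other processor, but only if that processor can absorb the queue."""
    assignment = dict(assignment)  # don't mutate the caller's mapping

    def load(cpu: int) -> float:
        return sum(demand_gbps[q] for q, c in assignment.items() if c == cpu)

    moved = True
    while moved:
        moved = False
        for cpu in processors:
            if load(cpu) <= capacity_gbps:
                continue
            others = [p for p in processors if p != cpu]
            if not others:
                break
            for queue in sorted((q for q, c in assignment.items() if c == cpu), key=demand_gbps.get):
                target = min(others, key=load)
                if load(target) + demand_gbps[queue] <= capacity_gbps:
                    assignment[queue] = target
                    moved = True
                    break
            if moved:
                break  # loads changed; rescan from the first processor
    return assignment


before = {"VM01": 1, "VM02": 2, "VM03": 1}  # VM01 and VM03 share CPU1
demand = {"VM01": 5.0, "VM02": 2.0, "VM03": 2.0}
after = rebalance(before, demand, processors=[1, 2], capacity_gbps=6.0)
print(after)  # {'VM01': 1, 'VM02': 2, 'VM03': 2}
```

A queue only moves to a processor that stays within capacity afterward, so the loop can’t bounce queues back and forth; if no processor has room, the assignment is left alone.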

That said, there are a couple of problems in this implementation that will be addressed in Windows Server 2019. Most notably, 2012 R2 does not handle bursty workloads very well. If a system suddenly needs maximum throughput, Dynamic VMQ can’t deliver it right away. Instead, you’ll notice that the system ramps up slowly, maxing out at around 15 Gbps. This is because the system reserves only the bare minimum resources required, pre-allocating just the single VMQ when network traffic begins. As demand for throughput increases, the system takes some measurements, vRSS expands to more CPUs, the system takes more measurements, vRSS expands to more CPUs again, and finally maximum throughput is reached.
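The slow ramp-up can be illustrated with a toy Python loop: start with only the base CPU, and after each measurement interval engage one more CPU if demand still exceeds what’s engaged. The 100 ms interval and the demand figures are invented for illustration; they aren’t Windows’ real measurement period or thresholds.

```python
from typing import List, Tuple


def ramp_up(demand_cpus: float, max_processors: int, interval_ms: int = 100) -> List[Tuple[int, int]]:
    """Toy model of reactive scaling (interval_ms is a made-up number): start with only the
    base CPU, and after each measurement interval engage one more CPU if demand still exceeds
    what the engaged CPUs can handle, stopping at max_processors."""
    engaged, t = 1, 0
    timeline = [(t, engaged)]  # (time in ms, CPUs engaged)
    while engaged < max_processors and demand_cpus > engaged:
        t += interval_ms
        engaged += 1
        timeline.append((t, engaged))
    return timeline


print(ramp_up(4.0, max_processors=8))       # [(0, 1), (100, 2), (200, 3), (300, 4)]
print(ramp_up(20.0, max_processors=8)[-1])  # (700, 8): capped, even though demand is higher
```

A sudden burst has to wait through several measure-then-expand rounds before it gets the processors it needs, which is exactly what hurts bursty workloads.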

Implications of the Default Queue

As a refresher, the default queue is where all traffic that doesn’t match a filter lands; it is a shared VMQ.

With vRSS, however, this is less painful because the packets from the default queue can be distributed to other available processors. While VMs that land on this VMQ will not reach the same performance as VMs with a dedicated queue, the gap is less severe, enabling a much higher density of VMs on the same hardware.

Host Virtual NICs

Host virtual NICs cannot leverage vRSS or Dynamic VMQ. This is addressed in later versions; however, if your host virtual NICs need more than a single VMQ can provide, Windows Server 2012 R2 can’t help you.

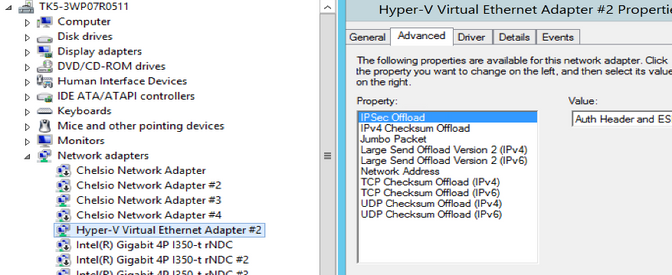

If you look at the properties of a host virtual NIC, there is no RSS capability listed.

Summary of Requirements

We had a similar list in the article on Windows Server 2012. I’ll keep this in each blog so you can see the progression. As you can see in 2012 R2, there were performance and workload stability improvements, however the management experience didn't change much.

- Install latest drivers and firmware – Even more important now that queues are moving!

- By default, the processor array engages CPU0 – Configure the system to avoid CPU0 on non-hyperthreaded systems, and CPU0 and CPU1 on hyperthreaded systems (e.g., BaseProcessorNumber should be 1 or 2 depending on hyperthreading)

- Configure MaxProcessorNumber to set the highest-numbered processor an adapter can use

- Configure MaxProcessors to establish how many processors out of the available list a NIC can spread VMQs across simultaneously

- Test customer workload
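If you script your host configuration, a sanity check along the lines of the list above might look like this hypothetical Python helper. The field names mirror the BaseProcessorNumber, MaxProcessorNumber, and MaxProcessors settings, but the helper itself is an assumption for illustration; it only checks the rules of thumb in this list and doesn’t read or change any adapter settings.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VmqSettings:
    base_processor_number: int  # mirrors BaseProcessorNumber
    max_processor_number: int   # mirrors MaxProcessorNumber
    max_processors: int         # mirrors MaxProcessors


def check_settings(s: VmqSettings, logical_processors: int, hyperthreading: bool) -> List[str]:
    """Hypothetical helper that checks a proposed configuration against the requirements list;
    returns human-readable problems (an empty list means nothing was flagged)."""
    problems = []
    min_base = 2 if hyperthreading else 1  # skip CPU0, plus CPU1 when hyperthreading is on
    if s.base_processor_number < min_base:
        problems.append(f"BaseProcessorNumber should be at least {min_base}")
    if s.max_processor_number < s.base_processor_number:
        problems.append("MaxProcessorNumber is lower than BaseProcessorNumber")
    if s.max_processor_number >= logical_processors:
        problems.append(f"MaxProcessorNumber is above the highest processor ({logical_processors - 1})")
    in_range = min(s.max_processor_number, logical_processors - 1) - s.base_processor_number + 1
    if s.max_processors < 1:
        problems.append("MaxProcessors must be at least 1")
    elif in_range > 0 and s.max_processors > in_range:
        problems.append(f"MaxProcessors ({s.max_processors}) exceeds the {in_range} processors in range")
    return problems


print(check_settings(VmqSettings(2, 15, 8), logical_processors=16, hyperthreading=True))  # []
print(check_settings(VmqSettings(0, 15, 8), logical_processors=16, hyperthreading=True))
# ['BaseProcessorNumber should be at least 2']
```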

Other best practices

All 2012 best practices carry forward, including NOT disabling RSS unless directed by Microsoft Support for troubleshooting. Disabling RSS is not a supported configuration on Windows. Microsoft Support may recommend temporarily disabling RSS to troubleshoot whether there is an issue with the feature, but RSS should not be permanently disabled, as that would leave Windows in an unsupported state.

Summary of Advantages

- Spreading across virtual CPUs (vRSS in the Guest) – The virtual processors have been removed as a bottleneck.

- Spreading across physical CPUs (vRSS on the host) – Additional CPUs can be engaged to improve the performance of an individual virtual NIC by ~3x (approximately 15 Gbps).

- Dynamic Assignment – A queue on an overburdened processor can be moved to a processor with less workload if the hardware (firmware/driver) supports it and a suitable processor is available.

- Default Queue – An overprovisioned system with more VMs than queues can “ease the pain” of a shared default queue by spreading and balancing traffic across multiple CPUs, enabling higher VM density.

Summary of Disadvantages

- One VMQ per virtual NIC – One is the loneliest number, and that goes for VMQs as well. While vRSS eases the pain, scaling up the workload to achieve higher throughputs means a considerable tax in the form of a CPU penalty.

- No Host vRSS – Host virtual NICs cannot take advantage of vRSS spreading on the host

- No default queue management – There is minimal management of the default queue.

- No management of vRSS – There is no management of vRSS

- Bursty workloads cannot be satisfied – No preallocated resources means that the system must measure and react to the workload’s demands slowly

As you can see, vRSS and Dynamic VMQ brought significant enhancements to Hyper-V networking for their time. They allowed virtual machines to nearly triple their receive throughput AND gain some level of throughput consistency by balancing across available processors. In our next article, we’ll discuss the rearchitecture of network cards and how it enabled us to overcome some of the existing shortcomings in 2012 R2.

Thanks for reading,

Dan