This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

PatientHub is an end-to-end healthcare app that uses predictive machine learning (ML) models to provide key insights for both physicians and patients. In a previous PatientHub blog post, we gave an overview of how PatientHub works. In this post, we dive deep into one specific topic: how we developed and deployed a healthcare machine learning model that predicts whether a patient will be readmitted to the hospital because of diabetes.

DISCLAIMER: Please note that this app is intended for research and development use only. The app is not intended for use in clinical diagnosis or clinical decision-making or for any other clinical use and the performance of the app for clinical use has not been established. You bear sole responsibility for any use of this app, including incorporation into any product intended for clinical use.

Dataset

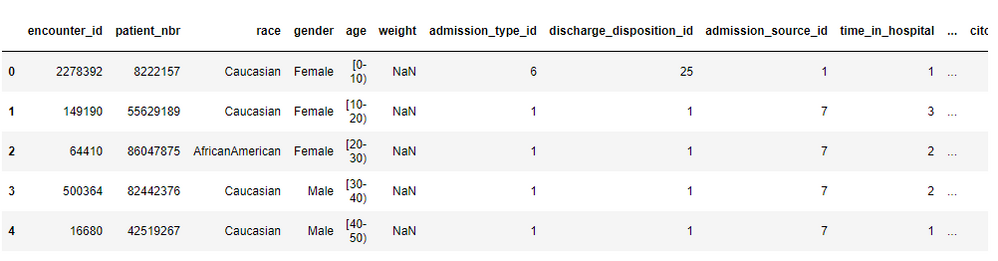

We use a real-world open dataset that covers 10 years (1999-2008) of clinical care at 130 US hospitals and integrated delivery networks. The dataset is anonymized and contains over 100K records with more than 50 features representing patient and hospital outcomes, such as gender, race, age, and the specialty of the admitting physician. Most importantly, it has a column indicating whether the patient was readmitted to the hospital because of diabetes within 30 days of treatment.

Figure 1: Sample dataset used for the PatientHub app

Our goal is to train a machine learning model that predicts whether a patient will be readmitted to the hospital because of diabetes within 30 days. We use this scenario to demonstrate how to develop a machine learning solution for such a use case, and how to understand the way the model makes its predictions.
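Framed this way, the task is binary classification. As a minimal sketch, assuming the readmission column stores the values `<30`, `>30` and `NO` (as in the public release of this dataset), the binary target can be derived as follows. The helper name `to_label` and the sample values are hypothetical, for illustration only.

```python
def to_label(readmitted: str) -> int:
    """Map a raw readmission value to a binary target.

    Assumption: the column uses "<30", ">30" and "NO"; only readmission
    within 30 days counts as the positive class (1).
    """
    value = readmitted.strip()
    if value not in {"<30", ">30", "NO"}:
        raise ValueError(f"unexpected readmission value: {readmitted!r}")
    return 1 if value == "<30" else 0


# Hypothetical raw values for five encounters
raw_values = ["NO", "<30", ">30", "NO", "<30"]
labels = [to_label(v) for v in raw_values]
print(labels)  # [0, 1, 0, 0, 1]

positive_rate = sum(labels) / len(labels)
print(f"positive rate: {positive_rate:.2f}")  # positive rate: 0.40
```

Treating `>30` as the negative class follows the 30-day goal stated above.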

You can download the data from the link. The research paper describing the dataset is located here.

Data Preprocessing

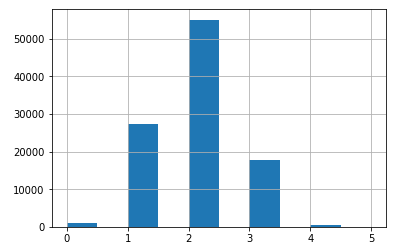

As with most model-building exercises, we first need to make sure the data is accurate and complete. We analyzed missing values across all patient records using the following Python code, which counts the missing values in each record (the resulting histogram is shown below). Most records are missing 1-3 values. Because each record has more than 50 features, a handful of missing values is something most machine learning algorithms can handle, so we don't need to drop any patient records.

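# df_raw is the raw dataset loaded into a pandas DataFrame
# Count the missing values in each patient record (row)
pt_sparsity = df_raw.isnull().sum(axis=1)
# Plot a histogram of the per-record counts (requires matplotlib)
myhist = pt_sparsity.hist()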

Figure 2: Number of records containing missing values (horizontal axis: number of missing values per record; vertical axis: number of records)
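The same per-record count can be sketched with the standard library alone. This sketch assumes missing values are written as `?` or left empty; if the CSV marks them with `?`, passing `na_values='?'` to pandas `read_csv` is what lets `isnull()` in the snippet above catch them. The sample rows and column names below are made up for illustration.

```python
import csv
import io
from collections import Counter

MISSING_MARKERS = {"", "?"}  # assumption: how missing values are encoded

# Hypothetical sample: three patient records with a few missing fields
SAMPLE_CSV = """race,gender,age,weight,payer_code,medical_specialty
Caucasian,Female,[50-60),?,?,Cardiology
?,Male,[70-80),?,MC,?
AfricanAmerican,Female,[60-70),?,HM,InternalMedicine
"""


def missing_per_record(rows):
    """Return the number of missing values in each record (row)."""
    return [sum(1 for v in row.values() if v.strip() in MISSING_MARKERS) for row in rows]


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
pt_sparsity = missing_per_record(rows)
print(pt_sparsity)  # [2, 3, 1]

# Text histogram: how many records have k missing values
histogram = Counter(pt_sparsity)
for k in sorted(histogram):
    print(f"{k} missing: {'#' * histogram[k]}")
```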

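We use standard data preprocessing techniques to clean and transform the dataset. For example, the primary diagnosis column (diag_1) holds an ICD-9 (International Classification of Diseases, Ninth Revision) code that identifies the exact disease. We one-hot encoded the most frequent diagnosis codes with pandas, creating one indicator column per code that is True if the code appears in any of the three diagnosis columns (diag_1, diag_2, diag_3):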

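# diag_counts: frequency of each ICD-9 code, most frequent first (computed earlier)
# diag_thresh: how many of the most frequent codes to encode
for icd9, count in diag_counts.head(diag_thresh).items():
    new_col = 'diag_' + str(icd9)
    # True if the code appears as the primary, secondary or additional diagnosis
    df_raw[new_col] = (df_raw.diag_1 == icd9) | (df_raw.diag_2 == icd9) | (df_raw.diag_3 == icd9)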
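The snippet above relies on `diag_counts` (how often each ICD-9 code occurs) and `diag_thresh` (how many of the most frequent codes to keep), which are computed earlier and not shown. A minimal standard-library sketch of both steps, assuming codes are counted across all three diagnosis columns; the helper names and sample records are hypothetical:

```python
from collections import Counter

DIAG_COLUMNS = ("diag_1", "diag_2", "diag_3")

# Hypothetical records: primary, secondary and additional diagnoses
records = [
    {"diag_1": "250.83", "diag_2": "401", "diag_3": "428"},
    {"diag_1": "428", "diag_2": "250.01", "diag_3": "401"},
    {"diag_1": "414", "diag_2": "428", "diag_3": "250"},
]


def top_diagnoses(rows, k):
    """Count each ICD-9 code across the three columns; keep the k most common."""
    counts = Counter(row[col] for row in rows for col in DIAG_COLUMNS)
    return counts.most_common(k)


def add_indicator_columns(rows, codes):
    """Add a 'diag_<code>' flag that is True if the code appears in any column."""
    for row in rows:
        present = {row[col] for col in DIAG_COLUMNS}
        for code in codes:
            row["diag_" + code] = code in present
    return rows


diag_counts = top_diagnoses(records, k=2)
print(diag_counts)  # [('428', 3), ('401', 2)]
add_indicator_columns(records, [code for code, _ in diag_counts])
print(records[2]["diag_401"], records[2]["diag_428"])  # False True
```

Keeping only the top codes bounds the number of new columns; rarer codes could be grouped into an "other" flag instead.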

Model Training

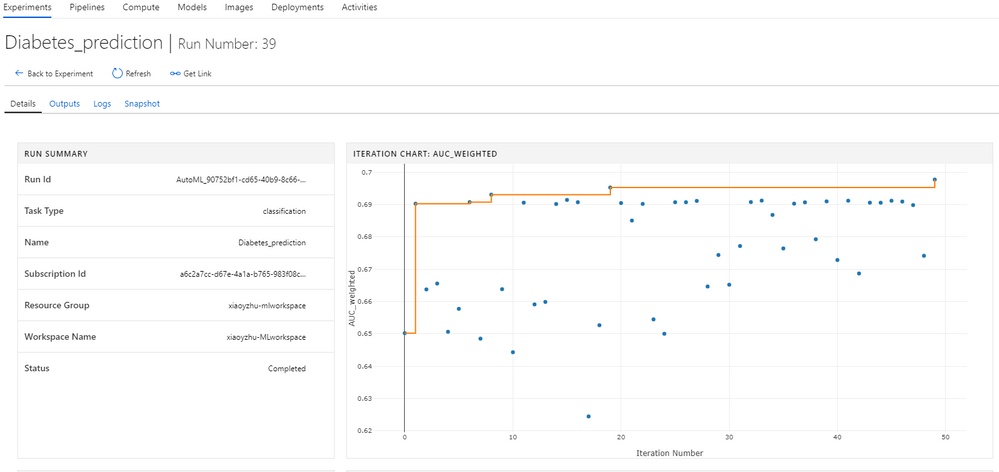

We used the Azure ML service's automated ML (AutoML) capability to train the model. In practice, data scientists usually have to try many different algorithms and hyperparameter settings to find the one that best solves their problem. With AutoML, data scientists define the machine learning goal and constraints and then launch the automated process; AutoML selects the algorithm and tunes its hyperparameters automatically, work that would otherwise be very time-consuming.

Below is the code snippet showing the AutoML configuration for the diabetes readmission prediction model:

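import logging

from azureml.train.automl import AutoMLConfig

# experiment (an azureml.core.Experiment), X_train, y_train_array and
# num_iterations are defined earlier. These parameter names follow an early
# azureml-sdk (v1) release; later releases deprecate X/y in favor of
# training_data/label_column_name, and preprocess in favor of featurization.
automl_config = AutoMLConfig(task='classification',
                             debug_log='automl_errors.log',
                             primary_metric='AUC_weighted',
                             iteration_timeout_minutes=60,
                             iterations=num_iterations,
                             n_cross_validations=3,
                             verbosity=logging.INFO,
                             X=X_train,
                             y=y_train_array,
                             preprocess=False,
                             model_explainability=False,
                             path='./')

local_run = experiment.submit(automl_config, show_output=True)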
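The setting `n_cross_validations = 3` asks AutoML to evaluate each candidate model with 3-fold cross-validation instead of a single hold-out split. To show what that means, here is a minimal sketch of how k-fold splits can be generated. AutoML does this internally, and its actual splitting strategy (for example, shuffling or stratification) may differ from this illustration.

```python
def k_fold_indices(n_samples: int, k: int):
    """Yield (train_idx, valid_idx) pairs for k contiguous folds.

    Every sample lands in exactly one validation fold; fold sizes
    differ by at most one when n_samples is not divisible by k.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        valid = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, valid
        start += size


# Each candidate is trained k times; its score is the mean over the k validation folds
folds = list(k_fold_indices(n_samples=7, k=3))
for train, valid in folds:
    print("train:", train, "valid:", valid)
```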

The primary metric is weighted area under the ROC curve (AUC_weighted), which is widely used in medical settings. Readmissions are relatively rare, so a model could reach high accuracy simply by predicting that no patient is readmitted; AUC instead measures how well the model ranks readmitted patients above non-readmitted ones across all decision thresholds, balancing sensitivity (recall) against specificity. Under the hood, AutoML tries different algorithms and selects the one with the highest AUC score, and it also ensembles several models to improve performance. Figure 3 shows the model performance history: the best model achieves a weighted AUC of around 0.7 (higher is better; 1.0 is a perfect score and 0.5 is no better than random guessing).
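To make the metric concrete: ROC AUC is the probability that a randomly chosen positive example is scored higher than a randomly chosen negative one. Azure ML describes `AUC_weighted` as the per-class AUC averaged with weights equal to each class's number of true instances. The sketch below is our own illustration of both definitions (not AutoML's implementation), using pairwise comparisons, which are quadratic in time and fine only for small examples. It also shows why accuracy alone is misleading on imbalanced data.

```python
def auc(labels, scores):
    """ROC AUC via pairwise comparison: P(score_pos > score_neg), ties count 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def auc_weighted_binary(labels, p_positive):
    """One-vs-rest AUC per class, averaged with weights = class support."""
    auc_pos = auc(labels, p_positive)
    # Class 0 is scored with 1 - p and treated as the "positive" class
    auc_neg = auc([1 - y for y in labels], [1 - p for p in p_positive])
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    return (n_pos * auc_pos + n_neg * auc_neg) / len(labels)


y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]
print(auc(y_true, y_score))                  # 0.75
print(auc_weighted_binary(y_true, y_score))  # 0.75

# Imbalanced toy data: always predicting "not readmitted" looks accurate
y_rare = [0] * 9 + [1]
constant = [0.0] * 10
accuracy = sum(1 for y in y_rare if y == 0) / len(y_rare)
print(accuracy)               # 0.9
print(auc(y_rare, constant))  # 0.5
```

For a binary problem the two per-class AUCs coincide, so the weighted AUC equals the ordinary AUC; the weighting matters for multi-class tasks.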

Figure 3: AutoML training process

Model Deployment

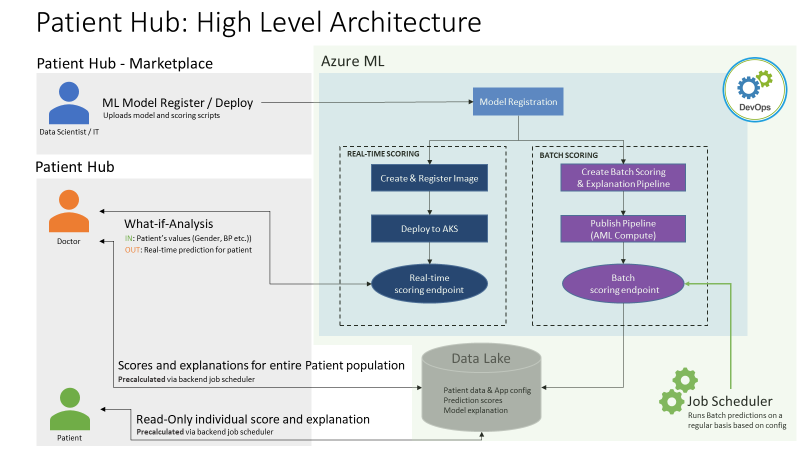

The next step is to deploy the model. As the PatientHub architecture below shows, there are two deployment paths: a real-time scoring path and a batch scoring path.

Figure 4: PatientHub architecture

Real-time scoring

In medical settings, patients and doctors often want to run real-time what-if analyses to see how a prediction changes in response to an intervention. For example, they might want to see how changing smoking habits would affect the predicted outcome. Real-time scoring is what makes this kind of interactive analysis possible.
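A what-if query boils down to scoring the same patient twice, once as-is and once with one feature changed, and comparing the two predictions. The sketch below shows that pattern with a toy logistic scoring function. Its features and weights are entirely hypothetical and only stand in for the deployed model; in PatientHub, each score would instead come from the real-time endpoint.

```python
import math
from typing import Callable, Dict

Record = Dict[str, float]

# Hypothetical weights for illustration only; NOT the trained PatientHub model
TOY_WEIGHTS = {"bias": -2.0, "num_medications": 0.05, "number_inpatient": 0.4, "smoker": 0.3}


def toy_score(record: Record) -> float:
    """Toy logistic model: returns a readmission probability in (0, 1)."""
    z = TOY_WEIGHTS["bias"] + sum(
        TOY_WEIGHTS[name] * value for name, value in record.items() if name in TOY_WEIGHTS
    )
    return 1.0 / (1.0 + math.exp(-z))


def what_if(record: Record, feature: str, new_value: float,
            score: Callable[[Record], float]):
    """Score the record before and after changing one feature."""
    changed = {**record, feature: new_value}  # leave the original record untouched
    return score(record), score(changed)


patient = {"num_medications": 12, "number_inpatient": 1, "smoker": 1}
before, after = what_if(patient, "smoker", 0, toy_score)
print(f"before: {before:.3f}, after: {after:.3f}")
```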

To create a real-time scoring service, we use the Azure Machine Learning SDK for Python to deploy the model to Azure Kubernetes Service (AKS), which serves as the backend for production scenarios. This takes three steps:

- Register the model.
- Prepare to deploy (specify assets, usage, and compute target).
- Deploy the model to the compute target.

Each step takes a few lines of code, as shown below:

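# Legacy image-based deployment workflow from azureml-sdk v1. Later v1 releases
# deprecate ContainerImage/Image in favor of Model.deploy() with an InferenceConfig.
from azureml.core.compute import AksCompute, ComputeTarget
from azureml.core.image import ContainerImage, Image
from azureml.core.webservice import AksWebservice, Webservice

# Step 1: register the model produced by the AutoML run
model = local_run.register_model(description=description,
                                 iteration=num_iterations - 2,
                                 tags=tags)

# Step 2: prepare to deploy by packaging the model, the scoring script
# and the conda environment into a container image
image_config = ContainerImage.image_configuration(runtime="python",
                                                  execution_script=script_file_name,
                                                  conda_file=conda_env_file_name,
                                                  tags={'area': "diabetes", 'type': "automl_classification"},
                                                  description="Image for ACE PatientHub Diabetes Analysis")

image = Image.create(name="patienthubdiabetesanalysis",
                     models=[model],  # the registered model object
                     image_config=image_config,
                     workspace=ws)
image.wait_for_creation(show_output=True)

# Step 3a: provision an AKS cluster as the compute target
prov_config = AksCompute.provisioning_configuration()
aks_name = 'patienthub'
aks_target = ComputeTarget.create(workspace=ws,
                                  name=aks_name,
                                  provisioning_configuration=prov_config)
aks_target.wait_for_completion(show_output=True)

# Step 3b: deploy the image to the AKS cluster as a web service
service = Webservice.deploy_from_image(workspace=ws,
                                       name='patienthub-diabetes',  # hypothetical service name
                                       image=image,
                                       deployment_config=AksWebservice.deploy_configuration(),
                                       deployment_target=aks_target)
service.wait_for_deployment(show_output=True)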
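Once deployed, the service is called over HTTPS. AKS web services deployed this way use key-based authentication by default, with the key sent as a bearer token. The request body format is defined by the scoring script (`script_file_name` above), which this post does not show. The sketch below assumes that script accepts a JSON object with a `data` list of records; the URI, key and field values are placeholders. It builds the request with the standard library but does not send it.

```python
import json
import urllib.request

# Placeholders: in the SDK, service.scoring_uri and service.get_keys()
# return the real values for the deployed web service
SCORING_URI = "https://example.invalid/score"
API_KEY = "<primary-key>"


def build_scoring_request(records, uri=SCORING_URI, key=API_KEY):
    """Build (but do not send) a POST request for the real-time endpoint.

    Assumes the scoring script reads a JSON body of the form {"data": [...]}.
    """
    body = json.dumps({"data": records}).encode("utf-8")
    return urllib.request.Request(
        uri,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {key}"},
    )


request = build_scoring_request([{"age": "[60-70)", "num_medications": 12}])
print(request.get_method(), request.full_url)
# To send it: urllib.request.urlopen(request) returns the service's JSON response
```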

Batch scoring

Because most medical history data is static, it is also common to run batch scoring on a regular schedule. For example, hospitals might pre-generate predictions to make the user experience faster and reduce infrastructure costs. Computing model explanations can also be very time-consuming, so it is more efficient to run the model and its explanations over the data in a batch process.
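The pre-generation idea can be sketched as follows: score all stored records in fixed-size batches ahead of time and keep the results in a lookup table, so the app only reads a cached value at request time. The batch scorer, patient IDs and features here are hypothetical placeholders for the registered model and real data.

```python
from typing import Callable, Dict, Iterator, List, Sequence, Tuple

Record = Tuple[str, dict]  # (patient_id, features)


def batches(items: Sequence, batch_size: int) -> Iterator[Sequence]:
    """Yield consecutive slices of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def precompute(records: Sequence[Record],
               score_batch: Callable[[List[dict]], List[float]],
               batch_size: int = 20) -> Dict[str, float]:
    """Score every record batch by batch; return patient_id -> prediction."""
    cache: Dict[str, float] = {}
    for chunk in batches(records, batch_size):
        ids = [pid for pid, _ in chunk]
        scores = score_batch([features for _, features in chunk])
        cache.update(zip(ids, scores))
    return cache


def fake_score_batch(feature_rows: List[dict]) -> List[float]:
    """Hypothetical batch scorer standing in for the registered model."""
    return [min(1.0, 0.1 * row["number_inpatient"]) for row in feature_rows]


records = [(f"patient-{i}", {"number_inpatient": i % 4}) for i in range(45)]
predictions = precompute(records, fake_score_batch, batch_size=20)
print(len(predictions), predictions["patient-3"])
```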

For batch scoring, we use Azure Machine Learning Pipelines instead of Azure Kubernetes Service, because pipelines are designed for this scenario. The first two steps (register the model and prepare to deploy) are analogous to those for real-time scoring; for the third step, we define and run an Azure Machine Learning Pipeline:

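from azureml.core import Experiment
from azureml.pipeline.core import Pipeline, PipelineParameter
from azureml.pipeline.steps import PythonScriptStep

# Pipeline parameter so the batch size can be overridden per run;
# its name must match the key used in pipeline_params below
batch_size_param = PipelineParameter(name="param_batch_size", default_value=20)

# input_images and label_dir (input data references), output_dir (PipelineData),
# compute_target (an AmlCompute cluster) and amlcompute_run_config are defined earlier;
# model is the Model registered in step 1
batch_score_step = PythonScriptStep(
    name="batch_scoring",
    script_name="batch_scoring.py",
    arguments=["--dataset_path", input_images,
               "--model_name", model.name,
               "--label_dir", label_dir,
               "--output_dir", output_dir,
               "--batch_size", batch_size_param],
    compute_target=compute_target,
    inputs=[input_images, label_dir],
    outputs=[output_dir],
    runconfig=amlcompute_run_config)

pipeline = Pipeline(workspace=ws, steps=[batch_score_step])
pipeline_run = Experiment(ws, 'batch_scoring').submit(pipeline, pipeline_params={"param_batch_size": 20})
pipeline_run.wait_for_completion(show_output=True)

# Publish the pipeline so it can be triggered through a REST endpoint
published_pipeline = pipeline_run.publish_pipeline(
    name="patienthub_batch_scoring",  # hypothetical name
    description="PatientHub diabetes readmission batch scoring",
    version="1.0")
print(published_pipeline.endpoint)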
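The pipeline step passes its settings to `batch_scoring.py` as command-line arguments, but the script itself is not shown. A plausible skeleton for its argument handling, assuming only the argument names from the step definition above (the types, defaults and help texts are our assumptions):

```python
import argparse


def parse_args(argv=None):
    """Parse the arguments that the batch_scoring pipeline step passes in."""
    parser = argparse.ArgumentParser(description="PatientHub batch scoring (sketch)")
    parser.add_argument("--dataset_path", required=True, help="input data location")
    parser.add_argument("--model_name", required=True, help="registered model name")
    parser.add_argument("--label_dir", required=True, help="label/metadata location")
    parser.add_argument("--output_dir", required=True, help="where predictions are written")
    parser.add_argument("--batch_size", type=int, default=20, help="records per batch")
    return parser.parse_args(argv)


# In the pipeline, Azure ML resolves data references to paths at run time;
# here we pass literal paths to exercise the parser locally
args = parse_args(["--dataset_path", "/data/in", "--model_name", "diabetes-model",
                   "--label_dir", "/data/labels", "--output_dir", "/data/out",
                   "--batch_size", "50"])
print(args.model_name, args.batch_size)
```

Pipeline parameter values arrive as strings on the command line, which is why `--batch_size` is converted with `type=int`.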

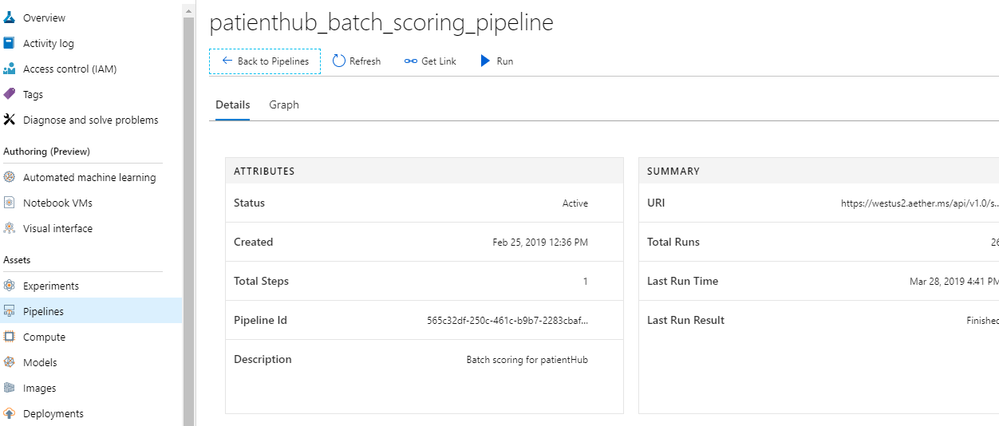

After the pipeline is published, it appears in the Azure portal and can be invoked through a REST endpoint. This lets users integrate the published Azure Machine Learning Pipeline with existing medical systems.
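Calling a published pipeline's REST endpoint takes an Azure Active Directory bearer token (the SDK can produce one, for example with `InteractiveLoginAuthentication().get_authentication_header()`) and a JSON body naming the experiment and any pipeline parameter overrides. A minimal sketch that builds, but does not send, such a request; the endpoint URL and token are placeholders:

```python
import json
import urllib.request

# Placeholders: published_pipeline.endpoint gives the real URL, and the SDK's
# authentication classes can produce the bearer token
PIPELINE_ENDPOINT = "https://example.invalid/pipelines/<pipeline-id>"
AAD_TOKEN = "<aad-access-token>"


def build_pipeline_trigger(experiment_name, parameters,
                           endpoint=PIPELINE_ENDPOINT, token=AAD_TOKEN):
    """Build (but do not send) a POST request that starts a published pipeline run."""
    body = {"ExperimentName": experiment_name, "ParameterAssignments": parameters}
    return urllib.request.Request(
        endpoint,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
    )


trigger = build_pipeline_trigger("batch_scoring", {"param_batch_size": 50})
print(json.loads(trigger.data))
```

An existing hospital system or scheduler could issue this request on a timer to refresh the pre-generated predictions.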

Figure 5: Azure Machine Learning Pipeline

Summary

In this blog post, we described how we trained the PatientHub machine learning model with AutoML and deployed it, both as a real-time service on an Azure Kubernetes Service cluster and as a batch scoring pipeline. To learn more about PatientHub, see our summary blog post on what PatientHub is. If you have any questions, feel free to leave a comment below.