This post has been republished via RSS; it originally appeared at: AI Customer Engineering Team articles.

This article shows how to deploy a model generated by Azure Machine Learning service (AML) to an Azure Function. Right now, AML supports a variety of choices to deploy models for inferencing: GPUs, FPGA, IoT Edge, and custom Docker images. Customers have asked us to support Azure Functions, an event-driven serverless compute platform that can also solve complex orchestration problems, as a model deployment endpoint within Azure Machine Learning service, and our AI platform engineering team is looking into this feedback to make it a seamless experience.

In the meantime, this blog post walks through the steps to manually download the model file and package it as part of Azure Functions for inferencing. The article uses Python code to build an end-to-end ML model and the Azure Function. The data and model are from the Forecast Energy Demand tutorial.

Why do customers want Azure Functions integrated with Azure ML service?

- There is no need to have pre-provisioned resources such as clusters

- Leverage event driven programming with several built-in triggers – for example, when the payload is uploaded to Storage, automatically trigger the Azure Function to score the payload using the AML model.

- There is no need to remember URLs or IP addresses to make POST requests.

Things to keep in mind

- When using Azure Functions with the consumption plan, there may be an impact due to ‘cold start’ scenarios. This blog explains this behavior.

- Machine learning models may be large and include several library dependencies. When inferencing (scoring), the model must be deserialized and then applied to the payload, which may lead to higher latency when coupled with ‘cold start’ scenarios.

- At this time, Azure Functions only supports Python dependencies that can be installed via pip install. If the model has other, more complex dependencies, this approach may not be viable.

- Azure Functions Python support recently went GA.
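One way to soften the deserialization cost mentioned in the list above is to load the model once per worker process and reuse it across invocations, instead of unpickling it on every request. The following is only a minimal sketch of that idea, not the approach used later in this post. `load_model` and the demo file are hypothetical names, and the first request after a cold start still pays the full load.

```python
import pickle
import tempfile
from functools import lru_cache
from pathlib import Path


@lru_cache(maxsize=1)
def load_model(model_path: str):
    """Unpickle the model once; later calls with the same path reuse it."""
    with open(model_path, "rb") as f:
        return pickle.load(f)


# Demo with a stand-in object, since the real fitted model is not available here.
demo_path = Path(tempfile.mkdtemp()) / "demo_model.pkl"
with open(demo_path, "wb") as f:
    pickle.dump({"name": "stand-in model"}, f)

first = load_model(str(demo_path))
second = load_model(str(demo_path))
print(first is second)  # True: the second call is served from the cache
```

Inside a function app, the same helper could be called from the request handler, so that only the first invocation in a warm process reads the file.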

Implementation

Here are the steps:

- Picking the model file that needs to be hosted in Azure Functions

- Building the Azure Functions scaffolding, testing and deploying to Azure.

Step 1: Picking a model

Picking the right model with the right features and setting up the correct parameters takes a lot of time and effort. Fortunately, Azure Automated ML makes this easy.

To try this, I am using the model that was built using automated ML and is part of the Forecasting Energy Demand tutorial at https://github.com/Azure/MachineLearningNotebooks/tree/master/how-to-use-azureml/automated-machine-learning/forecasting-energy-demand.

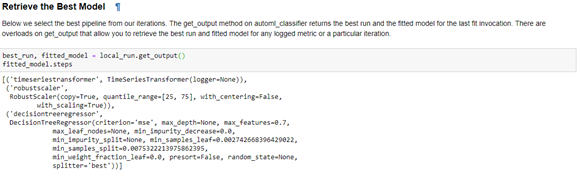

After completing the training of the model, automated ML provides the right ‘fitted model’.

Add these commands to the notebook to save the fitted model as a pickle file.

import pickle

# Use the file name that main.py loads later.
with open("forecastmodel.pkl", "wb") as f:
    pickle.dump(fitted_model, f)

The pickle file now appears in the notebook folder.

This file can now be downloaded to the local dev machine where the next set of steps will be carried out.
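Whether in the notebook or on the dev machine, it can be worth confirming that the pickle file loads back intact and checking its size, since large models add to load time. This is a minimal sketch under assumptions: `save_and_verify` is a hypothetical helper, and a plain dictionary stands in for `fitted_model` so the snippet runs on its own.

```python
import os
import pickle
import tempfile


def save_and_verify(model, path):
    """Pickle `model` to `path`, reload it, and return (reloaded, size_in_bytes)."""
    with open(path, "wb") as f:
        pickle.dump(model, f)
    with open(path, "rb") as f:
        reloaded = pickle.load(f)
    return reloaded, os.path.getsize(path)


# Stand-in for fitted_model so the sketch runs on its own.
stand_in = {"coefficients": [0.5, 1.5], "intercept": 2.0}
path = os.path.join(tempfile.mkdtemp(), "forecastmodel.pkl")
reloaded, size = save_and_verify(stand_in, path)
print(reloaded == stand_in, size > 0)  # True True
```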

The local dev machine has Docker and the Azure Functions developer tools (npm install -g azure-functions-core-tools) installed.

Step 2: Building the Azure Functions scaffolding and testing

This article explains how to develop an Azure Function using Python.

On my dev machine, I have a folder ‘blog’ where requirements.txt lists the Python packages required by the Function App.

requirements.txt

azure-functions==1.0.0b3
azure-functions-worker==1.0.0b3
grpcio==1.14.2
grpcio-tools==1.14.2
protobuf==3.6.1
six==1.12.0
numpy
pandas
azureml-sdk
scikit-learn

Inside this folder, I have a folder ‘HttpTrigger’. It holds the downloaded pickle file, which main.py loads from its own directory, and the following files that I created:

- main.py

- function.json

main.py

import logging
import pathlib
import pickle

import azure.functions as func
import azureml.train.automl  # keeps the AutoML classes referenced by the pickle importable
import pandas as pd


def main(req: func.HttpRequest) -> func.HttpResponse:
    logging.info('Python HTTP trigger function processed a request.')

    # Read clientId from the query string, falling back to the JSON body.
    clientId = req.params.get('clientId')
    logging.info('**********Processing********')
    if not clientId:
        try:
            req_body = req.get_json()
        except ValueError:
            pass
        else:
            clientId = req_body.get('clientId')

    # Load the model from the pickle file that sits next to main.py.
    with open(pathlib.Path(__file__).parent / 'forecastmodel.pkl', 'rb') as f:
        model = pickle.load(f)

    if clientId:
        logging.info(clientId)
        # Hard-coded sample input: two hourly rows.
        data = {'timeStamp': ['2017-02-01 02:00:00', '2017-02-01 03:00:00'], 'precip': [0.0, 0.0], 'temp': [33.61, 32.30]}
        test = pd.DataFrame(data, columns=['timeStamp', 'precip', 'temp'])
        logging.info('Input rows:\n%s', test)
        y_pred = model.predict(test)
        logging.info(y_pred)
        return func.HttpResponse(str(y_pred))
    else:
        return func.HttpResponse(
            "Please pass the correct parameters in the query string or in the request body",
            status_code=400
        )

The code uses ‘2017-02-01’ since the demand forecast tutorial includes the dataset from that year.

function.json

{
  "scriptFile": "main.py",
  "bindings": [
    {
      "authLevel": "anonymous",
      "type": "httpTrigger",
      "direction": "in",
      "name": "req"
    },
    {
      "type": "http",
      "direction": "out",
      "name": "$return"
    }
  ]
}
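The two bindings are what tie function.json to main.py: the `httpTrigger` binding named `req` is passed to `main` as its `req` argument, and the `http` output binding named `$return` means the function's return value becomes the HTTP response. As a rough sanity check, the sketch below parses the same configuration and pulls out those names. `binding_names` is a hypothetical helper, not part of the Azure Functions tooling.

```python
import json

FUNCTION_JSON = """
{
  "scriptFile": "main.py",
  "bindings": [
    {"authLevel": "anonymous", "type": "httpTrigger", "direction": "in", "name": "req"},
    {"type": "http", "direction": "out", "name": "$return"}
  ]
}
"""


def binding_names(config: dict) -> dict:
    """Map each binding direction ('in' / 'out') to the list of binding names."""
    names = {"in": [], "out": []}
    for binding in config["bindings"]:
        names[binding["direction"]].append(binding["name"])
    return names


config = json.loads(FUNCTION_JSON)
names = binding_names(config)
print(names)
```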

Testing the function locally

You’ll need to set up a Python virtual environment. For instructions, see this article.

python3.6 -m venv .functionenv
source .functionenv/bin/activate

And then install the required packages:

pip install -r requirements.txt

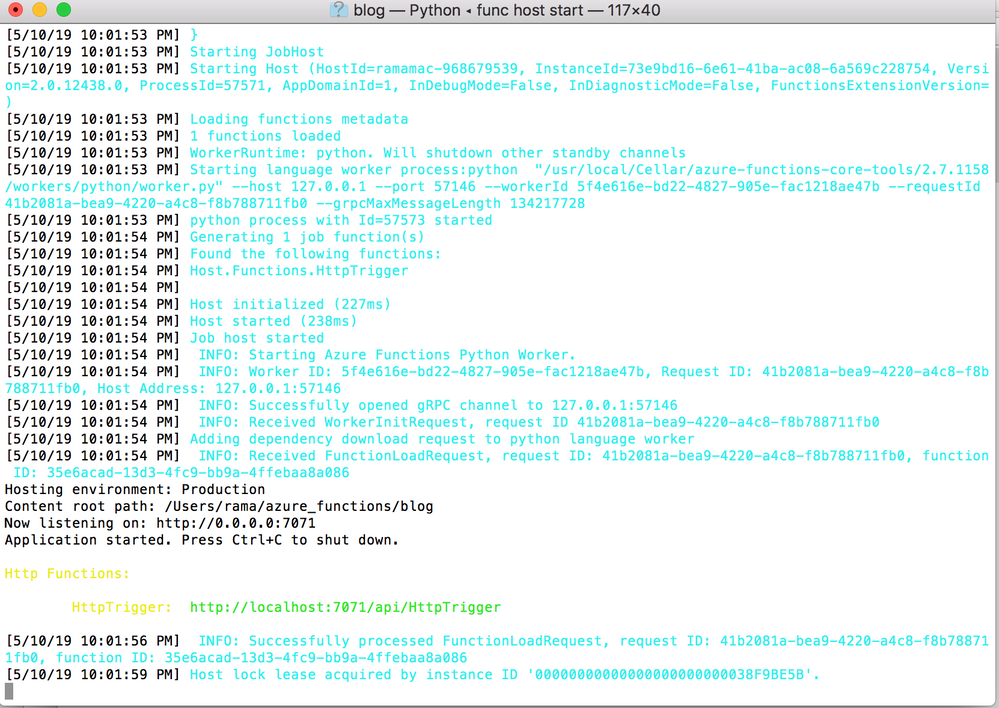
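After installing, a quick way to confirm that the packages main.py imports are visible inside the virtual environment is to probe for them. This is a small sketch using only the standard library. `missing_modules` is a hypothetical helper, and the list of top-level package names is taken from the imports in main.py.

```python
from importlib.util import find_spec


def missing_modules(module_names):
    """Return the top-level modules that cannot be found in this environment."""
    return [name for name in module_names if find_spec(name) is None]


# Top-level packages behind the imports in main.py. Finding "azure" or
# "azureml" only shows the namespace exists, not every submodule.
required = ["azure", "numpy", "pandas", "azureml"]
print("Missing:", missing_modules(required))
```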

From the ‘blog’ folder, start the Azure Functions runtime locally:

func host start

The Azure Function should start locally and provide the complete URL of the HttpTrigger API. You should see similar output to what appears below.

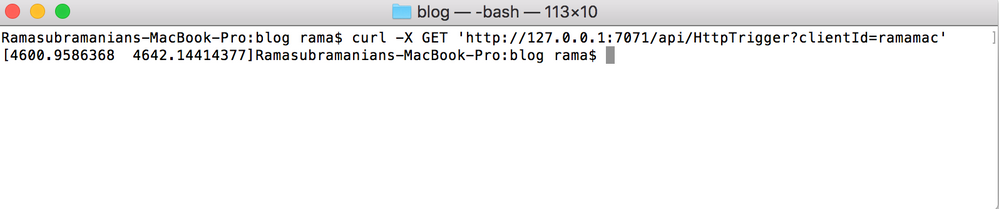

To test the function, send a request with curl from another shell. The response is the prediction.

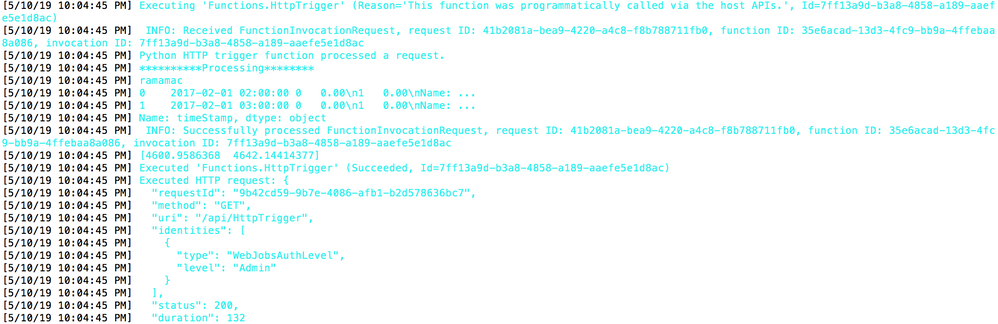
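The same request can also be sent from Python. The sketch below is illustrative only: it assumes the local Functions host is on its default port 7071 and serves the function under /api/HttpTrigger (the folder name), and `build_url` and `call_function` are hypothetical helpers. The `clientId` value is arbitrary, because main.py only checks that one was supplied.

```python
import urllib.parse
import urllib.request

# Assumed defaults: the local Functions host listens on port 7071 and exposes
# each HTTP-triggered function under /api/<function folder name>.
LOCAL_BASE_URL = "http://localhost:7071/api/HttpTrigger"


def build_url(client_id: str, base_url: str = LOCAL_BASE_URL) -> str:
    """Append the clientId query parameter that main.py looks for."""
    return base_url + "?" + urllib.parse.urlencode({"clientId": client_id})


def call_function(client_id: str, base_url: str = LOCAL_BASE_URL) -> str:
    """Send a GET request and return the response body as text."""
    with urllib.request.urlopen(build_url(client_id, base_url), timeout=30) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    try:
        print(call_function("demo-client"))
    except OSError as err:  # URLError and timeouts are OSError subclasses
        print("Function host not reachable:", err)
```

Passing a different `base_url` lets the same helper target the function app after it has been published.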

The output is the array [4600.9586368 4642.14414377], which is the demand prediction for the parameters hard-coded in ‘main.py’:

data = {'timeStamp':['2017-02-01 02:00:00','2017-02-01 03:00:00'],'precip':[0.0,0.0],'temp':[33.61,32.30]}

So the complete details are as follows:

| Timestamp | Precip | Temp | Demand |
| --- | --- | --- | --- |
| 2017-02-01 02:00:00 | 0.0 | 33.61 | 4600.9586368 |
| 2017-02-01 03:00:00 | 0.0 | 32.30 | 4642.14414377 |

You can modify main.py and get new prediction values.

The shell where the function was started also shows the logging output and other details.
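Rather than editing the hard-coded values in main.py, one option is to read them from the JSON request body. The following is a minimal sketch under assumptions: the payload shape (one list per column) and the `payload_to_columns` helper are hypothetical, chosen to match the `timeStamp`, `precip`, and `temp` columns that main.py passes to the DataFrame.

```python
import json

EXPECTED_COLUMNS = ["timeStamp", "precip", "temp"]


def payload_to_columns(payload: dict) -> dict:
    """Validate a request payload and return it in the column layout main.py uses."""
    columns = {}
    for name in EXPECTED_COLUMNS:
        values = payload.get(name)
        if not isinstance(values, list) or not values:
            raise ValueError(f"'{name}' must be a non-empty list")
        columns[name] = values
    # Every column needs the same number of rows to form a table.
    lengths = {len(v) for v in columns.values()}
    if len(lengths) != 1:
        raise ValueError("all columns must have the same number of rows")
    return columns


body = json.loads(
    '{"clientId": "demo", "timeStamp": ["2017-02-01 02:00:00"],'
    ' "precip": [0.0], "temp": [33.61]}'
)
data = payload_to_columns(body)
print(data)
```

In main.py, the returned dictionary could take the place of the hard-coded `data` before the DataFrame is built, with a `ValueError` mapped to a 400 response.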

You can deploy this function to Azure using the instructions from this tutorial. Remember to use the ‘--build-native-deps’ parameter; the dependencies are then built with Docker and the result is operationalized within Azure Functions.

I created an App Service plan and a function app within it, then published the function:

az appservice plan create --resource-group <rg name> --name <name> --subscription <subscription id> --is-linux
az functionapp create --resource-group <rg name> --os-type Linux --plan <plan name> --runtime python --name <function app name> --storage-account <storage account name> --subscription <subscription id>
func azure functionapp publish <function app name> --build-native-deps

At this point, you have taken the Demand Forecasting model and operationalized it as part of Azure Functions, which gives you a serverless approach to model inferencing.

In a subsequent blog, we will show how to use this custom docker image within Azure Machine Learning and the advantages of leveraging the AML registry and using MLOps for operationalizing the code.

Useful Links

- https://docs.microsoft.com/en-us/azure/azure-functions/functions-create-function-linux-custom-image

- https://hub.docker.com/_/microsoft-azure-functions-base

- https://github.com/Azure/azure-functions-python-worker/issues/340 --> use the latest core tools to get past this issue.

- https://docs.microsoft.com/en-us/azure/container-registry/container-registry-get-started-docker-cli

- https://github.com/Microsoft/LightGBM/issues/1369