This post has been republished via RSS; it originally appeared at Microsoft Tech Community - Latest Blogs.

Recently, my team worked with a customer who was looking for a better way to extract and expose marketing-related feature aggregates. The aggregates would be used to train a machine learning model that predicts the outcome of customer contacts, or touchpoints, to help determine whether they would ultimately lead to real sales opportunities.

Data feature aggregates are simply summary statistics or calculations performed on a set of data to derive new features or attributes. Feature aggregates are commonly used in machine learning to provide more informative and relevant input data for model training purposes.
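As a minimal sketch of what a feature aggregate looks like, the Python snippet below condenses a handful of raw values into a few summary features. The purchase amounts and feature names are hypothetical and are used only for illustration.

```python
from statistics import mean

# Hypothetical raw data: purchase amounts for a single customer.
purchase_amounts = [120.0, 80.0, 100.0]

# Each aggregate condenses the raw rows into one numeric feature.
features = {
    "purchase_count": len(purchase_amounts),
    "purchase_total": sum(purchase_amounts),
    "purchase_mean": mean(purchase_amounts),
    "purchase_max": max(purchase_amounts),
}

print(features)
```

Each entry in `features` would become one column in a training file, alongside the customer's ID.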

Machine learning has become an essential tool for businesses to gain insights and improve decision-making. To train machine learning models, it is crucial to have a robust training dataset that includes relevant features. However, creating these features can be a time-consuming and complex task. Azure Data Factory - Data Flows is an excellent tool for aggregating features and improving the quality of your training dataset.

Azure Data Factory is a cloud-based data integration service that enables you to create, schedule, and manage data pipelines. Its Data Flows feature provides a visual, drag-and-drop interface for building data transformation logic. Data Flows can process large amounts of data quickly and efficiently, making the feature well suited to feature aggregation.

Feature engineering is the process of selecting and transforming raw data into meaningful features that can be used to train machine learning models. However, feature engineering can be a challenging task, especially when working with large datasets. Azure Data Factory - Data Flows simplifies the feature engineering process by providing a visual interface for building data transformation logic. It includes a wide range of data transformation activities, including aggregation, filtering, sorting, and joining.

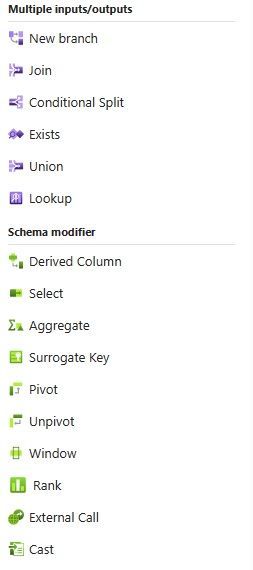

Below are screenshots of the Azure Data Factory – Data flow mapping activities:

The aggregation activity in Data Flows is particularly useful for feature aggregation. It allows you to group data by one or more columns and calculate aggregate values for each group. This process can be repeated for each relevant feature, resulting in a dataset with relevant features for machine learning model training. By using Data Flows to aggregate features, you can easily create new feature aggregates for inclusion in machine learning training files.
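The group-and-aggregate logic described above can be sketched in plain Python. This is not how Data Flows is implemented, only an illustration of the same idea. The touchpoint rows, the `aggregate_by_customer` helper, and the output column names are all hypothetical.

```python
from collections import defaultdict

# Hypothetical marketing touchpoint rows: (Customer_ID, channel, cost).
touchpoints = [
    ("C001", "email", 2.0),
    ("C001", "webinar", 10.0),
    ("C002", "email", 2.0),
    ("C001", "email", 2.0),
]


def aggregate_by_customer(rows):
    """Group rows by Customer_ID and compute a count and a total per group."""
    groups = defaultdict(lambda: {"touchpoint_count": 0, "total_cost": 0.0})
    for customer_id, _channel, cost in rows:
        groups[customer_id]["touchpoint_count"] += 1
        groups[customer_id]["total_cost"] += cost
    return dict(groups)


touchpoint_features = aggregate_by_customer(touchpoints)
print(touchpoint_features)
```

Grouping by more than one column would only change the dictionary key, for example to a `(Customer_ID, channel)` tuple.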

When creating a training dataset for machine learning models, it is essential to have relevant features that capture the underlying patterns and relationships in the data. As noted above, engineering those features can be time-consuming and challenging, especially with large datasets.

The process consists of three steps:

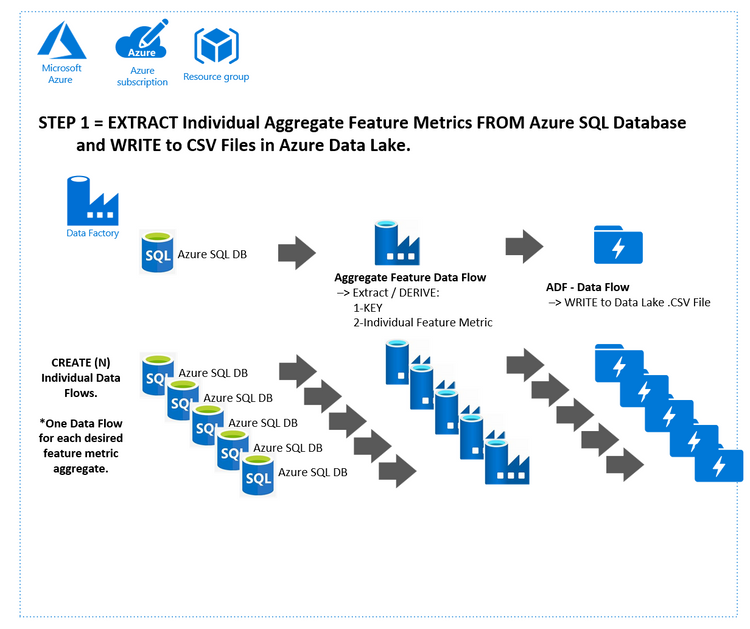

Step 1 of the process is depicted below:

In this step, we create individual Azure Data Factory Data Flows to extract, derive, and aggregate the desired (numeric) features from the Azure SQL database. The possible database sources for these aggregate features are practically unlimited, as are the use cases. Typical scenarios might include customer aggregates across sales, marketing, and CRM databases.

The pattern is simple: create an individual Azure Data Factory Data flow for each aggregate that will be used as an input to a machine learning training file. The key to success is to include a common ID in every output, so that we can later perform a join on this field across all aggregates. A common ID column might be ‘Customer_ID’.

By creating each aggregate individually – each with its own Azure Data Factory–Data flow, you have the ability to completely customize the logic and workflow to produce each aggregate feature.
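A rough Python analogue of this pattern is one small function per aggregate, each returning rows that carry the shared ‘Customer_ID’ column. The source rows, function names, and output column names below are hypothetical. In the approach described here, each function would correspond to a separate Data flow.

```python
# Hypothetical source rows from two different tables.
sales_rows = [
    {"Customer_ID": "C001", "amount": 500.0},
    {"Customer_ID": "C001", "amount": 250.0},
    {"Customer_ID": "C002", "amount": 100.0},
]
email_rows = [
    {"Customer_ID": "C001", "opened": True},
    {"Customer_ID": "C002", "opened": False},
    {"Customer_ID": "C002", "opened": True},
]


def total_sales(rows):
    """Aggregate 1: total sales amount per customer."""
    totals = {}
    for row in rows:
        cid = row["Customer_ID"]
        totals[cid] = totals.get(cid, 0.0) + row["amount"]
    return [{"Customer_ID": cid, "total_sales": v} for cid, v in sorted(totals.items())]


def emails_opened(rows):
    """Aggregate 2: number of opened emails per customer."""
    counts = {}
    for row in rows:
        cid = row["Customer_ID"]
        counts[cid] = counts.get(cid, 0) + (1 if row["opened"] else 0)
    return [{"Customer_ID": cid, "emails_opened": v} for cid, v in sorted(counts.items())]


# Every output includes Customer_ID, so the outputs can be joined later.
total_sales_output = total_sales(sales_rows)
emails_opened_output = emails_opened(email_rows)
print(total_sales_output)
print(emails_opened_output)
```

Keeping each aggregate in its own function mirrors the benefit described above: the logic for one feature can be changed without touching the others.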

Underlying the visual interface of Data Flows is Apache Spark. Spark is a powerful open-source distributed computing engine that provides fast and efficient processing of large-scale data workloads. Data Flows leverage the power of Spark to process big data at scale and provide a scalable and efficient data integration service platform. By utilizing Spark, Data Flows can handle large amounts of data and perform complex data transformations quickly and efficiently. This makes it an ideal tool for feature engineering and data preparation for machine learning model training.

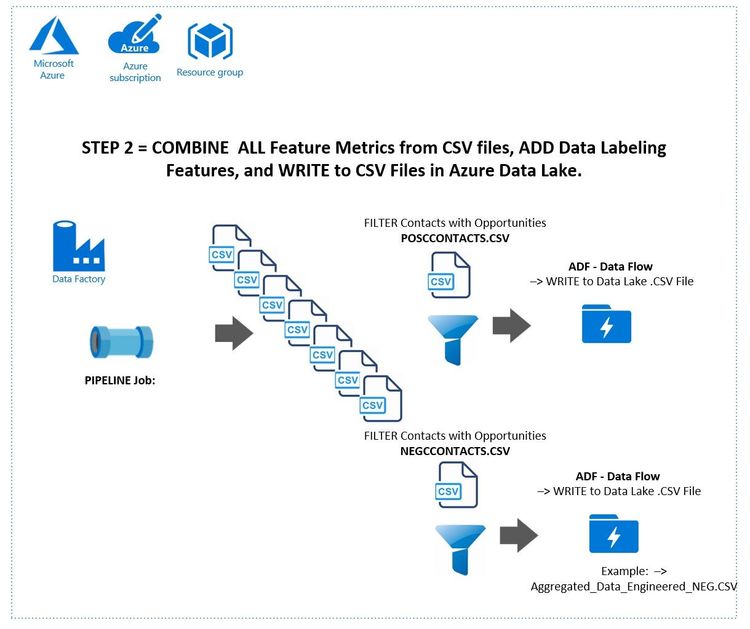

Step 2 is depicted below:

In this step, we join all the individual aggregates together using a Union Data flow activity. As in Step 1, the key to success is to have a common ID, such as ‘Customer_ID’, included in each CSV output, so that we can perform a join on this field across all the desired aggregates. Additionally, there are ‘branch’ activities in this Data flow that filter the rows and assign ‘label’ column values, such as ‘WinLossFlag’, to our CSV dataset. These labels are then used for training our machine learning models.
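The join-then-label logic of this step can be sketched in Python as follows. The aggregate values, the `won_customers` set, and the `join_on_customer_id` helper are hypothetical. The 1/0 encoding of ‘WinLossFlag’ is also an assumption, since the original flows may encode it differently.

```python
# Hypothetical per-customer aggregates, keyed by Customer_ID.
sales_by_customer = {"C001": 750.0, "C002": 100.0, "C003": 40.0}
opens_by_customer = {"C001": 1, "C002": 1, "C003": 0}

# Hypothetical outcome data: customers whose contacts became real opportunities.
won_customers = {"C001"}


def join_on_customer_id(**aggregates):
    """Inner join of named aggregates on their shared Customer_ID keys."""
    common_ids = set.intersection(*(set(agg) for agg in aggregates.values()))
    return [
        {"Customer_ID": cid, **{name: agg[cid] for name, agg in aggregates.items()}}
        for cid in sorted(common_ids)
    ]


joined = join_on_customer_id(
    total_sales=sales_by_customer, emails_opened=opens_by_customer
)

# Two branches: each filters the joined rows and assigns a label value.
# Assumption: 1 marks a win and 0 marks a loss.
positive = [
    {**row, "WinLossFlag": 1} for row in joined if row["Customer_ID"] in won_customers
]
negative = [
    {**row, "WinLossFlag": 0} for row in joined if row["Customer_ID"] not in won_customers
]
print(positive)
print(negative)
```

An inner join is used here so that only customers present in every aggregate reach the training data. An outer join with default values would be the alternative if missing aggregates should be kept.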

A Word about Labels

Labels in machine learning are used to train supervised learning models. In supervised learning, the machine learning algorithm is trained on a labeled dataset, which means that each data point in the dataset is associated with a label. The label is the output variable or the target variable that the machine learning model is trying to predict.

Labels are important in machine learning because they provide the ground truth for the machine learning algorithm to learn from. By training on a labeled dataset, the machine learning algorithm can learn the relationship between the input features and the output variable. This relationship can then be used to make predictions on new, unseen data.

Labels can be binary or categorical, such as true/false, yes/no, or red/blue/green. They can also be continuous, such as the price of a house or the temperature of a room. The type of label used depends on the type of machine learning problem being solved.
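To make the distinction between input features and the label concrete, here is a small Python sketch that separates a labeled dataset into the two parts a supervised learning algorithm expects. The rows and the `split_features_and_label` helper are hypothetical. ‘WinLossFlag’ is treated as a binary label with an assumed 1/0 encoding.

```python
# Hypothetical labeled rows: two feature columns plus a binary label column.
labeled_rows = [
    {"total_sales": 750.0, "emails_opened": 1, "WinLossFlag": 1},
    {"total_sales": 100.0, "emails_opened": 1, "WinLossFlag": 0},
]

LABEL_COLUMN = "WinLossFlag"


def split_features_and_label(rows, label_column):
    """Return (X, y): feature values per row, and the label per row."""
    X = [[value for key, value in row.items() if key != label_column] for row in rows]
    y = [row[label_column] for row in rows]
    return X, y


X, y = split_features_and_label(labeled_rows, LABEL_COLUMN)
print(X)
print(y)
```

A model is then trained to map each row of `X` to the matching entry of `y`.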

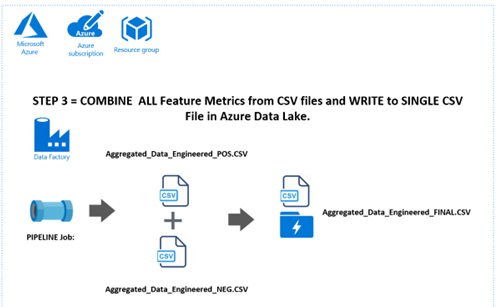

Step 3 is depicted below:

In this step, we simply combine all the labeled feature aggregates (positive and negative) into a single output file for machine learning model training purposes.
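A minimal Python sketch of this final combine step is shown below, using only the standard library. The row contents and the `write_training_csv` helper are hypothetical. An in-memory buffer stands in for the real output file.

```python
import csv
import io

# Hypothetical labeled rows produced by the positive and negative branches.
positive_rows = [{"Customer_ID": "C001", "total_sales": 750.0, "WinLossFlag": 1}]
negative_rows = [
    {"Customer_ID": "C002", "total_sales": 100.0, "WinLossFlag": 0},
    {"Customer_ID": "C003", "total_sales": 40.0, "WinLossFlag": 0},
]


def write_training_csv(rows, stream):
    """Write rows that share the same columns to a CSV stream with a header."""
    fieldnames = list(rows[0].keys())
    writer = csv.DictWriter(stream, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)


# Stack the two labeled sets; both must have identical columns.
training_rows = positive_rows + negative_rows

buffer = io.StringIO()
write_training_csv(training_rows, buffer)
training_csv = buffer.getvalue()
print(training_csv)
```

To write an actual file, the same function can be called with a handle from `open(path, "w", newline="")` in place of the buffer.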

The benefits of this approach include the following:

- Live data: features come directly from ‘live’ data sources, e.g., Azure SQL databases.

- Individually Verifiable Metrics – Auditable CSV outputs for EACH aggregate metric.

- Schedulable – Data flows can run as part of Data Factory pipelines.

- A simple, powerful, and elegant approach to performing common and/or complex data engineering tasks with minimal coding effort.

- Flexible pattern: because an individual Data Factory Data flow produces each aggregate metric, the underlying Spark architecture can be leveraged to derive, aggregate, and summarize critical data into meaningful, insightful features for making future machine learning predictions.

- Interactive Debugging:

- In Azure Data Factory - Data Flows, debugging can be done using the Debug mode, which allows you to run your data transformation logic in a debug environment. The debug environment provides a visual interface that allows you to see the data as it flows through each step of the transformation process. This can help you identify any errors or issues in your data transformation logic and fix them before deploying it to production.

- In Debug mode, you can also inspect the data at any point in your transformation logic: with a debug session active, the Data Preview tab on each transformation shows a sample of the data as it looks after that step, so you can confirm that it is in the expected format. Moving from one transformation to the next lets you see how the data is being transformed at each step. At the pipeline level, a breakpoint (Debug Until) can be set on an activity so that a debug run stops at that point.

- Debugging in Azure Data Factory - Data Flows is an essential tool for ensuring the quality and accuracy of your data transformation logic. By identifying and fixing errors early in the development process, you can save time and resources and ensure that your data transformation logic is working correctly when deployed to production.
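The kind of check described above, confirming that the data at a given step is in the expected format, can also be expressed in code and run against a sampled output. The sketch below is a hypothetical Python helper, not a Data Factory feature. The expected columns and the sample rows are assumptions made for illustration.

```python
# Hypothetical expected schema for one output: column name -> Python type.
EXPECTED_COLUMNS = {"Customer_ID": str, "total_sales": float, "WinLossFlag": int}


def find_format_issues(rows, expected=EXPECTED_COLUMNS):
    """Return human-readable issues for missing columns or wrong value types."""
    issues = []
    for index, row in enumerate(rows):
        missing = set(expected) - set(row)
        if missing:
            issues.append(f"row {index}: missing columns {sorted(missing)}")
        for column, expected_type in expected.items():
            if column in row and not isinstance(row[column], expected_type):
                issues.append(f"row {index}: {column} is not {expected_type.__name__}")
    return issues


# Hypothetical sample: the second row is malformed on purpose.
sample = [
    {"Customer_ID": "C001", "total_sales": 750.0, "WinLossFlag": 1},
    {"Customer_ID": "C002", "total_sales": "n/a"},
]

issues = find_format_issues(sample)
for issue in issues:
    print(issue)
```

An empty `issues` list means every sampled row matched the expected columns and types.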

Azure Data Factory - Data Flows can change the way machine learning training files are created. By streamlining the feature engineering process, Data Flows enables businesses to create relevant features quickly and efficiently. This process can lead to more accurate and robust machine learning models, resulting in better insights and more informed decision-making.

In conclusion, the art of feature aggregation is a critical component of machine learning model training. With Azure Data Factory - Data Flows, businesses can simplify the feature engineering process and create relevant features quickly and efficiently. By including relevant features in machine learning training files, businesses can improve the accuracy and robustness of their machine learning models, leading to better insights and more informed decision-making.