This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Introduction

BeeGFS/BeeOND is a popular parallel filesystem used to handle the I/O requirements of many HPC workloads. The BeeGFS/BeeOND configuration defaults are reasonable but not optimal for all I/O patterns. In this post we provide tuning suggestions to improve BeeGFS/BeeOND performance for a number of specific I/O patterns. The following tuning options are explored, with examples of how they are tuned for different I/O patterns:

1. Number of managed disks or local NVMe SSDs per VM

2. BeeGFS chunksize/Number of targets

3. Number of metadata servers

Deploying BeeGFS/BeeOND

The azurehpc GitHub repository contains scripts to automatically deploy BeeGFS/BeeOND parallel filesystems on Azure (see the examples directory).

git clone git@github.com:Azure/azurehpc.git

Disk layout considerations

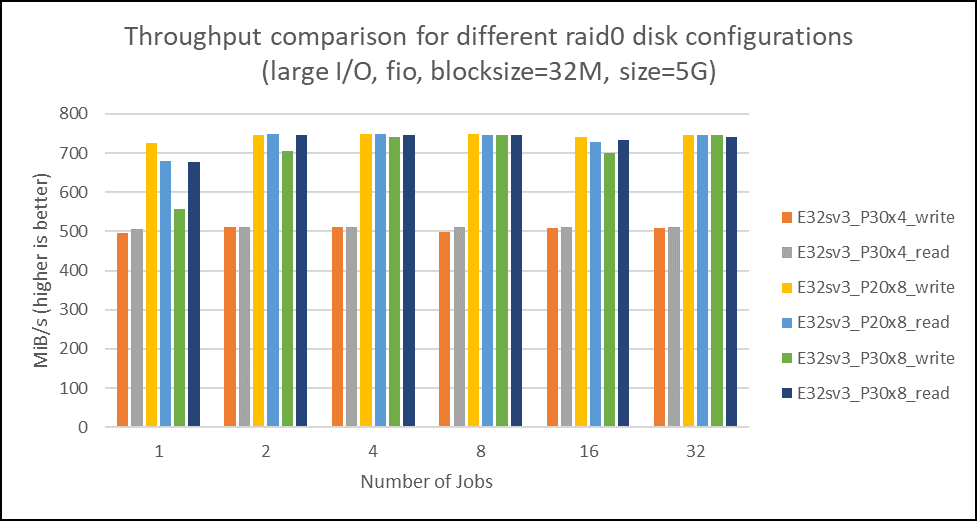

When deploying a BeeGFS parallel filesystem using managed disks, the number and type of disks need to be carefully considered. The VM's disk throughput/IOPS limits dictate the maximum amount of I/O each VM in the BeeGFS parallel filesystem can support. Azure managed disks have built-in redundancy: at a minimum (LRS), three copies of the data are stored, so RAID0 striping is enough. In general, for large throughput, striping a larger number of slower disks is preferred over striping fewer faster disks. Fig. 1 shows that 8xP20 disks give better performance than 4xP30, and that 8xP30 does not give any better performance because of the VM (E32s_v3) disk throughput throttling limit.
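
The interaction between the striped disks and the VM-level limit can be sketched as a simple minimum. This is only a rough model: the function name `effective_throughput` is hypothetical, the numbers are placeholders rather than Azure specifications, and real results (as in Fig. 1) also depend on IOPS limits and caching.

```shell
# Estimate the usable striped throughput of one storage VM (MB/s).
# Arguments: number of disks, per-disk throughput, VM throughput limit.
# The values used below are placeholders, not Azure specifications.
effective_throughput() {
    et_raw=$(( $1 * $2 ))          # RAID0: disks x per-disk throughput
    if [ "$et_raw" -gt "$3" ]; then
        echo "$3"                  # the VM-level throttle is the bottleneck
    else
        echo "$et_raw"             # the disks are the bottleneck
    fi
}

effective_throughput 4 100 700     # disk-bound case
effective_throughput 8 100 700     # VM-bound case
```

Once the VM limit is reached, adding more or faster disks no longer helps, so the disk layout is best sized against the published limits of the chosen VM type.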

In the case of local NVMe SSDs (e.g. Lsv2), the choice of the number of NVMe devices per VM may depend on the I/O pattern and the size of the parallel filesystem. For large throughput and a large parallel filesystem, striping multiple NVMe devices per VM (RAID0) may be preferred from a performance and management/deployment perspective, but if IOPS are more important, a single NVMe device per VM may give better performance.

BeeGFS/BeeOND chunksize/number of targets

A BeeGFS parallel filesystem consists of several storage targets; typically each VM is a storage target. A storage target may use managed disks or local NVMe SSDs, which can be striped with RAID0. The chunksize is the amount of data sent to each target, to be processed in parallel. The BeeGFS/BeeOND default chunksize is 512k and the default number of storage targets is 4.

beegfs-ctl --getentryinfo /beegfs

EntryID: root

Metadata buddy group: 1

Current primary metadata node: cgbeegfsserver-4 [ID: 1]

Stripe pattern details:

+ Type: RAID0

+ Chunksize: 512K

+ Number of storage targets: desired: 4

+ Storage Pool: 1 (Default)

Fortunately, BeeGFS lets you change the chunksize and number of storage targets for a directory. This means that you can have different directories tuned for different I/O patterns by adjusting the chunksize and number of targets.

For example, to set the chunksize to 1MB and the number of storage targets to 8:

beegfs-ctl --setpattern --chunksize=1m --numtargets=8 /beegfs/chunksize_1m_4t
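
After changing a pattern it can be useful to confirm what the directory now reports. The following is a minimal sketch that pulls the chunksize and desired target count out of `beegfs-ctl --getentryinfo` output, assuming the output format shown earlier in this post. The helper name `parse_stripe_pattern` is hypothetical, and the sample lines fed to it are illustrative text, not captured output.

```shell
# Extract the chunksize and desired target count from
# `beegfs-ctl --getentryinfo` output read on stdin.
parse_stripe_pattern() {
    awk -F': *' '
        /Chunksize:/                 { chunk = $2 }     # e.g. "+ Chunksize: 512K"
        /Number of storage targets:/ { targets = $NF }  # last field is the count
        END { print chunk, targets }
    '
}

# Illustrative input; on a BeeGFS client one would instead run:
#   beegfs-ctl --getentryinfo /beegfs/chunksize_1m_4t | parse_stripe_pattern
printf '%s\n' '+ Type: RAID0' '+ Chunksize: 1M' \
    '+ Number of storage targets: desired: 8' | parse_stripe_pattern
```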

A rough formula for setting the optimal chunksize is

Chunksize = I/O Blocksize / Number of targets
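
As a worked example of this rule of thumb, the sketch below divides the I/O blocksize by the number of targets and then adjusts the result to a value that can be passed to `--chunksize`. The function name `suggest_chunksize_kib` is hypothetical. Rounding down to a power of two is an assumption about what BeeGFS accepts, and the 64k floor is the minimum chunksize mentioned later in this post.

```shell
# Suggest a chunksize in KiB following:
#   chunksize = I/O blocksize / number of targets
# Arguments: I/O blocksize in KiB, number of targets.
suggest_chunksize_kib() {
    sc_raw=$(( $1 / $2 ))
    sc_chunk=64                      # floor: 64 KiB minimum chunksize
    # Assumption: round down to the nearest power of two >= 64 KiB.
    while [ $(( sc_chunk * 2 )) -le "$sc_raw" ]; do
        sc_chunk=$(( sc_chunk * 2 ))
    done
    echo "$sc_chunk"
}

suggest_chunksize_kib 8192 8    # 8 MiB blocks over 8 targets
suggest_chunksize_kib 32 1      # small blocks fall back to the minimum
```

With 8 MiB blocks over 8 targets this suggests 1024 KiB, which matches the 1m chunksize and 8 targets used in the `--setpattern` example above.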

Number of Metadata Servers

BeeGFS parallel filesystems that do a lot of metadata operations (e.g. creating files, deleting files, stat operations on files, checking file attributes/permissions) need the right number and type of metadata servers set up to perform well. In the case of BeeOND, it is important to be aware that by default only one metadata server is configured, no matter how many nodes are in your BeeOND parallel filesystem. Fortunately, you can change the default number of metadata servers used in BeeOND.

To increase the number of metadata servers in a BeeOND parallel filesystem to 4:

beeond start -P -m 4 -n hostfile -d /mnt/resource/BeeOND -c /mnt/BeeOND
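
When the metadata server count is chosen by a job script, a small guard can catch an obviously wrong value before BeeOND is started. The sketch below only prints the command. The helper name `beeond_start_cmd` is hypothetical, and the check that the count must not exceed the number of hosts in the hostfile is an assumption about how BeeOND places metadata servers.

```shell
# Print a `beeond start` command with a chosen number of metadata servers.
# Arguments: hostfile path, metadata server count.
beeond_start_cmd() {
    bs_nodes=$(grep -c . "$1")       # non-empty lines, one host per line
    # Assumption: at most one metadata server per node in the hostfile.
    if [ "$2" -gt "$bs_nodes" ]; then
        echo "error: $2 metadata servers requested, only $bs_nodes nodes" >&2
        return 1
    fi
    echo "beeond start -P -m $2 -n $1 -d /mnt/resource/BeeOND -c /mnt/BeeOND"
}
```

For example, `beeond_start_cmd hostfile 4` prints the command shown above when `hostfile` lists at least four nodes, and fails with an error otherwise.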

Large Shared Parallel I/O

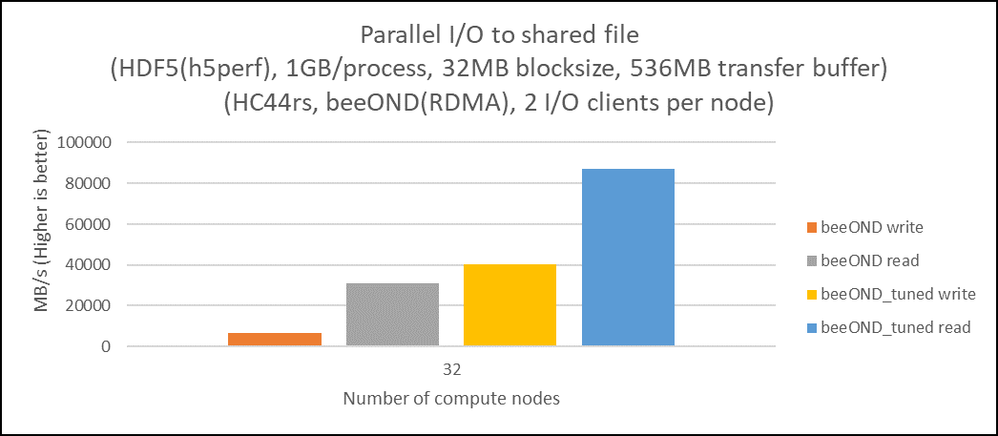

The shared parallel I/O format is common in HPC: all parallel processes write to and read from the same file (N-1 I/O). Examples of this format are MPI-IO, HDF5 and NetCDF. The default BeeGFS/BeeOND settings are not optimal for this I/O pattern; you can get significant performance improvements by increasing the number of storage targets.

It is also important to be aware that the number of targets used for shared parallel I/O limits the maximum size of the file, because the file is striped only across those targets.

Small I/O (high IOPS)

BeeGFS/BeeOND is primarily designed for large throughput I/O, but there are times when the I/O pattern of interest is high IOPS. The default BeeGFS configuration is not ideal for this I/O pattern, but some tuning modifications can improve performance. To improve IOPS it is usually best to reduce the chunksize to the BeeGFS/BeeOND minimum of 64k (assuming the I/O blocksize is < 64k) and reduce the number of targets to 1.
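
A minimal sketch of applying this tuning to a dedicated directory is shown below. It only prints the `beegfs-ctl` command so that it can be reviewed before being run. The helper name `small_io_pattern_cmd` and the directory `/beegfs/small_io` are hypothetical.

```shell
# Print a --setpattern command applying the small-I/O tuning described
# above (64k chunksize, 1 target) when the I/O blocksize is below 64 KiB.
# Arguments: directory, dominant I/O blocksize in KiB.
small_io_pattern_cmd() {
    if [ "$2" -lt 64 ]; then
        echo "beegfs-ctl --setpattern --chunksize=64k --numtargets=1 $1"
    else
        echo "blocksize $2 KiB is not below 64 KiB; tuning not applied" >&2
        return 1
    fi
}

small_io_pattern_cmd /beegfs/small_io 4   # e.g. 4 KiB random I/O
```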

Fig. 3 BeeGFS/BeeOND IOPS performance is improved by reducing the chunksize to 64k and setting the number of storage targets to 1.

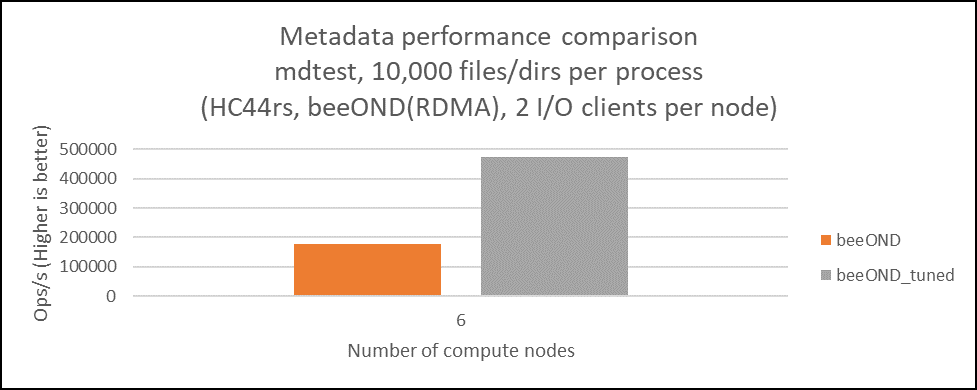

Metadata performance

The number and type of metadata servers affect performance for metadata-sensitive I/O. BeeOND, for example, has only one metadata server by default; adding more metadata servers can significantly improve performance.

Fig. 4 BeeOND metadata performance can be significantly improved by increasing the number of metadata servers.

Summary

BeeGFS/BeeOND is a popular parallel filesystem used for many different I/O patterns. The default BeeGFS/BeeOND configuration is reasonable for many I/O scenarios, but not always optimal. By applying some simple BeeGFS/BeeOND tuning optimizations, significant improvements in I/O performance are possible for the I/O patterns outlined in this post. If BeeGFS/BeeOND is required to support multiple different I/O patterns, each can be tuned separately and applied to a specific directory.