This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

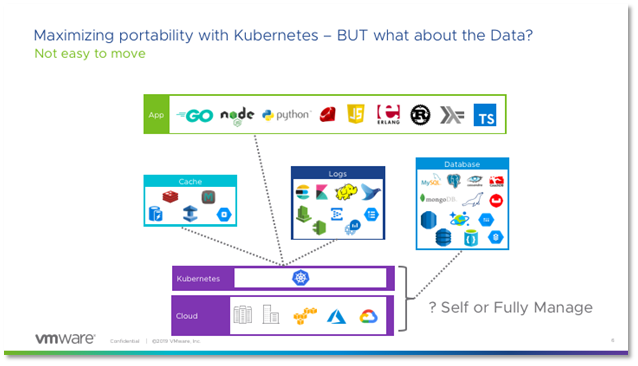

As more applications become cloud native, with Kubernetes as a normalizing layer, portability between public clouds becomes easier. Theoretically, an application can now move easily between AWS, Azure, and GCP.

However, there are a couple of questions that you still have to consider:

- Should I connect to cloud services such as ML/AI, DevOps tool chains, DBs, Storage, etc.? This might lock me in.

- Should I create my own PostgreSQL database and manage it?

This decision point really lies between implementing a self-managed or a fully managed database.

Before proceeding, let’s clarify the definitions of “self-managed” and “fully managed” within the context of this blog.

- A self-managed database is an implementation of a specific database configuration in which the DevOps admin manages the entire lifecycle of the database. This includes provisioning the database with the appropriate configuration (including HA, encryption, security, etc.), as well as managing backups, upgrades, security patching, etc. This is fairly complex and requires expertise, but it may be necessary given business requirements such as legacy dependencies that make a move to the cloud difficult, regulatory compliance requirements like GDPR, auditing, or an existing investment in your own datacenter. To see the complexities of self-managed databases, specifically on just the scaling portion, please review “Scaling PostgreSQL for Large Amounts of Data”.

- A fully managed database is a database consumed as a service from a managed service provider (e.g. Azure) or even from internal IT. In this scenario, the DevOps admin no longer needs to worry about configuration, backups, upgrades, or security patching. As noted in Azure’s documentation for Azure Database for PostgreSQL, it has built-in HA, scale, etc. You do still need to set proper guardrails on the database: who or what has access, and what they can access. For the purposes of this blog, we will consider Hyperscale services like Azure Database for PostgreSQL or Azure Cosmos DB.

Decision points

There are multiple factors you should be considering in making this decision. I’ll cover a few (but not all) of these decision points and review how they apply to a sample application.

Specific applications could have dependencies that cannot be provided on specific database types and/or database versions. A perfect example is a financial application which uses an older version of a DB such as Ingres DB, which isn’t even available as a managed service. The operations team would have to roll out Ingres DB on Azure compute nodes and manage the entire lifecycle. Another healthcare application may have requirements to use an older version of Postgres that cannot be upgraded due to the simple fact that developers (and/or funds) are not available to upgrade the application code to use newer versions of Postgres. In these circumstances, the easy and obvious decision would be to roll your own DB on the Public Cloud environment.

Database redundancy and high availability are another factor. While potentially simple for small applications, the configuration can become onerous for an application running at scale. Hence, in production environments running mission critical workloads with strict availability requirements, using a managed service such as Azure Database for PostgreSQL simplifies deployments and significantly reduces time to production. HA is a configuration choice on Azure, whereas configuring anything similar in a self-managed database requires advanced knowledge and skills.

Whether the goal is to save primary (tier 1) storage space or to ensure disaster recovery, the ability to consistently back up the database and restore it is generally a business requirement. Building proper backup and restore mechanisms for a self-managed database requires full knowledge of the backend and storage. Several parts of the operation need to be designed, including:

- Setting up and configuring initial and scalable backend storage in multiple tiers – where the first tier is potentially cache and the last tier is “cold” backup storage.

- Understanding and setting up how to restore and recover data as quickly as possible.

- When and how to scale the storage

- And so on…

This is complex, but it can be built and maintained at substantial operations and capital cost. With a managed service such as Azure Database for PostgreSQL, however, these capabilities are available as configuration options. Not only can data be backed up, but the backup retention period, local vs geo redundancy, and the type of backup storage can all be selected. In addition, features like encryption are built-in default capabilities of the product. Restoring is also easy, and the precise point in time to restore to can be selected.
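To make the relationship between retention days and point-in-time restore concrete, here is a minimal sketch in Python. It only models the basic idea that a restore target must fall inside the retention window. The function name `can_restore_to` and the 7-day value are assumptions for illustration, and a real managed service may apply additional limits.

```python
from datetime import datetime, timedelta, timezone


def can_restore_to(target: datetime, now: datetime, retention_days: int) -> bool:
    """Return True if `target` lies inside the backup retention window.

    Hypothetical helper: it models only "retention window ends at `now`",
    not any service-specific restrictions.
    """
    if retention_days <= 0:
        raise ValueError("retention_days must be positive")
    earliest = now - timedelta(days=retention_days)
    return earliest <= target <= now


# Fixed timestamp so the example is reproducible.
now = datetime(2020, 6, 30, 12, 0, tzinfo=timezone.utc)
print(can_restore_to(now - timedelta(days=3), now, retention_days=7))   # inside the window
print(can_restore_to(now - timedelta(days=10), now, retention_days=7))  # older than retention
```

With a self-managed database, the operations team has to build and test the machinery that makes this check hold in practice. With a managed service, the retention period is a setting.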

Another major concern with databases is compliance. The standard PCI, HIPAA, etc. compliance requirements can be met using either a self-managed or a fully managed database, as these require specific implementations of tenancy, encryption, redundancy, etc. However, issues such as geo-bounding (storing data in specific geos) are not truly database centric but rather application centric: the application is required to essentially “route” the data to the proper geo-storage. Many businesses seriously consider a self-managed (mainly on-prem) implementation because of the obvious ability to control the implementation and meet the requirements. However, a fully managed service on a Public Cloud (such as Azure Database for PostgreSQL) generally has a long list of certifications that ensure the storage meets PCI, HIPAA, etc. requirements, which lets you get closer to meeting your compliance needs faster and more easily.
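The geo-bounding point, that the application rather than the database decides where data lands, can be sketched roughly as follows. This is an illustration only: the region codes, the hostnames in `REGION_ENDPOINTS`, and the `endpoint_for` helper are all hypothetical, not part of any Azure API.

```python
# Hypothetical map of data-residency region -> database host.
# The hostnames are placeholders, not real endpoints.
REGION_ENDPOINTS = {
    "eu": "orders-eu.example.internal",
    "us": "orders-us.example.internal",
}


class UnknownRegionError(ValueError):
    """Raised when a record's region has no approved storage location."""


def endpoint_for(region: str) -> str:
    """Return the database host that is allowed to store data for `region`."""
    key = region.strip().lower()
    try:
        return REGION_ENDPOINTS[key]
    except KeyError:
        # Fail closed: regulated data is never sent to a default geo.
        raise UnknownRegionError(
            f"no approved storage for region {region!r}"
        ) from None


print(endpoint_for("EU"))
```

The same routing logic is needed whether the databases behind each endpoint are self-managed or fully managed, which is why this requirement alone does not settle the choice.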

Operational expertise is a big factor in this decision. Using Kubernetes adds even more complexity to the puzzle, because of the need to manage Kubernetes itself and its interconnections with storage. While the K8S community is working on things like operators to make this simpler, you still have to determine how to set up and manage the storage component that K8S connects to. Hence there is still a need for significant infrastructure expertise. By contrast, a fully managed service with secure connections reduces the need for both database expertise (Kubernetes or not) and infrastructure expertise.

Finally, scale is a large consideration when it comes to self-managed vs fully managed databases. Scaling requires a more complex implementation, leading to more nodes, storage, security, etc. Many businesses might have the expertise to manage the scale, but many do not. Fully managed services such as Hyperscale (Citus) on Azure Database for PostgreSQL allow incremental increases in database capacity through the addition of new nodes when needed, with a selection of varied costs, cores, storage, and connections.
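To give a feel for why adding nodes adds capacity, here is a toy model of hash-based sharding in Python. It is a simplified illustration under stated assumptions, not how Hyperscale (Citus) is actually implemented: the fixed `SHARD_COUNT`, the round-robin placement, and the worker names are all invented for the sketch.

```python
import hashlib

SHARD_COUNT = 32  # assumed fixed number of shards for this sketch


def shard_for(key: str) -> int:
    """Map a distribution key to a shard using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % SHARD_COUNT


def placement(nodes: list[str]) -> dict[int, str]:
    """Assign shards to nodes round-robin (toy placement policy)."""
    if not nodes:
        raise ValueError("at least one node is required")
    return {shard: nodes[shard % len(nodes)] for shard in range(SHARD_COUNT)}


def node_for(key: str, nodes: list[str]) -> str:
    """Return the node that holds the shard for `key`."""
    return placement(nodes)[shard_for(key)]


def shards_per_node(nodes: list[str]) -> dict[str, int]:
    """Count how many shards each node is responsible for."""
    counts = {node: 0 for node in nodes}
    for node in placement(nodes).values():
        counts[node] += 1
    return counts


print(shards_per_node(["worker-1", "worker-2"]))
print(shards_per_node(["worker-1", "worker-2", "worker-3", "worker-4"]))
```

In this model a key always maps to the same shard, and growing the cluster only changes which node holds each shard, so each node ends up responsible for less data. In a self-managed setup, moving those shards safely is the operations team's problem. A managed service handles it for you.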

While I covered a few of the more common factors above, there are many more factors. The following table iterates through a more complete list:

| Factor | Self-managed | Fully managed |
| --- | --- | --- |
| Specific SW dependencies needed for Application? | Yes | Potentially |
| Potentially Control Compliance (PCI, GDPR, etc.) | Yes – managed via the app | Yes – managed via the app |
| Operations cost reduction (reduce DBA expertise, SW licenses, etc.) | No | Yes |
| Simple self-service for Devs | Potentially | Yes |
| Built-in Disaster Recovery | No | Yes |
| Built-in SLA | No | Yes |
| Simplified Scaling | No | Yes |
| Built in end-to-end security | No | Yes |

While the table points to a fully managed database as the obvious choice, the decision truly depends on business requirements and cost. In many cases self-managed databases are chosen for political or business reasons, but where those constraints do not apply, the obvious choice is a Public Cloud based database service, such as Hyperscale (Citus) on Azure Database for PostgreSQL.

Sample application

As we stated in this blog, many applications are becoming orchestrated via Kubernetes. While the Kubernetes based application can migrate from cloud to cloud, the database can be implemented, secured, scaled, and maintained in one cloud with a secure, potentially low latency, connection.

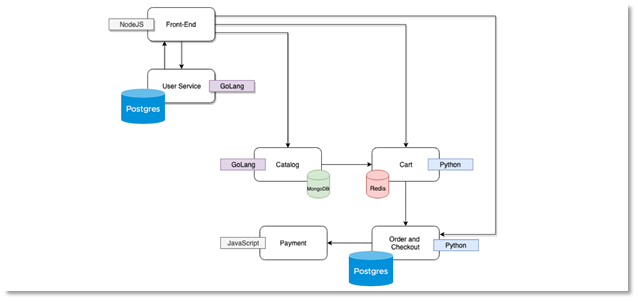

We built a sample application, called Acme Fitness, which is an e-commerce application that has several services and databases and is deployed with Kubernetes.

Let’s review the application’s databases, what was used and why from a DevOps perspective.

- User service – stores users’ profiles and passwords. This data is long term and using a DB such as Postgres is viable. However, the number of users will most likely grow if Acme Fitness is successful, hence using Azure Database for PostgreSQL is a viable option.

- Catalog service – stores a large number of catalog items, including item details as well as images for each item. It most likely won’t grow as much as the user service, since the catalog is limited to fitness products. For now, the team has chosen to implement MongoDB on Azure compute. They could have chosen Azure Cosmos DB, which is compatible with MongoDB, and they can always move over when it becomes viable.

- Cart Service – is where the users' “Cart” is stored, and this information is ephemeral until the user checks out. Until then it will change or even be deleted completely. The application team has chosen to implement a local Redis service to support this.

- Order Service – is where ALL transactions, including all the items that are part of each transaction, are kept. The obvious choice here is a database like Postgres. However, compared to the user or catalog databases, this database will need to scale heavily. Hence using a database service such as Hyperscale (Citus) on Azure Database for PostgreSQL becomes compelling.

All the databases have different requirements and different implementations: two are self-managed and two are fully managed. As the application evolves, and hopefully the business grows, these requirements might change. Monitoring and understanding these needs is an important part of operations, to ensure the proper database implementation is available for the application.
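One lightweight way to keep track of these choices as they evolve is to record them as data. The sketch below is a hypothetical inventory in Python, not something taken from the Acme Fitness code. The `ServiceDatabase` type and `hosting_summary` helper are invented for illustration, and the user service is listed as fully managed based on the two-and-two split described above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceDatabase:
    service: str
    engine: str
    hosting: str  # "self-managed" or "fully managed"


# Inventory mirroring the service descriptions in this post.
ACME_FITNESS = [
    ServiceDatabase("user", "Azure Database for PostgreSQL", "fully managed"),
    ServiceDatabase("catalog", "MongoDB on Azure compute", "self-managed"),
    ServiceDatabase("cart", "Redis (local)", "self-managed"),
    ServiceDatabase(
        "order",
        "Hyperscale (Citus) on Azure Database for PostgreSQL",
        "fully managed",
    ),
]


def hosting_summary(inventory: list[ServiceDatabase]) -> Counter:
    """Count databases per hosting model."""
    return Counter(db.hosting for db in inventory)


for hosting, count in sorted(hosting_summary(ACME_FITNESS).items()):
    print(f"{hosting}: {count}")
```

If the catalog service later moves to Azure Cosmos DB, only its entry changes and the summary shifts accordingly.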

Coming up…

In the next blog we will explore in detail:

- How the Orders database was deployed using Azure’s Open Service Broker capability through VMware Bitnami

- How the Order code is structured to handle a scalable Postgres database such as Hyperscale (Citus) on Azure Database for PostgreSQL

- How the deployment scales

- The important aspects of the database, Hyperscale (Citus) on Azure Database for PostgreSQL, and why it was picked