This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Accelerate your end-to-end ML lifecycles with semi-automated image annotation using Azure Machine Learning

Many data and machine learning scientists have had the experience of working on a computer vision problem, e.g. object detection, that requires a significant investment of time annotating images within an unlabeled dataset. While labeling data can be relaxing, our existence is impermanent.

The following cloud-based solution speeds up the end-to-end ML lifecycle by performing semi-automated annotation of unlabeled image data. This is achieved by integrating the open-source web-based image annotation tool COCO-Annotator with a custom-built image segmentation or keypoint detection model that is trained and deployed on Azure.

COCO-Annotator allows developers to easily review and correct inaccurate segmentation proposals for unlabeled data generated by the custom-built model. Corrected data can then be added to the training set for retraining the model. As segmentation proposals become increasingly accurate over iterations of training, the developer has to perform fewer corrections to the segmentation proposals.

COCO-Annotator

COCO-Annotator is an open-source web-based image annotation tool for creating COCO-style training sets for object detection, segmentation, and keypoint detection. If you follow the installation instructions, you will be all set within minutes: you simply clone the GitHub repository and spin up the container with “docker-compose up”.

Data Science Virtual Machine

I’m running COCO-Annotator on a lightweight Data Science Virtual Machine (DSVM) because this allows me to annotate images on my workstation in the office or on my notebook or tablet’s touchscreen. I can even share the address of my DSVM with colleagues so that I don’t have to annotate all images myself.

Set up an annotation service

COCO-Annotator supports model-based image annotation out of the box, requiring only a single configuration step to enable a trained MaskRCNN segmentation model. Another exciting feature of COCO-Annotator is that you can point it towards any REST API endpoint for automatically annotating your images, as long as that endpoint returns COCO-style annotations in JSON format. This can be extremely useful if you are working on a dataset with category labels specific to your use-case. In a typical workflow, you might annotate a couple of images, use them to fine-tune a model pretrained on another dataset, then use the resulting model to semi-automatically label more images, iteratively growing your training set and improving the performance of your model.

As mentioned above, COCO-Annotator expects COCO-style annotations in JSON format:
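For orientation, the following Python sketch builds a minimal COCO-style payload and serializes it to JSON. The field names follow the public COCO dataset format; the file name, category, and coordinates are made-up illustrative values, and which of these fields COCO-Annotator actually reads from an endpoint response is an assumption to check against its documentation.

```python
import json


def polygon_bbox(polygon):
    """Bounding box [x, y, width, height] of a flat [x1, y1, x2, y2, ...] polygon."""
    xs, ys = polygon[0::2], polygon[1::2]
    return [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)]


def polygon_area(polygon):
    """Area of a flat [x1, y1, x2, y2, ...] polygon via the shoelace formula."""
    xs, ys = polygon[0::2], polygon[1::2]
    n = len(xs)
    twice_area = sum(
        xs[i] * ys[(i + 1) % n] - xs[(i + 1) % n] * ys[i] for i in range(n)
    )
    return abs(twice_area) / 2.0


def make_annotation(annotation_id, image_id, category_id, polygon):
    """One COCO-style segmentation annotation for a single polygon."""
    return {
        "id": annotation_id,
        "image_id": image_id,
        "category_id": category_id,
        "segmentation": [polygon],  # list of polygons, each a flat x/y list
        "bbox": polygon_bbox(polygon),
        "area": polygon_area(polygon),
        "iscrowd": 0,
    }


# Illustrative values only: one image, one category, one rectangular object.
rectangle = [10, 10, 110, 10, 110, 60, 10, 60]
coco = {
    "images": [{"id": 1, "file_name": "example.jpg", "width": 640, "height": 480}],
    "categories": [{"id": 1, "name": "widget", "supercategory": "object"}],
    "annotations": [make_annotation(1, 1, 1, rectangle)],
}

print(json.dumps(coco, indent=2))
```

Keypoint annotations in the COCO format additionally carry a "keypoints" list and a "num_keypoints" count, which this sketch leaves out.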

Depending on your use-case, you may want to perform automated annotation with a model you have deployed in production, e.g. on an auto-scaling AKS cluster. This way you will get first-hand exposure to the performance of the production model. Alternatively, you can run a local docker container on the same DSVM on which you are running COCO-Annotator. If you built the container image with the AML SDK, you can use the same image locally as you would deploy in production to AKS or an Edge device.
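To make the idea of an annotation endpoint more concrete, here is a minimal sketch of such a service using only the Python standard library. Everything in it is an assumption for illustration: `predict_polygons` is a hypothetical stub standing in for your trained model, the handler reads the raw POST body as the image, and the response layout is a plain COCO-style dictionary. The request format COCO-Annotator sends and the exact response envelope it expects should be verified against its documentation before relying on this.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict_polygons(image_bytes):
    """Hypothetical stand-in for the real model.

    Returns (category_id, flat polygon) pairs; a deployed service would run
    the segmentation model on image_bytes here instead.
    """
    return [(1, [10, 10, 110, 10, 110, 60, 10, 60])]


def build_response(image_bytes):
    """Convert model output into a COCO-style dictionary."""
    annotations = []
    for idx, (category_id, polygon) in enumerate(predict_polygons(image_bytes), start=1):
        xs, ys = polygon[0::2], polygon[1::2]
        annotations.append({
            "id": idx,
            "category_id": category_id,
            "segmentation": [polygon],
            "bbox": [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)],
            "iscrowd": 0,
        })
    return {
        "categories": [{"id": 1, "name": "widget", "supercategory": "object"}],
        "annotations": annotations,
    }


class AnnotationHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Assumption: the image arrives as the raw request body.
        length = int(self.headers.get("Content-Length", 0))
        image_bytes = self.rfile.read(length)
        body = json.dumps(build_response(image_bytes)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Run the sketch server until interrupted."""
    HTTPServer(("0.0.0.0", port), AnnotationHandler).serve_forever()
```

Calling `serve()` starts the server on port 8000. A service built and deployed with the AML SDK would wrap the same conversion logic in its scoring script rather than in a hand-written HTTP handler.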

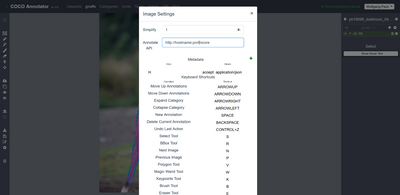

Figure 1: Simply click on the “Image Settings” wheel in the left panel. Enter the URL to the REST endpoint that returns COCO-style image annotations as JSON data. Then you can click the “download from cloud” button in the left panel to have images annotated automatically.

Dataset storage

Depending on your setup, you may also want to consider maintaining your dataset on Azure Blob Storage. That way you could hook up an Azure Machine Learning Pipeline to a storage container, to automatically retrain your model with the newly annotated training data. More importantly, this way the data will be available even when the DSVM is shut down.

You can simplify your workflow significantly by mounting your Azure Blob Storage container on your DSVM, so that COCO-Annotator datasets are stored directly in your storage container. Just follow these instructions, then create a symbolic link from the dataset directory of COCO-Annotator to the mount directory for that storage container.
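The symbolic link step can be sketched in Python as follows. The helper name and both paths are hypothetical; substitute the directory where your storage container is mounted and the dataset directory of your own COCO-Annotator checkout. The function refuses to overwrite an existing real directory, so data that is already there is not lost by accident.

```python
from pathlib import Path


def link_dataset_dir(mount_dir, dataset_dir):
    """Make dataset_dir a symbolic link that points at mount_dir."""
    mount_dir, dataset_dir = Path(mount_dir), Path(dataset_dir)
    if not mount_dir.is_dir():
        raise FileNotFoundError(f"mount directory not found: {mount_dir}")
    if dataset_dir.is_symlink():
        # Replace a stale link left over from an earlier setup.
        dataset_dir.unlink()
    elif dataset_dir.exists():
        raise FileExistsError(
            f"{dataset_dir} exists and is not a link; move its contents first"
        )
    dataset_dir.parent.mkdir(parents=True, exist_ok=True)
    dataset_dir.symlink_to(mount_dir, target_is_directory=True)
    return dataset_dir


# Example call with hypothetical paths:
# link_dataset_dir("/mnt/blob/annotations", "/home/me/coco-annotator/datasets")
```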

Use Azure Machine Learning pipelines to retrain the model

If you store your dataset in Azure Blob Storage, either manually or by mounting the container on the machine running COCO-Annotator, you should consider using AML pipelines to retrain your model with the newly annotated data. Your AML pipeline could be a sequence of steps, from data ingestion to registering the model in the AML model registry. You could even perform automated hyperparameter sweeps with HyperDrive while retraining your model.

One great feature of AML pipelines is that you can set up a scheduler that checks, at a polling interval you choose, whether the dataset in your storage container has changed. For example, the scheduler could check once a day whether the dataset was updated. You can of course also trigger a pipeline run manually if you feel you have annotated enough images for one day. You can even take it a step further and add a step at the beginning of your pipeline that calculates more advanced statistics on your dataset, to determine whether it is worth triggering a full run of the training pipeline.
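Such a gating step could look like the sketch below. It is not part of the AML SDK: the function names, the statistics collected, and the threshold of 50 new images are all assumptions chosen for illustration. The step counts annotated images in a COCO-style dataset and compares the count to the one recorded at the previous training run.

```python
from collections import Counter


def dataset_stats(coco):
    """Summarize a COCO-style dataset dictionary."""
    annotations = coco.get("annotations", [])
    return {
        "annotated_images": len({a["image_id"] for a in annotations}),
        "annotations": len(annotations),
        "per_category": dict(Counter(a["category_id"] for a in annotations)),
    }


def should_retrain(stats, previous_annotated_images, min_new_images=50):
    """Return True when enough newly annotated images have accumulated."""
    new_images = stats["annotated_images"] - previous_annotated_images
    return new_images >= min_new_images


# Tiny illustrative dataset: three annotations spread over two images.
example = {
    "annotations": [
        {"id": 1, "image_id": 1, "category_id": 1},
        {"id": 2, "image_id": 1, "category_id": 2},
        {"id": 3, "image_id": 2, "category_id": 1},
    ]
}
stats = dataset_stats(example)
print(stats, should_retrain(stats, previous_annotated_images=0, min_new_images=2))
```

The per-category counts could feed a stricter rule, for example retraining only once every category has gained a minimum number of new examples.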

Update annotation service with MLOps

If you haven’t used the MLOps extension for Azure DevOps, now might be a good time to check it out. You can define a release pipeline that updates the annotation service whenever the AML pipeline described above registers a new model in the AML model registry. You can also make the update conditional on whether the new model performs better on a hold-out dataset.
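The hold-out condition boils down to a small comparison, sketched here in Python. The function name, its parameters, and the example scores are hypothetical; in practice the two scores would come from evaluating the newly registered model and the currently deployed model on the same hold-out dataset.

```python
def should_deploy(candidate_score, deployed_score, min_improvement=0.0,
                  higher_is_better=True):
    """Decide whether a newly registered model should replace the deployed one.

    deployed_score may be None when nothing has been deployed yet.
    """
    if deployed_score is None:
        return True
    if higher_is_better:
        gain = candidate_score - deployed_score
    else:
        gain = deployed_score - candidate_score
    return gain > min_improvement


# Illustrative scores, e.g. mean average precision on the hold-out set.
print(should_deploy(candidate_score=0.82, deployed_score=0.80))
```

Setting `min_improvement` above zero avoids redeploying for gains that are within evaluation noise.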

Please let me know if you found this useful or need more info. Thanks.