This blog post is co-authored by Philippe Zenhaeusern and Javier Soriano

Over the last few months, while working on Azure Sentinel, we have talked to many partners and customers about ways to automate Azure Sentinel deployment and operations.

These are some of the typical questions: How can I automate customer onboarding into Sentinel? How can I programmatically configure connectors? As a partner, how do I push to my new customer all the custom analytics rules/workbooks/playbooks that I have created for other customers?

In this post we try to answer all these questions. We not only describe how to do it, but also share a repository that contains a minimum viable product (MVP) of a full Sentinel as Code environment.

The post will follow this structure:

- Infrastructure as Code

- Azure Sentinel Automation Overview

- Automating the deployment of specific Azure Sentinel components

- Building your Sentinel as Code in Azure DevOps

We recommend reading the sections in order to fully understand how everything works.

Infrastructure as Code

You might be familiar with the Infrastructure as Code concept. Have you heard of Azure Resource Manager, Terraform or AWS CloudFormation? They are all ways to describe your infrastructure as code, so you can treat it as such: put it under source control (e.g. git, svn) and track changes to your infrastructure the same way you track changes in your code. You can use any source control platform, but in this article we will use GitHub.

Besides treating your infrastructure as code, you can also use DevOps tooling to test that code and deploy that infrastructure into your environment, all in a programmatic way. This is also referred to as Continuous Integration/Continuous Delivery (CICD). Please take a look at this article if you want to know more. In this post we will use Azure DevOps as our DevOps tool, but the concepts are the same for any other tool.

In the Sentinel context, the whole idea is to codify your Azure Sentinel deployment and put it in a code repository. Every time the files that define this Sentinel environment change, the change triggers a pipeline that verifies it and deploys it into your Sentinel environment. But how do we make these changes in Sentinel programmatically?
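To make the trigger idea concrete, here is a minimal Python sketch of how path filters decide which pipeline runs for a given commit. It assumes one pipeline per repository folder (only scriptsCI is a name used in this post; the others are hypothetical) and approximates Azure DevOps path filters with the shell-style wildcards of fnmatch, so the matching rules are not identical to the real ones.

```python
from fnmatch import fnmatchcase

# Hypothetical pipeline names mapped to path filters that mirror the
# repository folders used in this post (only "scriptsCI" appears in the post).
PIPELINE_PATH_FILTERS = {
    "scriptsCI": ["Scripts/*"],
    "analyticsRules": ["AnalyticsRules/*"],
    "connectors": ["Connectors/*"],
    "huntingRules": ["HuntingRules/*"],
    "playbooks": ["Playbooks/*"],
    "workbooks": ["Workbooks/*"],
}


def triggered_pipelines(changed_files):
    """Return, sorted, the pipelines whose path filters match any changed file.

    fnmatch wildcards only approximate Azure DevOps path filter semantics.
    """
    return sorted(
        name
        for name, patterns in PIPELINE_PATH_FILTERS.items()
        if any(fnmatchcase(path, pattern) for path in changed_files for pattern in patterns)
    )


if __name__ == "__main__":
    # A commit touching one analytics rules file and the README.
    print(triggered_pipelines(["AnalyticsRules/analytics-rules.json", "README.md"]))
```

A commit that only touches AnalyticsRules/ therefore runs only the analytics rules pipeline, which keeps each deployment small and easy to trace back to the change that caused it.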

Azure Sentinel Automation Overview

As you probably know, Azure Sentinel is made up of different components: Connectors, Analytics Rules, Workbooks, Playbooks, Hunting Queries, Notebooks and so on.

All these components can easily be managed through the Azure Portal, but what can we use to manage them programmatically?

Here is a table that summarizes what can be used for each:

| Component | Automated with |
|---|---|
| Onboarding | API, PowerShell, ARM |
| Alert Rules | API, PowerShell |
| Hunting Queries | API, PowerShell |
| Playbooks | ARM |
| Workbooks | ARM |
| Connectors | API |

- PowerShell: Special thanks to Wortell for writing the AzSentinel module, which greatly simplifies many of these tasks. We will use it for the three components that support it (Onboarding, Alert Rules, Hunting Queries).

- API: Some components are not currently covered by a PowerShell module and can only be configured programmatically through the API. The API is still in preview, but it can be found here. We will use it for part of the Alert Rules automation and for Connectors.

- ARM: This is Azure's native management and deployment service. You can use ARM templates to define Azure resources as code. We will use it for Playbooks and Workbooks.

How to structure your Sentinel code repository

Here is what we think is the recommended way to structure your repository:

- contoso/ (root folder for the customer)
  - AnalyticsRules/ (subfolder for Analytics Rules)
    - analytics-rules.json (Analytics Rules definition file, JSON)
  - Connectors/ (subfolder for Connectors)
    - connectors.json (Connectors definition file, JSON)
  - HuntingRules/ (subfolder for Hunting Rules)
    - hunting-rules.json (Hunting Rules definition file, JSON)
  - Onboard/ (subfolder for Onboarding)
    - onboarding.json (Onboarding definition file, JSON)
  - Pipelines/ (subfolder for Pipelines)
    - pipeline.yml (Pipeline definition files, YAML)
  - Playbooks/ (subfolder for Playbooks)
    - playbook.json (Playbook definition files, ARM)
  - Scripts/ (subfolder for script helpers)
    - CreateAnalyticsRules.ps1 (script files, PowerShell)
  - Workbooks/ (subfolder for Workbooks)
    - workbook-sample.json (Workbook definition files, ARM)

You can find a sample repository with this structure here.

We will use this repository throughout this post, as it contains the whole testing environment. Note that this is just a minimum viable product and is subject to improvements. Feel free to clone it and enhance it.

Automating deployment of specific Azure Sentinel components

Now that we have a clear view of what to use to automate each component and how to structure our code repository, we can start creating things. Let's go component by component, detailing how to automate its deployment and operation.

Onboarding

Thanks to the AzSentinel PowerShell module by Wortell, we have a command that simplifies this process. We just need to run the following command to enable Sentinel on a given Log Analytics workspace:

Set-AzSentinel [-SubscriptionId <String>] -WorkspaceName <String> [-WhatIf] [-Confirm] [<CommonParameters>]

We have created a script (InstallSentinel.ps1) with some more logic in it, so we can use it in our pipelines. This script takes a configuration file (JSON) as input, in which we specify the workspaces where the Sentinel (SecurityInsights) solution should be enabled. The file has the following format:

{
  "deployments": [
    {
      "resourcegroup": "<rgname>",
      "workspace": "<workspacename>"
    },
    {
      "resourcegroup": "<rgname2>",
      "workspace": "<workspacename2>"
    }
  ]
}

The InstallSentinel.ps1 script is located in our repo here and has the following syntax:

InstallSentinel.ps1 -OnboardingFile <String>

We will use this script in our pipeline.
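The onboarding file is simple enough to validate before anything is deployed. The following Python sketch is not part of the repository: it parses the file format shown above and returns the resource group and workspace pairs that a script like InstallSentinel.ps1 would loop over. The point where the real script would call Set-AzSentinel is only an assumption, marked in a comment.

```python
import json

REQUIRED_KEYS = ("resourcegroup", "workspace")


def parse_deployments(onboarding_json):
    """Return (resource group, workspace) pairs from the onboarding file text.

    Raises ValueError when an entry lacks one of the expected keys, so a bad
    file fails the pipeline before anything is deployed.
    """
    config = json.loads(onboarding_json)
    targets = []
    for index, entry in enumerate(config["deployments"]):
        missing = [key for key in REQUIRED_KEYS if not entry.get(key)]
        if missing:
            raise ValueError(f"deployment #{index} is missing {missing}")
        targets.append((entry["resourcegroup"], entry["workspace"]))
    return targets


if __name__ == "__main__":
    sample = '{"deployments": [{"resourcegroup": "rg1", "workspace": "ws1"}]}'
    for resource_group, workspace in parse_deployments(sample):
        # Assumption: this is roughly where the PowerShell script calls Set-AzSentinel.
        print(f"Enable Azure Sentinel on workspace {workspace} in {resource_group}")
```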

Connectors

Sentinel Data Connectors can currently only be automated through the API, which is not officially documented yet. However, with your browser's developer tools enabled it is quite easy to capture the related connector calls. Please take into account that this API might change in the future without notice, so be cautious when using it.

The following script connects two data sources, “Azure Security Center” and “Azure Activity Logs”, to the Sentinel workspace; both are very common connectors for collecting data from your Azure environments. (Be aware that some connectors require additional rights. Connecting the “Azure Active Directory” source, for instance, requires additional AAD Diagnostic Settings permissions besides the “Global Administrator” or “Security Administrator” permissions on your Azure tenant.)

The “EnableConnectorsAPI.ps1” script is located inside our repo here and has the following syntax:

EnableConnectorsAPI.ps1 -TenantId <String> -ClientId <String> -ClientSecret <String> -SubscriptionId <String> -ResourceGroup <String> -Workspace <String> -ConnectorsFile <String>
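Since the script takes TenantId, ClientId and ClientSecret, it presumably authenticates as a service principal before calling the API. We don't show how EnableConnectorsAPI.ps1 does this; a standard way is the OAuth 2.0 client credentials flow against the Microsoft identity platform, sketched below in Python. The function only builds the token request and does not send it.

```python
from urllib.parse import urlencode

# Azure Resource Manager scope for the client credentials flow
# (Microsoft identity platform v2.0 token endpoint).
ARM_SCOPE = "https://management.azure.com/.default"


def token_request(tenant_id, client_id, client_secret):
    """Build the URL and form-encoded body of a client credentials token request.

    POST the body with Content-Type application/x-www-form-urlencoded; the
    JSON response's "access_token" goes in an "Authorization: Bearer ..." header
    on the Azure Resource Manager calls.
    """
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": ARM_SCOPE,
    })
    return url, body
```

In a pipeline, the secret would come from a secret variable in the variable group rather than from source control.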

The ConnectorsFile parameter references a JSON file which specifies all the data sources you want to connect to your Sentinel workspace. Here is a sample file:

{
  "connectors": [
    {
      "kind": "AzureSecurityCenter",
      "properties": {
        "subscriptionId": "subscriptionId",
        "dataTypes": {
          "alerts": {
            "state": "Enabled"
          }
        }
      }
    },
    {
      "kind": "AzureActivityLog",
      "properties": {
        "linkedResourceId": "/subscriptions/subscriptionId/providers/microsoft.insights/eventtypes/management"
      }
    }
  ]
}

The script will iterate through this JSON file and enable the data connectors one by one. This JSON file should be placed into the Connectors directory so the script can read it.
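Here is a Python sketch of that loop, building one PUT request per connector entry without sending it. The endpoint path and api-version are assumptions based on how Azure Sentinel resources are addressed under a workspace; as mentioned above, capture the real calls with your browser's developer tools and adjust, because some connector kinds (Azure Activity Log, for example) may use a different call. Connector IDs are derived deterministically, so running the pipeline twice targets the same resource instead of creating a new one; this assumes at most one connector of each kind per workspace.

```python
import uuid

ARM = "https://management.azure.com"
# Assumption: preview api-version of the undocumented data connectors API.
# Check it against the calls captured in your browser's developer tools.
API_VERSION = "2019-01-01-preview"


def connector_requests(subscription_id, resource_group, workspace, connectors_doc):
    """Return one (PUT URL, JSON body) pair per entry of a parsed connectors file.

    Assumptions: every kind uses the same dataConnectors endpoint, and there is
    at most one connector of each kind per workspace.
    """
    base = (
        f"{ARM}/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.OperationalInsights/workspaces/{workspace}"
        "/providers/Microsoft.SecurityInsights/dataConnectors"
    )
    calls = []
    for connector in connectors_doc["connectors"]:
        kind = connector["kind"]
        # Deterministic ID: re-running the pipeline targets the same resource.
        connector_id = uuid.uuid5(uuid.NAMESPACE_URL, f"{base}/{kind}")
        body = {"kind": kind, "properties": connector["properties"]}
        calls.append((f"{base}/{connector_id}?api-version={API_VERSION}", body))
    return calls
```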

As you can imagine, some connectors cannot be automated this way, such as all the ones based on Syslog/CEF, because they require installing an agent.

Analytics Rules

The AzSentinel PowerShell module provides a command to create new Analytics Rules (New-AzSentinelAlertRule), but it only supports Scheduled rules. Ideally, we want to deploy a Sentinel environment that also includes the other rule types (Microsoft Security, Fusion or ML Behavior Analytics).

For that, we have created a script that uses a combination of AzSentinel and API calls to create any kind of analytics rule.

The script is located inside our repo here and has the following syntax:

CreateAnalyticsRulesAPI.ps1 -TenantId <String> -ClientId <String> -ClientSecret <String> -SubscriptionId <String> -ResourceGroup <String> -Workspace <String> -RulesFile <String>

As you can see, one of the parameters is a rules file (in JSON format) where you will specify all the rules (of any type) that need to be added to your Sentinel environment. Here is a sample file:

{
  "analytics": [
    {
      "kind": "Scheduled",
      "displayName": "AlertRule01",
      "description": "test",
      "severity": "Low",
      "enabled": true,
      "query": "SecurityEvent | where EventID == \"4688\" | where CommandLine contains \"-noni -ep bypass $\"",
      "queryFrequency": "5H",
      "queryPeriod": "6H",
      "triggerOperator": "GreaterThan",
      "triggerThreshold": 5,
      "suppressionDuration": "6H",
      "suppressionEnabled": false,
      "tactics": [
        "Persistence",
        "LateralMovement",
        "Collection"
      ]
    },
    {
      "kind": "Scheduled",
      "displayName": "AlertRule02",
      "description": "test",
      "severity": "High",
      "enabled": true,
      "query": "SecurityEvent | where EventID == \"4688\" | where CommandLine contains \"-noni -ep bypass $\"",
      "queryFrequency": "5H",
      "queryPeriod": "6H",
      "triggerOperator": "GreaterThan",
      "triggerThreshold": 5,
      "suppressionDuration": "6H",
      "suppressionEnabled": false,
      "tactics": [
        "Persistence",
        "LateralMovement"
      ]
    },
    {
      "kind": "MicrosoftSecurityIncidentCreation",
      "displayName": "Create incidents based on Azure Active Directory Identity Protection alerts",
      "description": "Create incidents based on all alerts generated in Azure Active Directory Identity Protection",
      "enabled": true,
      "productFilter": "Azure Active Directory Identity Protection",
      "severitiesFilter": [
        "High",
        "Medium",
        "Low"
      ],
      "displayNamesFilter": null
    },
    {
      "kind": "Fusion",
      "displayName": "Advanced Multistage Attack Detection",
      "enabled": true,
      "alertRuleTemplateName": "f71aba3d-28fb-450b-b192-4e76a83015c8"
    },
    {
      "kind": "MLBehaviorAnalytics",
      "displayName": "(Preview) Anomalous SSH Login Detection",
      "enabled": true,
      "alertRuleTemplateName": "fa118b98-de46-4e94-87f9-8e6d5060b60b"
    }
  ]
}

As you can see, Fusion and MLBehaviorAnalytics rules need a field called alertRuleTemplateName. This ID is the same across all Sentinel environments, so you should use the same values in your own files. As of today, there is only one rule of each of these kinds, so you shouldn't need anything else here.

The script will iterate through this JSON file and create or enable the analytics rules one by one. It also supports updating existing rules that are already enabled. This JSON file should be placed into the AnalyticsRules directory so the script can read it. Currently, the script doesn't support attaching a playbook to an analytics rule.
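Because a single malformed entry could stop the deployment halfway, it can help to check the rules file in the build stage. This Python sketch is our own and not the repository's script: it validates the kinds used in the sample file, enforces the alertRuleTemplateName requirement for Fusion and MLBehaviorAnalytics rules, and splits the rules the way we assume the script does, with Scheduled rules going through New-AzSentinelAlertRule and everything else through the API.

```python
import json

# Kinds taken from the sample rules file above.
TEMPLATE_KINDS = {"Fusion", "MLBehaviorAnalytics"}
KNOWN_KINDS = {"Scheduled", "MicrosoftSecurityIncidentCreation"} | TEMPLATE_KINDS


def plan_rules(rules_json):
    """Split rules into (via AzSentinel, via API) lists of display names.

    Assumption: only Scheduled rules go through New-AzSentinelAlertRule.
    Raises ValueError for unknown kinds, or for Fusion/MLBehaviorAnalytics
    rules without the required alertRuleTemplateName.
    """
    via_module, via_api = [], []
    for rule in json.loads(rules_json)["analytics"]:
        kind, name = rule["kind"], rule["displayName"]
        if kind not in KNOWN_KINDS:
            raise ValueError(f"{name}: unsupported kind {kind!r}")
        if kind in TEMPLATE_KINDS and not rule.get("alertRuleTemplateName"):
            raise ValueError(f"{name}: {kind} rules need alertRuleTemplateName")
        (via_module if kind == "Scheduled" else via_api).append(name)
    return via_module, via_api
```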

Workbooks

Workbooks are native Azure resources and can therefore be created through an ARM template. The idea is that you place all the custom workbooks you have developed inside the Workbooks folder in your repo, and any change to them triggers a pipeline that creates them in your Sentinel environment.

We have created a script (placed in the same repo here) that can be used to automate this process. It has the following syntax:

CreateWorkbooks.ps1 -ResourceGroup <String> -WorkbooksFolder <String> -WorkbooksSourceId <String>

The script will iterate through all the workbooks in the WorkbooksFolder and deploy them into Sentinel. You also need to pass the WorkbooksSourceId parameter, which tells ARM that the workbook will be located inside Azure Sentinel. WorkbooksSourceId has the following format:

/subscriptions/<subscriptionid>/resourcegroups/<resourcegroup>/providers/microsoft.operationalinsights/workspaces/<workspacename>

Also note that the deployment will fail if a workbook with the same name already exists.
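The two inputs of CreateWorkbooks.ps1 can be sketched in Python: a helper that builds the WorkbooksSourceId string in the format above, and one that lists the workbook templates in the folder in a stable order. Treating every *.json file in the folder as a workbook template is an assumption about the repository layout.

```python
from pathlib import Path


def workbooks_source_id(subscription_id, resource_group, workspace):
    """Build the WorkbooksSourceId value pointing at the Sentinel workspace."""
    return (
        f"/subscriptions/{subscription_id}/resourcegroups/{resource_group}"
        f"/providers/microsoft.operationalinsights/workspaces/{workspace}"
    )


def workbook_templates(workbooks_folder):
    """Return the ARM templates in the folder, sorted so deployments are repeatable.

    Assumption: every *.json file in the folder is a workbook template.
    """
    return sorted(Path(workbooks_folder).glob("*.json"))
```

Sorting the files gives the same deployment order on every run, which makes a failure (for example, the duplicate-name case above) easier to reproduce.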

Hunting Rules

In order to automate the deployment of Hunting Rules, we will use the AzSentinel module.

We have created another script that takes as input a JSON file where all the Hunting Rules are defined. The script will iterate over them and create or update them accordingly.

The syntax for this script is the following:

CreateHuntingRulesAPI.ps1 -Workspace <String> -RulesFile <String>
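We don't reproduce the hunting rules file here, but the create-or-update decision the script makes can be sketched in Python. This assumes each entry has a displayName field and that an existing hunting query is matched by its display name; both are assumptions, not details confirmed by the script. Duplicate names in the file are rejected, since they would make the update ambiguous.

```python
from collections import Counter


def plan_hunting_rules(desired_rules, existing_names):
    """Split desired hunting queries into (to_create, to_update) by display name.

    Assumption: each rule has a "displayName" key, and an existing hunting
    query is identified by that display name.
    """
    names = [rule["displayName"] for rule in desired_rules]
    duplicates = sorted(name for name, count in Counter(names).items() if count > 1)
    if duplicates:
        raise ValueError(f"duplicate hunting rule names: {duplicates}")
    existing = set(existing_names)
    to_create = [name for name in names if name not in existing]
    to_update = [name for name in names if name in existing]
    return to_create, to_update


if __name__ == "__main__":
    create, update = plan_hunting_rules(
        [{"displayName": "Rare process"}, {"displayName": "New admin account"}],
        existing_names=["New admin account"],
    )
    print("create:", create, "update:", update)
```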

Playbooks

This works the same way as Workbooks. Playbooks use Azure Logic Apps to automatically respond to incidents. Logic Apps are a native ARM resource, so we can automate their deployment with ARM templates. The idea is that you place all the custom playbooks you have developed inside the Playbooks folder in your repo, and any change to them triggers a pipeline that creates them in your Sentinel environment.

We have created a script (placed in the same repo here) that can be used to automate this process. It has the following syntax:

CreatePlaybooks.ps1 -ResourceGroup <String> -PlaybooksFolder <String>

This script will succeed even if the playbooks already exist.
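For comparison, here is how the same folder-driven deployment could look with the Azure CLI instead of PowerShell, sketched in Python. The function builds one az deployment group create command per template; with --mode Incremental, resources that already exist are updated in place, which is likely why re-running the playbook deployment succeeds. It assumes each template deploys without extra parameters (or provides defaults for them).

```python
from pathlib import Path


def playbook_deploy_commands(resource_group, playbooks_folder):
    """Build one Azure CLI deployment command per ARM template in the folder.

    Assumption: every *.json file in the folder is a playbook template whose
    parameters all have default values.
    """
    commands = []
    for template in sorted(Path(playbooks_folder).glob("*.json")):
        commands.append([
            "az", "deployment", "group", "create",
            "--resource-group", resource_group,
            "--template-file", str(template),
            # Incremental mode updates existing resources instead of failing.
            "--mode", "Incremental",
        ])
    return commands
```

On an agent where the Azure CLI is signed in, each command can be run with subprocess.run(command, check=True), so the first failing template stops the pipeline.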

Building your Sentinel as Code in Azure DevOps

Now that we have a clear view of how to structure our code repository and what to use to automate each Sentinel component, we can start creating things in Azure DevOps. This is a high-level list of the tasks we will perform:

- Create an Azure DevOps organization

- Create a project in Azure DevOps

- Create a service connection to your Azure environment/s

- Create variables

- Connect your existing code repository with your Az DevOps project

- Create pipelines

Let’s review them one by one.

Create an Azure DevOps organization

This is the first step to set up your Azure DevOps environment. You can see the details on how to do this here.

Create a project in Azure DevOps

A project provides a repository for source code and a place for a group of people to plan, track progress, and collaborate on building software solutions. It will be the container for your code repository, pipelines, boards, etc. See instructions on how to create it here.

Create a service connection to your Azure environment

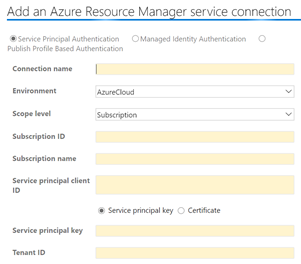

In order to talk to our Azure environment, we need to create a connection with specific Azure credentials. In Azure DevOps this is called a service connection. The credentials you use to create this service connection are typically those of a service principal defined in Azure.

You can find full details on how to create a service connection here.

These are the fields you need to provide to create your service connection:

Take note of the connection name you provide, as you will need to use this name in your pipelines.

Create variables

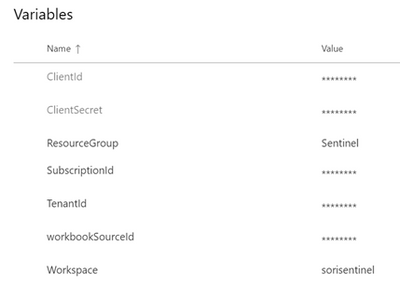

We are going to need several variables defined in the Azure DevOps environment so they can be passed to our scripts to specify the Sentinel workspace, resource group, config files and API connection information.

As we are going to need these variables across all our pipelines, the best thing to do is to create a variable group in Azure DevOps. With this, we can define the variable group once and then reuse it in different pipelines across our project. Here you have instructions on how to do it.

We have called our variable group “Az connection settings”; this is important because we will reference this name in our pipelines. Here is a screenshot of the variables that we will need to define:

Connect your existing code repository with your Az DevOps project

You can import an existing repository into Azure DevOps from GitHub, Bitbucket, GitLab and other locations. See instructions here.

Create pipelines

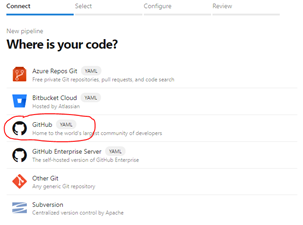

There are two ways to create our Azure Pipelines: in classic mode or as YAML files. We are going to create them as YAML files, because that way we can place them into our code repository so they can be easily tracked and reused anywhere. Here you have the basic steps to create a new pipeline.

In the new pipeline wizard, select GitHub YAML in the Connect step:

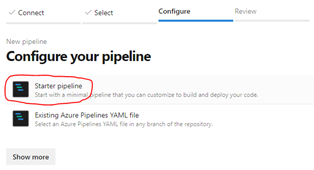

Then select your repository and choose Starter pipeline.

We are going to create one CI (build) pipeline for Scripts and several CICD (build+deploy) pipelines (one for each Sentinel component).

Create a CI pipeline for Scripts

We will treat Scripts slightly differently from the rest, because they are not a Sentinel component: the scripts themselves won't be deployed to Azure; we will only use them to deploy other things.

Because of this, the only thing we need to do with the scripts is make them available to the other pipelines as artifacts. To accomplish this, we just need two tasks in our CI pipeline: Copy Files and Publish Pipeline Artifact.

Here is an example of the YAML code that will define this pipeline:

trigger:
  paths:
    include:
    - Scripts/*

pool:
  vmImage: 'ubuntu-latest'

steps:
- task: CopyFiles@2
  displayName: 'Copy Scripts'
  inputs:
    SourceFolder: Scripts
    TargetFolder: '$(Build.ArtifactStagingDirectory)'

- task: PublishPipelineArtifact@1
  displayName: 'Publish Pipeline Artifact'
  inputs:
    # Publish the copied scripts from the staging directory
    targetPath: '$(Build.ArtifactStagingDirectory)'
    artifact: Scripts

As you can see, we have added two steps: one to copy the script files and another to publish them as a pipeline artifact. You can find this pipeline in our GitHub repo here.

Create CICD pipelines for each Sentinel component

With the Scripts now available as an artifact, we can use them in our Sentinel component pipelines. These pipelines differ from the previous one because they do both CI and CD (build+deploy), which we define in our YAML pipeline file as stages.

Here is one sample pipeline for Analytics Rules:

resources:
  pipelines:
  - pipeline: Scripts
    source: 'scriptsCI'

trigger:
  paths:
    include:
    - AnalyticsRules/*

stages:
- stage: build_alert_rules
  jobs:
  - job: AgentJob
    pool:
      name: Azure Pipelines
      vmImage: 'vs2017-win2016'
    steps:
    - task: CopyFiles@2
      displayName: 'Copy Alert Rules'
      inputs:
        SourceFolder: AnalyticsRules
        TargetFolder: '$(Build.ArtifactStagingDirectory)'
    - task: PublishBuildArtifacts@1
      displayName: 'Publish Artifact: RulesFile'
      inputs:
        PathtoPublish: '$(Build.ArtifactStagingDirectory)'
        ArtifactName: RulesFile

- stage: deploy_alert_rules
  jobs:
  - job: AgentJob
    pool:
      name: Azure Pipelines
      vmImage: 'windows-2019'
    variables:
    - group: Az connection settings
    steps:
    - download: current
      artifact: RulesFile
    - download: Scripts
      patterns: '*.ps1'
    - task: AzurePowerShell@4
      displayName: 'Create and Update Alert Rules'
      inputs:
        azureSubscription: 'Soricloud Visual Studio'  # name of your service connection
        ScriptPath: '$(Pipeline.Workspace)/Scripts/Scripts/CreateAnalyticsRulesAPI.ps1'
        ScriptArguments: '-TenantId $(TenantId) -ClientId $(ClientId) -ClientSecret $(ClientSecret) -SubscriptionId $(SubscriptionId) -ResourceGroup $(ResourceGroup) -Workspace $(Workspace) -RulesFile analytics-rules.json'
        azurePowerShellVersion: LatestVersion
        pwsh: true

As you can see, we now have two stages: build and deploy. We also had to define a pipeline resource to reference the artifact that we need from our Scripts build pipeline (scriptsCI).

The build stage works the same way as the one in the Scripts pipeline, so we won't explain it again.

The deploy stage introduces a couple of new things. First, we import the variable group that we defined earlier, using variables. Then we download the artifacts that our deployment task needs, using download.

As the last step in our CICD pipeline, we use an Azure PowerShell task that points to our script and passes the parameters it needs. As you can see, we reference the imported variables here. One last peculiarity of this pipeline is that we need PowerShell Core (required by AzSentinel), which we enable with pwsh: true.

If everything is set up correctly, we can now run this pipeline and verify that our Sentinel analytics rules were deployed automatically 😊

This and all the other pipelines for the rest of components are in our repo inside the Pipelines folder.

For Onboarding, the pipeline has no automatic triggers, as we consider that this would be executed only once at installation time.

Working with multiple workspaces

Whether you are a customer with an Azure Sentinel environment that contains multiple workspaces or you’re a partner that needs to operate several customers, you need to have a strategy to manage more than one workspace.

As you have seen in this article, we have used a variable group to store details like the resource group and workspace name. These values change for each workspace we need to manage, so we need more than one variable group: for example, one for customer A and another for customer B, or one for Europe and one for Asia.

After that’s done, we can choose between two approaches:

- Add more stages to your current pipelines. Until now, we only had one deploy stage that deployed to our only Sentinel environment, but now we can add additional stages (with the same steps and tasks) that deploy to other resource groups and workspaces.

- Create new pipelines. We can clone our existing pipelines and modify the variable group to point to a different target environment.
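Whichever approach you pick, every variable group must define all the variables the deploy step references, or the stage for that workspace fails at run time. A quick Python check, using the ScriptArguments string from the analytics rules pipeline above and hypothetical variable group names, can catch a forgotten variable early. In practice you would read the group contents from Azure DevOps; here they are plain dictionaries.

```python
import re

# ScriptArguments of the AzurePowerShell task in the analytics rules pipeline.
SCRIPT_ARGUMENTS = (
    "-TenantId $(TenantId) -ClientId $(ClientId) -ClientSecret $(ClientSecret) "
    "-SubscriptionId $(SubscriptionId) -ResourceGroup $(ResourceGroup) "
    "-Workspace $(Workspace) -RulesFile analytics-rules.json"
)


def referenced_variables(script_arguments):
    """Return the variable names used with the $(Name) macro syntax."""
    return set(re.findall(r"\$\((\w+)\)", script_arguments))


def missing_variables(script_arguments, variable_groups):
    """Map each variable group to the referenced variables it does not define."""
    needed = referenced_variables(script_arguments)
    report = {}
    for group, variables in variable_groups.items():
        missing = sorted(needed - set(variables))
        if missing:
            report[group] = missing
    return report


if __name__ == "__main__":
    # Hypothetical variable groups, one per customer; values are placeholders.
    groups = {
        "Customer A connection settings": dict.fromkeys(referenced_variables(SCRIPT_ARGUMENTS), "..."),
        "Customer B connection settings": {"TenantId": "...", "ClientId": "...", "Workspace": "..."},
    }
    print(missing_variables(SCRIPT_ARGUMENTS, groups))
```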

In Summary

We have shown you how to describe your Azure Sentinel deployment using code and then use a DevOps tool to deploy that code into your Azure environment.