This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Several new features were added to mapping data flows this past week. Here are some of the highlights:

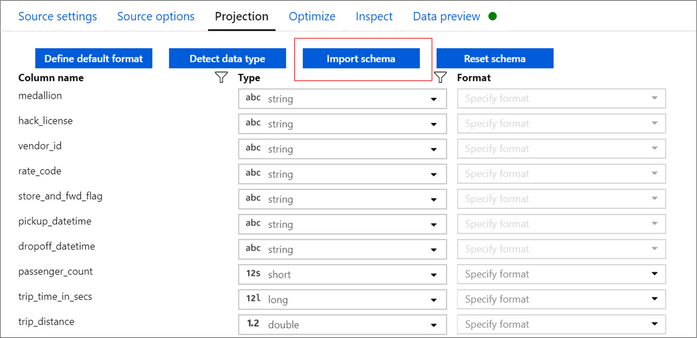

Import Schema from debug cluster

You can now use an active debug cluster to create a schema projection in your data flow source.

For more information on the Projection tab, see the data flow source documentation.

Test connection on Spark Cluster

You can use an active debug cluster to verify that Data Factory can connect to your linked service when using Spark in data flows. This is a useful sanity check to confirm that your dataset and linked service are valid configurations for use in data flows.

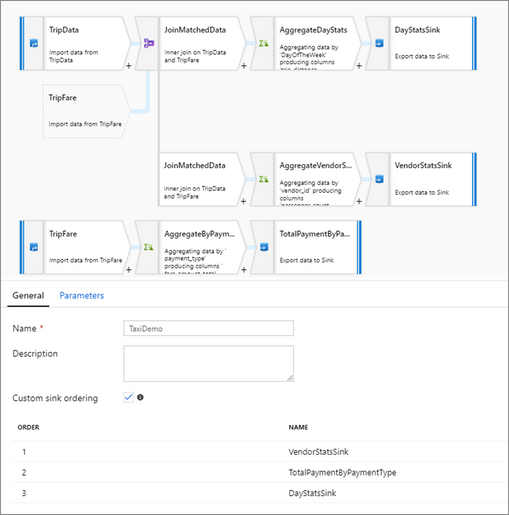

Custom sink ordering

You can now set the order in which the sinks in a data flow are written. For more information, see custom sink ordering.