This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

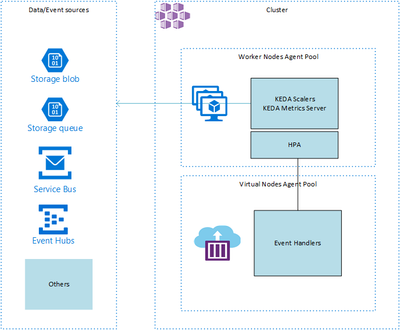

KEDA (https://github.com/kedacore) is an open-source Kubernetes (K8s) controller that sits between a data or event store, such as Azure Event Hub, a Storage queue or a Service Bus queue (but also AWS, Kafka, etc.), and an event handler such as Azure Functions. Its scalers start the appropriate number of handlers according to the load observed at the source. KEDA comes with its own K8s Custom Resource Definition (CRD) called ScaledObject. Here is an example of such a resource:

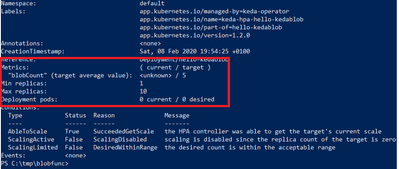

The important part is highlighted in red: the trigger uses the number of blobs present in the Blob Storage container to scale out the related deployment accordingly. Note that if the handler does not delete each incoming blob once it has processed it, the blob count never drops and the HPA will never scale down. Also note that you can influence the HPA by specifying the target metric value yourself; for various event stores, KEDA defaults it to 5.
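To make this scaling behaviour concrete, here is a minimal Python sketch of how a count-based trigger translates into a replica count. It assumes the standard Kubernetes HPA rule for an external metric with an average-value target, which boils down to `ceil(total items / target per replica)`, clamped to a minimum and maximum replica count. The function name, parameter names and default values are hypothetical and chosen for illustration only; this is not KEDA's code, and it ignores the real HPA's tolerance and stabilization windows.

```python
import math


def desired_replicas(item_count: int, target_per_replica: int = 5,
                     min_replicas: int = 0, max_replicas: int = 100) -> int:
    """Approximate the replica count requested for a count-based trigger.

    Hypothetical helper: assumes the HPA average-value rule,
    ceil(total / target per replica), clamped to [min_replicas, max_replicas].
    Tolerance and stabilization windows of the real HPA are ignored.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    raw = math.ceil(item_count / target_per_replica)
    return max(min_replicas, min(max_replicas, raw))


# 42 blobs with a target of 5 per replica: ceil(8.4) = 9 replicas
print(desired_replicas(42))
# A large backlog is capped by max_replicas
print(desired_replicas(10_000, max_replicas=50))
# An empty container falls back to min_replicas
print(desired_replicas(0, min_replicas=1))
```

Because the replica count depends only on the item count, a handler that never deletes processed blobs keeps the input constant, so the HPA has no reason to scale down.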

There are many triggers for many different sources. To get an exhaustive list, the easiest way is to look at the Go source code: https://github.com/kedacore/keda/blob/b068c6430c0029457ac82f3e9af2780c38d6eb72/pkg/handler/scale_handler.go. For Azure, KEDA currently supports Storage Queue, Service Bus Queue, Event Hub and Blob Storage.

The schema below shows the high-level components and their interactions:

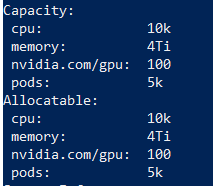

To test it with a realistic scenario, I deployed a QueueWriter pod that writes 5000 messages to a Storage Queue every 2 seconds. I scaled the QueueWriter out to 15 instances, i.e. 15 × 2500 = 37500 messages/s. I let KEDA scale out automatically and ended up with ~90 handler pods on the virtual kubelet (meaning ~90 ACIs) handling the load. I let it run for an hour, processing about 135 million messages. Whenever I checked the queue, it was either empty or held only the occasional message, meaning that the handlers had no problem keeping pace.

KEDA can be used with regular worker nodes, but it is wiser to use it together with Virtual Kubelet, which translates to ACIs and a dedicated agent pool with the following characteristics:

meaning a serious amount of resources. However, and this is not related to KEDA itself, you must pay attention to several things. Despite the nice figures above, some limits apply, such as the quota on the total number of concurrent ACIs. By default, you can't exceed 100 per region, and since your cluster is bound to a region, you simply can't run more than 100. Letting KEDA scale your handlers without any cap will therefore easily hit this limit, and you'll end up with containers in the ProviderFailed state. You can open a ticket with Microsoft support to raise the default quota.
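Putting the load-test figures next to this quota shows how little headroom was left. The following back-of-the-envelope check in Python recomputes the throughput from the numbers given above; the per-handler rate and the resulting ceiling are derived under the assumption (not stated in the test itself) that the load was spread evenly over a steady pool of ~90 handlers, whereas the real count fluctuated.

```python
# Figures from the load test described above
WRITER_BATCH = 5_000      # messages per batch, per QueueWriter pod
BATCH_INTERVAL_S = 2      # seconds between two batches
WRITERS = 15              # QueueWriter instances
DURATION_S = 3_600        # the test ran for about an hour
HANDLERS = 90             # approximate number of handler ACIs observed
ACI_QUOTA = 100           # default concurrent ACI limit per region

per_writer_rate = WRITER_BATCH / BATCH_INTERVAL_S   # msg/s for one writer
total_rate = per_writer_rate * WRITERS              # msg/s for all writers
total_messages = total_rate * DURATION_S            # messages over the hour

# Assumption: load evenly spread over a steady pool of HANDLERS
per_handler_rate = total_rate / HANDLERS
# Highest rate sustainable at that per-handler rate before hitting the quota
max_rate_under_quota = per_handler_rate * ACI_QUOTA
quota_usage = HANDLERS / ACI_QUOTA

print(f"{total_rate:,.0f} msg/s, {total_messages:,.0f} messages in {DURATION_S} s")
print(f"~{per_handler_rate:,.0f} msg/s per handler, quota usage {quota_usage:.0%}")
print(f"ceiling under the default quota: ~{max_rate_under_quota:,.0f} msg/s")
```

Under that assumption, the test already used about 90% of the default quota, so a load only around 10% higher would have started producing ProviderFailed containers.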

Also, admittedly, an ACI takes more than a few seconds to start, so under high load KEDA will attempt to scale out even further. In messaging and event-driven scenarios, which are highly asynchronous by nature, waiting a bit is not an issue; however, as long as no handler is ready to consume queue messages, KEDA will keep scaling unless a maxReplicaCount is specified for the ScaledObject. Last but not least, some ACIs occasionally hang in a pending state. Here again, strangely enough, the scheduler terminates them once the HPA is back to its lower targets.
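The overshoot caused by slow start-up can be illustrated with a toy simulation. This is a hypothetical Python model, not KEDA's algorithm: at every tick (think of a polling interval) messages arrive, ready replicas drain the queue, and the scaler requests `ceil(queue / target)` replicas, optionally capped by a `max_replicas` value playing the role of maxReplicaCount. Newly requested replicas only become ready after `startup_ticks`, standing in for ACI start-up time. All names and numbers are illustrative, and the model only scales out, to keep it short.

```python
import math
from collections import deque


def simulate(ticks, arrivals_per_tick, capacity_per_replica, target_per_replica,
             startup_ticks, max_replicas=None):
    """Return the number of requested replicas at each tick (toy model)."""
    queue = 0
    ready = 0
    requested = 0
    pending = deque()  # (tick at which the replicas become ready, count)
    history = []
    for t in range(ticks):
        # Replicas whose start-up delay has elapsed start consuming
        while pending and pending[0][0] <= t:
            ready += pending.popleft()[1]
        # New messages arrive; ready replicas drain what they can
        queue += arrivals_per_tick
        queue = max(0, queue - ready * capacity_per_replica)
        # Scaler: desired = ceil(queue / target), optionally capped
        desired = math.ceil(queue / target_per_replica)
        if max_replicas is not None:
            desired = min(desired, max_replicas)
        # Scale-out only: request the extra replicas, ready after the delay
        if desired > requested:
            pending.append((t + startup_ticks, desired - requested))
            requested = desired
        history.append(requested)
    return history


for lag in (0, 3):
    print(f"startup_ticks={lag}:", simulate(6, 1000, 100, 5, startup_ticks=lag))
print("capped:", simulate(6, 1000, 100, 5, startup_ticks=3, max_replicas=100))
```

With these made-up numbers, the slow start-up leads to 600 requested replicas instead of 200, because the queue keeps growing while nothing consumes it; with the cap, the request stays at 100.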

Overall, this produced rather good results and sure, KEDA is something to keep an eye on!