This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

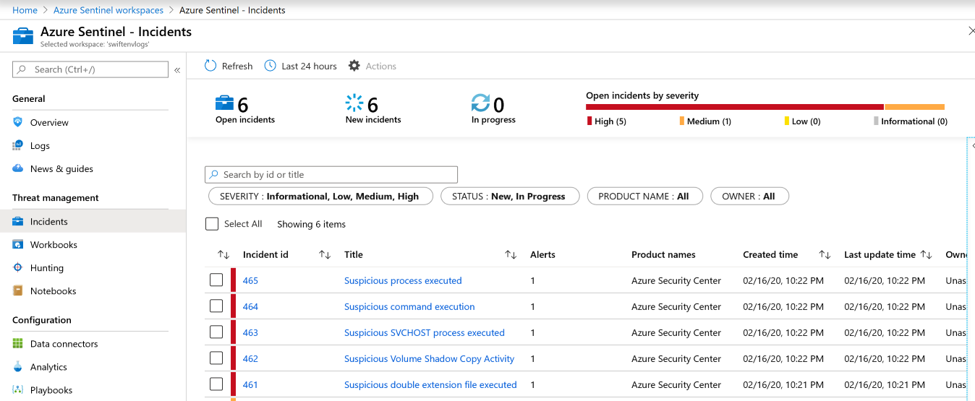

While exploring automation scenarios within Azure Security, we came across an unsolved mystery. When I use the Azure Security Center connector in Azure Sentinel to generate Incidents, what happens when I close an Azure Sentinel Incident? Does it close the related Azure Security Center alert?

The short answer is that it does not. The Azure Sentinel Incident closes, but the ASC Alert remains active. You then have the option to dismiss the ASC Alert in the ASC portal, which is an extra step just to keep the two systems synchronized.

This is where Logic Apps comes in to solve the mystery. In the rest of this article we will set the stage, dig into the problem, and work through a solution. If you just want to deploy the Azure Sentinel Playbook that closes the Incident and dismisses the ASC Alert at the same time, use the GitHub link at the end of this post.

Setting up base configuration of Azure Sentinel and Azure Security Center

To get started, note that Azure Sentinel is Microsoft’s cloud-native SIEM and SOAR tool, and you can spin it up with a few clicks in the Azure portal. Once it is running, one of the first things customers want to do is add Data Connectors to start ingesting logs and alerts from third-party and Microsoft systems. One popular connector is the Azure Security Center connector.

Azure Security Center secures your Azure investments through two pillars: posture management, in the form of security recommendations, and workload protection, using threat detections that raise alerts on IaaS and PaaS services. Check out the link to the full list of detections that generate alerts. It is impressive and a game changer for cloud security. Azure Security Center offered recommendations and threat detection within a public cloud provider for years before the other cloud vendors built a comparable service.

After the ASC connector is established in Azure Sentinel, you can generate Incidents from those ASC Alerts, giving your SOC SIEM and SOAR capabilities on top of ASC Alerts. To do this in Azure Sentinel, follow the steps below:

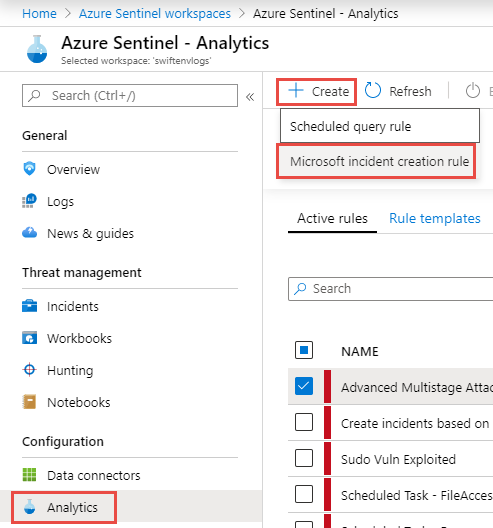

- Go to the Analytics blade and select Create > Microsoft incident creation rule.


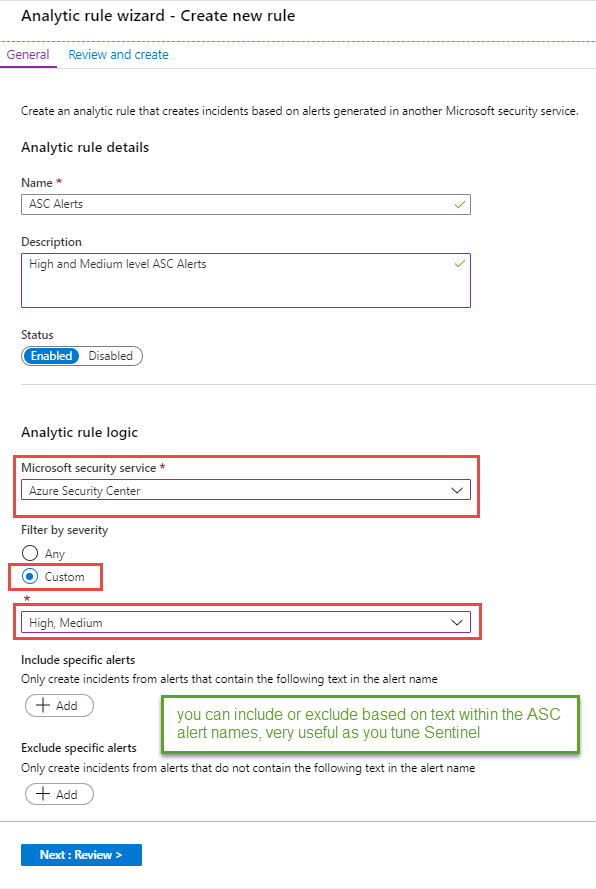

You will be taken to a setup wizard; fill it in to suit your needs. To start, I chose High and Medium severity alerts from Azure Security Center.


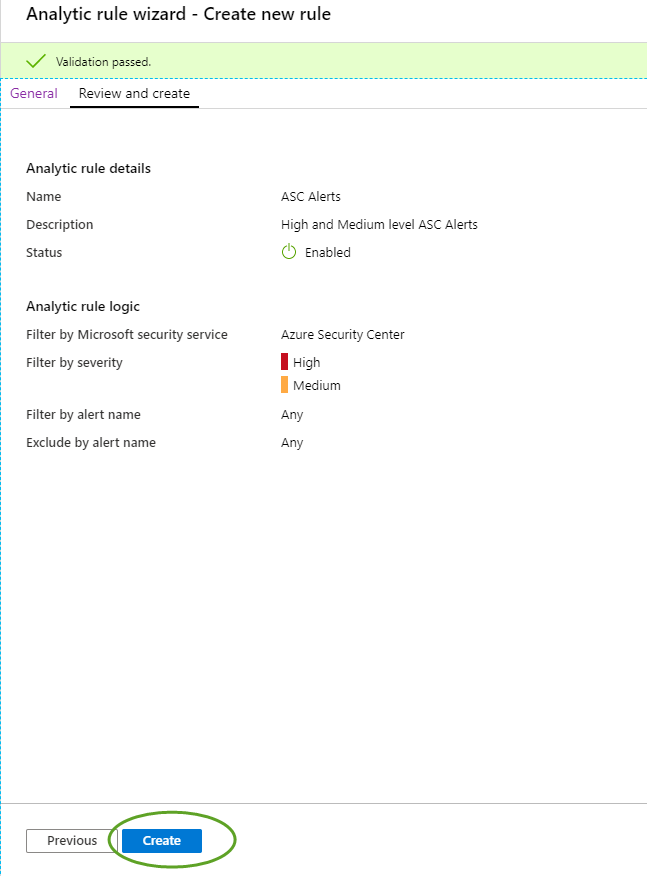

On the next screen we will validate and review our choices before telling Azure Sentinel to generate Incidents on ASC Alerts.

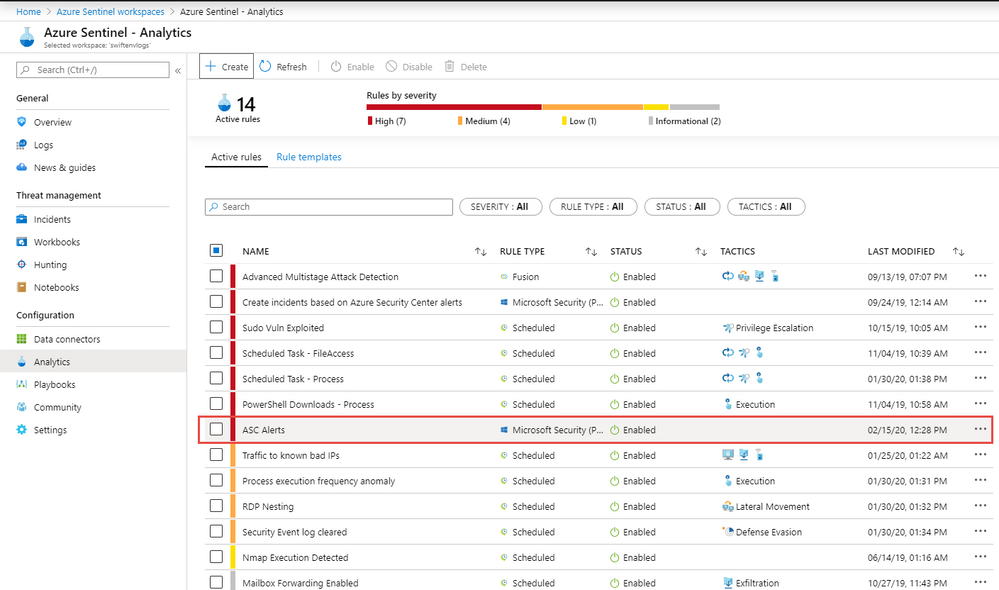

- Once the rule is created, I can see it alongside any others I brought in from Azure Sentinel’s templates or from Microsoft security products such as Azure Security Center or Microsoft Defender ATP. Remember that you can always go back to the rule and edit it to tune it further.


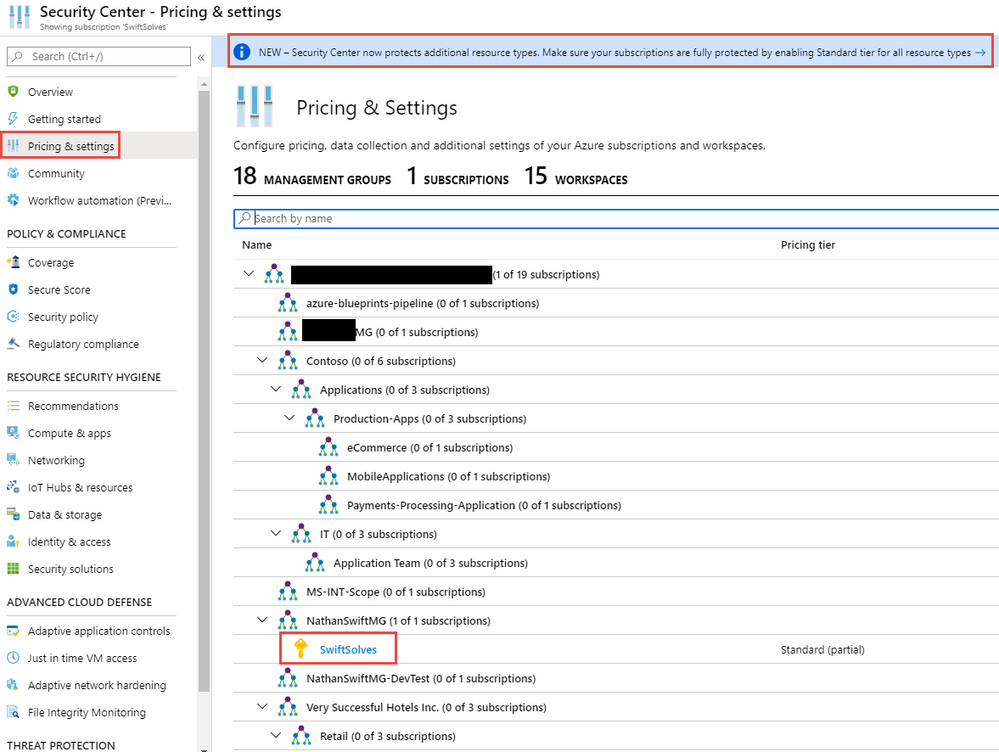

On the Azure Security Center side, we want to ensure our subscriptions are set to the Standard tier. Microsoft offers a 30-day opt-out trial of the ASC Standard tier on each Azure subscription, so if you are only evaluating ASC, mark your calendar for 25 days out to decide before the trial ends.

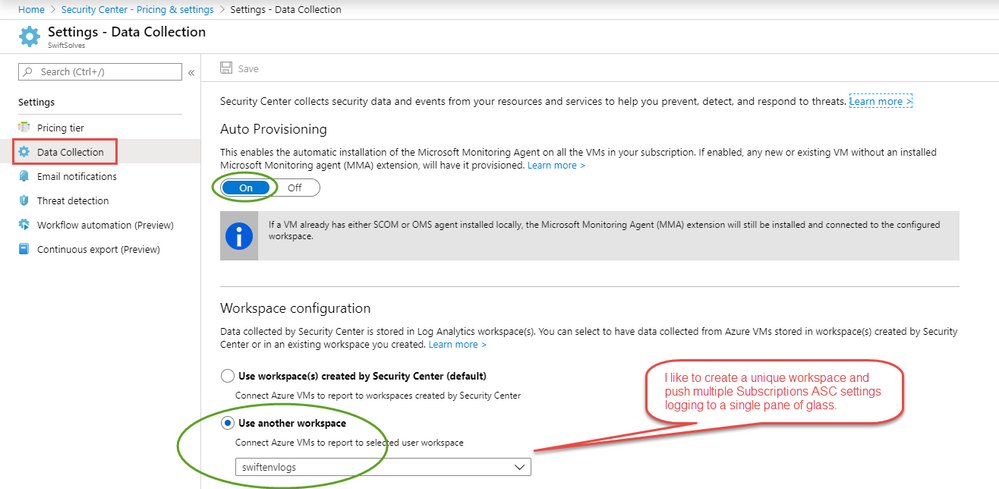

- Finally, ensure that auto provisioning is turned on in the Data Collection blade.


For testing purposes, we will spin up a Windows Server 2012 R2 VM on Azure, isolated within its own VNET. Log into the VM and download the ACS.zip file.


Unzip it and place the AlertGeneration folder in the root of C:\. From C:\AlertGeneration, run the PowerShell script ScheduledTasksCreation.ps1 to create scheduled tasks that will generate Azure Security Center alerts every 5 minutes.

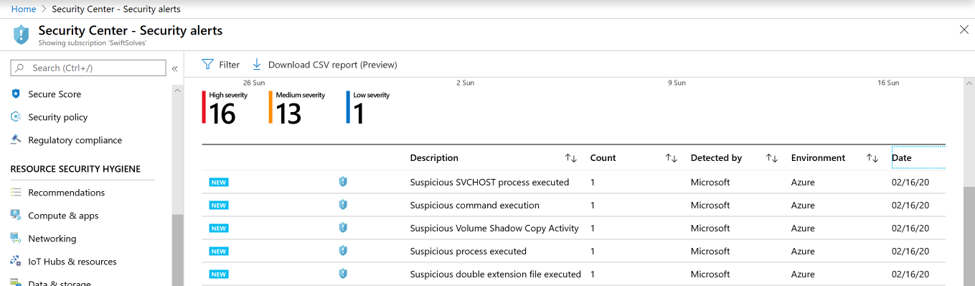

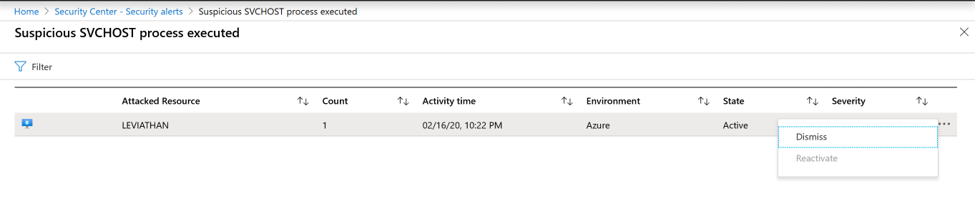

At this point we can test by closing an Azure Sentinel Incident. You will observe that the corresponding ASC Alert is still active under Threats. You can manually dismiss the ASC Alert by clicking the ellipsis (...) and choosing Dismiss.

Because this extra step falls on a SOC analyst who has to keep Azure Security Center in sync with Azure Sentinel, I set out to investigate how the two systems store information. The goal was to find out whether we can match on any fields, directly or indirectly, and pass the necessary information to the Azure Security Center Alert API to dismiss the alert.

Lining up data sources to find potential matches

While examining the response from Get Azure Sentinel Incident, I noticed some interesting property fields that could be useful for matching.

Title - matches the alertDisplayName of the ASC Alert, but is not unique enough in a larger environment with duplicate alerts

Status – Closed

relatedAlertProductNames - names the product that raised the related alerts, which lets us single out Incidents that come from Azure Security Center

startTimeUtc - the time the Incident was created in Azure Sentinel. This correlates fairly well with the ASC detectedTimeUTC, but not exactly. It was off by milliseconds in testing and could potentially be off by seconds as well.

relatedAlertIds – a correlating GUID used in the SecurityAlert table in Log Analytics.

The Get Azure Security Center Alert call also returns some interesting property fields in its response.

name – this is the alert name you pass to dismiss the ASC alert in the API

alertDisplayName – matches the title of the Azure Sentinel Incident, but is not unique enough in a larger environment with duplicate alerts

detectedTimeUTC – this correlates fairly well with Azure Sentinel’s startTimeUtc, but not exactly. It was off by milliseconds in testing and could potentially be off by seconds as well.

State – active or dismissed
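To make the role of the name field concrete, here is a minimal Python sketch of how the dismiss request URL could be assembled. It is an illustration, not the playbook's actual HTTP action: the URL shape follows the resource-group-level dismiss operation of the Security Center alerts REST API as I understand it, and the `location` parameter and the default `api_version` are assumptions that should be checked against the current API reference. The function name `build_dismiss_url` is hypothetical.

```python
from urllib.parse import quote

ARM_ENDPOINT = "https://management.azure.com"


def build_dismiss_url(subscription_id, resource_group, location, alert_name,
                      api_version="2019-01-01"):
    """Build the URL for a POST that dismisses a resource-group-level ASC alert.

    Assumption: the path layout and api-version below match the Security
    Center alerts REST API; verify both before relying on this.
    """
    path = "/".join([
        "subscriptions", quote(subscription_id, safe=""),
        "resourceGroups", quote(resource_group, safe=""),
        "providers", "Microsoft.Security",
        "locations", quote(location, safe=""),
        "alerts", quote(alert_name, safe=""),  # the ASC alert "name" field
        "dismiss",
    ])
    return f"{ARM_ENDPOINT}/{path}?api-version={api_version}"


# Placeholder values, not real identifiers.
example_url = build_dismiss_url("0000-1111", "rg-test", "centralus", "2518_abc")
```

The point of the sketch is that the alert's display name never appears in the request. Only the subscription, resource group, and the alert's name identify what gets dismissed, which is why finding a reliable way to obtain that name matters.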

Direct Match Conclusion

What we are left with is a non-unique direct match that could produce false positives.

title == alertDisplayName

startTimeUtc == detectedTimeUTC (only to the minute)

status == state

We could build matching logic on these fields, but questions arise. What if there is a delay between the timestamps? They are already off by milliseconds, so what if they are off by seconds? How wide a time window would we use to match: 1 second, 3 seconds? The alert names are too generic, with no unique resource or identifier in the name. What if multiple alerts with the same name fire within seconds of each other?

You can see that a direct match, while possible, may not be ideal in larger and more complex environments. So how can we solve this mystery? Perhaps with an indirect matching method.
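The weakness of the direct match can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the dictionaries stand in for the API responses using the field names discussed above, the sample values are invented, and the helper `direct_match` and its 3-second tolerance are hypothetical choices.

```python
from datetime import datetime, timedelta


def direct_match(incident, alerts, tolerance=timedelta(seconds=3)):
    """Return every active alert whose display name equals the incident
    title and whose detection time is within `tolerance` of the incident
    start time."""
    start = datetime.fromisoformat(incident["startTimeUtc"])
    return [
        alert for alert in alerts
        if alert["state"].lower() == "active"
        and alert["alertDisplayName"] == incident["title"]
        and abs(datetime.fromisoformat(alert["detectedTimeUTC"]) - start) <= tolerance
    ]


# Invented sample data: three alerts share one generic display name.
incident = {"title": "Suspicious process executed",
            "startTimeUtc": "2020-05-01T10:00:00.120"}
alerts = [
    {"name": "alert-a", "alertDisplayName": "Suspicious process executed",
     "detectedTimeUTC": "2020-05-01T10:00:00.000", "state": "Active"},
    {"name": "alert-b", "alertDisplayName": "Suspicious process executed",
     "detectedTimeUTC": "2020-05-01T10:00:02.500", "state": "Active"},
    {"name": "alert-c", "alertDisplayName": "Suspicious process executed",
     "detectedTimeUTC": "2020-05-01T10:05:00.000", "state": "Active"},
]

matched_names = [alert["name"] for alert in direct_match(incident, alerts)]
```

With a 3-second window, both alert-a and alert-b qualify, so the playbook would not know which one to dismiss. Tightening the window reduces the ambiguity but risks missing the real alert when the timestamps drift.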

The Clue

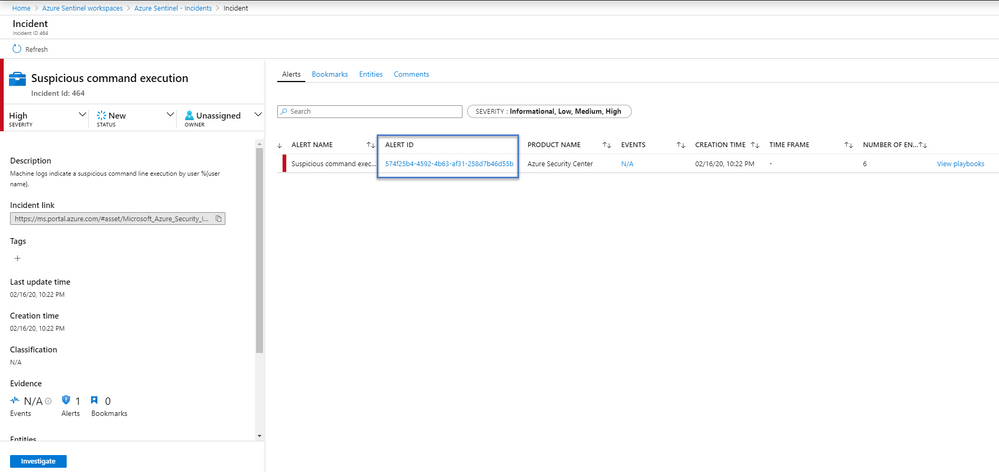

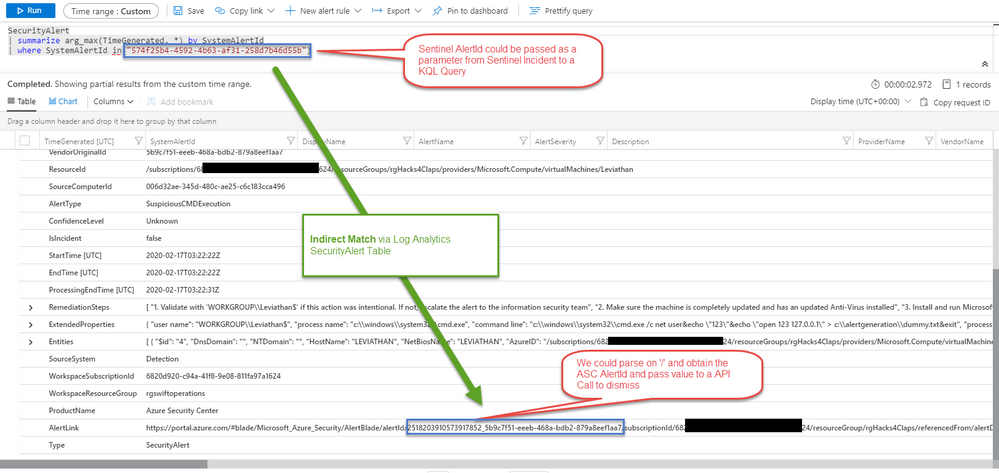

Enter the Azure Sentinel GUI. While clicking around in the Incident's full details, I noticed it had an alert ID, the same value that appeared in the API call as relatedAlertIds but did not show up in the ASC Alert API call.

The alert ID even has a link that takes us to the SecurityAlert table in Log Analytics, which both Azure Sentinel and Azure Security Center can write into.

Within that generated KQL query lay the clue that could tie Azure Sentinel to ASC in a more precise manner.

SystemAlertID == AlertLink

It often pays to step away from the code and look at the portal UI, where hints can be spotted visually. Armed with this knowledge and some testing, I went to work on an Azure Sentinel Playbook that a SOC analyst can run when they are ready to close their Incident and dismiss the corresponding Azure Security Center alert.
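To illustrate the indirect match, the following Python sketch pulls the three values the playbook needs out of an AlertLink string. The link layout used here (alternating key/value path segments such as alertId, subscriptionId, and resourceGroup) is an assumption for illustration only, as are the placeholder values; inspect the AlertLink column in your own SecurityAlert table before relying on it. The function name `parse_alert_link` is hypothetical, and the playbook itself does this work in a KQL query rather than in Python.

```python
def parse_alert_link(alert_link):
    """Extract the ASC alert name, subscription ID, and resource group
    from an AlertLink, assuming alternating key/value path segments."""
    segments = alert_link.split("/")
    # Map each segment (lowercased) to the segment that follows it, so a
    # key such as "alertId" points at its value.
    following = {key.lower(): value
                 for key, value in zip(segments, segments[1:])}
    try:
        return {
            "ascalertname": following["alertid"],
            "ascsubid": following["subscriptionid"],
            "ascrgname": following["resourcegroup"],
        }
    except KeyError as missing:
        raise ValueError(f"AlertLink is missing segment {missing}") from None


# Assumed link shape with placeholder values.
sample_link = ("https://portal.azure.com/#blade/Microsoft_Azure_Security/"
               "AlertBlade/alertId/2518_abc/subscriptionId/0000-1111/"
               "resourceGroup/rg-test/referencedFrom/alertDeepLink/"
               "location/centralus")
parsed = parse_alert_link(sample_link)
```

Because the lookup starts from the alert ID that Azure Sentinel already holds, the result identifies exactly one ASC alert and avoids the timestamp and display-name guesswork of the direct match.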

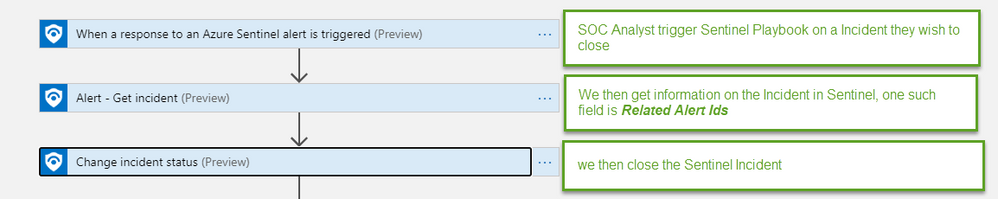

Designing Azure Sentinel Playbook

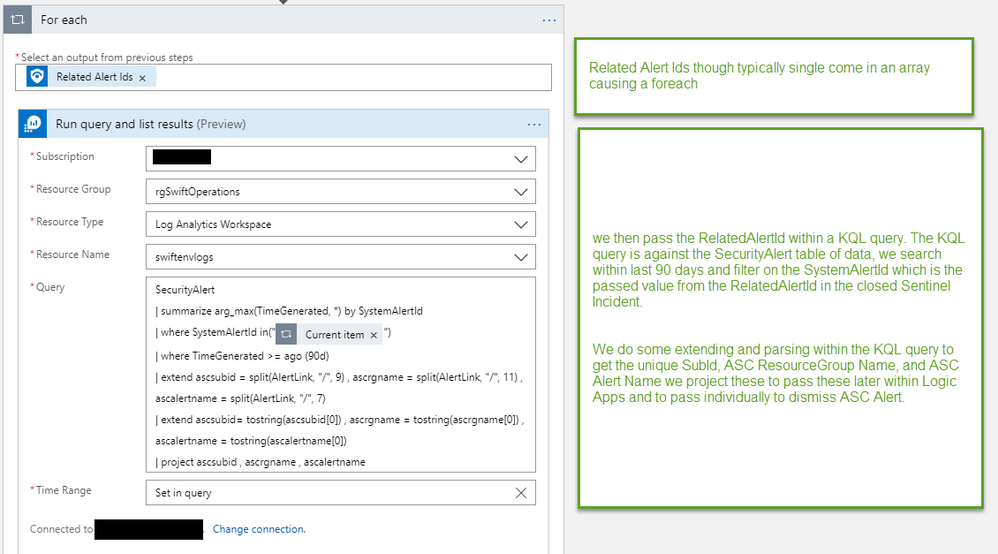

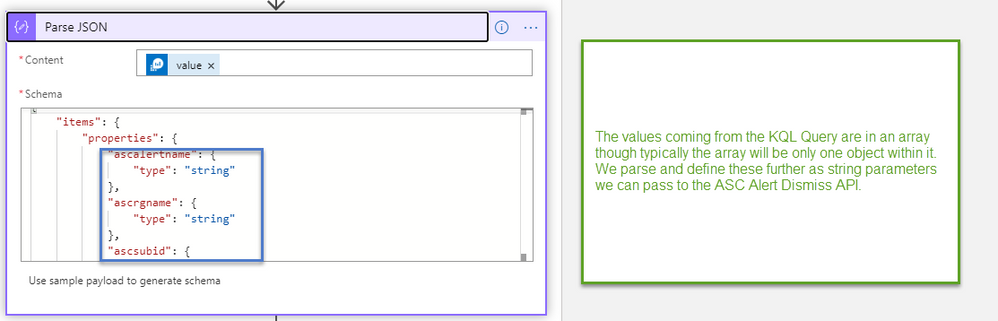

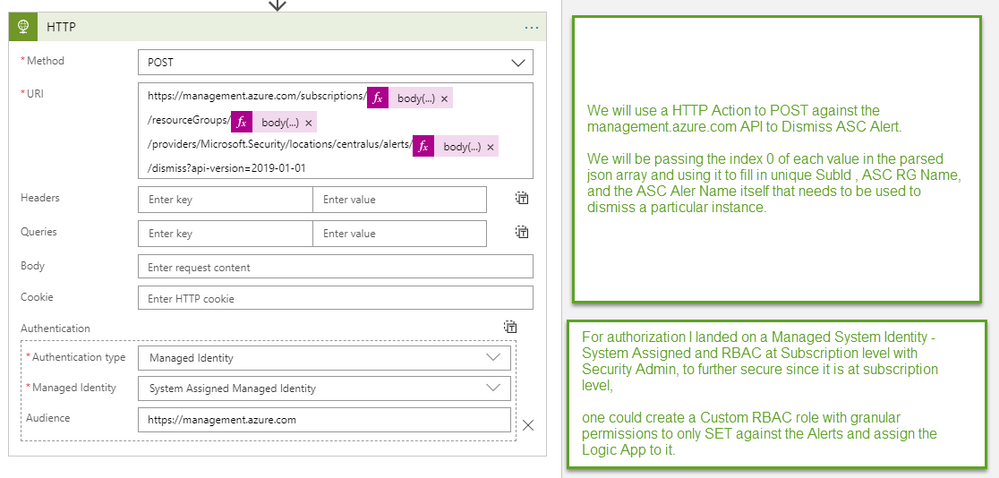

Designing the Logic App took a few iterations, but I finally landed on a pattern that worked in testing. The Logic App pattern is as follows.

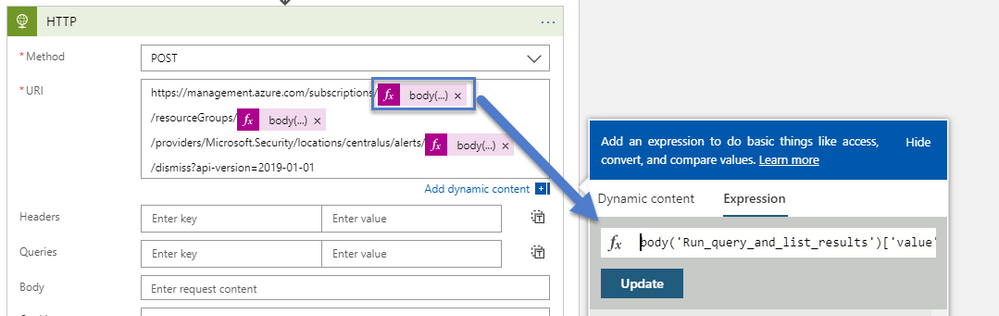

Remember that clicking on a dynamic content or expression token reveals the underlying expression. Recall also that Parse JSON still gives us an array, so rather than wrapping the step in a For each loop, we select the value at index 0 of the array to pass the strings in:

body('Run_query_and_list_results')['value'][0]['ascsubid']

body('Run_query_and_list_results')['value'][0]['ascrgname']

body('Run_query_and_list_results')['value'][0]['ascalertname']
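For readers more at home in a general-purpose language, the same index-0 access can be sketched in Python with an explicit guard. This is an analogy rather than part of the playbook: the `query_body` dictionary imitates the shape implied by the expressions above, the sample values are placeholders, and the helper `first_row` is hypothetical.

```python
def first_row(query_body):
    """Return the first result row, mirroring ['value'][0] in the
    Logic App expressions, but fail clearly if the query found nothing."""
    rows = query_body.get("value", [])
    if not rows:
        raise LookupError("query returned no rows, so there is no alert to dismiss")
    return rows[0]


# Placeholder response shaped like the query step's output.
query_body = {"value": [
    {"ascsubid": "0000-1111", "ascrgname": "rg-test", "ascalertname": "2518_abc"},
]}
row = first_row(query_body)
asc_sub_id = row["ascsubid"]
asc_rg_name = row["ascrgname"]
asc_alert_name = row["ascalertname"]
```

One design consideration follows from this: indexing position 0 directly assumes the query always returns at least one row. If the alert has not yet landed in the SecurityAlert table, the lookup has nothing to return, so a check on the result count before the dismiss call may be worth adding.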

Conclusion

When designing a solution to a problem, look at the data structures involved behind the scenes and ask: can I make a direct match? If not, can I use another source of truth, such as a data table somewhere, and match indirectly? Logic Apps are powerful and easy to use for solving problems like this, and you don’t need to rely on code dependencies or PowerShell modules. A Logic App can run with a managed identity that is granted specific rights on the Azure subscription or resources. That makes authorization easy and secure, without having to use a service principal and pass an AppID and key to obtain a bearer token for the API call.

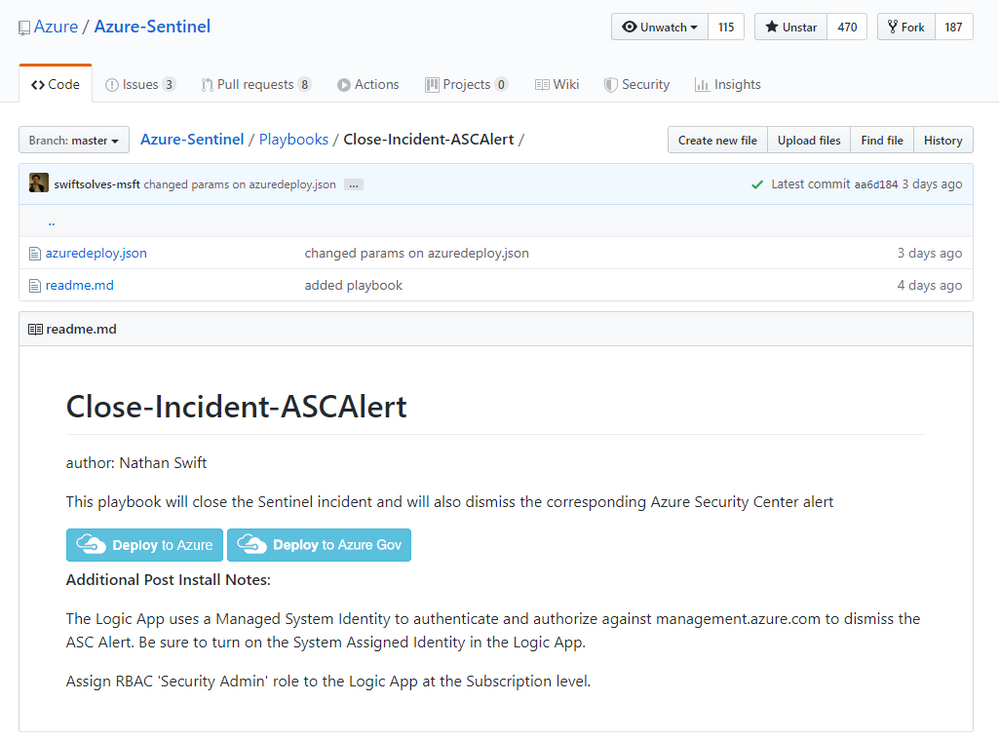

If you want to try this out, you can deploy the Azure Sentinel playbook containing this logic from the repository on GitHub here: https://github.com/Azure/Azure-Sentinel/tree/master/Playbooks/Close-Incident-ASCAlert

Special thanks to:

Yuri Diogenes for reviewing this post.