This post has been republished via RSS; it originally appeared at: Azure Global articles.

The Weather Research and Forecasting (WRF) model is a popular high performance computing (HPC) code used by the weather and climate community. WRF v4 typically performs well on traditional HPC architectures that offer high floating-point performance, high memory bandwidth, and a low-latency network, such as TOP500 supercomputers. The new HBv2 Azure Virtual Machines (VMs) for HPC now offer all of these characteristics. With some minor tuning, large WRF v4 models perform very well on Azure.

WRF is a mesoscale numerical weather prediction system designed for both atmospheric research and operational forecasting applications. WRF has a large worldwide community of registered users: more than 48,000 in over 160 countries.

In the past, you needed a supercomputer to complete high-resolution WRF weather predictions in a reasonable and practical timeframe. In this post, we explore building and running WRF v4 models on Azure, and we compare the performance of the VMs optimized for HPC workloads: the HB-series, HC-series, and HBv2.

Building WRF v4 on Azure

Automated scripts for building different variations of WRF v4, targeting Azure HPC VMs, are contained in the Azure HPC git repository:

```shell
git clone git@github.com:Azure/azurehpc.git
```

We use Spack to build WRF’s dependencies; see the apps/spack and apps/wrf directories for details. All WRF performance data were generated with builds that use Open MPI v4.0.2. We used both the hybrid parallel (MPI + OpenMP) and the MPI-only versions.

WRF v4 benchmark models

WRF v3 models are typically not compatible with WRF v4. For example, the WRF v3 CONUS benchmark models (2.5 km and 12 km) are no longer supported and do not work with WRF v4. For our tests, we used the WRF Preprocessing System (WPS) to generate a new CONUS 2.5 km model (very similar to the original WRF v3 CONUS 2.5 km model) and a larger model, Hurricane Maria 1 km. See the azurehpc git repository for details on building and installing WPS v4 and for the steps we followed to generate these models for WRF v4.

| Model | Resolution (km) | e_we | e_sn | e_vert | Total gridpoints | Time step (s) | Simulation hours |
|---|---|---|---|---|---|---|---|
| new_conus2.5km | 2.5 | 1901 | 1301 | 35 | 87M | 15 | 3 |
| maria1km | 1 | 3665 | 2894 | 35 | 371M | 3 | 1 |
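The Total gridpoints column is the product e_we × e_sn × e_vert, and the time step and simulated hours determine how many model steps each run takes. The short Python sketch below reproduces both from the table values; the dictionary layout and helper names are illustrative only, and gridpoint-steps are just a rough proxy for the computational work.

```python
# Benchmark parameters copied from the table above.
MODELS = {
    "new_conus2.5km": {"e_we": 1901, "e_sn": 1301, "e_vert": 35, "dt_s": 15, "hours": 3},
    "maria1km": {"e_we": 3665, "e_sn": 2894, "e_vert": 35, "dt_s": 3, "hours": 1},
}


def total_gridpoints(m: dict) -> int:
    """Grid size as listed in the table: e_we * e_sn * e_vert."""
    return m["e_we"] * m["e_sn"] * m["e_vert"]


def num_timesteps(m: dict) -> int:
    """Number of model time steps needed to cover the simulated period."""
    return m["hours"] * 3600 // m["dt_s"]


for name, m in MODELS.items():
    pts = total_gridpoints(m)
    steps = num_timesteps(m)
    # Gridpoint-steps: a crude measure of how much work each benchmark represents.
    print(f"{name}: {pts / 1e6:.0f}M gridpoints, {steps} steps, "
          f"{pts * steps / 1e9:.0f}G gridpoint-steps")
```

By this crude measure, maria1km (about 445G gridpoint-steps over 1,200 steps) represents roughly seven times as much work as new_conus2.5km (about 62G over 720 steps).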

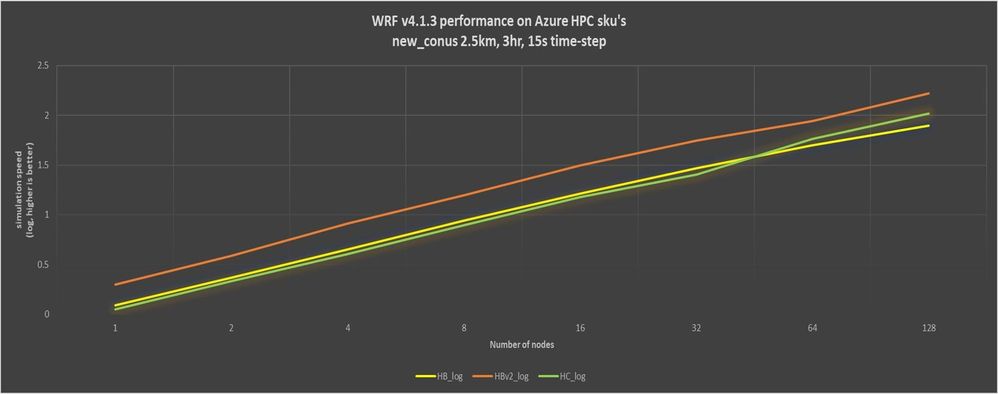

WRF v4 performance comparison on HB-series, HC-series, and HBv2 VMs

This benchmark compares the performance of WRF v4 on HB-series, HC-series, and HBv2 VMs. No performance tuning was applied: we used the MPI-only version of WRF v4 and all cores on each HPC VM type. We were primarily interested in comparing the performance of these VM types on a per-node basis.
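Because the MPI-only build was run on every core, the number of MPI ranks per node differs by VM type. The sketch below assumes the published core counts for the HB60rs (60), HC44rs (44), and HB120rs_v2 (120) sizes, which you should verify against current Azure documentation. It computes the total rank count for a launch and the per-node speedup of one VM type over a baseline at the same node count. The timings in the usage lines are placeholders, not measured results.

```python
# Cores per VM, assumed from Azure's published VM sizes (verify before use).
CORES_PER_NODE = {"HB60rs": 60, "HC44rs": 44, "HB120rs_v2": 120}


def mpi_ranks(vm: str, nodes: int) -> int:
    """MPI-only run using every core: one rank per core on each node."""
    return CORES_PER_NODE[vm] * nodes


def per_node_speedup(baseline_time_s: float, vm_time_s: float) -> float:
    """Speedup of a VM type over a baseline when both use the same node count."""
    if baseline_time_s <= 0 or vm_time_s <= 0:
        raise ValueError("wall-clock times must be positive")
    return baseline_time_s / vm_time_s


# Placeholder values only; substitute your own measurements.
print(mpi_ranks("HB120rs_v2", 4))       # 480
print(per_node_speedup(1000.0, 500.0))  # 2.0
```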

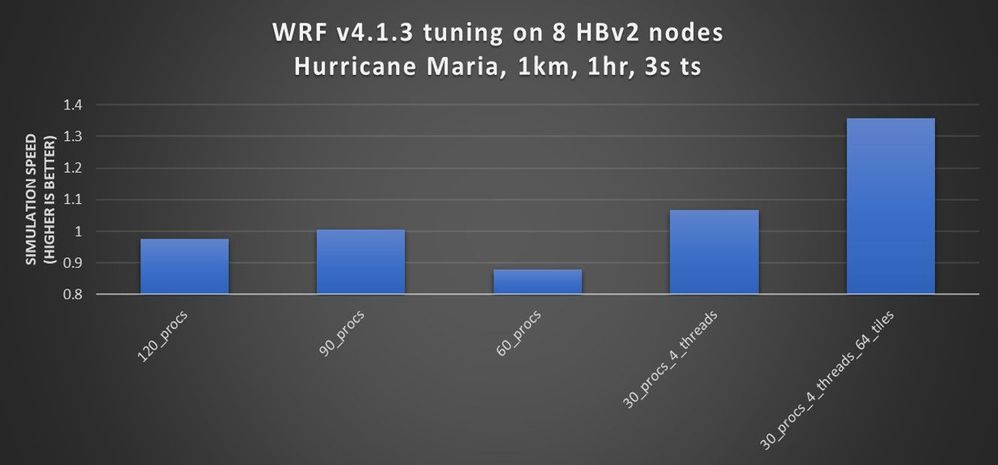

Tuning WRF v4 on Azure HBv2 VMs

We investigated the following tuning optimizations:

- Comparing the WRF v4 hybrid parallel version (MPI + OpenMP) with the MPI-only version.

- Finding the optimal number and layout of processes and threads per node (to optimize memory bandwidth).

- Adjusting the number of tiles available for parallel threads to work on (L3 cache and NUMA domain optimization).
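The process and thread layout search in the list above can be sketched as enumerating combinations of MPI processes per node and OpenMP threads per process that fit on one node. This minimal Python sketch assumes 120 usable cores per HBv2 VM; the candidate process and thread counts are hypothetical choices. It does not model NUMA placement or core pinning, which you would still control through your MPI launcher.

```python
from itertools import product

HBV2_CORES = 120  # assumed usable cores per HBv2 VM (HB120rs_v2)


def candidate_layouts(cores, procs_options, thread_options):
    """Return (processes per node, threads per process, cores used) tuples that
    fit within the node's core count, ordered by most cores used first."""
    layouts = [
        (p, t, p * t)
        for p, t in product(procs_options, thread_options)
        if p * t <= cores
    ]
    return sorted(layouts, key=lambda x: (-x[2], x[0]))


for ppn, omp, used in candidate_layouts(HBV2_CORES, [30, 60, 90, 120], [1, 2, 4]):
    # Each layout would be launched with ppn ranks per node and OMP_NUM_THREADS=omp.
    print(f"ppn={ppn:3d} OMP_NUM_THREADS={omp} cores used={used}")
```

Each candidate still has to be timed. Note that the fastest layout need not use every core: for maria1km above 128 nodes, the post reports that 90 MPI-only processes per node performed best.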

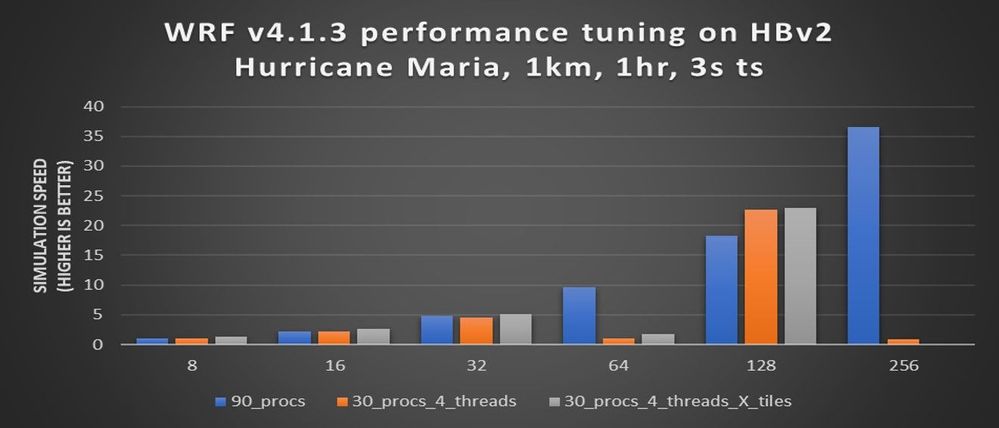

Figure 4. For the Hurricane Maria model, the tuned hybrid parallel (MPI+OpenMP) version of WRF performed better on HBv2 VMs compared to the MPI-only version up to 128 nodes. When each process has enough work, we can split the patches into tiles, and parallel threads can work on the tiles (to optimize data usage in the L3 cache and HBv2 NUMA domains).
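WRF gives each MPI process one patch of the domain, and in the hybrid build each patch can be split further into tiles for the OpenMP threads (the tile count is set with numtiles in the &domains section of namelist.input). The Python sketch below approximates that decomposition to show how patch and tile sizes shrink as the rank count grows. It is a rough model under stated assumptions: a near-square nproc_x × nproc_y process grid, even splits rounded up, tiles cut along the south-north direction, and no halo points. WRF's own decomposition differs in detail.

```python
import math


def process_grid(ranks: int):
    """Pick nproc_x * nproc_y == ranks with factors as close to square as
    possible (an approximation, not WRF's exact algorithm)."""
    if ranks < 1:
        raise ValueError("need at least one rank")
    nx = math.isqrt(ranks)
    while ranks % nx != 0:
        nx -= 1
    return nx, ranks // nx


def patch_and_tile(e_we: int, e_sn: int, ranks: int, numtiles: int):
    """Approximate per-process patch size and the number of rows per tile."""
    nx, ny = process_grid(ranks)
    patch_x = math.ceil(e_we / nx)
    patch_y = math.ceil(e_sn / ny)
    tile_rows = math.ceil(patch_y / numtiles)  # tiles assumed cut south-north
    return (nx, ny), (patch_x, patch_y), tile_rows


# Hypothetical maria1km run: 16 nodes x 30 processes per node, 4 tiles per patch.
grid, patch, rows = patch_and_tile(3665, 2894, ranks=16 * 30, numtiles=4)
print(f"process grid {grid}, patch {patch}, about {rows} rows per tile")
# process grid (20, 24), patch (184, 121), about 31 rows per tile
```

As the node count grows, each patch shrinks and each tile holds fewer rows, which is consistent with the observation that splitting patches into tiles pays off only while each process has enough work.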

WRF v4 parallel scaling on HBv2

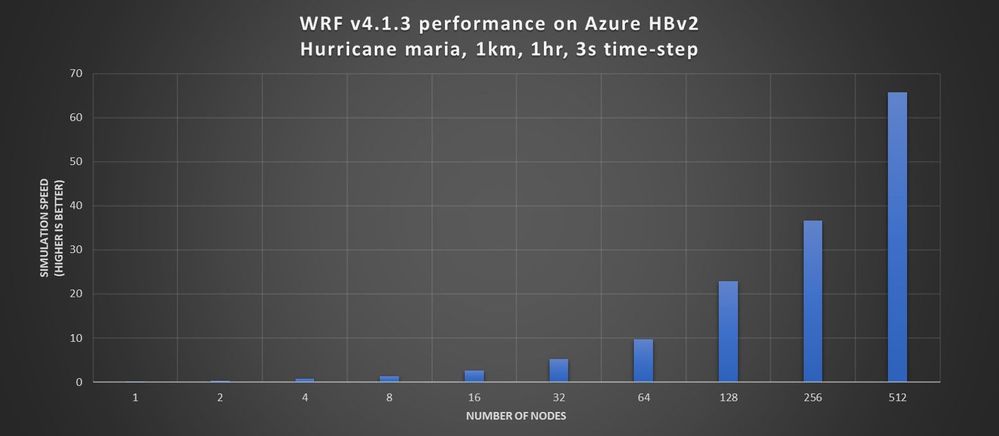

We applied these performance optimizations to WRF v4 and ran the large Hurricane Maria 1 km model on HBv2 VMs to see how well it scales on this VM type.
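Strong-scaling parallel efficiency compares the measured speedup with ideal linear scaling from a reference run: E(N) = (T_ref × N_ref) / (T_N × N), where T is wall-clock time and N is the node count. The post does not state which reference node count underlies its efficiency figures, so the reference here is an assumption, and the timings in the example are illustrative placeholders rather than measured results.

```python
def parallel_efficiency(t_ref: float, n_ref: int, t_n: float, n: int) -> float:
    """Strong-scaling efficiency of an n-node run relative to an n_ref-node run.

    Ideal scaling means t_n == t_ref * n_ref / n, which gives 1.0.
    """
    if min(t_ref, t_n) <= 0 or min(n_ref, n) <= 0:
        raise ValueError("times and node counts must be positive")
    return (t_ref * n_ref) / (t_n * n)


# Illustrative numbers only: doubling from 64 to 128 nodes while runtime drops
# from 100 s to 62.5 s corresponds to 80 percent efficiency.
print(parallel_efficiency(100.0, 64, 62.5, 128))  # 0.8
```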

Summary

The new HBv2 VMs have the performance you need to run large WRF v4 models, and only minor tuning is needed. To summarize our test results:

- Running the new_conus2.5km benchmark demonstrated good scaling on HBv2, HB-series, and HC-series VMs and showed that HBv2 can run WRF v4 two times faster than the HB and HC VMs (Figures 1 and 2).

- WRF v4 performance improves considerably on HBv2 VMs, by about 30 percent, when you carefully lay out processes and threads and optimize for the HBv2 NUMA domains, memory bandwidth, and L3 cache (Figure 3). In the case of maria1km, hybrid parallel WRF is effective only up to 128 nodes (Figure 4), where each process has enough work to divide its patch into tiles that the OpenMP threads can work on. This technique keeps more data in L3 cache, which improves performance. Smaller node counts perform better with a higher tile count, and larger node counts perform better with a lower tile count. Typically, once you find the optimal tile count for a particular node count, halving the tile count each time you double the node count gives the best performance. Beyond 128 nodes, hybrid parallel WRF did not help for maria1km; the best performance on HBv2 VMs came from the MPI-only version with 90 processes per node.

- WRF v4 scaled well on HBv2 with the large Hurricane Maria 1 km model, reaching a parallel efficiency of about 80 percent on 512 HBv2 nodes (Figures 5 and 6). The drop in parallel efficiency at 512 nodes is due to insufficient work per processor at that scale.
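The tile-count rule of thumb from the summary (halve the tile count each time the node count doubles, and switch to the MPI-only build beyond 128 nodes for maria1km) can be written as a small helper. In this sketch the reference node count and tile count are inputs you would find by testing. The 128-node cutoff and 90 processes per node come from the maria1km results above and will likely differ for other models. The function and its return format are hypothetical.

```python
def suggest_config(nodes: int, ref_nodes: int, ref_tiles: int,
                   hybrid_max_nodes: int = 128) -> dict:
    """Scale a known-good tile count to a new node count.

    Tiles scale as ref_tiles * ref_nodes / nodes (halved per doubling of nodes),
    never dropping below 1. Beyond hybrid_max_nodes, suggest the MPI-only build.
    """
    if nodes > hybrid_max_nodes:
        return {"build": "MPI-only", "processes_per_node": 90}
    tiles = max(1, round(ref_tiles * ref_nodes / nodes))
    return {"build": "hybrid", "numtiles": tiles}


# Hypothetical starting point: 8 tiles found optimal on 16 nodes.
for n in (16, 32, 64, 128, 256):
    print(n, suggest_config(n, ref_nodes=16, ref_tiles=8))
```

Treat the output as a starting point for a short tile-count sweep at each node count rather than as a final setting.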