A few weeks ago, we published this article explaining how to automate the deployment and operations of Azure Sentinel using Infrastructure as Code and DevOps principles.

We received great feedback about the article, but also some questions about how to do this in a multi-tenant environment using Azure Lighthouse. We are going to try to tackle the different considerations in this post, showing how to implement this with our DevOps tool of preference, Azure DevOps.

If you don’t use Lighthouse and don’t plan to (maybe you only have one Azure AD tenant), you can still apply the concepts explained in this post when working in a multi-workspace Sentinel deployment (just skip the Azure Lighthouse section).

Onboarding your customers into Azure Lighthouse

The first thing that you need to do as an MSP (or in a multi-tenant organization) working on Azure is onboard your customers into your Azure Lighthouse environment. We are not going to cover this in this post, but you can read here how to do that. You can also refer to this other article published in this blog about the Sentinel-Lighthouse integration.

As a result of the Lighthouse onboarding operation, an identity (or set of identities) from the MSP tenant will be able to access the customer environments with the appropriate roles defined in your onboarding ARM template or Managed Services Marketplace Offer.

Depending on the type of service you’re offering to the customer, these roles will vary, but for a Sentinel-only service, we recommend using the built-in Sentinel roles (Sentinel Reader, Sentinel Responder and Sentinel Contributor). Keep in mind that you cannot delegate custom roles with Lighthouse.

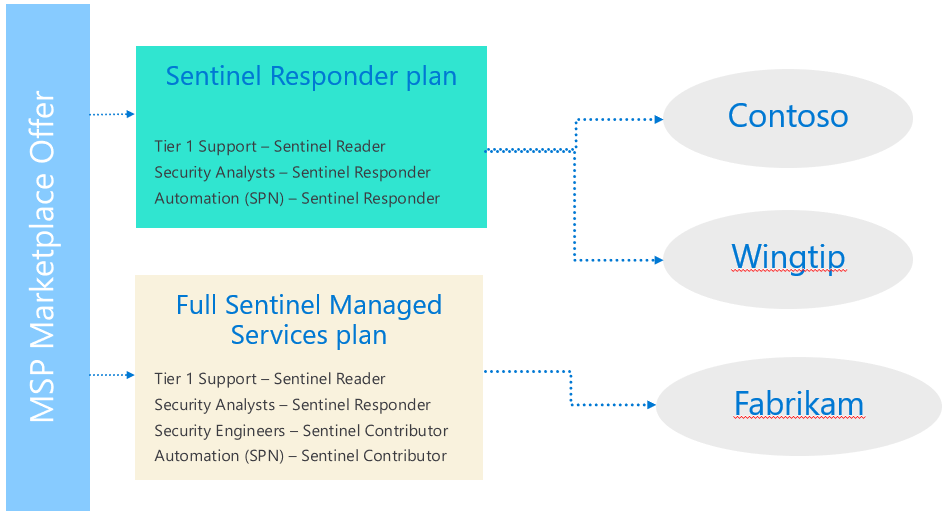

It is also important to mention that, with Lighthouse, you can give user principals and/or service principals that exist in your tenant access to the customer environment. Here is an example of the roles that you could use in your Lighthouse Marketplace Offer (or ARM template) and how they are applied to customers:

As you can see, we have defined one marketplace offer with two plans inside it. Different plans can have different permissions for the MSP to access the customer environment. It’s also important to mention that you can make plans public or private. If you choose public, any Azure user will see your offer in the Marketplace and can purchase it. If you make it private, you specify which customers have access to it.

In our example, we have created two plans, one that offers a full Sentinel managed service and another that will have limited permissions. Each plan contains a set of delegations, some for User Groups and one for Service Principals (SPN) that will be used for Automation purposes. That way, the customer is onboarded with all the access needed from your side to perform the requested service. The service principal is the identity that we will use to connect our Azure DevOps environment to the customer Azure subscription.

After multiple customers have purchased your plan, the service principals that you defined will have access to all those customers’ subscriptions with the role that you specified (in our example, Sentinel Responder or Sentinel Contributor).

Multiple customers in Azure DevOps

Now that we have onboarded one or more customers into Azure and our identities have access to multiple tenants, we can automate the deployment and management of their Azure Sentinel environments. But how do we set up our Azure DevOps service connections, projects, repositories and pipelines to operate multiple customers?

Azure DevOps Service connections

A service connection is the first thing to set up for a multi-tenant environment. As you might remember from our previous article on the DevOps topic, we created a single service connection pointing to a single subscription. As we are now managing multiple customers, we will need to create one for each of them. On the positive side, we can use the same service principal for all of them, because it was onboarded into Lighthouse and now has access to all our customer subscriptions 😊

Azure DevOps variables

As you now have multiple customer environments, you also need to create multiple variable groups, one for each customer. You will then use these accordingly inside your pipelines, stages or jobs.
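As a rough illustration of how a per-customer service connection and variable group come together, here is a minimal sketch of a YAML pipeline fragment. It assumes your pipelines are defined in YAML. The names `CustomerA-ServiceConnection` and `CustomerA-Sentinel`, the variables `ResourceGroup` and `WorkspaceName`, and the script `deploy-analytics-rules.ps1` with its parameters are all hypothetical placeholders, not names taken from our repository.

```yaml
# Fragment of a pipeline that targets a single customer.
# All connection, group, variable and script names below are hypothetical.
pool:
  vmImage: windows-latest

variables:
- group: CustomerA-Sentinel   # variable group assumed to define ResourceGroup and WorkspaceName

steps:
- task: AzurePowerShell@5
  displayName: Deploy analytics rules to Customer A
  inputs:
    # Service connection pointing to Customer A's subscription; the same
    # Lighthouse-delegated service principal can back every customer's connection.
    azureSubscription: CustomerA-ServiceConnection
    ScriptType: FilePath
    ScriptPath: Artifacts/Scripts/deploy-analytics-rules.ps1   # hypothetical helper script
    ScriptArguments: >-
      -ResourceGroup $(ResourceGroup)
      -Workspace $(WorkspaceName)
      -RulesFile CustomerA/AnalyticsRules/analytics-rules.json
    azurePowerShellVersion: LatestVersion
```

Switching this fragment to another customer would then mean changing only the variable group, the service connection and the configuration path.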

Code repositories

How you organize your code repositories is a design decision that deserves some thought. Are you going to use a single repository for all your customers? Are you going to create a separate repository for each customer? Do you need further isolation between customers?

These are some of the typical design choices:

- Single repository with no further customer separation. If you think that all your customers will have the same configuration, you could manage with a single repo where you put all your configuration files for Connectors, Analytics Rules, Workbooks, etc. Obviously, this means that any change to your config files will be reflected in all your customer environments. This is the repo structure that we showed in our previous article and can be found here. In general, your customers will have different needs over time, so this approach is recommended only if you’re using your DevOps tool just to automate the initial setup of the customer environments. The moment your customer configurations deviate from each other, this approach becomes challenging.

- Single repository with a separate folder for each customer. In this case you can have all your script artifacts in a central location, but then create separate folders for the config files of each customer. This way you can modify customer configurations independently while keeping your master scripts in a single place. As an example:

      |- Artifacts/
      |  |- Scripts/                     # Folder for script helpers
      |
      |- CustomerA/                      # Folder for Customer A
      |  |- AnalyticsRules/              # Subfolder for Analytics Rules
      |  |  |- analytics-rules.json      # Analytics Rules definition file (JSON)
      |  |
      |  |- Connectors/                  # Subfolder for Connectors
      |  |  |- connectors.json           # Connectors definition file (JSON)
      |  |
      |  |- HuntingRules/                # Subfolder for Hunting Rules
      |  |  |- hunting-rules.json        # Hunting Rules definition file (JSON)
      |  …
      |
      |- CustomerB/                      # Folder for Customer B
      |  |- AnalyticsRules/              # Subfolder for Analytics Rules
      |  |  |- analytics-rules.json      # Analytics Rules definition file (JSON)
      |  |
      |  |- Connectors/                  # Subfolder for Connectors
      |  |  |- connectors.json           # Connectors definition file (JSON)
      |  |
      |  |- HuntingRules/                # Subfolder for Hunting Rules
      |  |  |- hunting-rules.json        # Hunting Rules definition file (JSON)
      |  …

For brevity, we only show 3 subfolders per customer but there would be more for Playbooks, Workbooks, etc.

- Multiple Azure DevOps projects, one per customer. With this approach you have even more isolation between customers, as you place them into separate Azure DevOps projects. Each project has a separate set of permissions, service connections, repositories, etc., so this could be useful when there’s a clear need for separation of responsibilities between the teams that operate the environment. For each project you also get a separate Azure Boards instance, so you can collaborate with your customer (or other teams within your company) by creating work items, Kanban boards, etc. Within each Azure DevOps project you would also place a repository for that specific customer. If you want to know more about projects within Azure DevOps, please go here.

Azure DevOps Pipelines

Once you have your repository structure identified, it’s time to decide how you’re going to organize your deployment pipelines.

There are several options when it comes to building your pipelines in a multi-tenant environment:

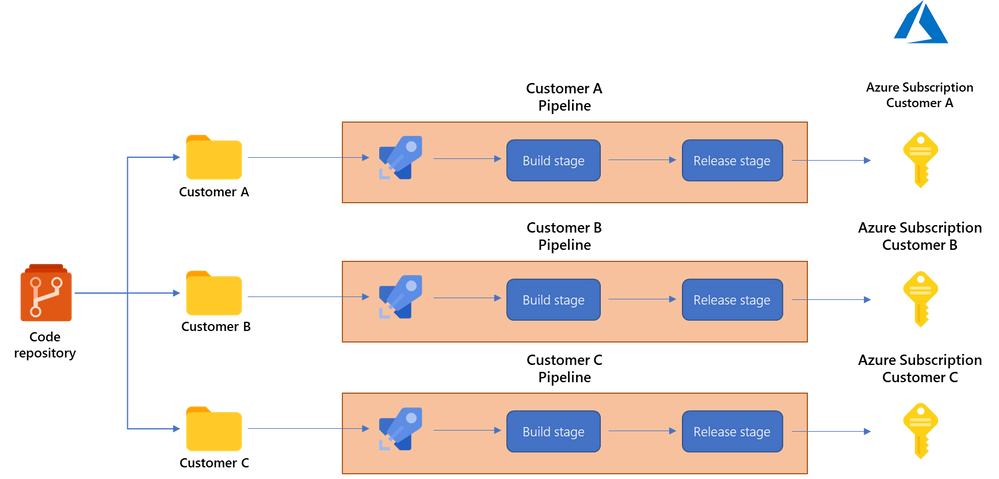

- One pipeline per customer. This is the recommended way if you are keeping your customer configuration files separate. Imagine that within your repo you have a separate folder for each customer, with subfolders for their Connectors, Analytics Rules, Playbooks, etc. configurations. In this case you can create separate pipelines for each customer, taking just the configuration files for that specific customer. Each pipeline would use a different variable group pointing to that customer’s Sentinel environment. This approach gives you full control on when each customer environment is deployed/updated and under what conditions the different stages are executed.

This is the approach that we used in our previous post here, although we were only managing a single Sentinel environment in that case.
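A pipeline dedicated to one customer could look roughly like the sketch below. It assumes a YAML pipeline and the folder-per-customer layout described earlier. The file name, stage names, variable group, service connection and script names are hypothetical placeholders.

```yaml
# customer-a.yml (hypothetical file name): pipeline dedicated to Customer A.
trigger:
  branches:
    include:
    - main
  paths:
    include:
    - CustomerA            # run when Customer A's configuration changes
    - Artifacts/Scripts    # or when the shared helper scripts change

pool:
  vmImage: windows-latest

variables:
- group: CustomerA-Sentinel   # hypothetical group defining ResourceGroup and WorkspaceName

stages:
- stage: Connectors
  jobs:
  - job: Deploy
    steps:
    - task: AzurePowerShell@5
      inputs:
        azureSubscription: CustomerA-ServiceConnection   # hypothetical service connection
        ScriptType: FilePath
        ScriptPath: Artifacts/Scripts/deploy-connectors.ps1   # hypothetical script
        ScriptArguments: '-ResourceGroup $(ResourceGroup) -Workspace $(WorkspaceName) -ConfigFile CustomerA/Connectors/connectors.json'
        azurePowerShellVersion: LatestVersion

- stage: AnalyticsRules
  dependsOn: Connectors   # rules are deployed only after the connectors stage succeeds
  jobs:
  - job: Deploy
    steps:
    - task: AzurePowerShell@5
      inputs:
        azureSubscription: CustomerA-ServiceConnection
        ScriptType: FilePath
        ScriptPath: Artifacts/Scripts/deploy-analytics-rules.ps1   # hypothetical script
        ScriptArguments: '-ResourceGroup $(ResourceGroup) -Workspace $(WorkspaceName) -RulesFile CustomerA/AnalyticsRules/analytics-rules.json'
        azurePowerShellVersion: LatestVersion
```

The path filter is what keeps customers independent here: a commit that only touches another customer’s folder would not trigger this pipeline.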

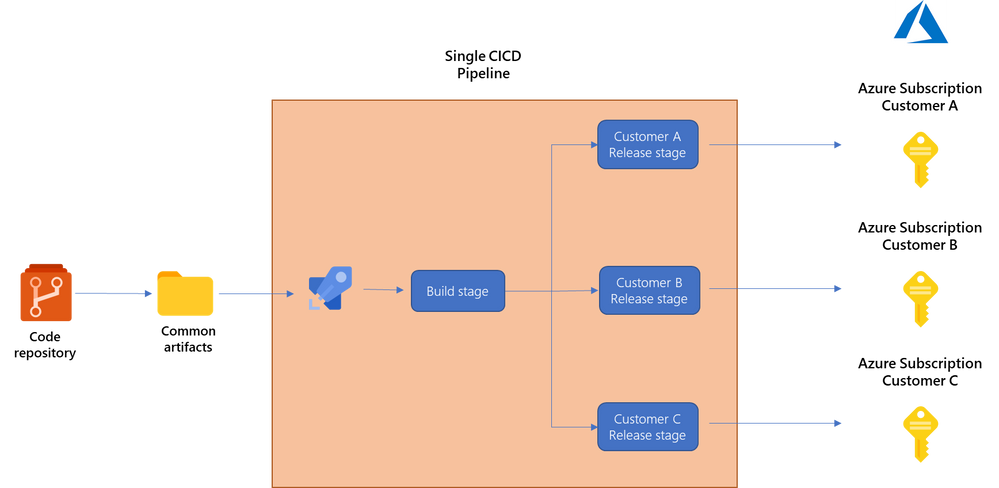

- A single pipeline for all your customers with multiple deployment stages inside it, each pointing to one customer subscription. You would use this approach if you’re keeping a common set of configuration files for all your clients. In this case, you can just create multiple stages within each of your deployment pipelines, one for each customer environment. Each stage would use a different variable group pointing to a different customer environment. This approach gives you more flexibility than the next one, because you can specify different approvals, dependencies and conditions that determine when the stage will (or will not) run. For example, you could define that the Customer A production environment is only deployed if a previous stage has completed successfully (maybe your internal test environment). This option still gives you good control over when each customer is deployed while keeping the configuration simpler.

You can see an example of this approach in our repository here.
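To make the stage-per-customer idea more concrete, here is a minimal sketch of a YAML pipeline with an internal test stage that gates two customer stages. It is not the pipeline from our repository. The stage names, variable groups, service connections and script are hypothetical, and the configuration files are assumed to be shared by all customers.

```yaml
# One pipeline, one stage per environment. All names are hypothetical.
trigger:
- main

pool:
  vmImage: windows-latest

stages:
- stage: InternalTest
  displayName: Internal test environment
  variables:
  - group: InternalTest-Sentinel
  jobs:
  - job: Deploy
    steps:
    - task: AzurePowerShell@5
      inputs:
        azureSubscription: InternalTest-ServiceConnection
        ScriptType: FilePath
        ScriptPath: Artifacts/Scripts/deploy-analytics-rules.ps1   # hypothetical script
        ScriptArguments: '-ResourceGroup $(ResourceGroup) -Workspace $(WorkspaceName) -RulesFile AnalyticsRules/analytics-rules.json'
        azurePowerShellVersion: LatestVersion

- stage: CustomerA
  dependsOn: InternalTest
  condition: succeeded()        # deploy only if the test stage completed successfully
  variables:
  - group: CustomerA-Sentinel   # same variable names, Customer A's values
  jobs:
  - job: Deploy
    steps:
    - task: AzurePowerShell@5
      inputs:
        azureSubscription: CustomerA-ServiceConnection
        ScriptType: FilePath
        ScriptPath: Artifacts/Scripts/deploy-analytics-rules.ps1
        ScriptArguments: '-ResourceGroup $(ResourceGroup) -Workspace $(WorkspaceName) -RulesFile AnalyticsRules/analytics-rules.json'
        azurePowerShellVersion: LatestVersion

- stage: CustomerB
  dependsOn: InternalTest       # runs independently of the CustomerA stage
  condition: succeeded()
  variables:
  - group: CustomerB-Sentinel
  jobs:
  - job: Deploy
    steps:
    - task: AzurePowerShell@5
      inputs:
        azureSubscription: CustomerB-ServiceConnection
        ScriptType: FilePath
        ScriptPath: Artifacts/Scripts/deploy-analytics-rules.ps1
        ScriptArguments: '-ResourceGroup $(ResourceGroup) -Workspace $(WorkspaceName) -RulesFile AnalyticsRules/analytics-rules.json'
        azurePowerShellVersion: LatestVersion
```

Approvals are not expressed in the YAML itself. In Azure DevOps they are configured as checks on resources such as environments or service connections.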

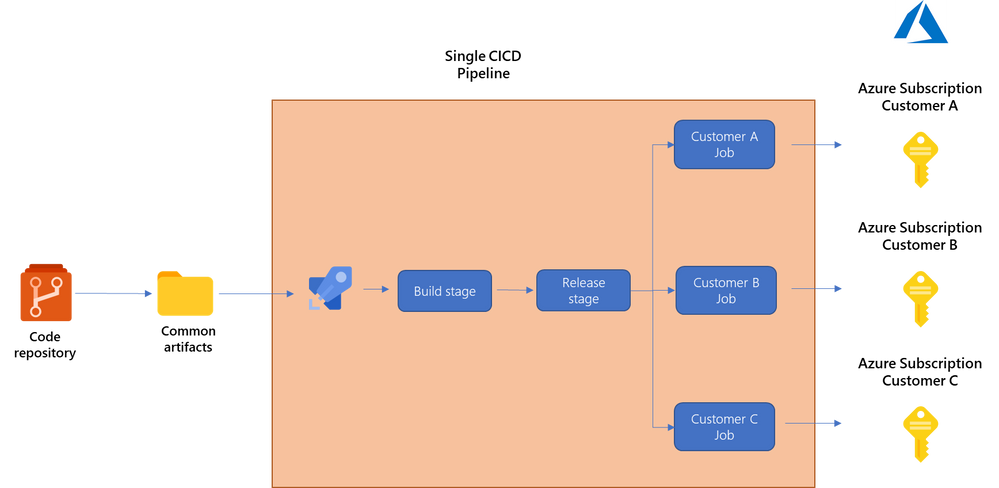

- A single pipeline with a single deployment stage with multiple deployment jobs, each pointing to one customer subscription. Similar to the previous approach but keeping it even more condensed. In this case you just have a single deployment stage, but inside it you create multiple jobs, each using a different set of variables pointing to a different customer subscription. With this option you have less granularity and control options on when each customer is deployed.

You can see an example of this approach in our repo here.
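The single-stage variant could be sketched as follows, again assuming a YAML pipeline and hypothetical names for the jobs, variable groups, service connections and script. This is an illustration of the layout rather than the pipeline from our repo.

```yaml
# One stage, one job per customer. All names are hypothetical.
trigger:
- main

pool:
  vmImage: windows-latest

stages:
- stage: DeployAllCustomers
  jobs:
  - job: CustomerA
    variables:
    - group: CustomerA-Sentinel   # job-scoped variable group
    steps:
    - task: AzurePowerShell@5
      inputs:
        azureSubscription: CustomerA-ServiceConnection
        ScriptType: FilePath
        ScriptPath: Artifacts/Scripts/deploy-analytics-rules.ps1   # hypothetical script
        ScriptArguments: '-ResourceGroup $(ResourceGroup) -Workspace $(WorkspaceName) -RulesFile AnalyticsRules/analytics-rules.json'
        azurePowerShellVersion: LatestVersion

  - job: CustomerB
    variables:
    - group: CustomerB-Sentinel
    steps:
    - task: AzurePowerShell@5
      inputs:
        azureSubscription: CustomerB-ServiceConnection
        ScriptType: FilePath
        ScriptPath: Artifacts/Scripts/deploy-analytics-rules.ps1
        ScriptArguments: '-ResourceGroup $(ResourceGroup) -Workspace $(WorkspaceName) -RulesFile AnalyticsRules/analytics-rules.json'
        azurePowerShellVersion: LatestVersion
```

Because the jobs declare no dependencies on each other, they can run in parallel when enough agents are available, which is part of why this layout offers less control over the order and timing of each customer deployment.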

Please refer to this page to better understand the different concepts (stages, steps, jobs, tasks, etc.) on how to structure your pipelines.

In Summary

In this post we have explained how to combine Azure Sentinel’s DevOps capabilities with the use of Azure Lighthouse to manage a multi-tenant environment. As you have seen, there are several implementation options; deciding which one to use greatly depends on the size of your organization and how your teams collaborate with each other. In the end, this is what the DevOps culture is all about!