This post has been republished via RSS; it originally appeared at: AI Customer Engineering Team articles.

In a previous blog post, I discussed various approaches to resolving complex software and hardware dependencies in Azure DevOps pipelines. In this blog post, I want to discuss an alternative, lightweight approach to the same problem: Using Azure Machine Learning (AML) Pipelines to compile software dependencies for model training and deployment.

The use case is the same as in the previous blog post: You want to train and deploy a machine learning model for which you have to compile software dependencies from source on a virtual machine (VM) with specific hardware. In our case, the VM needs an NVIDIA CUDA-capable GPU, and unfortunately the Microsoft-hosted build agents for Azure DevOps don’t have GPUs.

As discussed in the previous blog post, one option is to create a self-hosted agent (i.e., a VM) with the required hardware and the software libraries needed for compilation. This works fine and is well documented, but it has several disadvantages. First, setting it up is an involved process: you have to create the VM, add it to the agent pool of your Azure DevOps organization, and then download and configure the agent software so that it becomes available to your DevOps build pipelines. Second, you might want to add logic to your DevOps pipeline that starts the VM before your build pipeline runs and shuts it down afterwards. This can be achieved via the REST API for Azure VMs.
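
To give an idea of what the start and shut-down logic involves, here is a minimal sketch that only builds the Azure Resource Manager URLs for the two VM operations. The function name, the sample identifiers, and the default `api_version` are assumptions for illustration; check the API version against the current Virtual Machines REST reference. Each URL is meant to be sent as a POST request with an Azure AD bearer token.

```python
from urllib.parse import quote

ARM_ENDPOINT = "https://management.azure.com"


def vm_action_url(subscription_id, resource_group, vm_name, action,
                  api_version="2023-03-01"):
    """Build the ARM URL that starts or deallocates a VM (sent as a POST)."""
    if action not in ("start", "deallocate"):
        raise ValueError("action must be 'start' or 'deallocate'")
    return (
        f"{ARM_ENDPOINT}/subscriptions/{quote(subscription_id)}"
        f"/resourceGroups/{quote(resource_group)}"
        f"/providers/Microsoft.Compute/virtualMachines/{quote(vm_name)}"
        f"/{action}?api-version={api_version}"
    )


# Hypothetical identifiers, for illustration only.
print(vm_action_url(
    "00000000-0000-0000-0000-000000000000", "my-rg", "build-agent-vm", "start"
))
```

The sketch uses `deallocate` rather than a plain power-off because a deallocated VM releases its compute resources.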

If you are planning to use the Python SDK for training your model and deploying it as a microservice in Azure or on the Edge, using AML Pipelines might make your life very easy. There are several key advantages to this approach:

- AML Pipelines can be executed on AML compute targets that satisfy the hardware requirements of your dependencies. There is no need to set up a self-hosted DevOps agent.

- AML compute targets auto-scale, so there is no need for extra pipeline logic that starts and shuts down a VM.
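
To illustrate the auto-scaling point, the following sketch collects the keyword arguments that let an AML compute cluster scale down to zero nodes when idle. The helper names and the values for `max_nodes` and `idle_seconds` are assumptions; `min_nodes`, `max_nodes`, and `idle_seconds_before_scaledown` are parameters of `AmlCompute.provisioning_configuration` in the Python SDK.

```python
def autoscale_settings(max_nodes, idle_seconds=1200):
    """Keyword arguments for a cluster that scales down to zero nodes when idle."""
    if max_nodes < 1:
        raise ValueError("max_nodes must be at least 1")
    return {
        "vm_size": "STANDARD_NC6",  # GPU VM size used later in this post
        "min_nodes": 0,             # release all nodes when no run is queued
        "max_nodes": max_nodes,
        "idle_seconds_before_scaledown": idle_seconds,
    }


def provisioning_config(max_nodes=1):
    """Create the provisioning configuration (requires the azureml-core package)."""
    from azureml.core.compute import AmlCompute  # imported lazily on purpose

    return AmlCompute.provisioning_configuration(**autoscale_settings(max_nodes))


print(autoscale_settings(max_nodes=2))
```

With `min_nodes` set to 0, the cluster only holds nodes while a pipeline run needs them.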

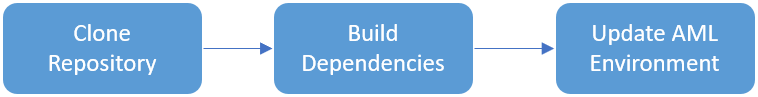

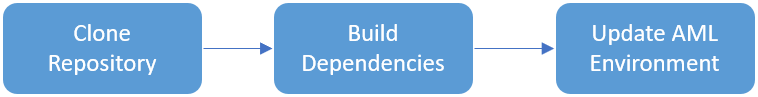

Figure 1 shows a simple AML Pipeline that would do the trick. In this case, the AML Pipeline consists of three steps. In the first step, you clone the remote repository to your AML compute target. In the second step, you compile the dependencies on that same compute target. In the third step, you add the dependencies as Conda or pip packages to your AML Environment and register the updated Environment as a new version, so that future model training runs and deployments use the newest version of your software dependencies.

Figure 1. Clone the remote repository to your AML compute target, build the dependencies, register them, and create a new version of your AML Environment.

Detailed description of AML Pipeline for building software dependencies

Let’s look at the details of setting up the AML Pipeline for building software dependencies. I will skip many of the steps common to any project that relies on the Python SDK for AML. For an introduction to the SDK, I refer you to the MachineLearningNotebooks on GitHub.

Define AML Pipeline

When you define your AML Pipeline, you can provision or attach an AML compute target that satisfies the hardware requirements for building your dependencies.

import os
import json
import shutil

from azureml.core.authentication import ServicePrincipalAuthentication
from azureml.core import Workspace, Environment, RunConfiguration
from azureml.pipeline.core import Pipeline, PipelineData
from azureml.pipeline.steps import PythonScriptStep
from azureml.core.compute import AmlCompute, ComputeTarget
from azureml.core import VERSION

print("azureml.core.VERSION", VERSION)

pipeline_name = "build_pipeline"
script_folder = "./scripts"
config_json = "../config.json"

os.makedirs(script_folder, exist_ok=True)

# read configuration for your AML project
with open(config_json, "r") as f:
    config = json.load(f)

svc_pr = ServicePrincipalAuthentication(
    tenant_id=config["tenant_id"],
    service_principal_id=config["service_principal_id"],
    service_principal_password=config["service_principal_password"],
)

# connect to your AML workspace
ws = Workspace.from_config(path=config_json, auth=svc_pr)

# connect to the default blob store of your workspace
def_blob_store = ws.get_default_datastore()

# choose a name for your cluster
cpu_compute_name = config["cpu_compute"]

# reuse the compute target if it exists, otherwise provision it
try:
    cpu_compute_target = AmlCompute(workspace=ws, name=cpu_compute_name)
    print("found existing compute target: %s" % cpu_compute_name)
except Exception:
    print("Creating a new compute target...")
    # STANDARD_NC6 is a GPU VM size, despite the "cpu" in the variable names
    provisioning_config = AmlCompute.provisioning_configuration(
        vm_size="STANDARD_NC6"
    )
    cpu_compute_target = ComputeTarget.create(
        ws, cpu_compute_name, provisioning_config
    )
    cpu_compute_target.wait_for_completion(show_output=True)

# get the Environment definition and its conda dependencies. This is
# useful in scenarios with complex software dependencies.
env = Environment.get(ws, "environment")

# initialize RunConfiguration for pipeline steps
run_config = RunConfiguration()
run_config.environment = env

# define PipelineData used for passing the local repository among pipeline
# steps. This is especially useful if you compile more than one
# dependency in your pipeline, and want to avoid cloning the remote
# repository more than once.
repository_path = PipelineData("repository_path", datastore=def_blob_store)

# copy execution scripts into <script_folder>, which will be copied
# to the AML compute target before pipeline execution
shutil.copy(os.path.join(".", "clone_repo.py"), script_folder)
shutil.copy(os.path.join(".", "build_pip_wheels.py"), script_folder)
shutil.copy(os.path.join(".", "register_dependencies.py"), script_folder)

# define pipeline steps
clone_repo_step = PythonScriptStep(
    name="clone_repo",
    script_name="clone_repo.py",
    compute_target=cpu_compute_target,
    source_directory=script_folder,
    runconfig=run_config,
    arguments=["--repository_path", repository_path],
    outputs=[repository_path],
)

build_pip_wheels = PythonScriptStep(
    name="build_pip_wheels",
    script_name="build_pip_wheels.py",
    compute_target=cpu_compute_target,
    source_directory=script_folder,
    runconfig=run_config,
    arguments=["--repository_path", repository_path],
    inputs=[repository_path],
)
build_pip_wheels.run_after(clone_repo_step)

# script_name must match the file copied into <script_folder> above
register_dependencies = PythonScriptStep(
    name="register_dependencies",
    script_name="register_dependencies.py",
    compute_target=cpu_compute_target,
    source_directory=script_folder,
    runconfig=run_config,
    arguments=["--repository_path", repository_path],
    inputs=[repository_path],
)
register_dependencies.run_after(build_pip_wheels)

# create and validate pipeline
pipeline = Pipeline(
    workspace=ws,
    steps=[clone_repo_step, build_pip_wheels, register_dependencies],
)
pipeline.validate()

# publish AML pipeline so that pipeline runs can be triggered from
# Azure DevOps via REST API calls.
published_pipeline = pipeline.publish(name=pipeline_name)
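
The last comment mentions triggering the published pipeline from Azure DevOps via REST API calls. As a rough sketch of such a call, the code below prepares (but does not send) a POST request to the pipeline endpoint with an `ExperimentName` in the JSON body and a bearer token in the `Authorization` header. The function name, the experiment name, and the placeholder URL and token are assumptions; in practice the URL would come from `published_pipeline.endpoint` and the token from your service principal.

```python
import json
import urllib.request


def build_trigger_request(endpoint, bearer_token, experiment_name):
    """Prepare the POST request that submits a run of a published pipeline."""
    body = json.dumps({"ExperimentName": experiment_name}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {bearer_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values, for illustration only.
request = build_trigger_request(
    "https://example.invalid/pipelines/0000", "<aad-token>", "build_dependencies"
)
print(request.get_method(), request.full_url)
# To actually submit the run: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes the call easy to inspect in a DevOps task before any run is submitted.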

Clone Repository

The first pipeline step clones the repository. Using GitPython, it can look something like this:

import git
import argparse
import os

parser = argparse.ArgumentParser()
parser.add_argument(
    "--repository_path", dest="repository_path", default="/tmp/"
)
args = parser.parse_args()

# token for authentication. Alternatively, you can use username and password
token = "<personal access token>"

# create the target directory; do not fail if it already exists
os.makedirs(args.repository_path, exist_ok=True)

# run "git clone" inside the target directory
git.Git(args.repository_path).clone(
    "https://%s@dev.azure.com/<organization>/<project>/_git/<repository>" % (token)
)
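
The script above keeps the personal access token in the source file. As one possible alternative, this sketch reads the token from an environment variable and URL-encodes every part of the clone URL. The variable name `AZURE_DEVOPS_PAT` and both helper names are hypothetical, and how the variable is made available on the compute target is left open here.

```python
import os
from urllib.parse import quote


def authenticated_clone_url(organization, project, repository, token):
    """Embed a personal access token in an Azure DevOps HTTPS clone URL."""
    return "https://{}@dev.azure.com/{}/{}/_git/{}".format(
        quote(token, safe=""),
        quote(organization, safe=""),
        quote(project, safe=""),
        quote(repository, safe=""),
    )


def token_from_environment(variable="AZURE_DEVOPS_PAT"):
    """Read the token from an environment variable instead of the source file."""
    token = os.environ.get(variable)
    if not token:
        raise RuntimeError(f"environment variable {variable} is not set")
    return token


# Hypothetical organization, project, and repository names.
print(authenticated_clone_url("my-org", "my project", "foo", "<token>"))
```

The resulting URL can be passed to the `clone` call in place of the formatted string.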

Build pip wheel

import os
from distutils.core import run_setup
import argparse

parser = argparse.ArgumentParser()
parser.add_argument(
    "--repository_path", dest="repository_path", default="/tmp/"
)
args = parser.parse_args()

# change into the cloned repository ("foo" is the repository name here)
os.chdir(os.path.join(args.repository_path, "foo"))

# run setup.py to build the package and write the wheel to ./dist
run_setup("setup.py", script_args=["build", "bdist_wheel"], stop_after="run")
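
Note that `distutils` was deprecated in Python 3.10 and removed in Python 3.12, so the script above needs an older interpreter. A possible substitute, sketched here under the assumption that pip is installed in the step's environment, is to call `pip wheel` in a subprocess. The helper names are hypothetical, and this is a swapped-in technique rather than the approach used in this post.

```python
import subprocess
import sys


def wheel_build_command(wheel_dir="dist"):
    """Command line that builds a wheel for the project in the current directory."""
    return [
        sys.executable, "-m", "pip", "wheel", ".",
        "--no-deps",              # build only this project, not its dependencies
        "--wheel-dir", wheel_dir,
    ]


def build_wheel(project_dir, wheel_dir="dist"):
    """Run the build inside project_dir and raise if pip reports a failure."""
    subprocess.run(wheel_build_command(wheel_dir), cwd=project_dir, check=True)


print(" ".join(wheel_build_command()))
```

Calling `build_wheel(os.path.join(args.repository_path, "foo"))` would then take the place of the `os.chdir` and `run_setup` lines.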

Update and register new version of AML Environment

import glob
import os
import argparse

from azureml.core import Environment, Run

parser = argparse.ArgumentParser()
parser.add_argument(
    "--repository_path", dest="repository_path", default="/tmp/"
)
args = parser.parse_args()

# get the workspace from the current pipeline run
run = Run.get_context()
ws = run.experiment.workspace
env = Environment.get(ws, "environment")

# find newest version of python wheel (last file name in sorted order)
os.chdir(os.path.join(args.repository_path, "foo"))
files = glob.glob("./dist/foo-*.whl")
files.sort()
file_path = files[-1]

# upload the wheel to the workspace storage and get its URL
whl_url = Environment.add_private_pip_wheel(
    workspace=ws, file_path=file_path, exist_ok=True
)

# update conda_dependencies of Environment
conda_dependencies = env.python.conda_dependencies
conda_dependencies.add_pip_package(whl_url)
env.python.conda_dependencies = conda_dependencies

# register new version of Environment
env.register(ws)
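
One caveat when picking the newest wheel: sorting file names as plain strings can misorder versions, for example ranking 0.9.0 above 0.10.0. The sketch below compares the numeric version components from the wheel file name instead. The helper names and sample file names are hypothetical, and pre-release or local version tags are not handled; a dedicated version parser would be more complete.

```python
import os
import re


def wheel_version_key(path):
    """Sort key: the numeric components of the version in a wheel file name."""
    # Wheel names look like <name>-<version>-<python>-<abi>-<platform>.whl
    version = os.path.basename(path).split("-")[1]
    return tuple(int(part) for part in re.findall(r"\d+", version))


def newest_wheel(paths):
    """Return the path with the highest version, or raise if there is none."""
    if not paths:
        raise FileNotFoundError("no wheel files found")
    return max(paths, key=wheel_version_key)


wheels = [
    "./dist/foo-0.9.0-py3-none-any.whl",
    "./dist/foo-0.10.0-py3-none-any.whl",
]
print(newest_wheel(wheels))
```

The explicit check for an empty list also gives a clearer error than an `IndexError` when the build step produced no wheel.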

Summary

I hope this blog post provided a good demonstration of using AML Pipelines for building software dependencies. Switching from the approach I described previously has made my work much easier, because I can now use the same AML compute targets and AML Environments for compiling dependencies as for training and deploying my models. Thanks for reading!