This post has been republished via RSS; it originally appeared at: Azure Data Explorer articles.

Azure Data Explorer lets you ingest, process, explore, and transform big data from various sources with high performance. Its Kusto Query Language (KQL) lets you explore that data with simple, readable queries.

Use case: Chatbot telemetry

In this scenario, the chatbot is built on the Microsoft Azure Bot Framework, together with QnA Maker and LUIS. The bot can send ad-hoc custom events to Application Insights, which acts as the event collector for the telemetry. Application Insights includes a Continuous Export feature, which stores the data as blobs in a storage account. These blobs contain JSON documents with all the standard properties, plus the custom properties defined within the chatbot.

The challenge: custom properties are a “dictionary of dictionaries”

We need a parser for these custom properties because they carry the core information. They are tracked ad hoc within the application according to a given business logic, and they complement the standard built-in properties provided by the framework. Parsing them makes it possible to derive insights about how the bot is used and about the value it brings to the business scenario where it has been adopted.

The solution: Azure Data Explorer and a custom function

The reference architecture for ingesting data from blob storage into Azure Data Explorer is based on Event Grid subscriptions; this article describes how to set up the end-to-end flow. For the specific case of telemetry exported from Application Insights, we demonstrate:

- How to create a dedicated table for the ingested data

- How to define the JSON mapping rules that insert data into that table

- The core function that parses the custom telemetry

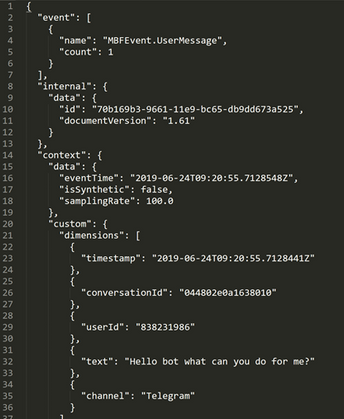

Before proceeding, it’s worth understanding the structure of the JSON produced by Application Insights. The event, internal, and context sections sit side by side at the top level of each JSON document. Custom properties are stored as a JSON array within the context -> custom -> dimensions hierarchy.
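For a concrete picture, here is a minimal Python sketch that builds and parses records with this nesting. The make_record helper and all values are hypothetical. The sketch also assumes that each exported blob holds one JSON document per line, and that each element of the dimensions array is a single key/value dictionary, which is the shape the mv-expand/make_dictionary approach later in this post relies on.

```python
import json
from typing import Any


def make_record(name: str, item_id: str, event_time: str,
                dimensions: list[dict[str, str]]) -> dict[str, Any]:
    """Build a record with the nesting described above (hypothetical values)."""
    return {
        "event": [{"name": name}],
        "internal": {"data": {"id": item_id}},
        "context": {
            "data": {"eventTime": event_time},
            "custom": {"dimensions": dimensions},
        },
    }


# Assumed blob layout: one JSON document per line.
blob_text = "\n".join(json.dumps(record) for record in [
    make_record("MBFEvent.UserMessage", "item-001", "2019-05-01T10:00:00Z",
                [{"userId": "user-42"}, {"text": "Hello bot"}]),
    make_record("MBFEvent.BotMessage", "item-002", "2019-05-01T10:00:01Z",
                [{"userId": "user-42"}, {"text": "Hi!"}, {"channel": "webchat"}]),
])

# Parse the blob back into one dict per line, skipping blank lines.
records = [json.loads(line) for line in blob_text.splitlines() if line.strip()]

for record in records:
    # event, internal and context sit side by side at the top level.
    top_level = sorted(record)
    # Custom properties: an array of single-key dictionaries under
    # context -> custom -> dimensions.
    dimensions = record["context"]["custom"]["dimensions"]
    pairs = [item for dim in dimensions for item in dim.items()]
    print(top_level, pairs)
```

Because the custom properties arrive as a list of small dictionaries rather than one flat object, they cannot be addressed by name until they are merged, which is the problem the rest of this post solves.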

1. Table creation, where events are stored

The definition is pretty straightforward using the .create-merge command:

```kusto
.create-merge table Events (eventName:string, itemID:string, timestamp:datetime, customProperties:dynamic)
```

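It’s important that the customProperties column, which will contain the array of custom properties, is of type dynamic: a dynamic column can hold an arbitrary JSON array or object, whatever the number and names of the properties each event carries.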
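To see why the dynamic type matters, here is a minimal Python analogue of the Events schema. The EventRow class and the sample rows are hypothetical illustrations, not Azure Data Explorer code: string maps to str, datetime to datetime, and dynamic to Any, since a dynamic value can be any JSON value.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any


@dataclass
class EventRow:
    """Hypothetical Python analogue of the Events table schema."""
    eventName: str
    itemID: str
    timestamp: datetime
    customProperties: Any  # "dynamic": a JSON array or object of any shape


# Two hypothetical events whose custom properties differ in number and names.
# The dynamic column stores both arrays as-is, without one column per property.
rows = [
    EventRow("MBFEvent.UserMessage", "item-001",
             datetime(2019, 5, 1, 10, 0, tzinfo=timezone.utc),
             [{"userId": "user-42"}, {"text": "Hello bot"}]),
    EventRow("MBFEvent.BotMessage", "item-002",
             datetime(2019, 5, 1, 10, 0, 1, tzinfo=timezone.utc),
             [{"userId": "user-42"}, {"text": "Hi!"}, {"channel": "webchat"}]),
]

for row in rows:
    print(row.eventName, len(row.customProperties))
```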

2. JSON mapping rule definition

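The JSON mapping tells Azure Data Explorer which JSON path to read for each column during ingestion. The standard fields are extracted into typed columns, while the whole array under context.custom.dimensions is stored as-is in the dynamic customProperties column defined above: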

```kusto
.create table Events ingestion json mapping "EventMapping"
'['
'  {"column":"eventName", "path":"$.event[0].name"},'
'  {"column":"itemID", "path":"$.internal.data.id"},'
'  {"column":"timestamp", "path":"$.context.data.eventTime"},'
'  {"column":"customProperties", "path":"$.context.custom.dimensions"}'
']'
```
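To illustrate what the mapping does, here is a minimal Python sketch that evaluates the four paths above against one parsed export record. The resolve_path and apply_mapping helpers are hypothetical and support only the subset of JSONPath used here (dotted keys with an optional array index). They are not how Azure Data Explorer implements ingestion, and type conversion (for example, turning eventTime into a datetime) is not modeled.

```python
import re
from typing import Any

# The column -> path pairs from the EventMapping definition above.
EVENT_MAPPING = [
    ("eventName", "$.event[0].name"),
    ("itemID", "$.internal.data.id"),
    ("timestamp", "$.context.data.eventTime"),
    ("customProperties", "$.context.custom.dimensions"),
]

# Matches a "key" or "key[3]" path segment.
_SEGMENT = re.compile(r"^(\w+)(?:\[(\d+)\])?$")


def resolve_path(doc: Any, path: str) -> Any:
    """Resolve a simple JSONPath ($.a.b[0].c); return None if anything is missing."""
    if not path.startswith("$."):
        raise ValueError(f"unsupported path: {path}")
    current = doc
    for segment in path[2:].split("."):
        match = _SEGMENT.match(segment)
        if match is None:
            raise ValueError(f"unsupported segment: {segment}")
        key, index = match.group(1), match.group(2)
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
        if index is not None:
            i = int(index)
            if not isinstance(current, list) or i >= len(current):
                return None
            current = current[i]
    return current


def apply_mapping(doc: dict[str, Any]) -> dict[str, Any]:
    """Build one Events row from one exported JSON document."""
    return {column: resolve_path(doc, path) for column, path in EVENT_MAPPING}


# Hypothetical exported record.
sample = {
    "event": [{"name": "MBFEvent.BotMessage"}],
    "internal": {"data": {"id": "item-002"}},
    "context": {
        "data": {"eventTime": "2019-05-01T10:00:01Z"},
        "custom": {"dimensions": [{"userId": "user-42"}, {"text": "Hi!"}]},
    },
}

row = apply_mapping(sample)
print(row)
```

Note how customProperties receives the entire dimensions array unchanged; breaking it apart is left to the function in the next step.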

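3. Custom function to manipulate the custom properties

The MessageTableLoad() function defines the query that produces the content of a Message table with the following columns: eventName, itemId, timestamp, user, text, channel, conversationId.

– The serialize operator with row_number() assigns each event a row number (rn), so that the rows generated by the expansion can later be regrouped by event.

– The mv-expand operator is applied to the dynamic customProperties column so that each element of the array gets a separate row.

– The summarize step regroups those rows by rn, and the make_dictionary function merges the elements into a single dictionary (property bag) per event, so each custom property can be accessed by name.

– The where clause keeps only bot and user message events.

– The project step selects the basic properties (eventName, itemId, timestamp) together with user, text, channel, and conversationId, which come from the custom properties.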

```kusto
.create function MessageTableLoad() {
    Events
    // Number each event so that its expanded rows can be regrouped later
    | serialize rn = row_number()
    // One row per element of the customProperties array
    | mv-expand customProperties
    // Merge the single-key elements back into one property bag per event
    | summarize
        eventName = any(eventName),
        itemId = any(itemID),
        timestamp = any(timestamp),
        customProperties = make_dictionary(customProperties)
        by rn
    | where eventName == "MBFEvent.BotMessage" or eventName == "MBFEvent.UserMessage"
    | project eventName, itemId, timestamp,
        user = tostring(customProperties.userId),
        text = tostring(customProperties.text),
        channel = tostring(customProperties.channel),
        conversationId = tostring(customProperties.conversationId)
}
```
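To make the logic of MessageTableLoad() easier to follow, here is a minimal Python sketch that reproduces its steps on in-memory rows. The helper names (mv_expand, summarize_by_rn, message_table_load) and the sample events, including the non-message event name, are hypothetical; the sketch emulates the operators rather than calling Azure Data Explorer.

```python
from typing import Any

# Event names kept by the `where` clause of MessageTableLoad().
MESSAGE_EVENTS = {"MBFEvent.BotMessage", "MBFEvent.UserMessage"}


def mv_expand(rows: list[dict[str, Any]], column: str) -> list[dict[str, Any]]:
    """Emulate `serialize rn = row_number() | mv-expand <column>`.

    Each array element becomes its own row, tagged with the 1-based number
    of its source row. In this sketch, an empty array produces no rows.
    """
    expanded = []
    for rn, row in enumerate(rows, start=1):
        for element in row[column]:
            expanded.append({**row, "rn": rn, column: element})
    return expanded


def summarize_by_rn(rows: list[dict[str, Any]], column: str) -> list[dict[str, Any]]:
    """Emulate `summarize ..., <column> = make_dictionary(<column>) by rn`.

    The single-key dictionaries sharing an rn are merged into one bag. The
    other columns are identical within a group, which is why any() suffices.
    """
    groups: dict[int, dict[str, Any]] = {}
    for row in rows:
        if row["rn"] not in groups:
            groups[row["rn"]] = {**row, column: {}}
        groups[row["rn"]][column].update(row[column])
    return list(groups.values())


def message_table_load(events: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Sketch of MessageTableLoad(): expand, regroup, filter, project."""
    bags = summarize_by_rn(mv_expand(events, "customProperties"), "customProperties")
    messages = []
    for row in bags:
        if row["eventName"] not in MESSAGE_EVENTS:
            continue
        props = row["customProperties"]
        messages.append({
            "eventName": row["eventName"],
            "itemId": row["itemID"],
            "timestamp": row["timestamp"],
            # Like tostring() on a missing property, fall back to "".
            "user": str(props.get("userId", "")),
            "text": str(props.get("text", "")),
            "channel": str(props.get("channel", "")),
            "conversationId": str(props.get("conversationId", "")),
        })
    return messages


# Hypothetical Events rows, shaped like the output of the JSON mapping.
events = [
    {"eventName": "MBFEvent.UserMessage", "itemID": "item-001",
     "timestamp": "2019-05-01T10:00:00Z",
     "customProperties": [{"userId": "user-42"}, {"text": "Hello bot"},
                          {"channel": "webchat"}, {"conversationId": "conv-7"}]},
    {"eventName": "Hypothetical.OtherEvent", "itemID": "item-002",
     "timestamp": "2019-05-01T10:00:01Z",
     "customProperties": [{"userId": "user-42"}]},
    {"eventName": "MBFEvent.BotMessage", "itemID": "item-003",
     "timestamp": "2019-05-01T10:00:02Z",
     "customProperties": [{"text": "Hi! How can I help?"}, {"conversationId": "conv-7"}]},
]

for message in message_table_load(events):
    print(message)
```

The key step is the regrouping by rn: without a per-event identifier, the expanded key/value rows of different events could not be merged back into the right property bag.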

Conclusion

Azure Data Explorer with the Kusto Query Language makes it easy to parse complex nested structures. Once the ingestion rules are defined, the raw input data can be explored as a clean table.


The chatbot scenario is just one practical example of how to manage custom properties; the same approach can be applied to any application that sends custom telemetry to Application Insights.