This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Thanks to the following for their help: Dave Lusty, Clive Watson and Ofer Shezaf.

Many customers implement Web Application Firewalls (WAFs) to filter, monitor or block HTTP traffic to and from a web application, and there are many solutions available on the market. Many of these provide logging capabilities that can be utilised to detect malicious request traffic and then ‘log’ or ‘log and block’ that traffic before it reaches web applications.

When setting up an Azure Sentinel proof of concept for a customer, one of the tasks is to identify the data sources to connect to Azure Sentinel. Azure Sentinel comes with many connectors for both Microsoft and third-party solutions; however, there are still cases when a customer needs to integrate with third-party solutions that do not have a built-in connector.

A blog post authored by Ofer Shezaf details how integration with other solutions is possible using the two predominant mechanisms, Syslog and CEF. In that post he also highlights that his list is by no means definitive, given the large number of systems that support these mechanisms for getting data into a SIEM like Sentinel.

How to solve a problem like….

As with many things, there is usually a requirement that drives the need to look for a solution. In some cases the answer is simple and there is a single route that you can choose but, on this occasion, there was more than one option. So, what was that requirement? It was part of a proof of concept for a customer that I was working with: how to get Fastly WAF logs into Azure Sentinel.

In this blog I would like to discuss how to utilise other Azure services, such as Azure Functions, to process Fastly WAF log files and prepare them for ingestion into Azure Sentinel. I will discuss my initial attempt to achieve this with Logic Apps, and why building an Azure Function with C# code ultimately provided more flexibility. I will also include the Azure Function sample code and KQL queries to query the Fastly logs once they are ingested into the Azure Sentinel Log Analytics workspace.

Important Note:

Although I had a specific need to address the ingestion of Fastly WAF logs, there is plenty of scope to modify this for other solutions that can export their logs to Azure Blob storage.

Fastly WAF Logging options

Fastly supports real-time streaming of data via several different protocols that allow the logs to be sent to various locations, including Azure for storage and analysis. Fastly provide good guidance on how to achieve this and it was here that I started my journey. I used the following list of URLs to understand what was possible and more importantly how we could configure it:

- About Fastly’s Real-Time Log Streaming features

- Fastly WAF logging

- Setting up remote log streaming

- Log streaming – Microsoft Azure Blob Storage

Logging to Azure Storage

As you would expect, there were a few pre-requisites needed, and all the instructions are provided on the Log streaming – Microsoft Azure Blob Storage page:

- Create an Azure Storage Account in the target subscription.

- Create a Shared Access Signature (SAS) token with write access to the container within the Azure Storage account. See the relevant documentation on how to limit access to the storage account and review the best practices when using SAS tokens.

Once the configuration had been completed, files were being written into the container in the Azure Storage account at the configured interval, and I was ready to start thinking about getting the log files ingested into the Azure Log Analytics workspace that was being used by Azure Sentinel.

Option 1 – Azure Logic Apps

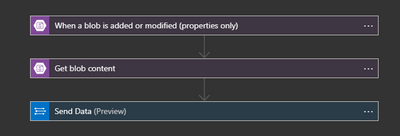

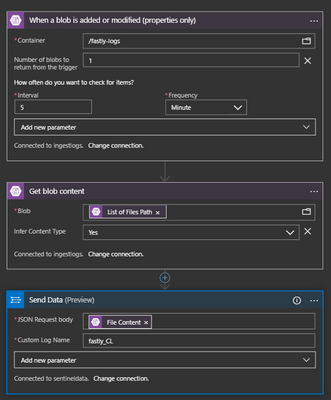

My first thoughts were to look at Azure Logic Apps as a way to get these log files into the workspace using the Azure Monitor HTTP Data Collector API. I built a straightforward Logic App that would be triggered when a file was written into the storage account container, get the contents and then send that to the workspace as shown below:

The first hurdle that needed to be overcome was getting the format of the logs correct so that the data could be queried with KQL. The default output created lines like the example below:

{"time": "1582227053","sourcetype": "fastly_req","event": {"type":"req","service_id":"14dPoQYLia9KKCecDcCqAQ","req_id":"ae48941988bff01d122db4108b5fcdc59bade6870659eb4fa95208b3bc11f0b4","start_time":"1582227053","fastly_info":"HIT-SYNTH","datacenter":"<NAME>","src":"<IP_ADDRESS>","req_method":"GET","req_uri":"/","req_h_host":"(null)","req_h_user_agent":"Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML| like Gecko) Chrome/72.0.3602.2 Safari/537.36","req_h_accept_encoding":"","req_h_bytes":"163","req_body_bytes":"0","resp_status":"301","resp_bytes":"350","resp_h_bytes":"350","resp_b_bytes":"0","duration_micro_secs": "679","dest":"","req_domain":"<FQDN>","app":"Fastly","vendor_product":"fastly_cdn","orig_host":"<FQDN>"}}

You can see that the line is essentially formed of two parts:

- {"time": "1582227053","sourcetype": "fastly_req","event": and a final closing “}” at the end of the line

- {"type":"req","service_id":"14dPoQYLia9KKCecDcCqAQ","req_id":"ae48941988bff01d122db4108b5fcdc59bade6870659eb4fa95208b3bc11f0b4","start_time":"1582227053","fastly_info":"HIT-SYNTH","datacenter":"<NAME>","src":"<IP_ADDRESS>","req_method":"GET","req_uri":"/","req_h_host":"(null)","req_h_user_agent":"Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML| like Gecko) Chrome/72.0.3602.2 Safari/537.36","req_h_accept_encoding":"","req_h_bytes":"163","req_body_bytes":"0","resp_status":"301","resp_bytes":"350","resp_h_bytes":"350","resp_b_bytes":"0","duration_micro_secs": "679","dest":"","req_domain":"<FQDN>","app":"Fastly","vendor_product":"fastly_cdn","orig_host":"<FQDN>"}

The second part is the section that we really wanted, so the configuration of the Log Format was updated to remove the first section and only output the second part.
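For illustration only, the same split can also be made in code rather than in the Fastly Log Format configuration. The sketch below is in Python rather than the C# used for the actual Function, and the helper name `unwrap_event` is hypothetical. It assumes the wrapped layout shown above, where the part of interest sits under the `event` key.

```python
import json


def unwrap_event(line: str) -> dict:
    """Return the inner "event" object of a wrapped Fastly log line.

    Lines that are already in the trimmed format (no "event" wrapper)
    are returned unchanged.
    """
    record = json.loads(line)
    return record.get("event", record)


# Shortened sample line; the field values are taken from the example above.
wrapped = (
    '{"time": "1582227053","sourcetype": "fastly_req",'
    '"event": {"type":"req","resp_status":"301"}}'
)
print(unwrap_event(wrapped))
```

Changing the Log Format at the source, as was done here, avoids this extra step and keeps the files smaller.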

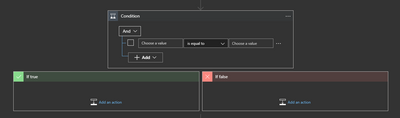

This ‘simple’ flow coped very well with a single-line file. However, the Fastly log files are multiline, so I started to look at adding an IF/THEN-type condition to process each line separately and write it to the workspace.

This approach started to get complicated and did not achieve the desired result, so I needed to look at other options.

Every Cloud has a silver lining..!

By chance, a colleague posted a message on an internal Teams channel saying that he had written an Azure Function that collects CSV files from Azure Blob Storage, processes the CSV and injects the rows one at a time into an Event Hub instance. [If you are interested in reviewing that solution, it's available on his GitHub.] This sounded very promising and I felt that he could help. A quick email later, we were talking about how we could evolve his scenario so that, instead of sending data from a CSV to Event Hub, it would write data into a Log Analytics workspace.

Option 2 – Azure Functions

The Azure Monitor HTTP Data Collector API was still going to be a key part of this solution, as was the Storage Blob trigger; only now we chose to use an Azure Function to process the Fastly WAF log files.

Before you get started, a few pieces of information are required prior to coding the Function. They can be added to local.settings.json for local testing:

- Connection String to the Azure Storage Account e.g. DefaultEndpointsProtocol=https;AccountName=<STORAGEACCOUNTNAME>;AccountKey=<KEY>;EndpointSuffix=core.windows.net

- Azure Log Analytics URL e.g. https://<yourworkspaceID>.ods.opinsights.azure.com/api/logs?api-version=2016-04-01

- Azure Log Analytics Workspace ID

- Azure Log Analytics Workspace Key

- Custom Log Name i.e. the table where the log data will be written to and in this case I used – fastly_CL
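Application settings on a Function App are exposed to the function code as environment variables, and the values in local.settings.json are loaded the same way when running locally. As a minimal sketch (in Python rather than the C# used for the actual Function), the five values could be read and validated as below. The setting names here are hypothetical; use whatever names you configure.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    storage_connection: str
    log_analytics_url: str
    workspace_id: str
    workspace_key: str
    log_name: str


# Hypothetical setting names: they only need to match what is configured
# in local.settings.json and in the Function App's application settings.
SETTING_NAMES = {
    "storage_connection": "FastlyStorageConnection",
    "log_analytics_url": "LogAnalyticsUrl",
    "workspace_id": "WorkspaceId",
    "workspace_key": "WorkspaceKey",
    "log_name": "LogName",
}


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read the five settings, failing early if any is missing or empty."""
    missing = [name for name in SETTING_NAMES.values() if not env.get(name)]
    if missing:
        raise KeyError("missing settings: " + ", ".join(missing))
    return Settings(**{field: env[name] for field, name in SETTING_NAMES.items()})
```

Failing at start-up with a list of the missing names is usually easier to diagnose than a failed request to the workspace later on.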

[There is a nice little tutorial to create a simple Azure Function using Visual Studio available in the Azure Functions documentation - https://docs.microsoft.com/en-us/azure/azure-functions/functions-create-your-first-function-visual-studio – to get started.]

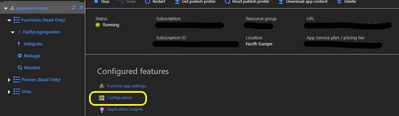

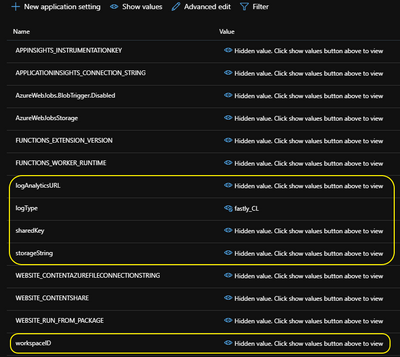

Using the Azure Portal I created a new Azure Function App and linked it to the same storage account that I had created for the Fastly logs. Once this had completed I needed to add new application settings to match the five pieces of information that I mentioned above. This is done by clicking the Configuration link and then adding the settings as shown in the screenshots below:

[Note: You can use whatever names you wish here, so long as they match the variables in the C# code.]

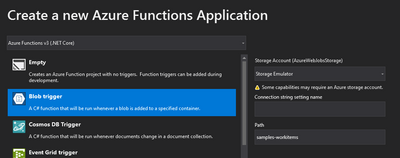

Using Visual Studio, Dave coded the function in C# using the Blob Trigger as shown below.

You can also use the dropdown on the Storage Account to select the target Storage account container (or leave the default for local testing) but you will also need the connection string and path for the target location.

Other than making the connection to the target container within the Azure Storage Account, we needed to manipulate the log file and output it as JSON and an example of the code is shown below:
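The original C# is not reproduced here. As a rough Python sketch of the same idea, the helper below (the name `iter_records` is hypothetical) takes the text of one log file and yields one JSON object per non-empty line. It assumes each line is a complete JSON object, as in the trimmed Fastly format described earlier.

```python
import json
from typing import Iterator


def iter_records(blob_text: str) -> Iterator[dict]:
    """Yield one parsed JSON object per non-empty line of a log file."""
    for number, line in enumerate(blob_text.splitlines(), start=1):
        line = line.strip()
        if not line:
            continue  # tolerate blank lines, e.g. a trailing newline
        try:
            yield json.loads(line)
        except json.JSONDecodeError as exc:
            # Report which line failed so that a bad file is easy to inspect.
            raise ValueError(f"line {number} is not valid JSON: {exc}") from exc


sample = '{"type":"req","resp_status":"301"}\n\n{"type":"req","resp_status":"200"}\n'
for record in iter_records(sample):
    print(record["resp_status"])
```

Processing line by line is what the Logic App approach struggled with; in code it is a simple loop.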

Finally, using the HTTP Data Collector API, each line is written separately into the custom fastly_CL table. Sample code as follows:
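The original C# is not reproduced here, so the following is only a Python sketch of how a single record can be prepared for the HTTP Data Collector API. It builds the SharedKey authorization header (an HMAC-SHA256 over the method, content length, content type, date header and resource path) and returns an unsent request. The function names are hypothetical. Note that the API appends `_CL` to the value of the `Log-Type` header, so `fastly` is passed here to land in the fastly_CL table.

```python
import base64
import hashlib
import hmac
import json
from datetime import datetime, timezone
from typing import Optional
from urllib.request import Request

API_RESOURCE = "/api/logs"
API_VERSION = "2016-04-01"
CONTENT_TYPE = "application/json"


def build_signature(workspace_id: str, workspace_key: str,
                    date: str, content_length: int) -> str:
    """Build the SharedKey authorization header value."""
    string_to_sign = (
        f"POST\n{content_length}\n{CONTENT_TYPE}\n"
        f"x-ms-date:{date}\n{API_RESOURCE}"
    )
    digest = hmac.new(
        base64.b64decode(workspace_key),   # the workspace key is base64 encoded
        string_to_sign.encode("utf-8"),
        hashlib.sha256,
    ).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode()}"


def build_request(workspace_id: str, workspace_key: str, log_type: str,
                  record: dict, now: Optional[datetime] = None) -> Request:
    """Return an unsent POST request carrying one log record."""
    body = json.dumps(record).encode("utf-8")
    now = now or datetime.now(timezone.utc)   # must be a UTC time
    date = now.strftime("%a, %d %b %Y %H:%M:%S GMT")  # RFC 1123 format
    url = (
        f"https://{workspace_id}.ods.opinsights.azure.com"
        f"{API_RESOURCE}?api-version={API_VERSION}"
    )
    headers = {
        "Content-Type": CONTENT_TYPE,
        "Authorization": build_signature(workspace_id, workspace_key, date, len(body)),
        "Log-Type": log_type,   # the service appends _CL to this name
        "x-ms-date": date,
    }
    return Request(url, data=body, headers=headers, method="POST")
```

Passing the result to `urllib.request.urlopen` would send it; the API responds with HTTP 200 when the record is accepted. The API also accepts a JSON array in a single request, so sending one record per call, as described here, is a simplicity choice rather than a requirement.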

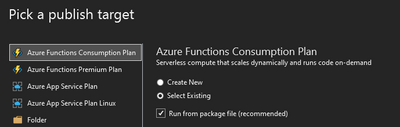

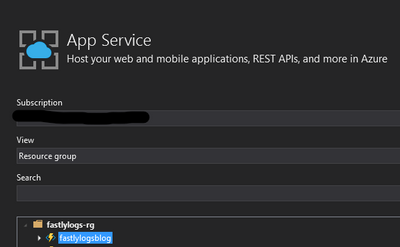

With the code complete, it was published to an Azure Functions Consumption Plan. As I had already created the Function App, the existing plan was used.

Once the deployment has been completed, go to the Azure Function in the Azure Portal.

One final step that is worth configuring is Application Insights, to monitor the execution of the Function. You can create a new App Insights instance or use an existing one.

Let’s have a look at the data

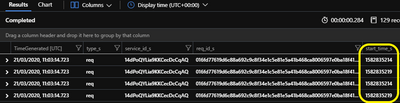

With the Azure Function configured, running and being monitored, it is time to have a look at the data in the fastly_CL table in the workspace where the data has been written.

There was one other thing that we needed to consider in the queries: the TimeGenerated value is the time the data was ingested, not the time of the request. The logs include a start_time_s field in Unix epoch time, which we can use in our queries once it has been converted to a UTC datetime. KQL provides scalar functions for this, such as unixtime_milliseconds_todatetime(). Note that the sample value shown earlier (1582227053) is in seconds, so it must either be multiplied by 1,000 first or be converted with unixtime_seconds_todatetime() instead, for example: extend EventTime = unixtime_seconds_todatetime(tolong(start_time_s)).

[Note: As this was a PoC, I wanted to keep the function as simple as possible, but I should note that it would be possible and best practice to do the time conversion with the function prior to the ingestion of the data.]
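As a sketch of what that pre-ingestion conversion might look like (in Python rather than the C# used for the actual Function, with a hypothetical helper name and output field name), the epoch value can be turned into an ISO 8601 UTC timestamp before the record is sent. This assumes `start_time` holds whole seconds, as in the sample line shown earlier.

```python
from datetime import datetime, timezone


def add_event_time(record: dict) -> dict:
    """Return a copy of the record with an ISO 8601 UTC "event_time" field.

    Assumes record["start_time"] is a Unix epoch value in whole seconds,
    stored as a string as in the Fastly sample line.
    """
    enriched = dict(record)  # leave the caller's record untouched
    seconds = int(record["start_time"])
    enriched["event_time"] = datetime.fromtimestamp(
        seconds, tz=timezone.utc
    ).strftime("%Y-%m-%dT%H:%M:%SZ")
    return enriched


print(add_event_time({"type": "req", "start_time": "1582227053"})["event_time"])
```

The HTTP Data Collector API also accepts an optional `time-generated-field` request header naming a field that holds an ISO 8601 timestamp; if it were set to this field, TimeGenerated would reflect the request time rather than the ingestion time.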

Now that the data is available to us in Azure Sentinel, it is possible to craft the necessary queries and alerts. Although not a specific requirement for the PoC, the data and queries can also be utilised to build a workbook to visualise the data, which would definitely be useful later.

As I mentioned at the start of this blog post, this specific scenario was to get Fastly WAF log data into Azure Sentinel, but the 'solution' could be adapted for other log sources which can export to Azure Blob Storage. With that in mind I have made the code for the Azure Function available in a GitHub repo if you would like to review, adapt, etc.

This was a very interesting piece of work to have been involved with and I hope that it will be of use to others.