This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

When you transform data in Azure Data Factory (ADF) with Azure SQL Database, you can choose either to enforce a validated schema or to allow "schema drift". If your source or sink database schemas will change over time, you may want to build data flows that use flexible schema patterns. This post demonstrates the technique with four features: schema drift, column patterns, late binding, and auto-mapping.

Late Binding

Start with a new data flow and add an Azure SQL Database source dataset. Make sure your dataset does not import the schema and that your source has no projection. This is key to allowing your flows to use "late binding" for database schemas that change.
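To illustrate what "late binding" means, here is a minimal conceptual model in Python (not ADF code; `infer_schema` is a hypothetical name). Nothing is declared up front: the column names and types are discovered from the rows that actually arrive, so a new column simply appears in the result.

```python
from typing import Any


def infer_schema(rows: list[dict[str, Any]]) -> dict[str, str]:
    """Derive column names and type names from the data at run time.

    Columns are listed in order of first appearance. A column whose values
    have more than one Python type is reported as "mixed".
    """
    schema: dict[str, str] = {}
    for row in rows:
        for name, value in row.items():
            type_name = type(value).__name__
            if name not in schema:
                schema[name] = type_name
            elif schema[name] != type_name:
                schema[name] = "mixed"
    return schema


# The second row carries a column the first one lacks; no schema was declared.
rows = [
    {"id": 1, "title": "Heat"},
    {"id": 2, "title": "Ronin", "year": 1998},
]
print(infer_schema(rows))  # {'id': 'int', 'title': 'str', 'year': 'int'}
```

An "early binding" design would instead compare each row against a schema fixed in advance, which is exactly what leaving the dataset schema and source projection empty avoids.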

Schema Drift

On both the Source and Sink transformations, turn on "Allow schema drift" so that ADF lets any column flow through, regardless of its "early binding" definition in the dataset or projection. Because this scenario includes no schema or projection, schema drift is required. If your scenario requires a hardened schema instead, import the schema in the dataset, define a source projection, and turn on "Validate schema".

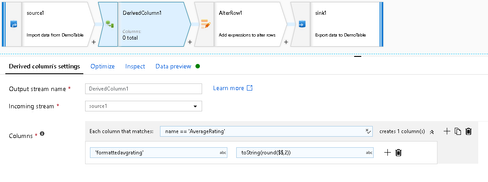
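The difference between the two settings can be modeled roughly as follows. This is a simplified Python sketch under stated assumptions, not ADF's implementation: `read_source` is a hypothetical name, "validate" is modeled as failing on any difference between incoming and declared columns, and drifted columns are modeled as dropped when drift is off.

```python
from typing import Any

Row = dict[str, Any]


def read_source(rows: list[Row], projection: list[str],
                allow_schema_drift: bool, validate_schema: bool) -> list[Row]:
    """Simplified model of the "Allow schema drift" and "Validate schema" options."""
    declared = set(projection)
    out: list[Row] = []
    for row in rows:
        incoming = set(row)
        if validate_schema and incoming != declared:
            # Hardened schema: any mismatch fails the run.
            raise ValueError(
                f"schema mismatch: expected {sorted(declared)}, got {sorted(incoming)}"
            )
        if allow_schema_drift:
            # Every column flows through, whether or not it was declared.
            out.append(dict(row))
        else:
            # Only projected columns survive; drifted columns are dropped.
            out.append({k: v for k, v in row.items() if k in declared})
    return out


rows = [{"id": 1, "title": "Heat", "year": 1995}]
print(read_source(rows, ["id", "title"], allow_schema_drift=True, validate_schema=False))
# [{'id': 1, 'title': 'Heat', 'year': 1995}]
print(read_source(rows, ["id", "title"], allow_schema_drift=False, validate_schema=False))
# [{'id': 1, 'title': 'Heat'}]
```

With an empty projection, as in this post, only the drift-enabled path keeps any columns at all, which is why schema drift is required here.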

Column Patterns

When you work with flexible schemas and schema drift, avoid referring to specific column names in your data flow metadata. Instead, when transforming data and writing Derived Column expressions, use "column patterns". A column pattern matches columns by name, data type, ordinal position, or a combination of these characteristics, so the same transformation applies to whatever columns arrive at run time.
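The idea behind a column pattern can be sketched in Python as a matching condition plus a value transformation, applied to every column that matches. This is a conceptual model, not ADF's expression language (where, roughly speaking, `$$` stands for the matched column); `apply_column_pattern` is a hypothetical name, and the 1-based position is an assumption of this sketch.

```python
from typing import Any, Callable

Row = dict[str, Any]
# A matching condition sees the column's name, type name, and 1-based position.
Condition = Callable[[str, str, int], bool]


def apply_column_pattern(row: Row, matches: Condition,
                         transform: Callable[[Any], Any]) -> Row:
    """Transform every column whose characteristics satisfy `matches`.

    Non-matching columns pass through unchanged; no column name is hard-coded.
    """
    out: Row = {}
    for position, (name, value) in enumerate(row.items(), start=1):
        if matches(name, type(value).__name__, position):
            out[name] = transform(value)
        else:
            out[name] = value
    return out


row = {"id": 7, "title": "heat", "director": "mann", "year": 1995}

# Match on type: upper-case every string column, whatever it is called.
print(apply_column_pattern(row, lambda name, typ, pos: typ == "str", str.upper))
# {'id': 7, 'title': 'HEAT', 'director': 'MANN', 'year': 1995}

# Match on a combination: string columns after position 2 only.
print(apply_column_pattern(row, lambda name, typ, pos: typ == "str" and pos > 2, str.upper))
# {'id': 7, 'title': 'heat', 'director': 'MANN', 'year': 1995}
```

Because the condition inspects column characteristics rather than fixed names, a new string column added to the source later would be picked up without changing the flow.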

Auto-Mapping

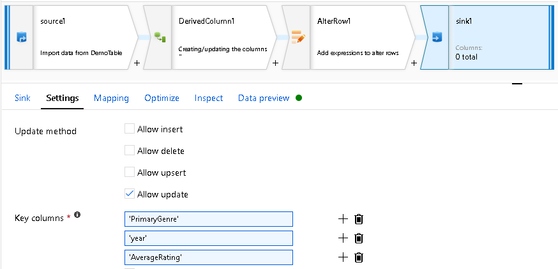

On the Sink transformation, map incoming fields to outgoing fields with "auto-mapping". Defining specific, hardened mappings is "early binding", and here you want to stay flexible with "late binding".
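Conceptually, auto-mapping means the sink's column list is taken from the incoming data and matched to target columns by name at run time. The Python sketch below models that idea by building a parameterized T-SQL `INSERT` from whatever fields a row carries. It is an illustration only: ADF generates its own SQL, and `auto_map_insert` and `quote_ident` are hypothetical helpers.

```python
from typing import Any


def quote_ident(name: str) -> str:
    """Bracket-quote a T-SQL identifier, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def auto_map_insert(table: str, row: dict[str, Any]) -> tuple[str, list[Any]]:
    """Build a parameterized INSERT whose columns come from the row itself."""
    columns = list(row)
    column_list = ", ".join(quote_ident(c) for c in columns)
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {quote_ident(table)} ({column_list}) VALUES ({placeholders})"
    return sql, [row[c] for c in columns]


sql, params = auto_map_insert("movies", {"id": 3, "title": "Thief", "year": 1981})
print(sql)     # INSERT INTO [movies] ([id], [title], [year]) VALUES (?, ?, ?)
print(params)  # [3, 'Thief', 1981]
```

No mapping is written down anywhere, so a column added upstream flows into the statement automatically; the trade-off is that the target table must already accept that column.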

One last note: in the video above, I demonstrate using the Alter Row transformation to update existing database rows. When using fully schema-less datasets, you must enter the primary key columns in your sink using Dynamic Content. Simply refer to the target database key columns by name, as strings.
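Why the key columns must be supplied by name can be seen in a small model of a keyed update: with no imported schema, nothing else tells the sink which columns identify a row. The Python sketch below is a conceptual illustration only, not what ADF executes; `build_update` and `quote_ident` are hypothetical helpers, and the key list is passed as plain strings.

```python
from typing import Any


def quote_ident(name: str) -> str:
    """Bracket-quote a T-SQL identifier, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def build_update(table: str, row: dict[str, Any],
                 key_columns: list[str]) -> tuple[str, list[Any]]:
    """Build a parameterized UPDATE keyed on columns named as strings."""
    missing = [k for k in key_columns if k not in row]
    if missing:
        raise KeyError(f"key columns not in incoming row: {missing}")
    keys = set(key_columns)
    # Every non-key column in the incoming row is updated (late-bound).
    set_cols = [c for c in row if c not in keys]
    if not set_cols:
        raise ValueError("row has no non-key columns to update")
    set_clause = ", ".join(f"{quote_ident(c)} = ?" for c in set_cols)
    where_clause = " AND ".join(f"{quote_ident(k)} = ?" for k in key_columns)
    sql = f"UPDATE {quote_ident(table)} SET {set_clause} WHERE {where_clause}"
    params = [row[c] for c in set_cols] + [row[k] for k in key_columns]
    return sql, params


sql, params = build_update("movies", {"id": 3, "title": "Thief", "year": 1981}, ["id"])
print(sql)     # UPDATE [movies] SET [title] = ?, [year] = ? WHERE [id] = ?
print(params)  # ['Thief', 1981, 3]
```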