This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Data loading is the first experience data engineers go through when onboarding SQL analytics workloads using Azure Synapse. We recently released the COPY statement to ensure this experience is simple, flexible and fast.

To maximize bulk loading performance with SQL pools, we recommend loading multiple files at once with the COPY statement so that they are processed in parallel. File-splitting guidance is outlined in the following documentation. This blog covers how to split CSV files residing in your data lake using Azure Data Factory Mapping Data Flows within your data pipeline.

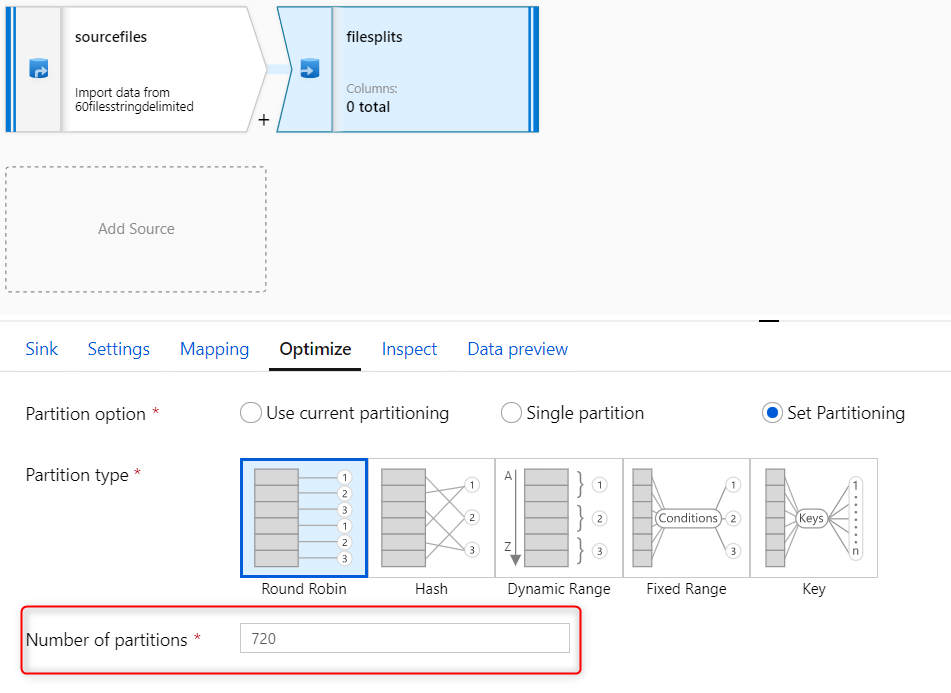

This example uses a SQL pool configured at DW6000c, which calls for 720 file splits for optimal load performance, so we will generate 720 file splits from 60 source files. It assumes you already have CSV files to split in your data lake and a data factory in which to create your data pipeline.

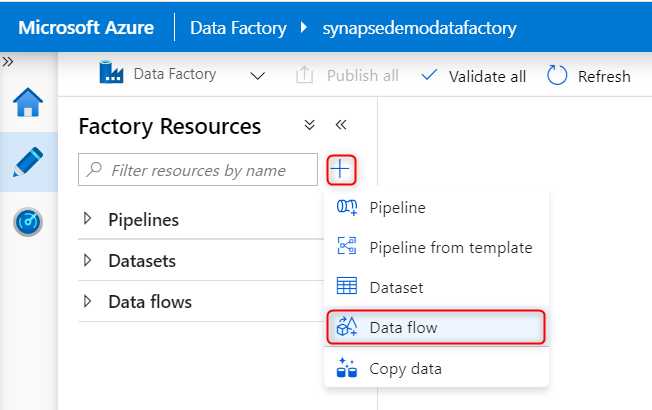
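The arithmetic behind the 720 figure can be sketched in a few lines. This is a minimal sketch, not an official formula: it assumes the recommended split count equals the 60 distributions of a SQL pool multiplied by the number of compute nodes, and the node counts in the table are quoted from memory of the service-level documentation, so check them against the current docs. The function names are hypothetical.

```python
# Assumption: a SQL pool has 60 distributions and the recommended number of
# file splits is 60 per compute node. Verify against the official guidance.
DISTRIBUTIONS = 60

# Assumption: compute node counts for a few service levels (not from this post).
COMPUTE_NODES = {
    "DW1000c": 2,
    "DW6000c": 12,
    "DW30000c": 60,
}


def recommended_file_splits(service_level: str) -> int:
    """Return the suggested number of file splits for a service level."""
    return DISTRIBUTIONS * COMPUTE_NODES[service_level]


def splits_per_source_file(service_level: str, source_files: int) -> float:
    """Return how many output files each source file contributes on average."""
    return recommended_file_splits(service_level) / source_files


print(recommended_file_splits("DW6000c"))      # 720
print(splits_per_source_file("DW6000c", 60))   # 12.0
```

For the example in this post, each of the 60 source files ends up contributing about 12 splits on average.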

1. Navigate to your Azure Data Factory and add a data flow

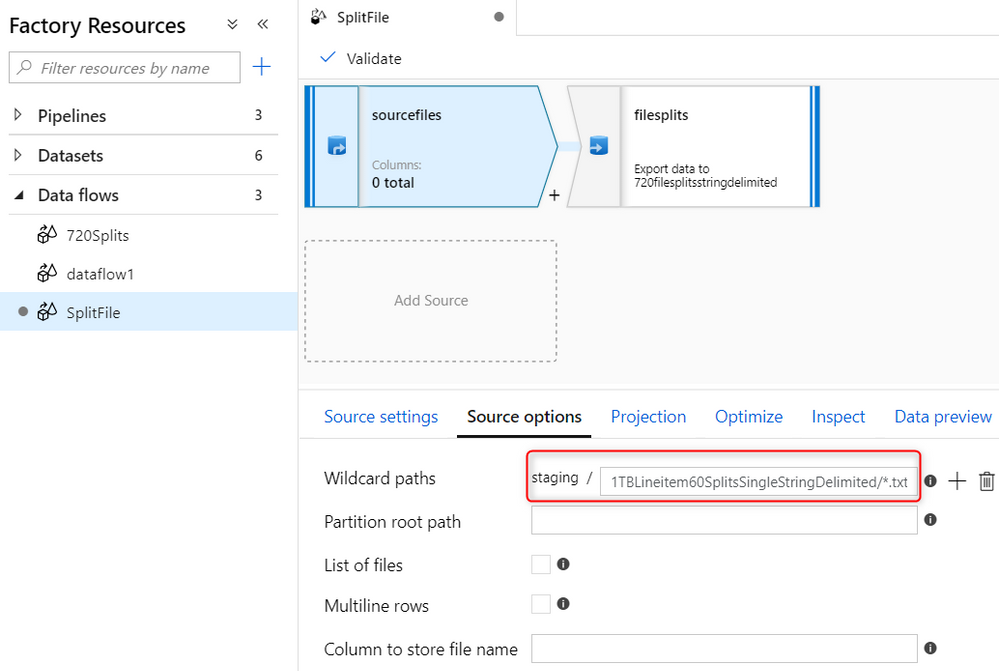

2. Configure the data flow source to point to where your file(s) are stored

In this example, the container is “staging”, and a wildcard path selects the 60 text files in the 1TBLineitem60SplitsSingleStringDelimited folder.

3. Add a sink after your source that points to where you will store your file splits, and specify the number of partitions (each partition becomes one file split)

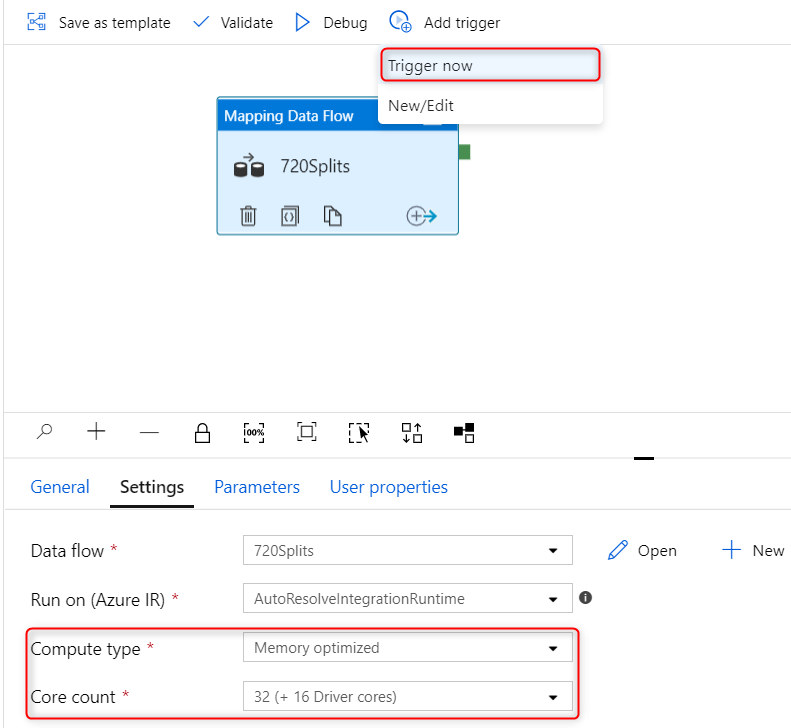

4. Add your data flow to a pipeline, configure your compute for your data flow, and manually trigger your pipeline

Using “Trigger now” to start the split process runs the data flow on the compute you specified. Note that using “Debug” instead runs on your debug cluster, which by default is an eight-core general compute cluster.

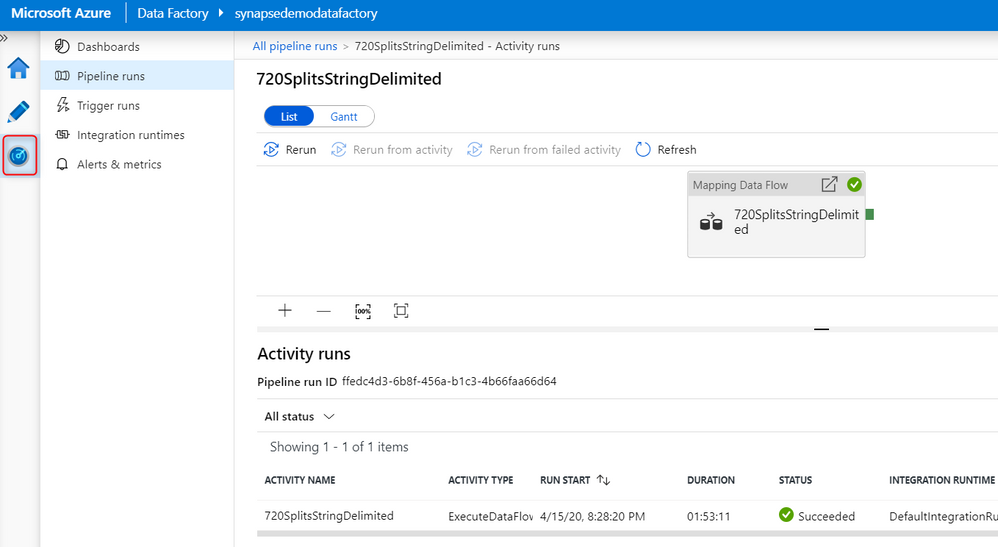

5. You can monitor your pipeline and data flow by going to the “Monitor” tab

This process works for compressed files as well; you only need to specify the compression type in the source and sink datasets.
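To make concrete what the partitioned sink produces, here is a small local sketch of the same idea: rows from one CSV file are distributed round-robin across a fixed number of output files, optionally gzip-compressed. It is only an illustration and does not reflect how Mapping Data Flows partition data internally. The function name `split_csv`, the `part-00000.csv` naming, the comma delimiter, and the absence of a header row are all assumptions.

```python
import csv
import gzip
from pathlib import Path
from typing import List


def split_csv(source: Path, out_dir: Path, partitions: int,
              delimiter: str = ",", compressed: bool = False) -> List[Path]:
    """Distribute the rows of one CSV file round-robin across `partitions` files.

    Assumes the source file has no header row.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    suffix = ".csv.gz" if compressed else ".csv"
    paths = [out_dir / f"part-{i:05d}{suffix}" for i in range(partitions)]

    # gzip.open in text mode mirrors the built-in open for our purposes.
    opener = gzip.open if compressed else open
    handles = [opener(p, "wt", newline="") for p in paths]
    try:
        writers = [csv.writer(h, delimiter=delimiter) for h in handles]
        with source.open(newline="") as src:
            reader = csv.reader(src, delimiter=delimiter)
            for row_number, row in enumerate(reader):
                # Row 0 goes to file 0, row 1 to file 1, and so on, wrapping around.
                writers[row_number % partitions].writerow(row)
    finally:
        for handle in handles:
            handle.close()
    return paths
```

Calling `split_csv(Path("lineitem.csv"), Path("splits"), 12)` on a hypothetical local file would write 12 roughly equal files. Because the sketch keeps every output file open at once, a very large partition count may run into the operating system's open-file limit.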

You can then run your pipeline on a schedule to split files as they land in your data lake, and add a Copy activity that uses the COPY command to load the splits into your SQL pool. For additional data loading best practices, refer to the following documentation.
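For readers who want to see roughly what the final load looks like, the sketch below assembles a COPY statement that points at the folder of file splits with a wildcard. The helper `build_copy_statement`, the table `dbo.lineitem`, the storage account `mystorageaccount`, and the `lineitem-splits` folder are hypothetical placeholders. Authentication options are deliberately left out, so a real statement would also need a credential appropriate to your storage account.

```python
from typing import Optional


def build_copy_statement(table: str, source_url: str,
                         field_terminator: str = ",",
                         compression: Optional[str] = None) -> str:
    """Assemble a COPY INTO statement for CSV file splits.

    The caller is responsible for passing trusted values; this helper does
    no escaping and is meant for illustration only.
    """
    options = [
        "FILE_TYPE = 'CSV'",
        f"FIELDTERMINATOR = '{field_terminator}'",
    ]
    if compression:
        # For example 'Gzip' when the splits were written compressed.
        options.append(f"COMPRESSION = '{compression}'")
    joined = ",\n    ".join(options)
    return f"COPY INTO {table}\nFROM '{source_url}'\nWITH (\n    {joined}\n)"


# Hypothetical names: replace the account, container path, and table with your own.
statement = build_copy_statement(
    table="dbo.lineitem",
    source_url="https://mystorageaccount.blob.core.windows.net/staging/lineitem-splits/*.csv",
    field_terminator="|",
)
print(statement)
```

Pointing the statement at a wildcard path rather than a single file is what lets the SQL pool read all of the splits in parallel.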