This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Humans of IT is thrilled to feature an Accessibility-focused content series, where we highlight a number of inspiring stories from Microsoft MVPs, employees and community members to share how accessible technology has helped them succeed and feel empowered in the workplace. Today, we continue the series with

Meet Akira Hatsune - this is his story:

Hello, I'm Akira Hatsune, Microsoft MVP for Windows Development. I'm a Lead Architect at a Japanese development company, where I design systems and write code. I'm passionate about Tech for Good projects and actively work on them, because my goal is to build systems that help people live richer lives through IT. Right now, we are developing a communication tool for people with hearing impairments that uses speech recognition.

In this post, I'll be going through a recap of my session originally titled "The Current State of Rational Consideration and ICT Utilization in the Enterprise and Practical Examples," which was presented at Microsoft Ignite the Tour 2019/2020 in Tokyo and Osaka.

In Japan, a law called the Law to Eliminate Discrimination against Persons with Disabilities has been enacted. It requires companies to provide "reasonable accommodation" to persons with disabilities.

Providing reasonable accommodation means that when a person with a disability tells you they need accommodations to manage the challenges of living or working with a disability, you as an employer must respond "to the extent that the burden is not too great". For that reason, I am in charge of developing applications that use ICT (information and communication technology) to reduce the human burden of making these accommodations, so that communication is equally effective whether or not someone has a hearing impairment.

Encounter with real-world sensing

To explain how this application came about, I have to start with my first encounter with Microsoft Kinect. For some time now, I've been developing apps that use sensors such as Kinect to trace body movements and translate them into a different representation, making the movement visible. This is a personal passion project that I pursue outside of my corporate work. Past work includes the KineBrick EV3, which detects the movement of both arms with the Kinect and then reproduces it with a LEGO MINDSTORMS EV3.

Watch the demo: https://www.youtube.com/watch?v=oLFFggZ0mcI

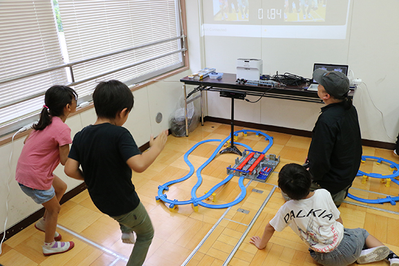

This app helps children with disabilities who cannot observe their own body movements objectively: they see those movements mirrored directly in the movements of the EV3. As they gain experience, they become able to grasp their own body movements even without the Kinect or EV3. Another piece converts the accuracy of a runner's form, as detected by the Kinect, into the speed of a model train, so that model trains race in place of the people.

Watch the demo here: https://www.youtube.com/watch?v=nT1Yy21z_jo

When this work was exhibited at an elementary school, the children who took part were at first simply thrilled at the prospect of racing and beating their opponents. As they played, however, they realized that running with better form made their train go faster along the flat track. They began checking their form repeatedly before the next race, and even before starting to run they watched their reflection on the display to check how they swung their arms and raised their knees. Nobody had taught them to observe themselves objectively and review their own performance the way a professional athlete does.

This is how I became interested in providing value to children who are not good at seeing themselves objectively, by using Kinect to expand what they can observe about themselves.

Encounters with speech recognition

In my work at the company, however, projects that involved directly sensing the real world were hard to come by. The turning point came five years ago (2015). My boss, who knew I was developing Kinect apps on my own time, asked me whether there was any way to ease the strain of communicating with our hearing-impaired colleagues.

Initially, we looked at approaches such as using Kinect to read sign language and turn it into speech. However, hearing people's inability to understand sign language is not what causes problems at work.

The real problem was that people with hearing impairments found it difficult to grasp what was being said in real time in meetings and other settings where many people speak at once. Until then, this had been handled by having employees take turns typing the content of the meeting on a computer on the spot (PC summary writing). However, this made it difficult for the person typing to speak in the meeting themselves, and it also demanded a great deal of concentration.

That is when I turned my attention to speech recognition technology, whose accuracy was improving rapidly at the time. If speech can be recognized in real time and converted to text on the spot, there is no need for a PC summary writer, which frees up one more person to contribute to the meeting.

Development of speech recognition communication applications

There are two aspects to the development of this app. The first is AI development, where we need to raise the speech recognition rate to a practical level. The second is UX development, which makes the app more intuitive and easier to use for people with hearing impairments.

For speech recognition, we decided to rely on an external vendor's speech recognition engine, such as Microsoft Cognitive Services (at the time, Bing Speech API). Our aim was not to develop a speech recognition engine, but to develop an application that uses one as a means of communicating smoothly with people with hearing impairments. So we decided to focus our development resources on improving the UX.

We pursued ease of use through discussions with developers who have hearing impairments (these discussions doubled as UX verification). When we actually tried real-time speech recognition, we learned a lot:

- To some extent, readers with hearing impairments can compensate for typos and omissions themselves.

- If recognition is slow, the text lags behind facial expressions, gestures, and materials, and comprehension of what was said drops.

- Many people with hearing impairments also have difficulty speaking, so they need an input method other than speech recognition.

- When many people are speaking, the speaker is not always the person the hearing-impaired participant is looking at, so the app needs to account for the reader not knowing who is speaking.

About our application

The application we developed focuses on reducing the time lag between speech and the display of speech recognition results. To make it easier for people with hearing impairments to join the conversation, it also lets them express their feelings quickly and easily, for example by sending stamps in addition to keyboard input.

From speech recognition to automatic speech translation

When we put the app on the market, many people adopted it. In larger companies, however, the decision to adopt the app may rest with a department that is removed from the front line, rather than with the teams that struggle daily to communicate with deaf and hard of hearing people. In such cases, the decision makers may not be able to imagine what it is like to be deaf, or to not understand what is being said in front of them.

Therefore, we decided to let them experience both the daily hardship of hearing-impaired people and the relief the application brings, by showing words spoken in a language they don't understand as real-time text in their native language.

By trying this system at events and similar occasions, people who have little contact with hearing-impaired people can come to understand, as something that affects them personally, the importance of information assurance through speech recognition and of reasonable accommodation.

To provide a means of translation to the community

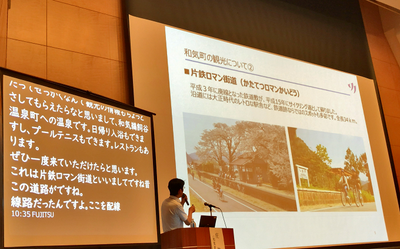

One day, a fellow developer who is hearing impaired came to me with concerns about joining the developer community. The JXUG (Japan Xamarin User Group) community he wanted to attend was hosted by a Microsoft MVP I knew, so I contacted the organizer on social media on the spot, and we agreed to provide information assurance using speech recognition at the seminar.

Of course, I'm sure organizers are willing to accept requests from people who are not Microsoft MVPs too, but I think one of the values of being a Microsoft MVP is knowing such a diverse group of community leaders and being able to reach each other easily. Since then, we have provided information assurance through subtitles to various communities.

We have fed what we learned from providing subtitles at these community events back into the app, which has made providing subtitles steadily easier. Now, communities such as HoloMagicians, where I am one of the organizers, provide real-time translated subtitles. This makes it easier for the Japanese developer community to enjoy sessions by speakers from overseas.

I would like to see a world where speech recognition is everywhere, where information is always available as text, and where real-time translation keeps language differences from becoming a barrier to communication. I hope to work with the developer community and my fellow Microsoft MVPs to make that happen.

#HumansofIT

#AccessibilityforAll