This post has been republished via RSS; it originally appeared at: AI Customer Engineering Team articles.

Authors: Wolfgang M. Pauli and Manash Goswami

AI applications perform tasks that emulate human intelligence, producing predictions that help us make better decisions in a given scenario. This drives operational efficiency, since a machine can execute the task without concerns about fatigue or safety. The effectiveness of an AI application, however, depends on the accuracy of the model used to address the end-user scenario.

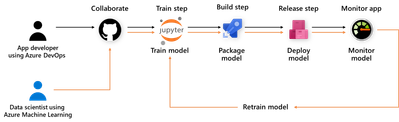

Building an accurate model, packaging it in an application, and executing it in the target environment requires many components to be integrated into one pipeline, e.g. data collection, training, packaging, deployment, and monitoring. Data scientists and IT engineers need to monitor this pipeline so they can adjust to changing conditions and rapidly make, validate, and deploy updates in the production environment.

This continuous integration and continuous delivery (CI/CD) process needs to be automated for efficient management and control. Automation also improves developer agility by shortening the cycle to update and deploy the application.

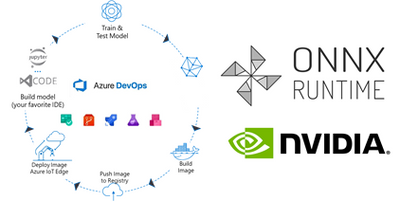

Today, we are introducing a reference implementation for a CI/CD pipeline built using Azure DevOps to train a CNN model, package the model in a docker image, and deploy it to a remote device using Azure IoT Edge for ML inference on the edge device. We will train a TinyYolo Keras model with the TensorFlow backend. The trained model is converted to ONNX and packaged with ONNX Runtime to run on the edge device.

The sample is published here.

Before we get started, here are a few concepts about the tools we are using in this sample:

What is Azure DevOps?

Azure DevOps is a collection of tools that allows developers to set up pipelines for the different steps in the development lifecycle. Developers can automate and iterate on software development to ship high-quality applications.

ONNX and ONNX Runtime for ML on Edge device

ONNX (Open Neural Network Exchange) is a common format for neural networks that serves as a framework-agnostic representation of the network’s execution graph. Models in ONNX format allow us to create a framework-independent pipeline for packaging and deployment across different hardware (HW) configurations on the edge devices.

ONNX Runtime is the inference engine used to execute models in ONNX format. ONNX Runtime is supported on different OS and HW platforms. The Execution Provider (EP) interface in ONNX Runtime enables easy integration with different HW accelerators. There are packages available for x86_64/amd64 and aarch64. Developers can also build ONNX Runtime from source for any custom configuration. ONNX Runtime can be used across a diverse set of edge devices, and the application code can use the same API surface on all of them to manage and control inference sessions.

This flexibility, to train on any framework and deploy across different HW configurations, makes ONNX and ONNX Runtime ideal for our reference architecture: train once and deploy anywhere.
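To make the "same API surface" point concrete, here is a minimal sketch of how application code might open an inference session and let ONNX Runtime use whichever Execution Providers the installed build offers. The preference order and the helper names `pick_providers` and `run_once` are our own assumptions for illustration, not part of the sample.

```python
from typing import List, Sequence

# Assumed preference order for a Jetson-class device: GPU-backed providers
# first, with the CPU provider as the fallback.
PREFERRED_PROVIDERS = (
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "CPUExecutionProvider",
)


def pick_providers(available: Sequence[str],
                   preferred: Sequence[str] = PREFERRED_PROVIDERS) -> List[str]:
    """Return the preferred providers that are actually available, in order."""
    chosen = [p for p in preferred if p in available]
    # Fall back to CPU so session creation never receives an empty list.
    return chosen or ["CPUExecutionProvider"]


def run_once(model_path: str, input_array):
    """Create a session and run one inference (requires onnxruntime)."""
    # Imported lazily so pick_providers can be used without onnxruntime installed.
    import onnxruntime as ort

    providers = pick_providers(ort.get_available_providers())
    session = ort.InferenceSession(model_path, providers=providers)
    input_name = session.get_inputs()[0].name
    # Passing None as the output list returns every model output.
    return session.run(None, {input_name: input_array})
```

The application code stays the same whether the device offers TensorRT, CUDA, or only the CPU provider; only the list returned by `pick_providers` changes.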

Pre-requisites and setup

Before you get started with this sample, you will need to be familiar with Azure DevOps Pipelines, Azure IoT and Azure Machine Learning concepts.

Azure account: Create an Azure account at https://portal.azure.com. A valid subscription is required to run the jobs in this sample.

Devices: There are many options for Edge HW configurations. In our example, we will use two devices from the Jetson portfolio – they can be any of Nano / TX1 / TX2 / Xavier NX / AGX Xavier. One device will be the dev machine to run the self-hosted DevOps agent, and the other will be the test device to execute the sample.

- Dev Machine: This machine will be used to run the jobs in the CI/CD pipeline. It requires the following tools to be installed:

  - Azure DevOps agent: Since the test device is based on the Ubuntu/ARM64 platform, we will set up a self-hosted Azure DevOps agent on one of the devices to build the ARM64 docker images. Another approach is to set up a docker cross-build environment in Azure, which is beyond the scope of this tutorial.

  - Azure IoT Edge Dev Tool: The IoT Edge Dev Tool (iotedgedev) helps to simplify the development process for Azure IoT modules. Instead of setting up the dev machine as an IoT Edge endpoint with all the tools and dependencies, we will install the IoT Edge Dev container. This greatly simplifies the dev-debug-test loop, letting us validate the inner loop of this CI/CD pipeline on the device before pushing the docker images to the remote IoT endpoint. You will need to manually set up the iotedgedev tool on this arm64 device.

  - AzureML SDK for Python: This SDK enables access to AzureML services and assets from the dev machine. It is required to pull the re-trained model from the AzureML registry so that it can be packaged in the docker image for the IoT Edge module.

- Test Device: This device is used to deploy the docker containers with the AI model. It will be set up as an IoT Edge endpoint.

Training in TensorFlow and converting to ONNX

Our pipeline includes a training step using AzureML Notebooks. We will use a Jupyter notebook to set up the experiment and execute the training job in AzureML. This experiment produces the trained model, which we convert to ONNX and store in the model registry of our AzureML workspace.
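As a rough illustration of the convert-and-register step, the sketch below uses the `tf2onnx` converter and the AzureML SDK for Python. The choice of `tf2onnx` is an assumption on our part (the sample may use a different converter), and the helper names and file layout are hypothetical.

```python
from pathlib import Path


def onnx_path_for(keras_path: str) -> Path:
    """Place the ONNX file next to the Keras file, e.g. model.h5 -> model.onnx."""
    return Path(keras_path).with_suffix(".onnx")


def convert_and_register(keras_model, keras_path: str, model_name: str):
    """Convert an in-memory Keras model to ONNX and register it in AzureML."""
    # Lazy imports: tf2onnx and azureml-core are only needed when this runs.
    from tf2onnx import convert as tf2onnx_convert
    from azureml.core import Workspace
    from azureml.core.model import Model

    onnx_path = onnx_path_for(keras_path)
    # from_keras writes the ONNX file to disk when output_path is given.
    tf2onnx_convert.from_keras(keras_model, output_path=str(onnx_path))

    # Assumes a workspace config.json is available to the notebook or script.
    ws = Workspace.from_config()
    return Model.register(workspace=ws,
                          model_path=str(onnx_path),
                          model_name=model_name)
```

Registering the converted file, rather than the Keras file, means every later step in the pipeline only has to deal with the ONNX format.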

Set up the Release Pipeline in Azure DevOps

A pipeline is set up in Azure DevOps to package the model and the application code in a container. The trained model is added as an Artifact in our pipeline. Every time a new trained model is registered in the AzureML model registry, it triggers this pipeline.

The pipeline is set up to download the trained model to the dev machine using the AzureML SDK.
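A download step of this kind could look roughly like the following script, which a pipeline job would call on the dev machine. The argument names and the default `models` directory are hypothetical. The sketch assumes the agent can authenticate to the workspace through a local `config.json`; a real pipeline would more likely use a service principal.

```python
import argparse


def parse_args(argv=None):
    """Parse the (hypothetical) command-line options for the download step."""
    parser = argparse.ArgumentParser(description="Download a registered AzureML model")
    parser.add_argument("--model-name", required=True)
    # When no version is given, AzureML resolves the latest registered version.
    parser.add_argument("--model-version", type=int, default=None)
    parser.add_argument("--target-dir", default="models")
    return parser.parse_args(argv)


def download_model(args) -> str:
    """Download the model files and return the local path (requires azureml-core)."""
    # Lazy imports keep argument parsing usable without the SDK installed.
    from azureml.core import Workspace
    from azureml.core.model import Model

    ws = Workspace.from_config()
    model = Model(ws, name=args.model_name, version=args.model_version)
    return model.download(target_dir=args.target_dir, exist_ok=True)
```

A pipeline task would then call `download_model(parse_args())` and pass the returned path to the packaging step.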

Packaging the ONNX Model for arm64 device

In the packaging step, we will build the docker images for the NVIDIA Jetson device.

We will use the ONNX Runtime build for the Jetson device to run the model on our test device. The ONNX Runtime package is published by NVIDIA and is compatible with JetPack 4.4 or later releases. We will use a pre-built docker image that includes all the dependent packages as the base layer, and add the application code and the ONNX models from our training step on top of it.

Push docker images to Azure Container Registry (ACR)

The docker images are pushed to the container registry in Azure from the dev machine. This registry is accessible for other services like Azure IoT Edge to deploy the images to edge devices.
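The build and push steps boil down to a handful of CLI calls on the dev machine. The sketch below composes them in Python so that they can be inspected before being executed. The registry, repository, and tag values are placeholders, and the sample itself may drive these steps through the IoT Edge Dev Tool rather than calling `docker` directly.

```python
import subprocess
from typing import List


def image_ref(registry_name: str, repository: str, tag: str) -> str:
    """ACR login servers follow the <registry>.azurecr.io pattern."""
    return f"{registry_name}.azurecr.io/{repository}:{tag}"


def build_and_push_commands(registry_name: str, repository: str, tag: str,
                            context_dir: str = ".") -> List[List[str]]:
    """Return the CLI calls for logging in, building, and pushing one image."""
    ref = image_ref(registry_name, repository, tag)
    return [
        ["az", "acr", "login", "--name", registry_name],
        ["docker", "build", "-t", ref, context_dir],
        ["docker", "push", ref],
    ]


def run_all(commands: List[List[str]]) -> None:
    """Execute each command in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

Tagging each image with something unique to the pipeline run, such as the build number, makes it easy to tell which model version a device is running.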

Deploy to IoT Edge device

The Azure IoT Hub is set up with the details of the container registry to which the images were pushed in the previous step. These details are defined in the deployment manifest, deployment.json. When new docker images are available in the ACR, they are automatically deployed to the IoT Edge devices.
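To show where the image reference lives in a deployment manifest, here is a small sketch that points a module entry at a newly pushed image. The module name and image are placeholders, and only the module portion of the manifest is handled; the runtime settings, registry credentials, and system modules are assumed to be present in the existing deployment.json.

```python
import json


def module_entry(image: str, version: str = "1.0") -> dict:
    """Build the manifest entry for one custom IoT Edge module."""
    return {
        "version": version,
        "type": "docker",
        "status": "running",
        "restartPolicy": "always",
        "settings": {"image": image, "createOptions": "{}"},
    }


def set_module_image(manifest: dict, module_name: str, image: str) -> dict:
    """Add or replace a module under the $edgeAgent desired properties."""
    desired = manifest["modulesContent"]["$edgeAgent"]["properties.desired"]
    desired.setdefault("modules", {})[module_name] = module_entry(image)
    return manifest


def update_manifest_file(path: str, module_name: str, image: str) -> None:
    """Load a manifest from disk, update the module image, and write it back."""
    with open(path, "r", encoding="utf-8") as f:
        manifest = json.load(f)
    set_module_image(manifest, module_name, image)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(manifest, f, indent=2)
```

For example, `update_manifest_file("deployment.json", "onnx-inference", "myregistry.azurecr.io/onnx-tinyyolo:42")` would rewrite the manifest to reference that image, where both the module name and the image are hypothetical.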

This completes the deployment step for the sample.

Additional Notes

We can monitor the inference results at the IoT Hub built-in endpoint.

This sample can be enhanced to store the inference results in Azure Storage and then visualize them in Power BI.

The docker images can be built for other HW platforms by changing the base image in the Dockerfiles.