This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Since the inception of Azure SQL more than ten years ago, we have been continuously raising the limits across all aspects of the service, including storage size, compute capacity, and IO. Today, we are pleased to announce that transaction log rate limits have been increased for General Purpose databases and elastic pools in the vCore purchasing model.

Increasing log rate

Resource governance in Azure SQL has specific limits on resource consumption including log rate, in order to provide a balanced Database-as-a-Service and ensure that recoverability SLAs (RPO, RTO) are met.

Maximum transaction log rate, or log write throughput, determines how fast data can be written to a database in bulk, and is often the main limiting factor in data loading scenarios such as data warehousing or database migration to Azure. In 2019, we doubled the maximum transaction log rate for Business Critical databases, from 48 MB/s to 96 MB/s. With the new M-series hardware at the high end of the Azure SQL Database SKU spectrum, we now support a log rate of up to 264 MB/s.

Our latest increases are for all hardware generations currently supported in the General Purpose service tier, and apply equally to both provisioned and serverless compute tiers.

For single databases, log rate limit increases are:

| Cores | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 and higher |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Old limit (MB/s) | 3.75 | 7.5 | 11.25 | 15 | 18.75 | 22.5 | 26.3 | 30 |
| New limit (MB/s) | 4.5 | 9 | 13.5 | 18 | 22.5 | 27 | 31.5 | 36 |

For elastic pools, log rate limit increases are:

| Cores | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 and higher |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Old limit (MB/s) | 4.7 | 9.4 | 14.1 | 18.8 | 23.4 | 28.1 | 32.8 | 37.5 |
| New limit (MB/s) | 6 | 12 | 18 | 24 | 30 | 36 | 42 | 48 |

All up-to-date resource limits are documented for single databases and elastic pools.
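Reading the tables above, the new limits grow linearly with core count and level off at 8 cores: 4.5 MB/s per core for single databases and 6 MB/s per core for elastic pools. The following is a minimal Python sketch of that pattern, useful for quick capacity estimates. The function and constant names are made up for illustration and are not part of any Azure API; the documented resource limits remain the authoritative source.

```python
# Illustrative only: per-core log rate (MB/s) read off the "New limit" rows above.
NEW_RATE_PER_CORE = {
    "single": 4.5,  # single databases
    "pool": 6.0,    # elastic pools
}

# The tables show the limit no longer increasing beyond 8 cores.
CAP_CORES = 8


def new_log_rate_limit(cores: int, kind: str = "single") -> float:
    """Return the new General Purpose log rate limit in MB/s.

    `kind` is "single" or "pool" (hypothetical labels for this sketch).
    """
    if cores < 1:
        raise ValueError("cores must be at least 1")
    if kind not in NEW_RATE_PER_CORE:
        raise ValueError(f"unknown kind: {kind!r}")
    return NEW_RATE_PER_CORE[kind] * min(cores, CAP_CORES)


if __name__ == "__main__":
    for cores in (1, 4, 8, 16):
        print(cores, new_log_rate_limit(cores), new_log_rate_limit(cores, "pool"))
```

For example, `new_log_rate_limit(4)` gives the 18 MB/s shown for a 4-core single database, and any core count of 8 or more returns 36 MB/s (48 MB/s for an elastic pool).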

Impact at scale

To see the impact of log rate increases on customer workloads, we analyzed telemetry data for the period when the change was being deployed in one Azure region. During that time, the region had a mix of databases and elastic pools with old and new limits.

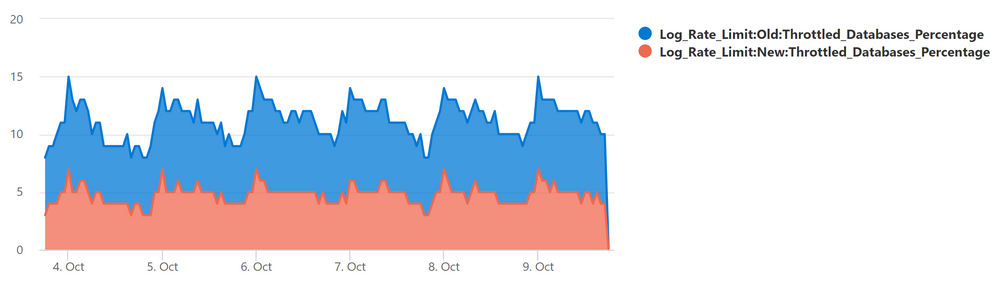

The chart plots the percentage of General Purpose databases that reached the log rate limit and experienced log rate throttling (defined as at least one LOG_RATE_GOVERNOR wait in a 1-hour interval). The higher (light blue) area is for databases with the old limits, and the lower (red-orange) area is for databases with the new limits.

The positive impact is clear: the change reduced the average percentage of General Purpose databases experiencing log rate throttling from over 10% to less than 5%.
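To make the throttling metric concrete, here is a rough Python sketch of how such a percentage could be computed from per-hour wait counts. The record layout, function name, and sample values are all hypothetical and invented for illustration; they do not reflect the actual telemetry pipeline or its data.

```python
from collections import defaultdict


def throttled_percentage(samples):
    """Percentage of databases throttled in each 1-hour interval.

    `samples` is an iterable of (hour, database, log_rate_governor_waits)
    tuples. A database counts as throttled in an hour if it had at least
    one LOG_RATE_GOVERNOR wait during that hour.
    """
    seen = defaultdict(set)       # hour -> all databases observed
    throttled = defaultdict(set)  # hour -> databases with >= 1 wait
    for hour, database, waits in samples:
        seen[hour].add(database)
        if waits >= 1:
            throttled[hour].add(database)
    return {
        hour: 100.0 * len(throttled[hour]) / len(databases)
        for hour, databases in seen.items()
    }


# Made-up sample data: four databases in hour 0, two in hour 1.
example = [
    (0, "db_a", 0), (0, "db_b", 3), (0, "db_c", 0), (0, "db_d", 1),
    (1, "db_a", 0), (1, "db_b", 0),
]

if __name__ == "__main__":
    print(throttled_percentage(example))
```

Averaging these hourly percentages separately for databases on the old and new limits would give the kind of comparison described above.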

Data load example

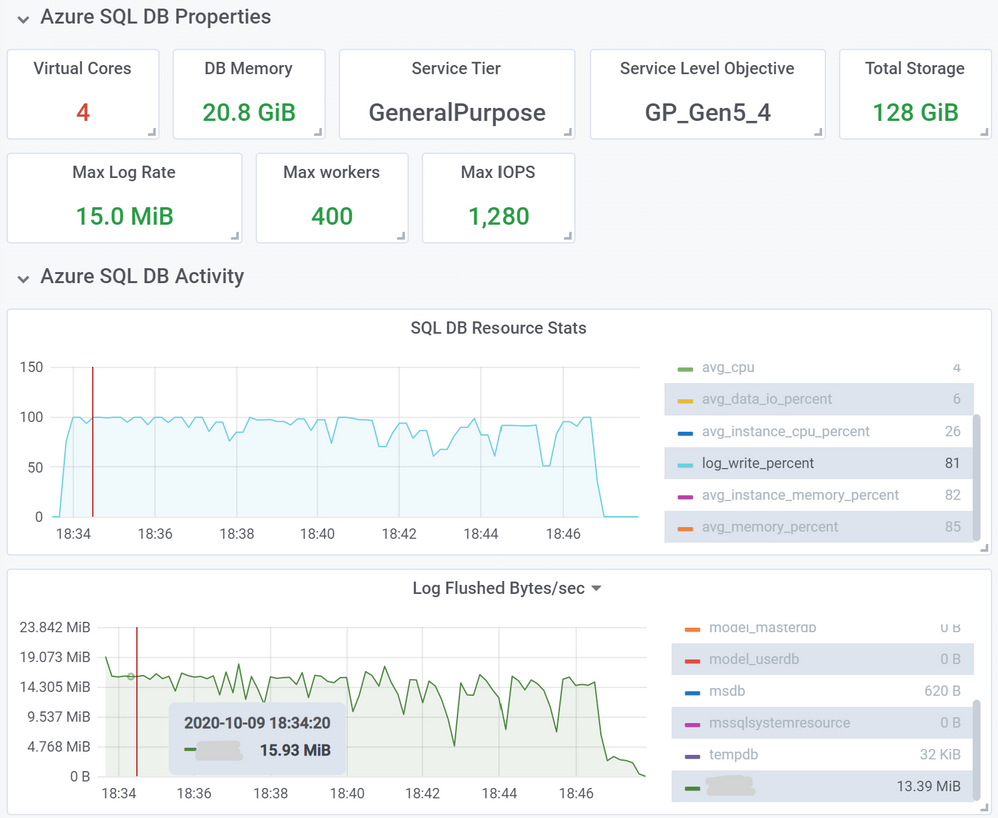

As an example of an operation benefiting from higher log rate limits, we inserted 10 million rows into a heap table with a SELECT … INTO … statement on a 4-core General Purpose database on Gen5 hardware. Row size was 1,255 bytes, and total inserted data size was 11.7 GB.

First, we used a database with the old log rate limit of 15 MB/s. This load took 13 minutes and 2 seconds.

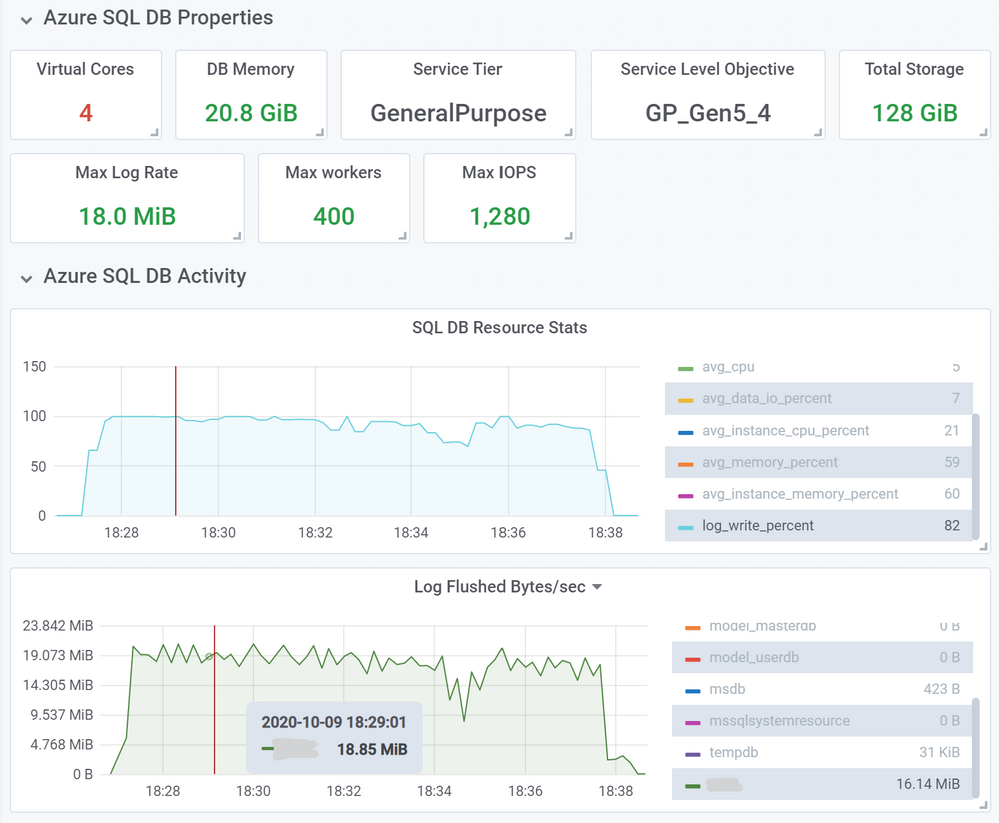

Next, we repeated the same load, but using a database with the new 18 MB/s limit.

This load took proportionally less time, 10 minutes and 33 seconds: 633 seconds instead of 782, a 19% decrease in load time.

You can expect this kind of improvement for most operations that used to consume close to 100% of log write throughput with the old limit. Similarly, for databases in elastic pools that generate high volumes of log writes at the same time, delays due to throttling are now reduced thanks to the higher log rate limit at the elastic pool level.
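The load-time comparison above can be checked with a few lines of arithmetic. The Python sketch below converts the two reported durations to seconds and computes the relative decrease, which works out to about 19%. It also shows the decrease one would expect if load time were purely inversely proportional to the log rate limit, which is a simplifying assumption and not a claim about how the service behaves. All names are illustrative.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a minutes:seconds duration to seconds."""
    return minutes * 60 + seconds


def percent_decrease(before: float, after: float) -> float:
    """Relative decrease from `before` to `after`, in percent."""
    return (before - after) / before * 100.0


def expected_decrease(old_rate: float, new_rate: float) -> float:
    """Expected load-time decrease (percent), assuming time ~ 1 / log rate."""
    return (1.0 - old_rate / new_rate) * 100.0


old_load = to_seconds(13, 2)    # old 15 MB/s limit: 782 seconds
new_load = to_seconds(10, 33)   # new 18 MB/s limit: 633 seconds

observed = percent_decrease(old_load, new_load)
expected = expected_decrease(15.0, 18.0)

if __name__ == "__main__":
    print(f"observed decrease: {observed:.1f}%")  # 19.1%
    print(f"expected decrease: {expected:.1f}%")  # 16.7%
```

Note that minutes and seconds have to be converted to a single unit first; treating 13:02 and 10:33 as the decimals 13.02 and 10.33 would overstate the decrease.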

Conclusion

This increase in log rate limits helps our customers using General Purpose databases and elastic pools improve performance of bulk data loading and data modification operations. In some cases, customers may be able to scale down to a lower service objective and maintain the same data load performance.

This improvement is another step in our journey to improve Azure SQL performance and scalability to help our customers achieve their goals more efficiently and at lower cost, while maintaining Database-as-a-Service principles and existing recoverability SLAs.