This post has been republished via RSS; it originally appeared at: Azure Database Support Blog articles.

Azure SQL Database offers an easy several-clicks solution to scale database instances when more resources are needed. This is one of the strengths of PaaS, you pay for only what you use and if you need more or less, it's easy to do the change. A current limitation, however, is that the scaling operation is a manual one. The service doesn't support auto-scaling as some of us would expect.

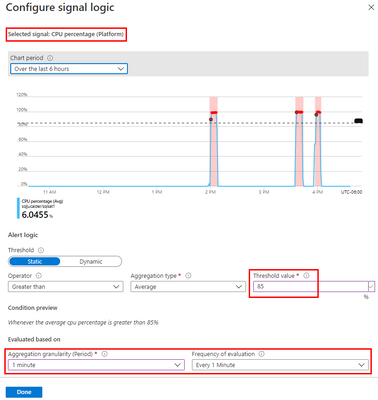

Having said that, using the power of Azure we can set up a workflow that auto-scales an Azure SQL Database instance to the next immediate tier when a specific condition is met. For example: what if you could auto-scale the database as soon as it goes over 85% CPU usage for a sustained period of 5 minutes? Using this tutorial we will achieve just that.

Supported SKUs: because there is no automatic way to get the list of available tiers at script runtime, these must be hard-coded into it. For this reason, the script below only supports DTU and vCore (provisioned compute) databases. Hyperscale, Serverless, Fsv2, DC and M series are not supported. Having said that, the logic is the same not matter the tier so feel free to modify the script to suit your particular SKU needs.

|

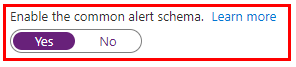

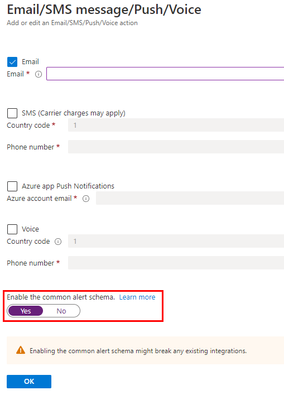

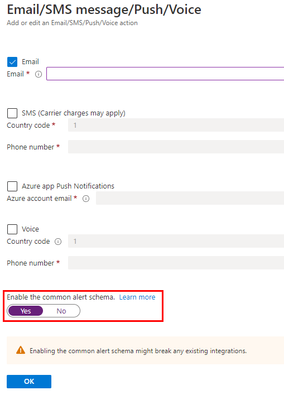

Important: every time any part of the setup asks if the Common Alert Schema (CAS) should be enabled, select Yes. The script used in this tutorial assumes the CAS will be used for the alerts triggering it.

|

Step #1: deploy Azure Automation account and update its modules

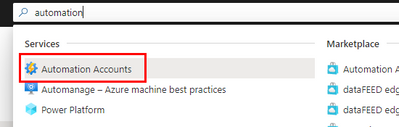

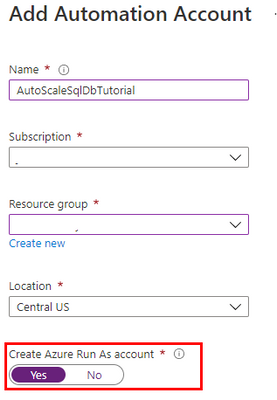

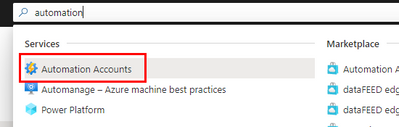

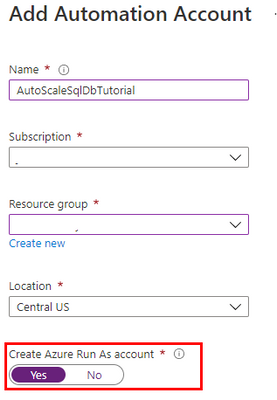

The scale operation will be executed by a PowerShell runbook inside of an Azure Automation account. Search Automation in the Azure Portal search bar and create a new Automation account. Make sure to create a Run As Account while doing this:

Once the Automation account has been created, we need to update the PowerShell modules in it. The runbook we will use uses PowerShell cmdlets but by default these are old versions when the Automation account is provisioned. To update the modules to be used:

- Save the PowerShell script here to your computer with the name Update-AutomationAzureModulesForAccountManual.ps1. The Manual word is added to the file name as to not overwrite the default internal runbook the account uses to update other modules once it gets imported.

- Import a new module and select the file you saved on step #1:

- When the runbook has been imported, click Test Pane, fill in the details for the Resource Group and the Azure Automation account name we are using and click Start:

- When it finishes, the cmdlets will be fully updated. This benefits not only the SQL cmdlets used below but any other cmdlets any other runbook may use on this same Automation account.

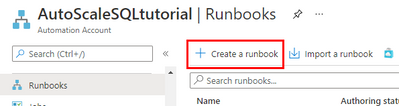

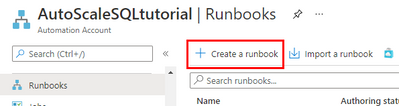

Step #2: create scaling runbook

With our Automation account deployed and updated, we are now ready to create the script. Create a new runbook and copy the code below:

The script below uses Webhook data passed from the alert. This data contains useful information about the resource the alert gets triggered from, which means the script can auto-scale any database and no parameters are needed; it only needs to be called from an alert using the Common Alert Schema on an Azure SQL database.

param

(

[Parameter (Mandatory=$false)]

[object] $WebhookData

)

# If there is webhook data coming from an Azure Alert, go into the workflow.

if ($WebhookData){

# Get the data object from WebhookData

$WebhookBody = (ConvertFrom-Json -InputObject $WebhookData.RequestBody)

# Get the info needed to identify the SQL database (depends on the payload schema)

$schemaId = $WebhookBody.schemaId

Write-Verbose "schemaId: $schemaId" -Verbose

if ($schemaId -eq "azureMonitorCommonAlertSchema") {

# This is the common Metric Alert schema (released March 2019)

$Essentials = [object] ($WebhookBody.data).essentials

Write-Output $Essentials

# Get the first target only as this script doesn't handle multiple

$alertTargetIdArray = (($Essentials.alertTargetIds)[0]).Split("/")

$SubId = ($alertTargetIdArray)[2]

$ResourceGroupName = ($alertTargetIdArray)[4]

$ResourceType = ($alertTargetIdArray)[6] + "/" + ($alertTargetIdArray)[7]

$ServerName = ($alertTargetIdArray)[8]

$DatabaseName = ($alertTargetIdArray)[-1]

$status = $Essentials.monitorCondition

}

else{

# Schema not supported

Write-Error "The alert data schema - $schemaId - is not supported."

}

# If the alert that triggered the runbook is Activated or Fired, it means we want to autoscale the database.

# When the alert gets resolved, the runbook will be triggered again but because the status will be Resolved, no autoscaling will happen.

if (($status -eq "Activated") -or ($status -eq "Fired"))

{

Write-Output "resourceType: $ResourceType"

Write-Output "resourceName: $DatabaseName"

Write-Output "serverName: $ServerName"

Write-Output "resourceGroupName: $ResourceGroupName"

Write-Output "subscriptionId: $SubId"

# Because Azure SQL tiers cannot be obtained programatically, we need to hardcode them as below.

# The 3 arrays below make this runbook support the DTU tier and the provisioned compute tiers, on Generation 4 and 5 and

# for both General Purpose and Business Critical tiers.

$DtuTiers = @('Basic','S0','S1','S2','S3','S4','S6','S7','S9','S12','P1','P2','P4','P6','P11','P15')

$Gen4Cores = @('1','2','3','4','5','6','7','8','9','10','16','24')

$Gen5Cores = @('2','4','6','8','10','12','14','16','18','20','24','32','40','80')

# Here, we connect to the Azure Portal with the Automation Run As account we provisioned when creating the Automation account.

$connectionName = "AzureRunAsConnection"

try

{

# Get the connection "AzureRunAsConnection "

$servicePrincipalConnection=Get-AutomationConnection -Name $connectionName

"Logging in to Azure..."

Add-AzureRmAccount `

-ServicePrincipal `

-TenantId $servicePrincipalConnection.TenantId `

-ApplicationId $servicePrincipalConnection.ApplicationId `

-CertificateThumbprint $servicePrincipalConnection.CertificateThumbprint

}

catch {

if (!$servicePrincipalConnection)

{

$ErrorMessage = "Connection $connectionName not found."

throw $ErrorMessage

} else{

Write-Error -Message $_.Exception

throw $_.Exception

}

}

# Gets the current database details, from where we'll capture the Edition and the current service objective.

# With this information, the below if/else will determine the next tier that the database should be scaled to.

# Example: if DTU database is S6, this script will scale it to S7. This ensures the script continues to scale up the DB in case CPU keeps pegging at 100%.

$currentDatabaseDetails = Get-AzureRmSqlDatabase -ResourceGroupName $ResourceGroupName -DatabaseName $DatabaseName -ServerName $ServerName

if (($currentDatabaseDetails.Edition -eq "Basic") -Or ($currentDatabaseDetails.Edition -eq "Standard") -Or ($currentDatabaseDetails.Edition -eq "Premium"))

{

Write-Output "Database is DTU model."

if ($currentDatabaseDetails.CurrentServiceObjectiveName -eq "P15") {

Write-Output "DTU database is already at highest tier (P15). Suggestion is to move to Business Critical vCore model with 32+ vCores."

} else {

for ($i=0; $i -lt $DtuTiers.length; $i++) {

if ($DtuTiers[$i].equals($currentDatabaseDetails.CurrentServiceObjectiveName)) {

Set-AzureRmSqlDatabase -ResourceGroupName $ResourceGroupName -DatabaseName $DatabaseName -ServerName $ServerName -RequestedServiceObjectiveName $DtuTiers[$i+1]

break

}

}

}

} else {

Write-Output "Database is vCore model."

$currentVcores = ""

$currentTier = $currentDatabaseDetails.CurrentServiceObjectiveName.SubString(0,8)

$currentGeneration = $currentDatabaseDetails.CurrentServiceObjectiveName.SubString(6,1)

$coresArrayToBeUsed = ""

try {

$currentVcores = $currentDatabaseDetails.CurrentServiceObjectiveName.SubString(8,2)

} catch {

$currentVcores = $currentDatabaseDetails.CurrentServiceObjectiveName.SubString(8,1)

}

Write-Output $currentGeneration

if ($currentGeneration -eq "5") {

$coresArrayToBeUsed = $Gen5Cores

} else {

$coresArrayToBeUsed = $Gen4Cores

}

if ($currentVcores -eq $coresArrayToBeUsed[$coresArrayToBeUsed.length]) {

Write-Output "vCore database is already at highest number of cores. Suggestion is to optimize workload."

} else {

for ($i=0; $i -lt $coresArrayToBeUsed.length; $i++) {

if ($coresArrayToBeUsed[$i] -eq $currentVcores) {

$newvCoreCount = $coresArrayToBeUsed[$i+1]

Set-AzureRmSqlDatabase -ResourceGroupName $ResourceGroupName -DatabaseName $DatabaseName -ServerName $ServerName -RequestedServiceObjectiveName "$currentTier$newvCoreCount"

break

}

}

}

}

}

}

Step #3: create Azure Monitor Alert to trigger the Automation runbook

On your Azure SQL Database, create a new alert rule:

The next blade will require several different setups:

- Scope of the alert: this will be auto-populated if +New Alert Rule was clicked from within the database itself.

- Condition: when should the alert get triggered by selecting a signal and defining its logic.

- Actions: when the alert gets triggered, what will happen?

Condition

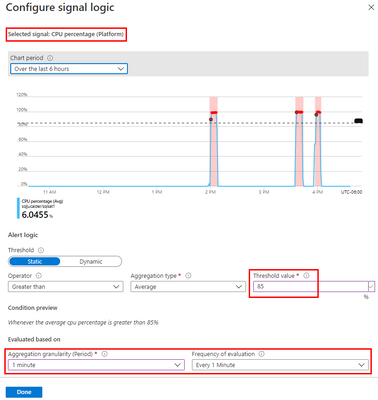

For this example, the alert will monitor the CPU consumption every 1 minute. When the average goes over 85%, the alert will be triggered:

Actions

After the signal logic is created, we need to tell the alert what to do when it gets fired. We will do this with an action group. When creating a new action group, two tabs will help us configure sending an email and triggering the runbook:

Notifications

Actions

After saving the action group, add the remaining details to the alert.

That's it! The alert is now enabled and will auto-scale the database when fired. The runbook will be executed twice per alert: once when fired and another when resolved but it will only perform a scale operation when fired.