This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Availability Groups in Big Data Clusters. Two or more replicas in the same node?

Big Data Clusters supports high availability for all its components; most notably, for the SQL Server master instance it provides an Always On Availability Group out of the box. You can deploy your big data cluster using the HA configurations as explained here.

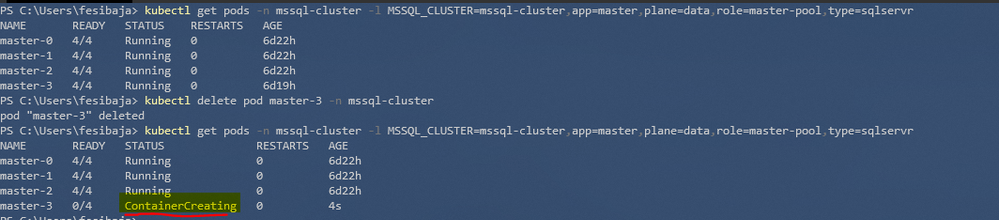

Once your BDC is deployed, you can check the status of your replicas using kubectl in PowerShell.

kubectl get pods -n mssql-cluster -l MSSQL_CLUSTER=mssql-cluster,app=master,plane=data,role=master-pool,type=sqlservr -o wide

Recently we have observed a couple of occurrences where 2 or more AG replicas were running on the same node. In such a scenario, the command above returns output similar to this:

PS C:\Users> kubectl get pods -n mssql-cluster -l MSSQL_CLUSTER=mssql-cluster,app=master,plane=data,role=master-pool,type=sqlservr -o wide

master-0 4/4 Running 0 3h29m xx.xxx.x.xx aks-agentpool-15065227-vmss000003

master-1 4/4 Running 0 3h28m xx.xxx.x.xx aks-agentpool-15065227-vmss000003

In the above output you can observe that both master-0 and master-1 are hosted on node aks-agentpool-15065227-vmss000003. The problem should already be apparent: if the node hosting both replicas goes down, no failover can occur between them, and the containers have to be recreated and started on different nodes. This defeats the purpose of a high availability solution.
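The check for co-located replicas can also be scripted. The following is a minimal sketch, not part of BDC tooling: the function name `colocated_replicas` is hypothetical, and it assumes the column order NAME, READY, STATUS, RESTARTS, AGE, IP, NODE produced by `kubectl get pods -o wide`, with a single-token RESTARTS value as in the output above.

```python
from collections import defaultdict


def colocated_replicas(kubectl_output):
    """Return {node: [pod names]} for every node hosting more than one pod.

    Assumption: each row follows the `kubectl get pods -o wide` layout
    NAME READY STATUS RESTARTS AGE IP NODE ..., split on whitespace.
    """
    pods_by_node = defaultdict(list)
    for line in kubectl_output.strip().splitlines():
        fields = line.split()
        # Skip the header row and any row too short to contain a NODE column.
        if len(fields) < 7 or fields[0] == "NAME":
            continue
        pod_name, node_name = fields[0], fields[6]
        pods_by_node[node_name].append(pod_name)
    return {node: pods for node, pods in pods_by_node.items() if len(pods) > 1}


sample = """
master-0 4/4 Running 0 3h29m xx.xxx.x.xx aks-agentpool-15065227-vmss000003
master-1 4/4 Running 0 3h28m xx.xxx.x.xx aks-agentpool-15065227-vmss000003
"""
print(colocated_replicas(sample))
```

For the sample rows, the result maps aks-agentpool-15065227-vmss000003 to both master-0 and master-1; an empty dictionary means every replica is on its own node.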

In this post we will discuss this scenario, its cause and how to avoid it.

How is HA achieved in BDC?

Big Data Clusters uses Kubernetes itself as the high availability solution. The master instance AG is implemented using a StatefulSet object named master. A StatefulSet is very similar to a ReplicaSet, but it gives each pod a unique, stable identity. Kubernetes will do its best to ensure that the desired state of the set is maintained at all times; that is, that the number of replicas defined for the set is running.

If you manually delete one of the pods you will see that a new one is immediately rescheduled.

Let’s quickly review how the process of recovering a pod after an event such as deletion occurs:

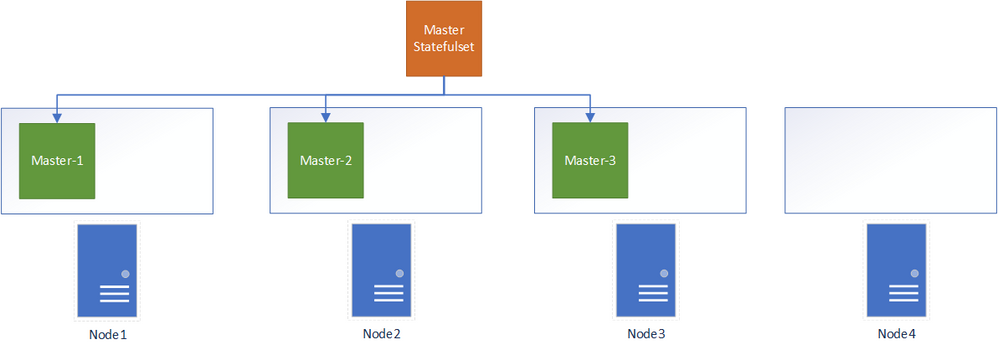

- Let’s suppose that we have a 4-node cluster and a 3-replica master stateful set. Each pod is running on a different node.

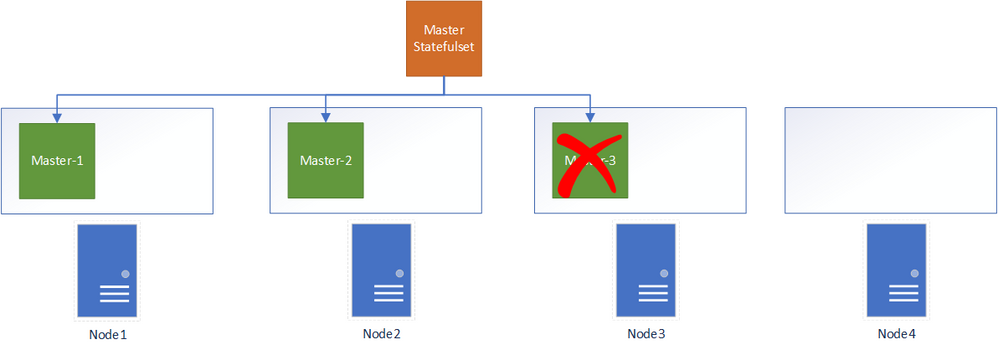

- An event that causes the pod to terminate occurs.

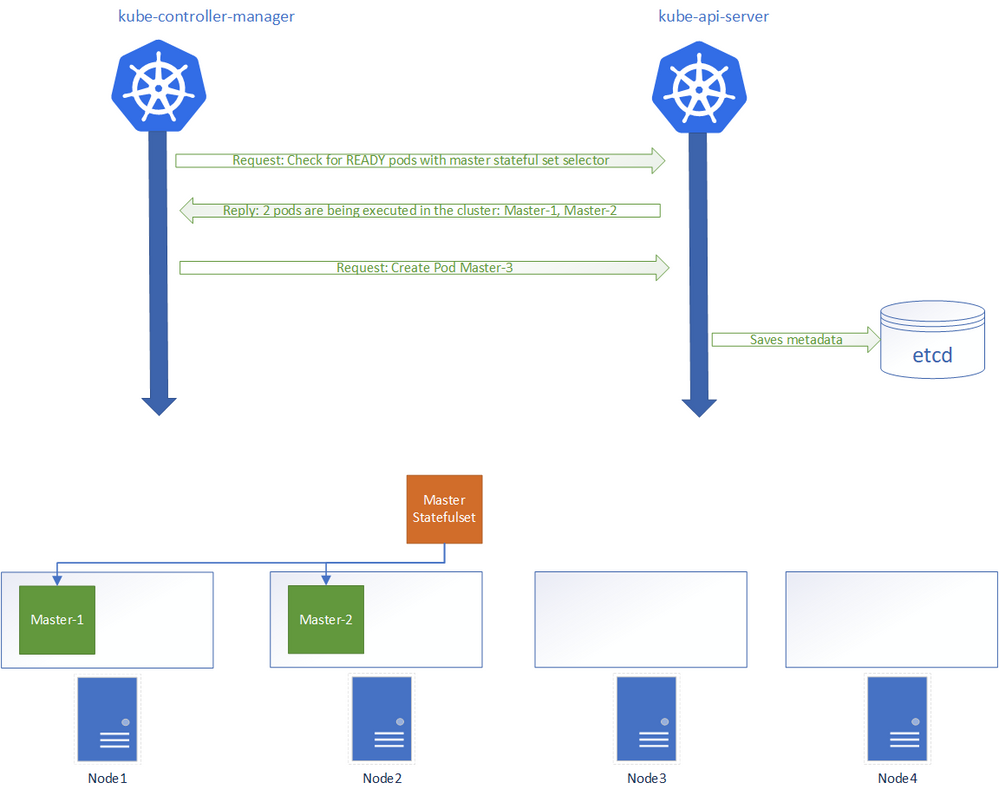

- The kube-controller-manager process constantly checks with the API server for the state of controller objects. For the master stateful set in our example, if the number of running pods is less than 3, it requests the creation of additional pods to satisfy the desired state.

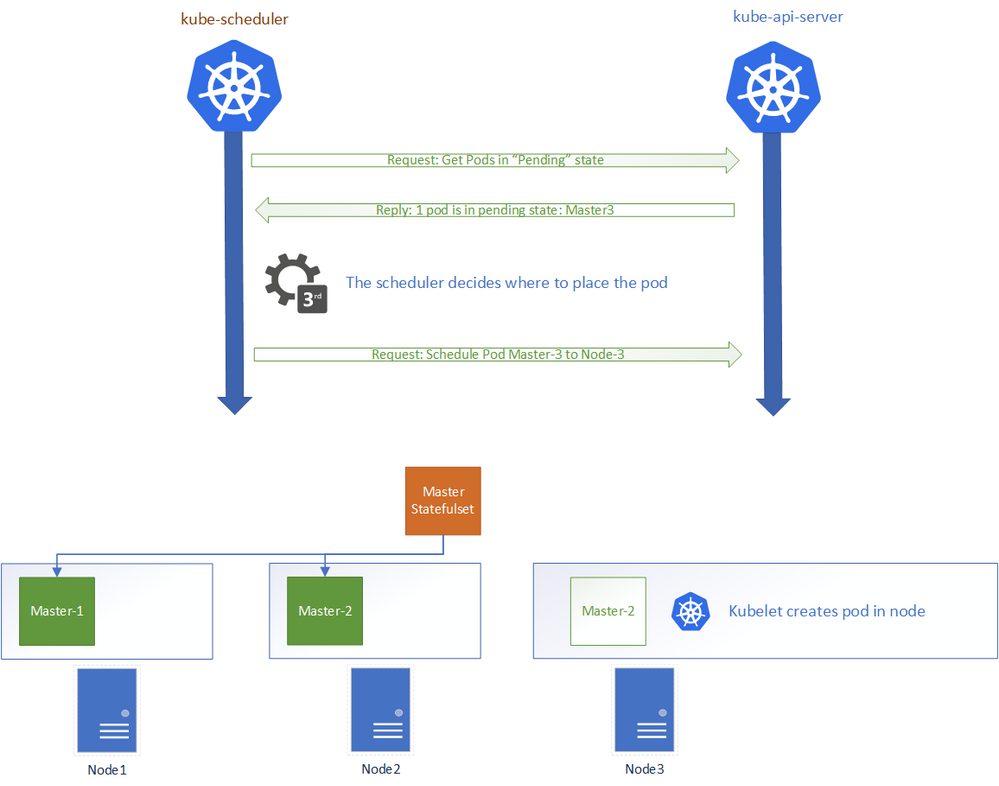

- The Kubernetes API server records the new pod in its internal database. Saving the metadata does not mean that the pod is immediately scheduled onto a node: while only its metadata exists in the Kubernetes internal database, the pod stays in the “Pending” state.

- The scheduler process checks for pods in the Pending state and decides where each one should be placed. Once the scheduler has made its decision, it asks the API server to assign the pod to the chosen node. The kubelet process running on that node is then notified and starts the pod.
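The recovery steps above can be sketched as a toy simulation. This is an illustration under simplifying assumptions, not how Kubernetes is implemented: the names `Pod`, `reconcile` and `schedule` are hypothetical, the API server database is reduced to a dictionary, and the scheduler decision is passed in as a function.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pod:
    name: str
    node: Optional[str] = None  # None: metadata exists, but no node assigned yet

    @property
    def phase(self):
        return "Pending" if self.node is None else "Running"


def reconcile(pods, set_name, replicas):
    """Controller step: create metadata for every missing replica of the set."""
    created = []
    for ordinal in range(replicas):
        name = f"{set_name}-{ordinal}"
        if name not in pods:
            pods[name] = Pod(name)  # saved in the "database", still Pending
            created.append(name)
    return created


def schedule(pods, pick_node):
    """Scheduler step: assign every Pending pod to the node chosen by pick_node."""
    for pod in pods.values():
        if pod.node is None:
            pod.node = pick_node(pod)


# Three replicas, each on its own node; master-1 is then terminated.
pods = {f"master-{i}": Pod(f"master-{i}", node=f"node-{i}") for i in range(3)}
del pods["master-1"]

created = reconcile(pods, "master", replicas=3)
phase_before = pods["master-1"].phase

schedule(pods, pick_node=lambda pod: "node-3")  # placeholder decision
phase_after = pods["master-1"].phase

print(created, phase_before, phase_after, pods["master-1"].node)
```

The pod is recreated under the same name and sits in Pending until the scheduling step runs. Where it lands depends entirely on the `pick_node` decision.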

Now, coming back to our Availability Group discussion; two events in the above list interest us the most:

- Pod termination: Why was the pod removed from the node in the first place?

- Pod scheduling: How does the scheduler decide where to place the pod? Why can 2 pods from the same AG be placed on the same node?

Regarding pod termination, the possibilities are manual deletion, container process termination, pod eviction and node failure. A manual deletion should be unlikely in a production environment. If the SQL Server process terminates unexpectedly, or even if a shutdown is issued, the pod will terminate. The kubelet process proactively monitors resource usage on a node and can evict pods to reclaim resources when the node is being starved. Finally, a node shutdown will obviously cause all of its workload to fail.

Scheduling is performed by default by the kube-scheduler program (although a custom scheduler can be implemented). For every pending pod, the scheduler finds the “best” available node and schedules the pod onto it. The best node is determined in 2 phases:

- Filtering: the scheduler filters out nodes to which the pod cannot feasibly be assigned. This includes checking that the node is in the Ready state, has enough resources to fit the pod, is not under resource pressure, and satisfies node selectors and taints, among others. See the complete list here.

- Scoring: the scheduler ranks the feasible nodes based on a set of policies listed here and chooses the best-ranked one. One very relevant policy for an AG is the “Selector Spread Priority”, which attempts to spread pods from the same StatefulSet across hosts.
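The two phases can be sketched as follows. This is a simplified model, not the kube-scheduler algorithm: the names `Node`, `feasible`, `spread_score` and `pick_node` are hypothetical, only three filter conditions are modelled, and spreading is the only scoring policy.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    ready: bool = True
    memory_pressure: bool = False
    free_memory_gb: int = 64


def feasible(node, pod_memory_gb):
    """Filtering: keep nodes that are ready, not under pressure, and large enough."""
    return (
        node.ready
        and not node.memory_pressure
        and node.free_memory_gb >= pod_memory_gb
    )


def spread_score(node, replicas_on_node):
    """Scoring: fewer replicas of the same set on a node gives a higher score."""
    return -replicas_on_node.get(node.name, 0)


def pick_node(nodes, replicas_on_node, pod_memory_gb):
    candidates = [n for n in nodes if feasible(n, pod_memory_gb)]
    if not candidates:
        return None  # the pod would stay Pending
    # Ties are broken by list order here; the real scheduler behaves differently.
    return max(candidates, key=lambda n: spread_score(n, replicas_on_node)).name


nodes = [Node("node-0"), Node("node-1"), Node("node-2"), Node("node-3")]
# The replica that ran on node-1 was terminated; the other three are still running.
replicas_on_node = {"node-0": 1, "node-2": 1, "node-3": 1}

healthy_choice = pick_node(nodes, replicas_on_node, pod_memory_gb=8)

nodes[1].memory_pressure = True  # node-1 is now filtered out
degraded_choice = pick_node(nodes, replicas_on_node, pod_memory_gb=8)

print(healthy_choice, degraded_choice)
```

While node-1 is feasible, spreading selects it. Once node-1 is filtered out, every remaining candidate already hosts a replica, so the pod is placed next to one of its siblings.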

Having discussed the termination and scheduling events, let’s see an example of how this issue can be reproduced.

Example

Let’s observe the following cluster with 4 nodes and 4 master instance replicas.

PS C:\Users> kubectl get pods -n mssql-cluster -l MSSQL_CLUSTER=mssql-cluster,app=master,plane=data,role=master-pool,type=sqlservr -o wide

Currently the replicas are spread across the nodes as expected. Let’s cause a pod termination by deleting a pod.

PS C:\Users> kubectl delete pod master-2 -n mssql-cluster

Immediately afterwards we check the status of the pods:

PS C:\Users> kubectl get pods -n mssql-cluster -l MSSQL_CLUSTER=mssql-cluster,app=master,plane=data,role=master-pool,type=sqlservr -o wide

The pod was rescheduled onto node 01 again, which is the behavior we want. Node 01 was a feasible node, and since it was the only node not already hosting a replica, the “Selector Spread Priority” policy caused it to rank high and be the preferred node.

Now let’s repeat the exercise, but this time make node 01 unfeasible by putting a taint on it. A taint places a restriction on a node so that only pods that explicitly tolerate the taint can be scheduled there.

We taint node 01 with “lactose”.

PS C:\Users> kubectl taint node aks-agentpool-15065227-vmss000001 lactose=true:NoSchedule

We delete pod master-2.

PS C:\Users> kubectl delete pod master-2 -n mssql-cluster

We check the pods again:

PS C:\Users> kubectl get pods -n mssql-cluster -l MSSQL_CLUSTER=mssql-cluster,app=master,plane=data,role=master-pool,type=sqlservr -o wide

Now we can observe that the pod was scheduled on node 00, because node 01 is tainted with “lactose=true” and master-2 does not tolerate that taint. The pod will also take longer to start on the newly assigned node, because the volumes behind its persistent volume claims need to be reattached to the new host.

Notice that if we remove the taint, the pods will remain where they are; no “rebalance” is performed unless a pod is explicitly deleted again.
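The taint check that kept master-2 off node 01 can be sketched as below. This is a simplified model of the matching rules, with hypothetical names (`Taint`, `Toleration`, `tolerates`, `schedulable`): an empty toleration effect matches every effect, the Exists operator ignores the value, and only the NoSchedule and NoExecute effects block placement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""  # empty: matches every effect


def tolerates(toleration, taint):
    """Return True if this toleration matches the given taint."""
    if toleration.effect and toleration.effect != taint.effect:
        return False
    if toleration.operator == "Exists":
        # An empty key with Exists matches every taint key.
        return toleration.key == "" or toleration.key == taint.key
    return toleration.key == taint.key and toleration.value == taint.value


def schedulable(node_taints, pod_tolerations):
    """A node is feasible only if every blocking taint is tolerated by the pod."""
    blocking = [t for t in node_taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in pod_tolerations) for t in blocking)


lactose = Taint("lactose", "true", "NoSchedule")

# master-2 carries no toleration for the taint, so node 01 is filtered out.
print(schedulable([lactose], []))

# A pod with a matching toleration could still be placed on the tainted node.
print(schedulable([lactose], [Toleration("lactose", "Equal", "true", "NoSchedule")]))
```

The first call reports the node as not schedulable and the second as schedulable, which mirrors why master-2 had to be placed on a different node.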

Generalized Scenario

We can now generalize this scenario and assert that this problem will arise when:

- A pod belonging to the availability group is terminated.

- The scheduler cannot “spread” the pod because it finds no feasible nodes without a replica, or because the SelectorSpreadPriority is at odds with other scoring priorities.

Pod eviction due to memory starvation is likely to cause this problem if no other nodes are available. After the eviction, the node will not be selected for rescheduling, since its MemoryPressure condition will be active and the filtering phase will exclude it.

The scheduler must then place the pod on another node, even one that already hosts a replica, because the Kubernetes priority is to preserve the desired state (the number of pods).

How to avoid this scenario?

This is most likely to happen in clusters with a very small number of nodes available.

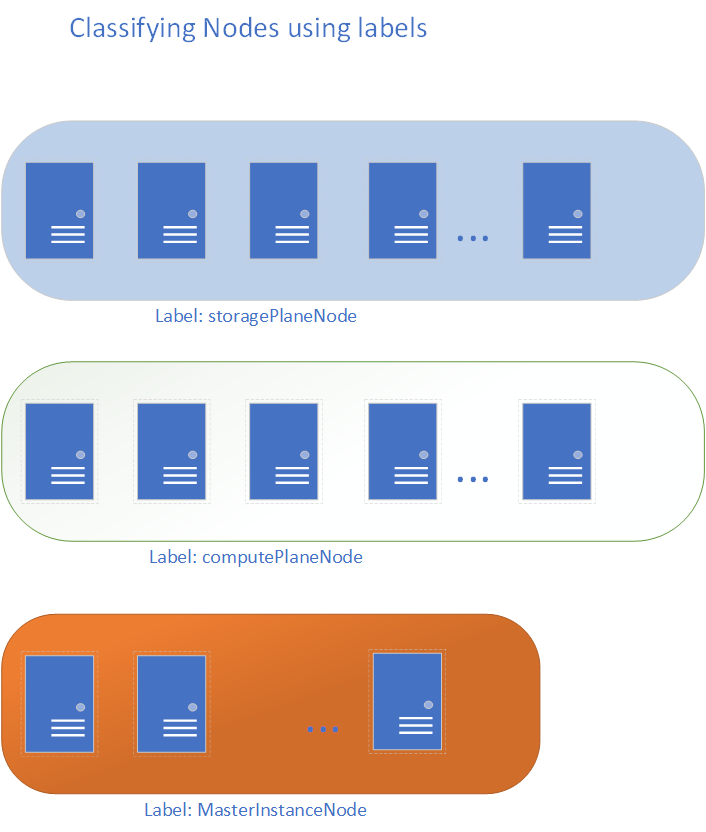

It is important to plan and reserve specific sets of nodes, with the appropriate resources assigned, to serve the different planes of the Big Data Cluster.

Before deployment you can label your nodes and group them according to each role. During the configuration phase of the BDC you can then patch your configuration to use node selectors for the different StatefulSets as described here.
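As a rough illustration of how a node selector narrows the candidate nodes, consider the sketch below. The label key `bdc-role`, its values and the node names are hypothetical and are not taken from the BDC configuration; the only rule modelled is that a node qualifies when it carries every label listed in the selector.

```python
def matches_node_selector(node_labels, node_selector):
    """A node qualifies only if it carries every key/value pair in the selector."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


# Hypothetical labels assigned to the nodes before deployment.
node_labels = {
    "node-0": {"bdc-role": "master"},
    "node-1": {"bdc-role": "master"},
    "node-2": {"bdc-role": "master"},
    "node-3": {"bdc-role": "storage"},
}

# Hypothetical selector applied to the master StatefulSet.
master_selector = {"bdc-role": "master"}

master_nodes = [
    name
    for name, labels in node_labels.items()
    if matches_node_selector(labels, master_selector)
]
print(master_nodes)
```

With three nodes reserved for three master replicas, spreading can keep one replica per node as long as all three nodes remain feasible. Reserving more nodes than replicas leaves room for a replica to move without doubling up.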

We will further discuss the deployment planning in another post.