This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

When we first released Network ATC, we greatly simplified the deployment and on-going management of host networking in Azure Stack HCI. Whether it was the simple yet powerful deployment experience, a “that was easy” cluster expansion process, or increased reliability helping to avoid support costs, the feedback was unanimous: Network ATC was a hit!

Despite the fanfare, once we saw just how valuable Network ATC was to our customers, there were several improvements we wanted to make. Some, like Auto-IP Addressing for storage adapters, were obvious opportunities. Others, like the clustering integrations we added, were built entirely at the request of customers like you.

First, we want to say THANK YOU to everyone who provided feedback! Next, keep the feedback coming, because we’ll continue addressing it to make your Azure Stack HCI networking experience simpler, quicker, and more reliable for your business-critical workloads.

Before we move on, if you want to review our previous posts to “brush up” on the 21H2 release, you can find some information here and here. In this article, we’re going to cover many of the new improvements coming soon to Azure Stack HCI subscribers. As a reminder, Network ATC is part of the free upgrade to 21H2, and the improvements in this article are part of the free 22H2 update.

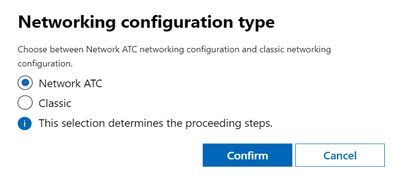

Windows Admin Center Deployment

Availability: Already available with the latest version of WAC and the cluster creation extension

Description: If you look through our deployment documentation, you’ll notice that it was updated to include a deployment model with Network ATC. This has been available for a few months, and we’re continuing to ship fixes for issues with the deployment. Most of these issues are unrelated to Network ATC (for example, DNS replication of the cluster name), but even in those cases we’re using your feedback to learn and harden the system.

Network Symmetry

Description: Microsoft has required symmetric adapters for Switch-Embedded Teaming (the only teaming mechanism supported on Azure Stack HCI) since Windows Server 2016. Adapters are symmetric if they have the same make, model, speed, and configuration.

In 21H2, Network ATC configures and optimizes all adapters identically based on the intent that you configured. However, you needed to ensure that the make, model, and speed were identical for all adapters in the same intent. In 22H2, Network ATC verifies all aspects of network symmetry across all nodes of the cluster. The following table outlines the differences in symmetry verification between Azure Stack HCI 21H2 and 22H2.

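| Symmetry attribute | 21H2 | 22H2 |
| --- | --- | --- |
| Make | Not verified | Verified |
| Model | Not verified | Verified |
| Speed | Not verified | Verified |
| Configuration | Configured identically | Verified |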

To say it another way, if Network ATC provisions adapters, the configuration is 100% supported by Microsoft. There’s no more guessing, and there are no more surprises when you open a support ticket.
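The idea behind the symmetry check (compare every adapter in an intent, across all nodes, on make, model, speed, and configuration) can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not Network ATC’s implementation: the `Adapter` record, its field names, and the vendor and model values are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Adapter:
    # Hypothetical adapter record; field names are illustrative only.
    node: str
    name: str
    make: str
    model: str
    speed_gbps: int
    config: tuple = ()  # e.g. (("*JumboPacket", "9014"),)

SYMMETRY_FIELDS = ("make", "model", "speed_gbps", "config")

def symmetry_violations(adapters):
    """Map each attribute that differs across an intent's adapters (on all
    nodes) to the distinct values seen; an empty dict means symmetric."""
    violations = {}
    for attr in SYMMETRY_FIELDS:
        values = {getattr(a, attr) for a in adapters}
        if len(values) > 1:
            violations[attr] = sorted(values, key=str)
    return violations

jumbo = (("*JumboPacket", "9014"),)
intent = [
    Adapter("node1", "pNIC1", "Contoso", "X100", 25, jumbo),
    Adapter("node1", "pNIC2", "Contoso", "X100", 25, jumbo),
    Adapter("node2", "pNIC1", "Contoso", "X100", 25, jumbo),
    Adapter("node2", "pNIC2", "Contoso", "X100", 10, jumbo),
]
print(symmetry_violations(intent))  # {'speed_gbps': [10, 25]}
```

Checking each attribute separately reports *which* dimension broke symmetry, which is more actionable than a single pass/fail.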

Here's a demo of the Network Symmetry in action:

Automatic IP Addressing for Storage Adapters

Description: In 21H2, if you choose the -Storage intent type for your adapters, Network ATC configures nearly everything needed for a fully functional storage network. It will automatically:

- Configure the physical adapter properties

- Configure Data Center Bridging

- Determine if a virtual switch is needed

- If a vSwitch is needed, it will create the required virtual adapters

- Map the virtual adapters to the appropriate physical adapter

- Assign VLANs

However, in 21H2 all storage adapters are assigned an APIPA address (169.254.x.x), which isn’t recommended for several reasons. Because all adapters are on the same subnet, clustering (which detects networks at the IP layer, layer 3) assumes that they can all communicate with one another. In reality the adapters are on different VLANs and can’t reach each other, so clustering marks them “partitioned.”

New in 22H2, Network ATC automatically assigns IP addresses to storage adapters! If your storage network is connected through switches, just make sure the default VLANs provisioned by Network ATC are configured on the physical switch ports (which you already needed to do), and you’re ready to go! Note: If you’re using a switchless storage configuration, these IP addresses work automatically without configuring a switch (because it’s switchless!), and no additional action is required.
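To see why the 21H2 APIPA behavior produced “partitioned” networks, and why one subnet per storage VLAN avoids it, here is a minimal Python model using the standard `ipaddress` module. It groups adapters by subnet the way clustering does (layer 3) and flags any subnet that spans more than one VLAN. The VLAN IDs (711, 712) and the 10.71.x.0/24 addressing plan are illustrative assumptions, not a statement of Network ATC’s actual defaults.

```python
import ipaddress
from collections import defaultdict

def cluster_networks(adapters):
    """Group adapters by IP subnet, as clustering does: {subnet: {VLAN IDs}}."""
    networks = defaultdict(set)
    for a in adapters:
        networks[ipaddress.ip_interface(a["ip"]).network].add(a["vlan"])
    return networks

def partitioned_subnets(adapters):
    """Subnets spanning several VLANs: clustering sees one network, but
    adapters on different VLANs cannot reach each other at layer 2."""
    return [str(net) for net, vlans in cluster_networks(adapters).items() if len(vlans) > 1]

def assign_storage_ips(nodes, vlans, base="10.71.0.0/16"):
    """Hypothetical plan: one /24 per storage VLAN, one host address per node."""
    subnets = list(ipaddress.ip_network(base).subnets(new_prefix=24))
    plan = []
    for i, vlan in enumerate(vlans, start=1):
        hosts = subnets[i].hosts()  # 10.71.1.0/24, 10.71.2.0/24, ...
        for node in nodes:
            plan.append({"node": node, "vlan": vlan, "ip": f"{next(hosts)}/24"})
    return plan

# 21H2-style: every storage adapter lands in 169.254.0.0/16 regardless of VLAN.
apipa = [
    {"node": "node1", "vlan": 711, "ip": "169.254.1.10/16"},
    {"node": "node1", "vlan": 712, "ip": "169.254.2.10/16"},
    {"node": "node2", "vlan": 711, "ip": "169.254.1.20/16"},
    {"node": "node2", "vlan": 712, "ip": "169.254.2.20/16"},
]
print(partitioned_subnets(apipa))    # ['169.254.0.0/16']

planned = assign_storage_ips(["node1", "node2"], [711, 712])
print(partitioned_subnets(planned))  # []
```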

Here's a demo of automatic storage IP addressing new in 22H2!

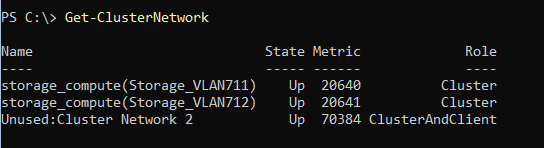

Cluster Network Naming

Description: This is one of my personal favorites. By default, failover clustering names unique subnets generically: “Cluster Network 1”, “Cluster Network 2”, and so on. These names say nothing about how each network is actually used, because clustering has no way of knowing how you intended to use them. Until now!

Once you define your configuration through Network ATC, we know how each subnet will be used: for example, which subnet carries management traffic and which carries storage network 1, storage network 2, and so on (if applicable). As a result, we can give the cluster networks more meaningful names.

In the picture below, you can see that the storage intent was applied to this set of adapters. The picture also shows an unrecognized cluster network that the administrator may want to investigate.

This also helps with diagnostics. For example, you can now more easily spot an adapter (not managed by Network ATC) that was configured in the wrong subnet, a mistake that can cause reliability issues such as congestion leading to packet loss and increased latency.
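Conceptually, the naming works like a lookup from subnet to the purpose declared in your intents. The Python sketch below illustrates that under assumptions: the `intent_subnets` mapping and the names “Management”, “Storage 1”, and “Storage 2” are hypothetical and don’t reflect the exact names Network ATC generates. Any subnet no intent accounts for keeps a generic “Cluster Network N” name, which is exactly the kind of network worth investigating.

```python
import ipaddress

def name_cluster_networks(discovered, intent_subnets):
    """Name each discovered subnet after the intent purpose that uses it.

    discovered: subnets clustering detected, in detection order.
    intent_subnets: {subnet: purpose} known from the configured intents.
    """
    known = {ipaddress.ip_network(s): purpose for s, purpose in intent_subnets.items()}
    names, unknown = {}, 0
    for s in discovered:
        purpose = known.get(ipaddress.ip_network(s))
        if purpose is None:
            unknown += 1
            purpose = f"Cluster Network {unknown}"  # generic name: investigate
        names[s] = purpose
    return names

names = name_cluster_networks(
    ["10.0.0.0/24", "10.71.1.0/24", "10.71.2.0/24", "192.168.50.0/24"],
    {"10.0.0.0/24": "Management", "10.71.1.0/24": "Storage 1", "10.71.2.0/24": "Storage 2"},
)
for subnet, name in names.items():
    print(f"{subnet:<18} {name}")
```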

Here's a demo of the Cluster Network Naming feature in action:

Live Migration Improvements

Description: This was another improvement directly from customer feedback. Live migration management across cluster nodes has a few rules and best practices to ensure that you get the best experience. For example, do you know how many simultaneous live migrations Microsoft recommends? Did you know that the recommended transport (SMB, Compression, TCP) is dependent on the amount of allocated bandwidth?

These sorts of nuances are right up Network ATC’s alley, and you no longer have to worry about them, even if the recommendations change in future versions of the operating system. Network ATC always keeps you aligned with the recommended guidelines for your version of the operating system (as a reminder, you can always override them). With that in mind, here are the live migration settings Network ATC manages:

- The maximum number of simultaneous live migrations: Network ATC will ensure that the recommended value is configured by default and maintained across all cluster nodes (drift correction).

- The best live migration network: Network ATC will determine the best network for live migration and automatically configure the system.

- The best live migration transport: Network ATC will choose the best live migration transport (SMB, Compression, TCP) given your configuration.

- The maximum amount of SMBDirect (RDMA) bandwidth used for live migration: If SMBDirect (RDMA) is chosen to perform the live migration, Network ATC will determine the maximum amount of bandwidth to be used for live migration to ensure that there is bandwidth for Storage Spaces Direct.
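Drift correction, mentioned in the first bullet, means comparing each node’s actual settings against the desired state and fixing any node that has drifted, with your overrides taking precedence over the recommended defaults. A minimal Python sketch follows; the setting names and the values in `RECOMMENDED` are hypothetical placeholders, not Microsoft’s published recommendations.

```python
# Placeholder values for illustration only; NOT Microsoft's recommendations.
RECOMMENDED = {"max_simultaneous_migrations": 2, "transport": "SMB"}

def desired_state(recommended, overrides):
    """User overrides take precedence over the recommended defaults."""
    return {**recommended, **overrides}

def find_drift(desired, nodes):
    """Return {node: {setting: desired_value}} for every node that drifted."""
    drifted = {}
    for node, actual in nodes.items():
        wrong = {k: v for k, v in desired.items() if actual.get(k) != v}
        if wrong:
            drifted[node] = wrong
    return drifted

def correct_drift(desired, nodes):
    """Apply the desired values in place (stand-in for reconfiguring each node)."""
    for node, fixes in find_drift(desired, nodes).items():
        nodes[node].update(fixes)

nodes = {
    "node1": {"max_simultaneous_migrations": 2, "transport": "SMB"},
    "node2": {"max_simultaneous_migrations": 4, "transport": "SMB"},  # drifted
}
desired = desired_state(RECOMMENDED, {})
print(find_drift(desired, nodes))  # {'node2': {'max_simultaneous_migrations': 2}}
correct_drift(desired, nodes)
print(find_drift(desired, nodes))  # {}
```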

Proxy Configuration

Description: Azure Stack HCI is a cloud service that resides in your data center. That means that it must connect to Azure for registration, and for some customers that means the nodes must have a proxy configured.

In 22H2, Network ATC can help you configure all cluster nodes with the same proxy configuration information if your environment requires it. By setting the proxy configuration in Network ATC, you get the benefit of a one-time configuration for all nodes in the cluster. This means all nodes have the same information, including future nodes that join the cluster down the road.
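The “configure once, apply everywhere” pattern can be modeled with a cluster-level setting that is pushed to existing nodes and to any node that joins later. The Python sketch below is purely illustrative: the `Cluster` class, its methods, and the proxy values are hypothetical and say nothing about how Network ATC stores the setting internally.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Cluster:
    """Hypothetical model: one cluster-wide proxy definition, applied per node."""
    proxy: Optional[dict] = None
    nodes: dict = field(default_factory=dict)  # node name -> applied settings

    def set_proxy(self, server, bypass=()):
        # Configure once at the cluster level, then push to every current node.
        self.proxy = {"server": server, "bypass": tuple(bypass)}
        for name in self.nodes:
            self._apply(name)

    def add_node(self, name):
        # A node joining later receives the same proxy settings automatically.
        self.nodes[name] = {}
        self._apply(name)

    def _apply(self, name):
        if self.proxy is not None:
            self.nodes[name]["proxy"] = dict(self.proxy)

cluster = Cluster()
cluster.add_node("node1")
cluster.add_node("node2")
cluster.set_proxy("http://proxy.contoso.com:3128", bypass=["*.contoso.com"])
cluster.add_node("node3")  # joins after the proxy was configured
print({n: s["proxy"]["server"] for n, s in cluster.nodes.items()})
```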

Stretch S2D Cluster support

Description: One of the most anticipated features of 20H2 was Stretch Storage Spaces Direct (S2D). This allows you to “stretch” your S2D cluster across physical locations for disaster recovery purposes.

With a Stretch S2D Cluster, the nodes are placed into different sites. Nodes in the same site replicate storage using high-speed networks. Nodes in separate sites replicate their storage using Storage Replica. Storage Replica networks operate much like regular storage networks, with two primary differences (see the documentation for more information):

- Storage Replica networks are “routable”

- Storage Replica networks cannot use RDMA

Network ATC deploys the configuration required for the Storage Replica networks. Because these adapters need to route across subnets, we don’t assign any IP addresses to them, so you’ll need to assign these yourself. Other than that, these adapters are ready to go.
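Because Storage Replica adapters are left without addresses, a quick pre-flight check is handy after you assign them yourself. This hypothetical Python sketch flags replica adapters that have no IP address, have a malformed one, or have RDMA enabled, the points called out above. The adapter records and field names are illustrative assumptions, and the check does not test actual routing between sites.

```python
import ipaddress

def replica_adapter_issues(adapters):
    """Return human-readable issues for Storage Replica adapters.

    Each adapter is a dict with 'node', 'name', 'ip' (None if unassigned)
    and 'rdma' (bool). Field names are illustrative.
    """
    issues = []
    for a in adapters:
        label = f"{a['node']}/{a['name']}"
        ip = a.get("ip")
        if not ip:
            issues.append(f"{label}: no IP address assigned")
        else:
            try:
                ipaddress.ip_interface(ip)
            except ValueError:
                issues.append(f"{label}: invalid IP address {ip!r}")
        if a.get("rdma"):
            issues.append(f"{label}: RDMA enabled on a replica adapter")
    return issues

adapters = [
    {"node": "siteA-node1", "name": "Replica1", "ip": "10.10.1.11/24", "rdma": False},
    {"node": "siteB-node1", "name": "Replica1", "ip": None, "rdma": True},
]
for issue in replica_adapter_issues(adapters):
    print(issue)
```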

Scope detection

Description: This one is simple, but it’s still one of my favorites because it’s a huge time saver. In 21H2, once a node is part of a cluster, Network ATC requires you to specify the -ClusterName parameter with every command, even if you’re running the command locally on a node in that cluster. If you don’t realize this, it can cause confusion, because Network ATC might not show you the information you expected. At best, it’s an annoyance that forces you to run the command again with the right parameter.

Now with 22H2, Network ATC automatically detects whether you’re running the command on a cluster node. If you are, you no longer need to specify the -ClusterName parameter, because Network ATC detects the cluster you’re on, preventing confusion and frustration.
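The scope-detection rule boils down to: use -ClusterName if you pass it, otherwise fall back to the cluster the local node belongs to. A Python sketch of that decision, contrasted with the 21H2 behavior described above; the membership lookups `on_cluster_node` and `standalone_node` are hypothetical stand-ins for however a node discovers its cluster.

```python
from typing import Callable, Optional

def resolve_scope_21h2(cluster_name: Optional[str]) -> Optional[str]:
    # 21H2 as described above: without -ClusterName the command is not
    # cluster-scoped, even when run on a cluster node.
    return cluster_name

def resolve_scope_22h2(cluster_name: Optional[str],
                       local_cluster: Callable[[], Optional[str]]) -> Optional[str]:
    """An explicit -ClusterName wins; otherwise use the local node's cluster.
    None means the node isn't clustered, so the command stays node-local."""
    if cluster_name:
        return cluster_name
    return local_cluster()

def on_cluster_node() -> Optional[str]:
    return "HCI-Cluster01"  # hypothetical cluster name

def standalone_node() -> Optional[str]:
    return None

print(resolve_scope_21h2(None))                      # None
print(resolve_scope_22h2(None, on_cluster_node))     # HCI-Cluster01
print(resolve_scope_22h2("Other", on_cluster_node))  # Other
print(resolve_scope_22h2(None, standalone_node))     # None
```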

Modify VLANs after deployment

Description: With 21H2, there was no way to directly modify the management or storage VLANs once you deployed the intent. The workaround was to remove the intent and then re-add it with the new VLANs. This process was non-disruptive (you didn’t have to recreate any already-deployed configuration like virtual switches, move VMs, or anything else), but it was annoying.

It was particularly painful for customers who had several overrides as part of their intent. They had to gather the overrides before removing the intent (these are stored as expandable properties in several places, such as Get-NetIntentStatus), then remove the intent and re-add it with both the overrides captured from Get-NetIntentStatus and the new VLANs. That’s a lot of work for something as simple as changing VLANs.

Now you can instead use Set-NetIntent to modify the management or storage VLANs, just as you would with Add-NetIntent.
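The difference between the two workflows can be modeled in a few lines. In this Python sketch an intent is a plain dict, and the function names, adapter names, and override key are hypothetical; none of them correspond to Network ATC internals. The point is that the 21H2 path must carry overrides across a remove and re-add, while a Set-style update touches only the VLAN fields and reaches the same result.

```python
import copy

def change_vlans_21h2(intents, name, storage_vlans):
    """21H2 workaround: capture the intent (with overrides), remove it, re-add it."""
    captured = copy.deepcopy(intents[name])  # gather adapters and overrides first
    del intents[name]                        # remove the intent
    captured["storage_vlans"] = list(storage_vlans)
    intents[name] = captured                 # re-add with the new VLANs

def change_vlans_22h2(intents, name, storage_vlans=None, management_vlan=None):
    """22H2 Set-style update: change only the VLAN fields; overrides are untouched."""
    intent = intents[name]
    if storage_vlans is not None:
        intent["storage_vlans"] = list(storage_vlans)
    if management_vlan is not None:
        intent["management_vlan"] = management_vlan

base = {"storage": {"adapters": ["pNIC3", "pNIC4"],
                    "storage_vlans": [711, 712],
                    "overrides": {"JumboPacket": 9014}}}
old_way, new_way = copy.deepcopy(base), copy.deepcopy(base)
change_vlans_21h2(old_way, "storage", [811, 812])
change_vlans_22h2(new_way, "storage", storage_vlans=[811, 812])
print(old_way == new_way)  # True
```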

Bug Fixes

We also fixed several bugs.

Provisioning Fails if *NetworkDirectTechnology is Null

Description: As you may already know, any adapter property with an asterisk in front of it is a Microsoft-defined adapter property, and NIC vendors implement those keys as part of our certification process. You can see these properties with the Get-NetAdapterAdvancedProperty cmdlet. Any property without the asterisk is defined by the NIC vendor. Importantly, vendors must implement the asterisk properties exactly as Microsoft defines them. As a simple example, if a key is expected to have values between 1 and 5, the vendor shouldn’t allow values outside that range, because they might not be handled correctly.

In this case, we found that some customers were using inbox drivers, which are intended only for expedient OS deployment. With some vendors’ inbox drivers, the *NetworkDirectTechnology property was left null, which Network ATC did not handle correctly.

First, please never use an inbox driver in production; these drivers are not supported for production use. Second, we have fixed Network ATC to handle a null advanced property more appropriately.
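This class of bug is easy to reproduce: code that assumes an advanced property always has a value breaks when a driver reports null. The Python sketch below contrasts a fragile read with a defensive one. The property dictionaries and the “treat null as not reported” policy are illustrative assumptions, not the actual fix shipped in Network ATC.

```python
from typing import Optional

KEY = "*NetworkDirectTechnology"

def naive_rdma_technology(properties: dict) -> str:
    # Fragile: assumes a non-null string and raises AttributeError on None.
    return properties[KEY].strip()

def rdma_technology(properties: dict) -> Optional[str]:
    """Read the property defensively: None when the key is missing or the
    driver left it null, instead of failing during provisioning."""
    value = properties.get(KEY)
    if value is None or str(value).strip() == "":
        return None
    return str(value).strip()

inbox_driver = {KEY: None}   # property left null
vendor_driver = {KEY: "4"}   # illustrative populated value
print(rdma_technology(inbox_driver))   # None
print(rdma_technology(vendor_driver))  # 4
```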

VM Deployment

Description: Running the Azure Stack HCI OS in a VM environment is an unsupported scenario. However, having a VM environment to run tests, validate functionality, and set up demos is extremely beneficial. We do this ourselves quite often using the Azure Stack HCI Jumpstart module.

When deploying the Azure Stack HCI OS and Network ATC in a VM-based lab environment, you need to make a few modifications for the setup to work:

NetworkDirect

To have a functional setup in a VM environment, you need to disable RDMA (NetworkDirect). To do this, follow the instructions in our documentation.

Number of vCPUs

For Receive Side Scaling (RSS), Network ATC requires each VM to have at least 2 vCPUs. You can verify this with the following command:

The number of cores reported by this command should be 2 or more.
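If Python happens to be available in the guest, the 2-vCPU requirement can also be cross-checked with the sketch below. This is an assumption-laden alternative, not the command from the original post: `os.cpu_count()` reports the logical processors visible to the VM, which generally matches the vCPU count but is not necessarily the same figure as a per-processor core count.

```python
import os

MIN_VCPUS = 2  # Network ATC's RSS requirement, per the text above

def meets_vcpu_requirement(count=None):
    """True if the VM has at least MIN_VCPUS logical processors.

    os.cpu_count() returns None when the count can't be determined;
    treat that as failing the check.
    """
    if count is None:
        count = os.cpu_count() or 0
    return count >= MIN_VCPUS

print(f"vCPUs: {os.cpu_count()}, requirement met: {meets_vcpu_requirement()}")
```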

Wrapping up

With the 22H2 release of the Azure Stack HCI OS, Network ATC has taken significant strides toward simplifying networking deployments. We have introduced a range of new features and improvements, predominantly driven by your feedback! These include increased network stability with Network Symmetry, simpler network deployment with Automatic Storage IP Addressing, and management of additional properties like the system proxy, among many others!

Your feedback continues to drive improvements in Network ATC. We look forward to hearing your feedback in the comments below.

Until next time,

Dan Cuomo and Param Mahajan