This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Scenario

I recently ran into a Kustomer that migrated from TSI to ADX (Azure Data Explorer). They were really excited about using Kusto Trender but one item they couldn't wrap their head around was how to update their hierarchy table(s) in ADX. i.e.

As a big data platform ADX is an append-only data store, so we don't have the options to do updates, right? Well, that's not completely true. We absolutely don't support updates, but we do have a couple options to simulate updates.

Time-series database designs stem from records being written as append-only or as new rows added to a table. Consider a bank statement, it typically has rows of credits and debits only, leading to a running balance. The transactions themselves aren't updated, instead a new row is added as a credit or debit. This provides the ability to track changes to the overall system as inserts, resulting in an append-only pattern. The ability to record each and every change to the system as a new, different row is what makes time-series data so powerful.

So how do we take an append-only data store and do updates? Here are the two most common options.

Option 1: Utilize a Materialized-View (Recommended)

Materialized Views in ADX can be used to simulate updates via the arg_max operator. For example, let's say your data has a column called EnqueuedTime that is the time it was submitted for ingestion to ADX. Then your materialized view could use the following KQL query:

If you ingest a row with a more-recent EnqueuedTime but the same value for ColumnA and ColumnB, then the new row will be the only one present in the Materialized-View. Checkout how materialized-views work.

Option 2: Row Level Deletion

We now have the capability to do row level deletes in ADX. In general materialized views are your better option for updates but if you have a scenario where you rarely update the table then this can be an option. If you try to use this option for frequent updates you will cause performance issues on your cluster. With that caveat aside the process is straightforward, you simply delete the row and then ingest the updated record.

KQL Script

Interested in seeing how this works? You can use the bellow KQL example to walk through the process by yourself. Given that Option 1 is the preferred method that is what we'll show below.

Don't have an Azure Data Explorer Cluster, no problem you can utilize our free offering at https://aka.ms/adx.free. Full script available here.

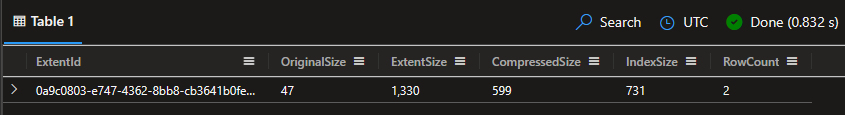

1. Let's start by in-line creating a table from a datable object.

2. Next, we'll fix the fname of id 02 by inserting/appending a new row to our table with the new fname value. Then, we'll query MyTable to summarize by id and get the latest/updated values based on the most recent ingestion time using arg_max().

3. Ideally, you'd have a datetime column in your dataset instead of relying on the system's ingestion_time() scalar function. So let's add one and retry the analytics. For example, I will set values for our new column enquedDate to now() in our table from itself by appending all rows.

4. Now that we have more clarity on how to deal with updates, we'll want to create a materialized-view to persist our logic for common querying. In this case, I'll use the backfill parameter for the view to index already existing data in MyTable.

5. Lets add more sample data, plus an update again as a new row for id 3 and to see it all together.

6. Challenge: Can you update Hiram's age?

Did you find this information helpful? Let us know in the comments below. On a future post we will write more regarding Option 2: Row level deletes.

Thank you!