This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Sharing Metadata Across Different Databricks Workspaces

Contents

Important Steps for the configuration.

Hive External metastore database.

Background

Every Databricks deployment comes with a managed built-in Hive metastore. (If you aren’t familiar, a Hive metastore is a database that holds metadata about our data, such as the paths to the data in the data lake and the format of the data (parquet, delta, CSV, etc.)) Instead of using the out-of-the-box Hive metastore wouldn't it be great to have one central metastore and have multiple workspaces point to that metastore. Some scenarios call for an external metastore that is shareable and independent of the workspace's existence. Well, this article will cover the end-to-end process of setting up an external metastore using Azure SQL DB and connecting multiple workspaces to that metastore.

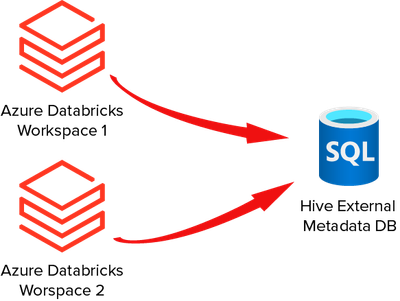

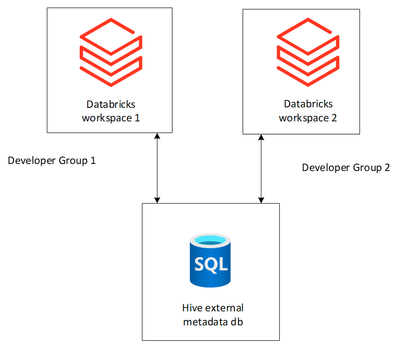

Scenario 1: Consider the scenario where we have several developer groups, and they all want to use their own Databricks Workspace for their development. However, they have a requirement that they must collaborate with each other. By default, Databricks uses the local built-in metastore in DBFS file system to keep the logical schema of all the Delta and Hive tables. If they start registering the databases and tables in their workspaces separately, we will end up having multiple metadata spread across the different workspaces which would make it harder for the teams to collaborate.

A solution to this is to create Hive external metastore that different Databricks Workspaces can share, and each of the Workspaces can register and use the commonly shared metastore. We will be detailing the end-to-end process that is required to set this up in the following steps.

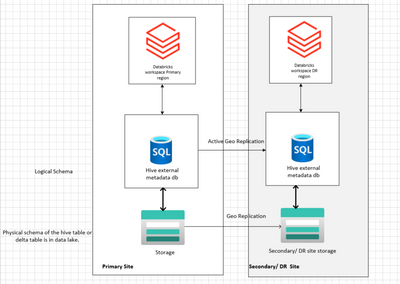

Scenario 2: Now let's paint the picture for Disaster Recovery. Imagine we have a requirement where we need to create a Disaster Recovery site for the primary Databricks Workspace. In addition, one of our requirements calls for minimum down time while failing over from the primary site to the Disaster Recovery site.

We could use the external Hive metastore database to store the metadata and make our lives easier. The moment disaster happens we can basically attach the replicated Hive metastore database in secondary region with the secondary Databricks Workspace (DR site). One of the huge advantages is we won’t have the overhead to run the DDL scripts again and create the databases or table in the secondary site as the Hive external metastore will already have the logical schema.

(Note: We will discuss the Disaster Recovery scenario above and how to use an external Hive metadata store with Azure Databricks for DR in this blogpost in our series.)

Reference link(s):

Data Redundancy in Azure Storage

Disaster Recovery for azure sql db

Hive External Metastore

Here are the steps that we can follow to create the external Hive metastore. Remember the config values are dependent on the Hive version that we are using, and the Hive version is dependent on the Databricks runtime version.

Prerequisites

- Two databricks workspaces are created.

- You have created an Azure SQL server and a database which will store the Hive metastore. You can provide any name for the Azure SQL server, and Azure SQL DB.

- You have a storage account (preferably ADLS g2) where the table’s data would be stored (e.g., Data Lake).

- You have already created a Service Principal, and you noted down the Application ID, Application secret, Tenant ID. To create a Service Principle in the Azure Portal the steps are here.

- Provide the storage data blob contributor access in the storage account that is created in #3 to the service principal.

Important steps for the configuration

Below we are going to create a Hive external metastore that will be shared by two Databricks Workspaces (scenario 1 from the background section above).

Create Hive metastore schema

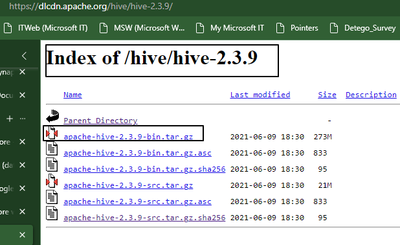

- Download the DDL script for the Hive metadata creation from here. Since I am using 2.3.7, I am selecting the 2.3.x version.

- Unzip the downloaded file, and you will see the folder structure like below. Then select the hive-schema-2.3.0.mssql.sql file.

- Run the script in your Azure SQL database. This database is going to be our Hive external metastore.

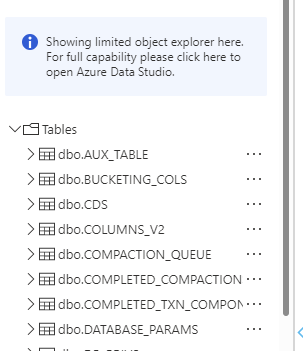

- Post running the script you will see that the Hive metastore schema was created. (See image below.)

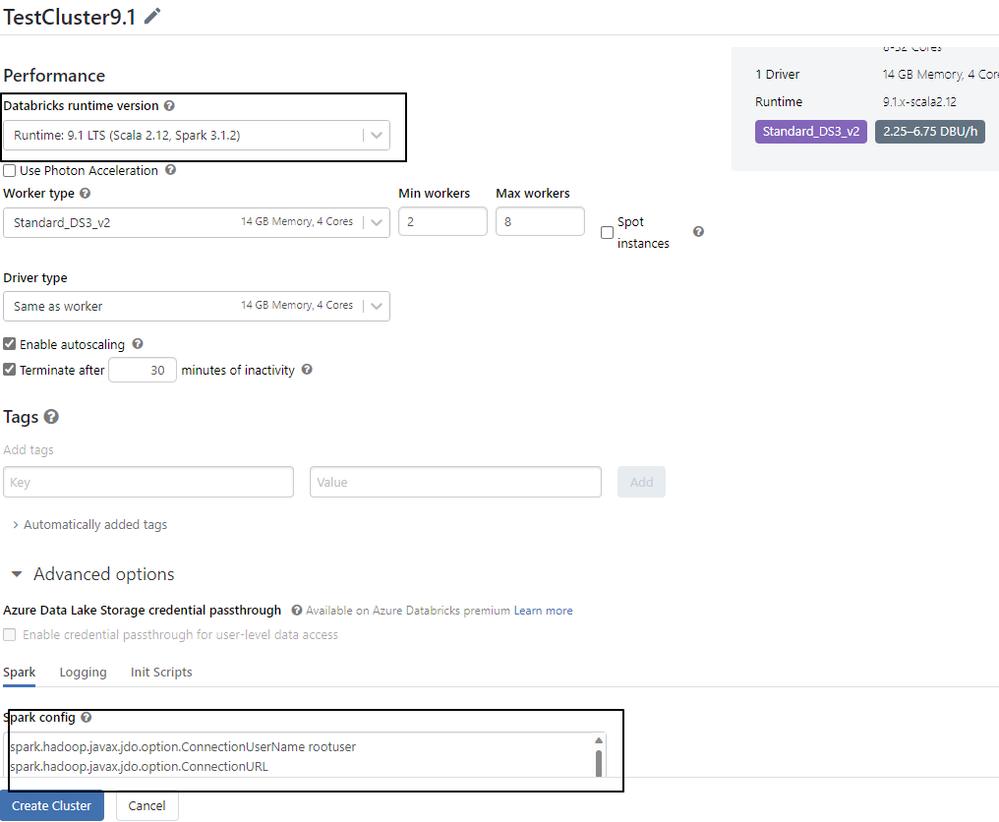

Cluster creation and spark configuration

- In both workspaces, create a cluster with Databricks runtime version. Hive 2.3.7 (Databricks Runtime 7.0 - 9.x) or Hive 2.3.9 (Databricks Runtime 10.0 and above) and with the below configurations.

- In the spark configuration, provide the below config values:

Note:

- Since we used the databricks runtime version 9.1, we provided the hive version as 2.3.7: spark.sql.hive.metastore.version 2.3.7

- For the Hive version 2.3.7 and 2.3.9, we don’t need to explicitly mention the jar file location. We can just provide builtin: spark.sql.hive.metastore.jars builtin

- For the password or secrets, you can use Databricks Secrets. We need to provide the config value as follows: javax.jdo.option.ConnectionPassword {{secrets/xxxscope/xxxsecretname}}

- In the above example we provided the password in clear text which is not recommended.

- For production environments, it is recommend that you set hive.metastore.schema.verification to true. This prevents the Hive metastore client from implicitly modifying the metastore database schema when the metastore client version does not match the metastore database version.

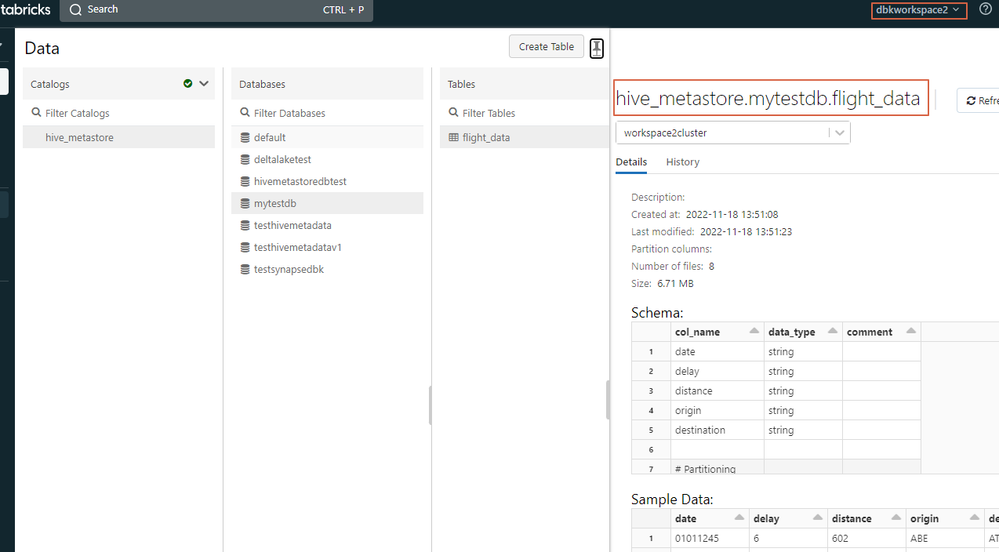

- Post creating the cluster you will find that the Hive metastore to be present in the catalogs section of your workspace. The Hive metastore will always have a default database.

Database and table creation

- We are going to create a mount-point for an external storage account that will store the actual data.

- Now let's create a Delta Table

- Once the Delta Table is created, we can see the Delta Table in the database section in both Workspaces.

In dbkworkspace1

- In dbkworkspace2, if you navigate to the database, you will see an error. This is because we have only attached the external Hive metastore which contains the logical metadata of the table. The physical metadata or the folder structure is present in the Data Lake that we need to mount with the dbkworkspace2 to view the data.

- Mount the storage account in dbkworkspace2 that we did #8 for dbkworkspace1. We should always give the same mount point name. Once we mount the storage account with same name, we can see the data.

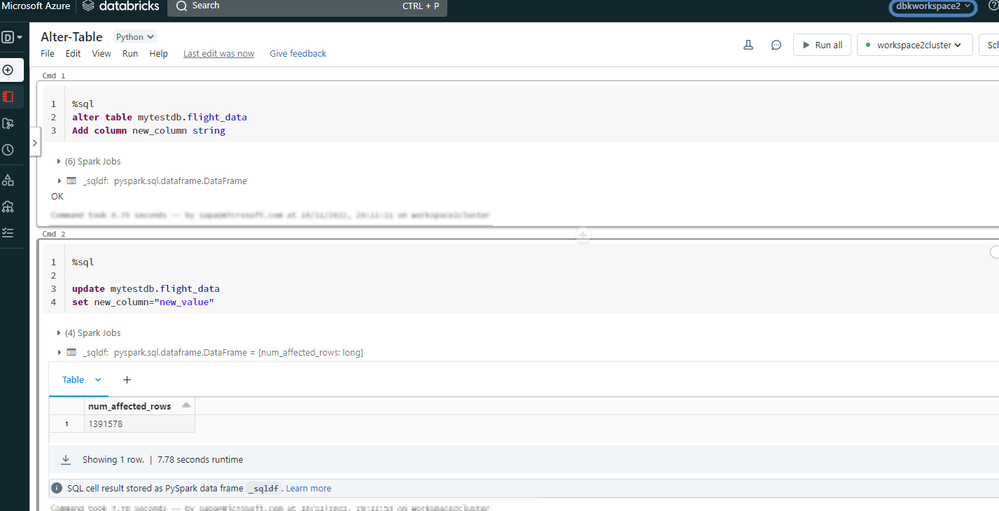

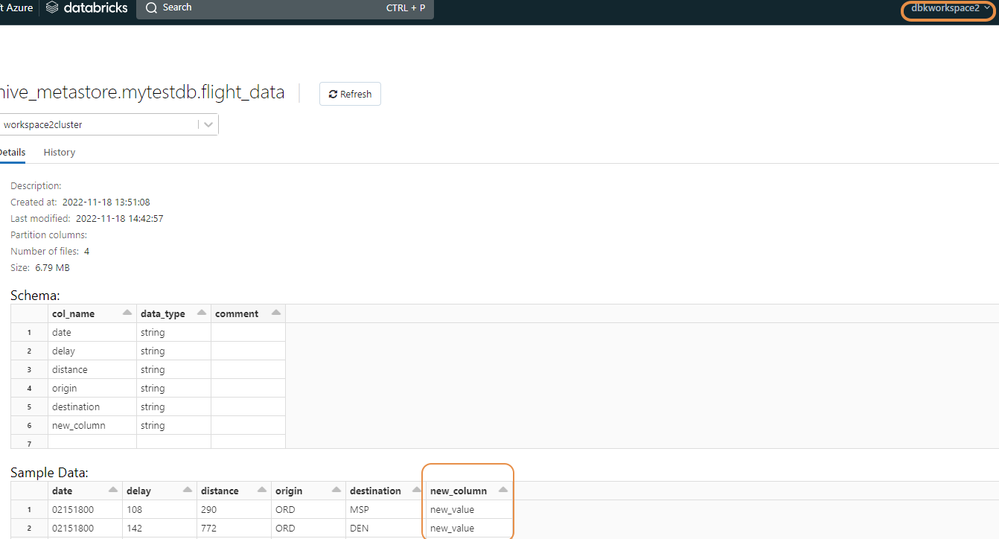

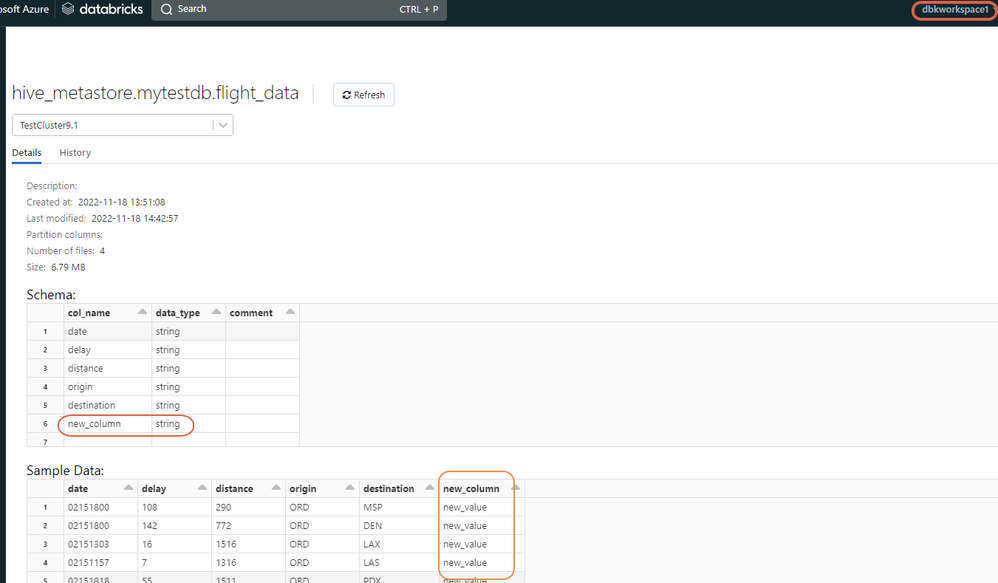

Let’s make some change to the table from the dbworkspace2 –

In dbkworkspace1, we can see the changes-

Hive External Metastore Database

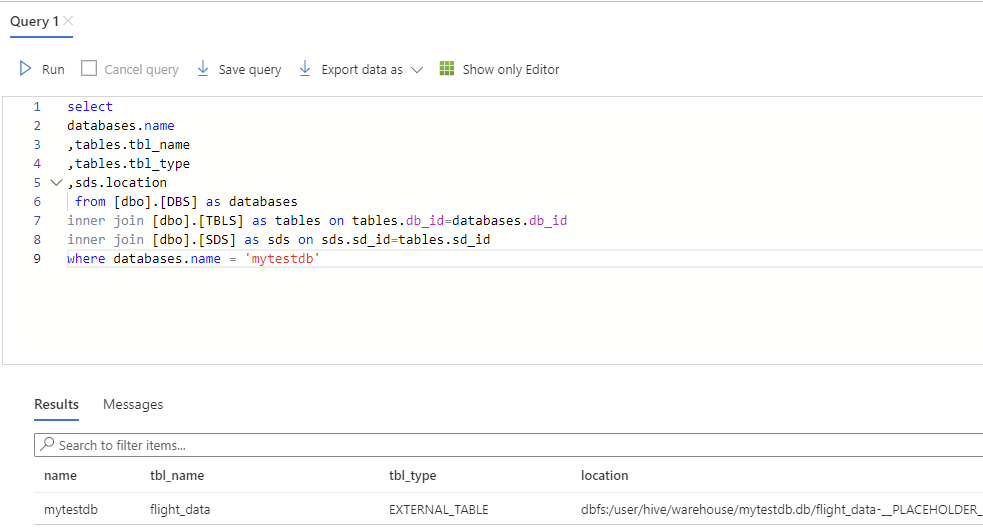

Running the below query will give us the information about the tables that are registered in hive external metastore for a given database

The Hive external metostore contains the pointer to the external storage.

Here is the pointer for the database - dbfs:/user/hive/warehouse/mytestdb.db

Now when we look at the table, we see the actual path is being mapped with the table.

Final Thoughts

Whether you have a simple Databricks setup or a complicated one, every workspace in Azure Databricks has a Hive metastore that is critical to your Databricks jobs, your collaboration and your disaster recovery. Leveraging the above setup will allow you to paint a better picture for sharing tables across the business, sharing a metastore so that different workspaces can register their data into a commonly shared metastore and simplifying your disaster recovery setup. We hope that you got some new insights and they come in handy. The only thing left is to get your hands dirty and have fun!