This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Introduction

In this blog, we talk about steps to follow to move data from Azure SQL Database to Lakehouse using Copy activity of Data Pipeline integrated within Lakehouse.

If you are interested in bringing mainframe/Db2 data to Fabric, please read our blog here or if you are looking to copy data between Azure Data Services using Fabric, read our other blog here

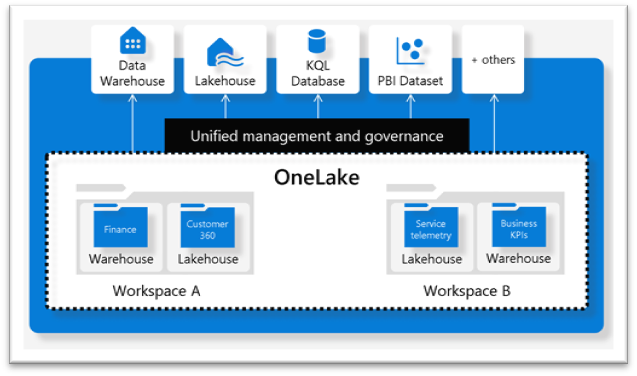

Before we move forward, let’s understand how OneLake and Lakehouse are related to each other:

-

OneLake is a single, unified, logical data lake for the whole organization. Like OneDrive, OneLake comes automatically with every Microsoft Fabric tenant and is designed to be the single store for all your analytics data.

-

Lakehouse is a data item in OneLake that will store, manage, and analyze structured and unstructured data in a single location.

OneLake is built on top of Azure Data Lake Storage Gen2 and supports any type of file, structured or unstructured. All Fabric data items such as data warehouses and store their data automatically in OneLake in delta parquet format. This means when a data engineer loads data into a Lakehouse using Spark and a SQL developer in a fully transactional data warehouse uses T-SQL to load data, everyone is still contributing to building the same data lake.

Architecture Overview

This architectural diagram shows the components used in the solution for moving data from Azure SQL Database to Microsoft Fabric Lakehouse.

Since Microsoft Fabric is new to many of you, let’s start by creating a Lakehouse.

Step 1: Create a Lakehouse in your OneLake tenant.

Once you’re in PowerBI Workspace, click on the

Once you click on Data Engineering, you will be redirected to your workspace (referred as My Workspace) where you will get an option to create items like Lakehouse, Notebook, Spark Job etc.

For the scope of this blog, we will create a new Lakehouse and name it lakehouse4blog:

Once the Lakehouse is created, you will be redirected to its homepage which will have its own "Explorer". Within this Lakehouse, you can create your own tables or files under the Tables and Files branches respectively. Note that Lakehouse creates a serving layer by auto-generating a SQL endpoint and a default dataset during creation. This new see-through functionality allows users to work directly on top of the delta tables in the lake to provide a frictionless and performant experience all the way from data ingestion to reporting.

Here is how the Lakehouse homepage will look like, you can consider it as a landing zone for Lakehouse also:

Step 2: Get Data into Lakehouse

In this step, we will get data into the Lakehouse that we have created. There are a number of ways to get the data into Lakehouse, however, we will use Data Pipeline in this blog.

Click on the Get data dropdown menu and select New data pipeline and mention the pipeline name and Create.

Step 3: Setting up the data pipeline

In this step, we will set up the data pipeline by choosing the data source and destination, connecting to both source and destination, and choosing the source table(s) from which data is to be migrated.

Step 3(a): Setting up the Data Source

First, we select the data source, in this case, it’s Azure SQL Database, followed by creating a new connection to the source database as depicted below:

Step 3(b): Setting up the Data Source

Once the connection to the database is successful, you need to select a table from the database or perhaps, write a query to get only the data that you need to move to Lakehouse. In this example, we will select a table and move all its records to Lakehouse.

The table name is customers with just a few records for demo purposes only.

Step 3(c): Choose data destination

As we have initiated the data pipeline from within Lakehouse, this page will consider the destination as Lakehouse and will have its pre-populated name, which in this case is lakehouse4blog

You may choose to create a new Lakehouse and connect to it right from the same window.

Step 3(d): Choose data destination

Here, we need to choose if we want to put data into Tables or Files in Lakehouse. To achieve seamless data access across all compute engines in Microsoft Fabric, Delta Lake is chosen as the unified table format.

In this example, we choose Tables as a preferred destination.

Step 3(e): Review and Save

Here, you must review the data sources, destination, etc., and proceed.

Our advice is to uncheck the box for “Start data transfer immediately”.

The reason to uncheck the box for Start data transfer immediately is to verify the pipeline once it’s created and make some modifications to it which otherwise are not possible. For example, if the data size is quite large and you want to use partitioning to move the data (parallel read and write based on partitions) you can do so now. Also, you now have the option to use “Upsert” which otherwise was not possible in the Copy data into Lakehouse window.

Step 4: Validate the Copy data pipeline and execute

In this step, you can validate or change settings like partitioning, table options, schema mapping, DOP, etc. Let’s run the pipeline when ready.

Once the pipeline is executed successfully, you should be able to see the data migrated to Lakehouse.

Go to the Lakehouse Explorer and under “Tables” you should be able to see the “customers” table.

Summary

In the above writeup, we have used Microsoft Fabric data pipelines to copy data from Azure SQL Database to Lakehouse (OneLake). You can modify the pipeline to use partitioning, table options etc. if the data to be moved is large. It’s quite straightforward and should work with other data sources in a similar way. Try it out!

Feedback and suggestions

If you have feedback or suggestions for improving this data migration asset, please contact the Azure Databases SQL Customer Success Engineering Team. Thanks for your support!