This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Deploy an Azure AI Custom Vision App on Azure App Service with Zero Downtime Deployment using Deployment Slots

In this tutorial, you will use the Custom Vision client library for Node.js to create a Custom Vision classification model and deploy a web app that uses it on Azure App Service. You will also use App Service deployment slots to avoid downtime during redeployment, so that you can build, test, and roll out changes gradually without affecting users.

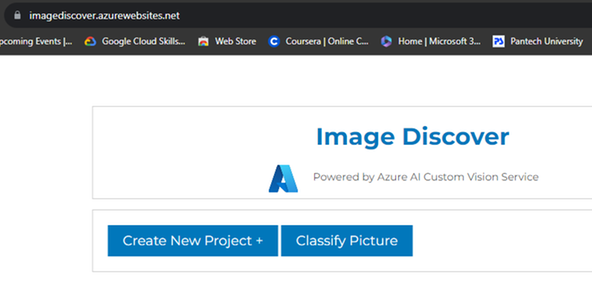

A Quick Look at our Demo App

You are going to build a model creation and inference site for image classification called “Image Discover”. It creates an Azure AI Custom Vision project, trains a classification model on the images you upload, and then runs inference against the trained model to classify an uploaded image.

Prerequisites

- Node.js 18 or later (Long Term Support, LTS), which comes with npm. Node 16 may also work, but it is being discontinued as an Azure App Service runtime stack towards the end of 2023.

- Visual Studio Code

- A GitHub account

- An active Azure subscription

- Git

Create a Custom Vision Resource in Azure AI Service’s Custom Vision

Before you do anything else, you need the keys and endpoints that the Custom Vision client library for Node.js uses to authenticate. You get these credentials by creating Custom Vision resources. Follow these steps.

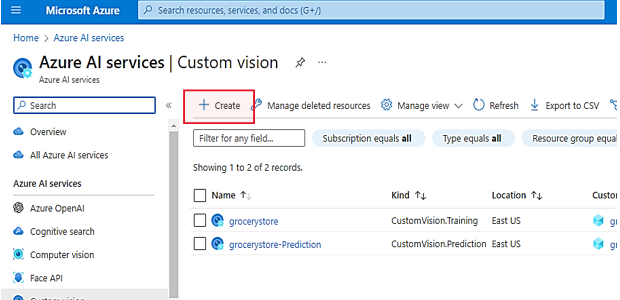

- On the Azure portal home page, type “custom vision” in the search bar and click the Custom Vision result.

- On the Azure AI Services | Custom Vision page, click Create to create a new resource.

- In Create Custom Vision, choose Both under Create options, because you need a training resource and a prediction resource. Enter the required details, then go to Review + create.

Setting up the Application Environment

- Open up a terminal then create a directory (folder) where your project is going to reside.

      $ cd desktop
      $ mkdir customvision-app
      $ cd customvision-app

- Then initialize the application and install all the required modules.

      $ npm init -y
      $ npm i express ejs dotenv body-parser multer
      $ npm install @azure/cognitiveservices-customvision-training
      $ npm install @azure/cognitiveservices-customvision-prediction
      $ npm i nodemon -D
      $ code .

- After Visual Studio Code opens and fully loads, open the generated package.json file, remove the test script, and replace it with the start and dev scripts, so that the scripts object reads as follows:

      {
        "start": "node app.js",
        "dev": "nodemon app.js"
      }

- Get the keys and endpoints of your Azure Custom Vision training and prediction resources.

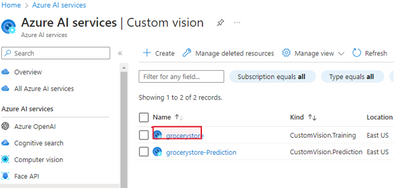

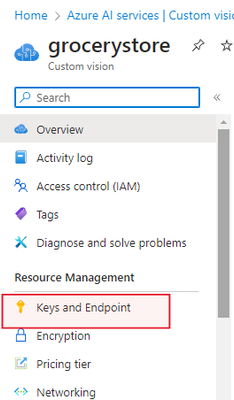

- Go to your resource. In the example below, the training resource is named grocerystore.

- Under Resource Management in the left panel, click Keys and Endpoints.

- Copy the endpoint and one of the keys. In this case you are copying the ones from the training resource; remember to do the same for your prediction resource.

- Go to your prediction resource, for example grocerystore-prediction. Go to Resource Management > Properties and copy the Resource ID. This is your prediction resource ID.

- Create a .env file in the root directory of your project with the following variables.

      PORT=<your custom port number>
      resourceTrainingKEY=<your training key>
      resourceTrainingENDPOINT=<your training endpoint>
      resourcePredictionKEY=<your prediction key>
      resourcePredictionENDPOINT=<your prediction endpoint>
      resourcePredictionID=<your prediction resource id>
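A missing or misspelled variable in .env tends to surface only later, as an authentication error from the service, so it can help to check the settings when the app starts. The following is a minimal sketch and not part of the original project: the helper name findMissingSettings is hypothetical, and the variable names are taken from the .env file above. PORT is left out because the app falls back to a default port.

```javascript
// Names of the settings the app expects, as listed in the .env file above.
const REQUIRED_SETTINGS = [
  'resourceTrainingKEY',
  'resourceTrainingENDPOINT',
  'resourcePredictionKEY',
  'resourcePredictionENDPOINT',
  'resourcePredictionID',
];

// Hypothetical helper: returns the names of settings that are absent or blank.
function findMissingSettings(env) {
  return REQUIRED_SETTINGS.filter((name) => {
    const value = env[name];
    return typeof value !== 'string' || value.trim() === '';
  });
}

// Example: report problems at startup (call this after dotenv.config() has run).
const missing = findMissingSettings(process.env);
if (missing.length > 0) {
  console.warn(`Missing settings: ${missing.join(', ')}`);
}
```

Whether to only warn or to stop the process is a design choice; a warning keeps the home page reachable while you fix the file.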

Configuring a new Application

In this section, you will create a new Node.js application from scratch using Express.

- In Visual Studio Code, create a new file named app.js in your root folder, then add the following code to import Express and create an HTTP server that listens for requests.

      const express = require('express');
      const app = express();

      // Bring in the environment variables using dotenv
      const dotenv = require('dotenv');
      dotenv.config();

      // Simple route to confirm that the app is running
      app.get('/', (req, res) => {
        res.send('<h1>App Working</h1>');
      });

      const PORT = process.env.PORT || 5004;
      app.listen(PORT, () => console.log(`App listening on PORT ${PORT}...`));

The code above lets you confirm that the application is up and running and that the scripts you wrote work as expected.

- Before doing anything else, open the terminal and run the application to test that it works. The following command starts the app using nodemon, which restarts the server on every change so that you do not have to restart it manually.

$ npm run dev

Creating the Application

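You are going to replace the code in app.js with the following parts, which together make up “Image Discover”. Keep the PORT constant and the app.listen call from the previous section at the bottom of the file so that the server still starts.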

- Import necessary libraries into app.js file.

      // Required Node modules
      const express = require('express')
      const util = require('util')
      const fs = require('fs')
      const multer = require('multer')
      const trainAPI = require('@azure/cognitiveservices-customvision-training')
      const predAPI = require('@azure/cognitiveservices-customvision-prediction')
      const msREST = require('@azure/ms-rest-js')
      // Must be a string
      const publishIterationName = '<add name you want to give an iteration>'
      const setTimeOutPromise = util.promisify(setTimeout)
      const body_parser = require('body-parser')
      // const fileUpload = require('express-fileupload')
      const dotenv = require('dotenv')

      // Configure environment
      dotenv.config()

- Add necessary variables, including those for your resource's Azure endpoint and keys.

      // Variables
      const trainer_endpoint = process.env.resourceTrainingENDPOINT
      const pred_endpoint = process.env.resourcePredictionENDPOINT
      const creds = new msREST.ApiKeyCredentials({ inHeader: { 'Training-key': process.env.resourceTrainingKEY } })
      const trainer = new trainAPI.TrainingAPIClient(creds, trainer_endpoint)
      const pred_creds = new msREST.ApiKeyCredentials({ inHeader: { 'Prediction-key': process.env.resourcePredictionKEY } })
      const pred = new predAPI.PredictionAPIClient(pred_creds, pred_endpoint)
      let projectID = ''

- Create the variables that handle file uploads. The upload middleware is declared after storage, because a const cannot be used before it is defined.

      // Folder that holds your images
      const rootImgFolder = './public/images'

      // Make sure the upload folder exists; multer does not create it
      // when destination is given as a function
      fs.mkdirSync('uploads', { recursive: true })

      const storage = multer.diskStorage({
        destination: (req, file, cb) => {
          cb(null, 'uploads/') // Directory where uploaded files are stored
        },
        filename: (req, file, cb) => {
          const uniqueSuffix = Date.now() + '-' + Math.round(Math.random() * 1E9)
          cb(null, file.fieldname + '-' + uniqueSuffix + '.' + file.originalname.split('.').pop())
        },
      })

      // The storage engine takes the place of the dest option
      const upload = multer({ storage: storage })

- Create the Express app, then register the required middleware.

      // Create the Express app
      const app = express()

      // Middleware
      // Set the default view engine to EJS
      app.set('view engine', 'ejs')
      // Parse URL-encoded form data
      app.use(body_parser.urlencoded({ extended: true }))
      // Serve static assets (pictures, CSS) from the public folder
      app.use(express.static('public'))

- Create app endpoints that handle GET requests and render an HTML page.

      // Index page
      app.get('/', (req, res) => {
        res.render('index')
      })

      // Create new project
      app.get('/create-train-project', (req, res) => {
        res.render('create')
      })

      // Classify image
      app.get('/classify-image', (req, res) => {
        // Read the JSON file
        fs.readFile('id.json', 'utf8', (err, data) => {
          if (err) {
            console.error(err);
            return res.status(500).send('Error reading JSON file');
          }
          const projectsData = JSON.parse(data);
          let pred_results = []
          // Pass the projectsData to the EJS template
          res.render('classify', { projects: projectsData.projects, pred_results: pred_results });
        });
      })
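The /classify-image route above parses id.json inside the read callback, so a missing or malformed file either returns a 500 or throws from JSON.parse. One possible way to make that step more forgiving is sketched below. The helper name loadProjects is hypothetical, and the sketch assumes id.json has the shape { "projects": [ ... ] }, which is what the route's use of projectsData.projects suggests.

```javascript
const fs = require('fs');

// Hypothetical helper: reads the project list from a JSON file shaped like
// { "projects": [ { "projId": "...", "projName": "..." } ] }.
// Returns an empty list when the file is missing or malformed.
function loadProjects(filePath) {
  try {
    const data = JSON.parse(fs.readFileSync(filePath, 'utf8'));
    return Array.isArray(data.projects) ? data.projects : [];
  } catch (err) {
    return [];
  }
}
```

In the route, this could be used as res.render('classify', { projects: loadProjects('id.json'), pred_results: [] }), so the page still renders with an empty project list.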

Create Application User Interface

Before you create the UI from the EJS templates that the routes render, you have to create the necessary directories. In the root directory in Visual Studio Code, create two folders: public and views.

- In views, create the files index.ejs, create.ejs and classify.ejs. Add the following code to index.ejs, which serves as the home page.

      <!DOCTYPE html>
      <html lang="en">
      <head>
        <meta charset="UTF-8">
        <meta name="viewport" content="width=device-width, initial-scale=1.0">
        <title>Image Discover</title>
        <link rel="preconnect" href="https://fonts.googleapis.com">
        <link rel="preconnect" href="https://fonts.gstatic.com" crossorigin>
        <link href="https://fonts.googleapis.com/css2?family=Montserrat:ital,wght@0,100;0,200;0,300;0,400;0,500;0,600;0,700;0,800;0,900;1,100;1,200;1,300;1,400;1,500;1,600;1,700;1,800;1,900&display=swap" rel="stylesheet">
        <link rel="stylesheet" href="./css/style.css">
      </head>
      <body>
        <div class="container">
          <h1>Image Discover</h1>
          <center><div class="info normal"><img src="./images/azure-logo.png" alt="Microsoft Azure Logo">Powered by Azure AI Cognitive Services</div></center>
        </div>
        <div class="container layout">
          <button><a href="/create-train-project">Create New Project +</a></button>
          <button><a href="/classify-image">Classify Picture</a></button>
        </div>
      </body>
      </html>

- Add the following code to create.ejs. This file serves as the project creation page.

      <!DOCTYPE html>
      <html lang="en">
      <head>
        <meta charset="UTF-8">
        <meta name="viewport" content="width=device-width, initial-scale=1.0">
        <title>Image Discover Find</title>
        <link rel="preconnect" href="https://fonts.googleapis.com">
        <link rel="preconnect" href="https://fonts.gstatic.com" crossorigin>
        <link href="https://fonts.googleapis.com/css2?family=Montserrat:ital,wght@0,100;0,200;0,300;0,400;0,500;0,600;0,700;0,800;0,900;1,100;1,200;1,300;1,400;1,500;1,600;1,700;1,800;1,900&display=swap" rel="stylesheet">
        <link rel="stylesheet" href="./css/style.css">
      </head>
      <body>
        <div class="container">
          <h1>Image Discover - Create Project</h1>
          <div class="info"><p>To create a new classification project, please enter the new project name. Then use the file upload button to upload 5 to 10 images and specify the label of the group you have uploaded.</p></div>
          <br> <br>
          <center><p><span id="total-images">0</span> File(s) Selected</p></center>
          <div id="image-showcase"></div>
          <br> <br>
          <form action="/create-train-project" method="POST" enctype="multipart/form-data">
            <div class="field">
              <input type="text" name="projName" id="projName" placeholder="new project name">
            </div>
            <div class="field">
              <input type="text" name="projTag" id="projTag" placeholder="enter tag e.g. orange">
            </div>
            <div class="field">
              <input type="file" name="image" id="image" multiple accept=".png, .jpg, .bmp, .jpeg">
            </div>
            <div class="field">
              <button type="submit">Create New Project +</button>
            </div>
          </form>
        </div>
      </body>
      </html>

The file input carries no inline onchange attribute, because no createPreview function is defined anywhere; the preview is handled by the change listener in the script below.

- Add the following JavaScript code at the end of the body of the HTML content in create.ejs. This code checks the size of each image file and rejects files above 4 MB, since images beyond this size are not allowed in the Custom Vision service. The script also creates a preview of the images you upload.

      <script>
        const fileInput = document.getElementById('image');
        const images = document.getElementById('image-showcase');
        const totalImages = document.getElementById('total-images');

        // Listen to the change event on the <input> element
        fileInput.addEventListener('change', (event) => {
          // Get the selected image files
          const imageFiles = event.target.files;
          totalImages.innerText = imageFiles.length;
          images.innerHTML = '';

          if (imageFiles.length > 0) {
            // Loop through all the selected images
            for (const imageFile of imageFiles) {
              // Check the file size (4MB limit)
              const maxSize = 4 * 1024 * 1024; // 4MB in bytes
              if (imageFile.size > maxSize) {
                images.innerHTML = `<center><p style="color: rgb(204, 58, 58);">${imageFile.name} exceeds the 4MB size limit. Please select a smaller file.</p></center>`;
                continue; // Skip this file and continue with the next one
              }

              const reader = new FileReader();
              // Convert each image file to a string
              reader.readAsDataURL(imageFile);
              reader.addEventListener('load', () => {
                // Create a new <img> element and add it to the DOM
                images.innerHTML += `
                  <div class="image_container">
                    <img src='${reader.result}'>
                    <span class='image_name'>${imageFile.name}</span>
                  </div>
                `;
              });
            }
          } else {
            images.innerHTML = '';
          }
        });
      </script>

- In classify.ejs, add the following code. It creates a preview of the image you upload, sends the image to the Custom Vision model for classification, and then shows the result.

      <!DOCTYPE html>
      <html lang="en">
      <head>
        <meta charset="UTF-8">
        <meta name="viewport" content="width=device-width, initial-scale=1.0">
        <title>Image Discover</title>
        <link rel="preconnect" href="https://fonts.googleapis.com">
        <link rel="preconnect" href="https://fonts.gstatic.com" crossorigin>
        <link href="https://fonts.googleapis.com/css2?family=Montserrat:ital,wght@0,100;0,200;0,300;0,400;0,500;0,600;0,700;0,800;0,900;1,100;1,200;1,300;1,400;1,500;1,600;1,700;1,800;1,900&display=swap" rel="stylesheet">
        <link rel="stylesheet" href="./css/style.css">
      </head>
      <body>
        <div class="container">
          <h1>Image Discover - Classify Image</h1>
          <br>
          <center><div class="info normal"><p>To classify an image, please select 1 (one) image only, using the file upload button.</p></div></center>
          <br> <br>
          <center><p><span id="total-images">0</span> File(s) Selected</p></center>
          <div id="image-showcase"></div>
          <div class="result">
            <b>
              <p>
                <% if (pred_results && pred_results.length > 0) { %>
                  <% if (pred_val === 'Passed') { %>
                    <p style="color: rgb(34, 116, 68);"><%= pred_results %></p>
                    <center><p style="color: rgb(34, 116, 68);"><%= pred_val %></p></center>
                  <% } else { %>
                    <%= pred_results %>
                    <center>Failed</center>
                  <% } %>
                <% } else { %>
                <% } %>
              </p>
            </b>
          </div>
          <br> <br>
          <div class="info normal">
            <h1>Projects List</h1>
            <ul>
              <% projects.forEach(project => { %>
                <li><%= project.projId %> - <%= project.projName %></li>
              <% }); %>
            </ul>
          </div>
          <br> <br>
          <form action="/classify-image" method="POST" enctype="multipart/form-data">
            <div class="field">
              <input type="text" name="projId" id="projId" placeholder="enter project id here">
            </div>
            <div class="field">
              <input type="file" name="image" id="image" accept=".png, .jpg, .bmp, .jpeg">
            </div>
            <div class="field">
              <button type="submit">Classify Image</button>
            </div>
          </form>
        </div>
        <script>
          const fileInput = document.getElementById('image');
          const images = document.getElementById('image-showcase');
          const totalImages = document.getElementById('total-images');

          // Listen to the change event on the <input> element
          fileInput.addEventListener('change', (event) => {
            // Get the selected image files
            const imageFiles = event.target.files;
            totalImages.innerText = imageFiles.length;
            images.innerHTML = '';

            if (imageFiles.length > 0) {
              // Loop through all the selected images
              for (const imageFile of imageFiles) {
                // Check the file size (4MB limit)
                const maxSize = 4 * 1024 * 1024; // 4MB in bytes
                if (imageFile.size > maxSize) {
                  images.innerHTML = `<center><p style="color: rgb(204, 58, 58);">${imageFile.name} exceeds the 4MB size limit. Please select a smaller file.</p></center>`;
                  continue; // Skip this file and continue with the next one
                }

                const reader = new FileReader();
                // Convert each image file to a string
                reader.readAsDataURL(imageFile);
                reader.addEventListener('load', () => {
                  // Create a new <img> element and add it to the DOM
                  images.innerHTML += `
                    <div class="image_container">
                      <img src='${reader.result}'>
                      <span class='image_name'>${imageFile.name}</span>
                    </div>
                  `;
                });
              }
            } else {
              images.innerHTML = '';
            }
          });
        </script>
      </body>
      </html>

As in create.ejs, the file input has no inline onchange attribute, since no createPreview function exists; the change listener in the script builds the preview.

- In the public directory, create two sub-directories, css and images. In images, add the Microsoft Azure logo and any other images you want to use for classification; these are provided in this project’s GitHub repository.

- In css, create a style.css file and add the code from the style.css file in the GitHub repository.
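The 4 MB check in the page scripts runs only in the browser, so a request sent by other means would skip it. A matching check on the server is one way to close that gap. The sketch below is an illustration rather than part of the original project: splitBySize is a hypothetical helper, and it relies only on the size property (in bytes) that multer sets on each uploaded file object.

```javascript
// 4 MB, the same limit the browser script enforces
const MAX_IMAGE_BYTES = 4 * 1024 * 1024;

// Hypothetical helper: separates files that fit the limit from those that do not.
// Each file only needs a numeric `size` property in bytes, which multer's
// file objects (req.file, req.files) provide.
function splitBySize(files, maxBytes = MAX_IMAGE_BYTES) {
  const accepted = [];
  const rejected = [];
  for (const file of files) {
    (file.size <= maxBytes ? accepted : rejected).push(file);
  }
  return { accepted, rejected };
}
```

A route handler could call splitBySize(req.files) and upload only the accepted files. Alternatively, multer accepts a limits option with a fileSize value, which rejects oversized files during the upload itself.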

Finish the Application

At this point you will complete the app.js file with the endpoints that send POST requests to the Custom Vision service on Azure.

- Add the following code, which creates a project in the Custom Vision service and automatically trains a model. You are free to give your project any relevant name in the form; that name is used when the project is created. The code receives the form submission from create.ejs, creates a new project, saves its ID and name to id.json, trains the model, publishes the trained iteration, and finally redirects you to the image classification page.

      app.post('/create-train-project', upload.array('image', 10), async (req, res) => {
        try {
          const myNewProjectName = req.body.projName;
          const tag = req.body.projTag;
          const imageFiles = req.files;

          // Import id json
          const id_data = fs.readFileSync('id.json');
          console.log(`Name: ${myNewProjectName}\nTag: ${tag}`);

          // Ensure that project name, tag, and at least one image are provided
          if (!myNewProjectName || !tag || !imageFiles || imageFiles.length === 0) {
            return res.status(400).send('Project name, tag, and images are required.');
          }

          console.log('Creating project...');
          const project = await trainer.createProject(myNewProjectName);
          projectID = project.id;

          // After creating the project, save the ID to id.json
          const idsJSON = JSON.parse(id_data);
          idsJSON.projects.push({
            projId: projectID,
            projName: project.name,
          });
          fs.writeFileSync('id.json', JSON.stringify(idsJSON));

          // Add a tag for the pictures in the newly created project
          const tagObj = await trainer.createTag(projectID, tag);

          // Upload each image to the project with the specified tag
          console.log('Adding images...');
          for (const imageFile of imageFiles) {
            const imageData = fs.readFileSync(imageFile.path);
            await trainer.createImagesFromData(projectID, imageData, { tagIds: [tagObj.id] });
          }

          // Train the model
          console.log('Training initialized...');
          let trainingIteration = await trainer.trainProject(project.id);
          console.log('Training in progress...');

          // Poll once per second until training completes
          while (trainingIteration.status === 'Training') {
            console.log(`Training status: ${trainingIteration.status}`);
            await setTimeOutPromise(1000);
            trainingIteration = await trainer.getIteration(projectID, trainingIteration.id);
          }
          console.log(`Training status: ${trainingIteration.status}`);

          // Publish the iteration to the prediction endpoint
          await trainer.publishIteration(project.id, trainingIteration.id, publishIterationName, process.env.resourcePredictionID);

          res.redirect('/classify-image');
        } catch (e) {
          console.log('This error occurred: ', e);
          // Send a generic message instead of exposing the actual error
          res.status(500).send('An error occurred during project creation and training.');
        }
      });

- Add the model inference code to app.js. This code handles classification of uploaded images.

      app.post('/classify-image', upload.single('image'), async (req, res) => {
        try {
          // Check if a file was uploaded
          if (!req.file) {
            res.send('<p>Files Missing</p>');
            return;
          }
          if (!req.body.projId) {
            res.send('<p>Project Id Missing</p>');
            return;
          }

          // Read the uploaded image file
          const fileimageBuffer = fs.readFileSync(req.file.path);
          const results = await pred.classifyImage(req.body.projId, publishIterationName, fileimageBuffer);
          let pred_results = []
          let pred_val = 0

          // Show results
          console.log('Results:');
          results.predictions.forEach(predictedResult => {
            const percent = predictedResult.probability * 100.0;
            console.log(`\t ${predictedResult.tagName}: ${percent.toFixed(2)}%`);
            pred_results.push(`${predictedResult.tagName}: ${percent.toFixed(2)}%`)
            // Keep the highest probability as a number so the threshold check is numeric
            pred_val = Math.max(pred_val, percent)
          });

          fs.readFile('id.json', 'utf8', (err, data) => {
            if (err) {
              console.error(err);
              return res.status(500).send('Error reading JSON file');
            }
            const projectsData = JSON.parse(data);
            if (pred_val >= 50) {
              pred_val = 'Passed'
            }
            // Pass the projectsData to the EJS template
            res.render('classify', { projects: projectsData.projects, pred_results: pred_results, pred_val });
          });
        } catch (e) {
          console.error('Error:', e);
          res.status(500).send('Error processing the image.');
        }
      })

- Add id.json from the GitHub repository to the root folder.

- Create nodemon.json in the root folder and add the following code.

{ "ignore": ["id.json"] }

This causes nodemon to ignore id.json, so that saving changes to id.json does not restart the server unnecessarily.

- Test the application. In the Visual Studio Code terminal, type rs if you have already started the server with nodemon; otherwise run one of the following commands.

      $ npm run start
      $ npm run dev
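The pass or fail decision in the /classify-image route can also be isolated in a small function, which makes it easier to test without calling the service. The sketch below is one possible shape, not the original implementation: summarizePredictions is a hypothetical name, the 50 percent threshold mirrors the route, and the input is assumed to be an array of objects with tagName and probability fields, as read from results.predictions in the route. The sample values are made up for illustration.

```javascript
// Hypothetical helper: turns a predictions array into display strings and a
// pass/fail verdict based on the highest probability.
function summarizePredictions(predictions, thresholdPercent = 50) {
  const lines = [];
  let best = 0;
  for (const { tagName, probability } of predictions) {
    const percent = probability * 100;
    lines.push(`${tagName}: ${percent.toFixed(2)}%`);
    best = Math.max(best, percent);
  }
  return { lines, verdict: best >= thresholdPercent ? 'Passed' : 'Failed' };
}

// Example with made-up values
const summary = summarizePredictions([
  { tagName: 'orange', probability: 0.75 },
  { tagName: 'apple', probability: 0.25 },
]);
console.log(summary.lines.join('\n'));
console.log(summary.verdict);
```

Basing the verdict on the highest probability, rather than on whichever prediction happens to come last, keeps the result independent of the order in which the service returns tags.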

Add Project to a GitHub Repository

Before you deploy the project, you need to add it to a GitHub repository. This lets you track your project versions and enables GitHub workflows on Azure during deployments.

- Add a .gitignore file in the root directory with the following entries. Git ignores the directories you list here when you push to a remote repository. Consider also listing .env, since it holds your keys; if you do, add the same variables as application settings on your App Service app, where they are exposed to the app as environment variables.

/node_modules

/uploads

- Create a GitHub repository and copy the url.

- Create a git project locally.

- Create multiple branches with git.

- Finally, push your local repository to the GitHub remote repository that you created, using the following command.

$ git push origin <active branch name>

Deploy your Application to Azure App Service

This part of the tutorial shows you, step by step, how to deploy your Node.js application to Azure App Service from a GitHub repository.

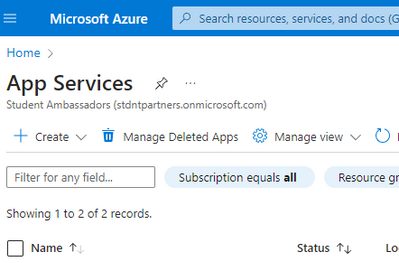

- On the Azure portal, search for “app services” and click on the result App Services.

- Click on Create.

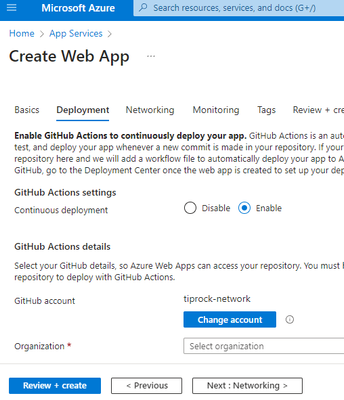

- Enter the required information in the fields. Select Code for Publish, Node 18 LTS as the runtime stack, and Linux as the operating system. You may leave the region as the default, and choose an existing resource group or create a new one. Click Deployment.

- Go to GitHub Actions settings and select Enable.

- If your GitHub account is not connected, sign in. Select the Organization, the project Repository, and the specific Branch. Click Review + create.

- When the deployment completes, click Go to resource.

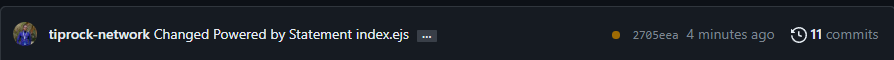

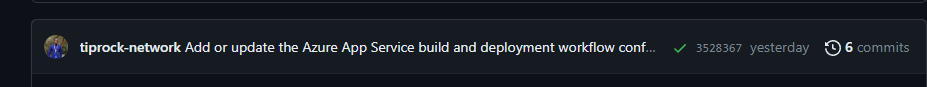

- In the GitHub repository branch you deployed, check periodically whether the amber dot has turned into a green tick.

- Once the green tick appears, your code has been deployed successfully.

- In the left pane, click Overview, then click the URL under Default domain to see your web app.

Note: If the deployment fails, the workflow run is marked with a red X. Open it to see the logs and find where the deployment failed.

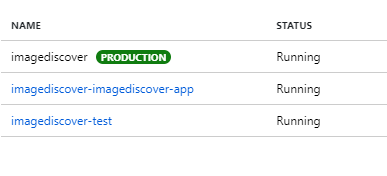

Add an Azure Deployment Slot

When your application is in production, you will not want to interrupt users. A deployment slot lets you deploy and test updates on a separate live instance and then swap it into production, which is how App Service supports Zero Downtime Deployment (ZDD).

In this part of the tutorial, we cover slot swapping to show the power of deployment slots, even when the slots use different Azure resources.

- Go to Deployment> Deployment slots> Add Slot.

- Enter a Name for the slot, which becomes part of its URL, then choose to clone settings from the production slot, in this case imagediscover.

- Click on the new slot, for example, imagediscover-test.

- Go to Deployment Center > Source > GitHub. Choose your repository and branch, then click Save.

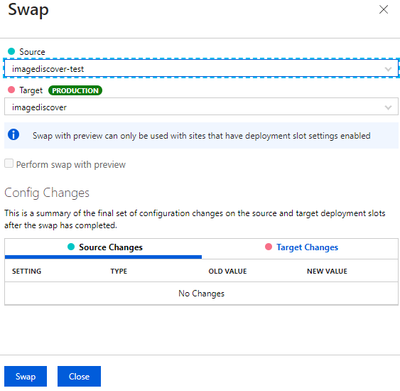

- Go to Deployment> Deployment slots> Swap.

- For Source, select the slot you want to swap in; for Target, select the slot you want to replace, as shown in the image below. Click Swap, then click Close after the swap is done. I encourage you to keep using the app during the swap; this acts as a proof of concept.

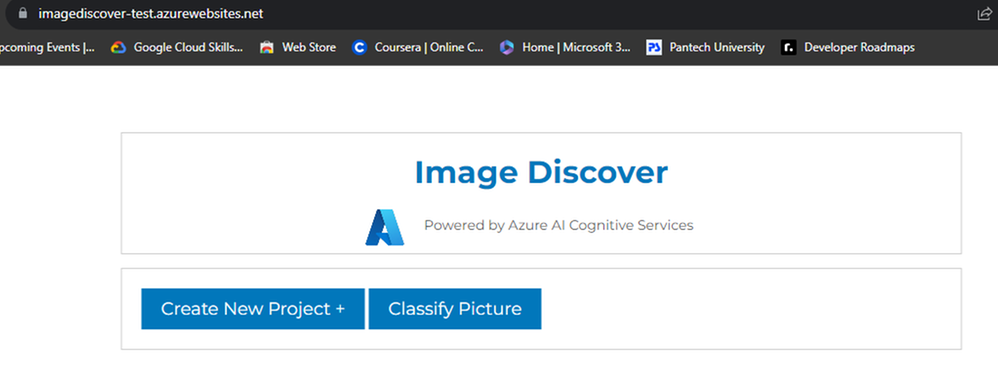

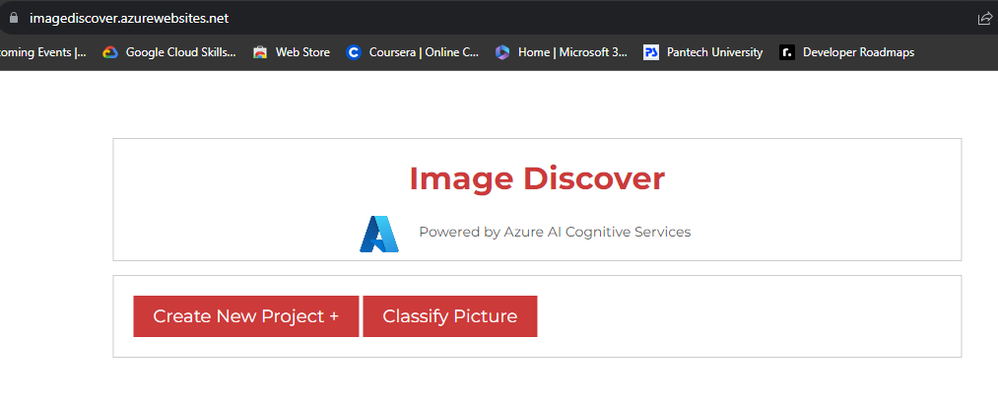

- Check your production URL and compare it with the deployment slot URL. As the screenshots show, the two slots have been swapped in real time. Notice the change in the URLs.
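Comparing the two URLs by eye works when the builds look different. When they do not, a small diagnostic payload can show which build each URL is serving. The sketch below is an optional addition, not part of the original app: buildInfo and the /version route mentioned afterwards are hypothetical names.

```javascript
// Hypothetical helper: builds the payload for a diagnostic route.
// `version` would typically come from package.json; `startedAt` records when
// this instance of the app booted.
function buildInfo(version, startedAt = new Date()) {
  return { version, startedAt: startedAt.toISOString() };
}

// Example
console.log(buildInfo('1.0.1'));
```

In app.js this could be wired up as app.get('/version', (req, res) => res.json(info)), where info is computed once at startup from buildInfo(require('./package.json').version). If you bump the version in the branch deployed to the slot, the slot URL reports the new version before the swap and the production URL reports it afterwards.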

Learn More

Check out the documentation on Azure App Service.

Automate your workflow with GitHub Actions.

Learn how to do the same in other languages: Create an image classification project with the Custom Vision client library or REST API.

Build an object detector with the Custom Vision website.