This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Summary

Calculating distinct counts on massive distributed datasets is not trivial.

Kusto (aka Azure Data Explorer/KQL database in Fabric) dcount and dcountif functions use a special algorithm to return an estimate of distinct counts

The new functions count_distinct and count_distinctif were recently added to calculate exact distinct counts. These two functions are much more expensive than the original ones.

We added a setting in Power BI to control the level of accuracy of dcount.

The setting also allows calling count_distinct when absolute accuracy is needed.

When is dcount used?

The dcount function is invoked by the Kusto connector when the DISTINCTCOUNT Dax function is used in a measure, or a distinct count aggregation is used in a visual.

Until the new setting was added the dcount function used the default value for the second parameter which is 1.

This parameter controls the level of accuracy of the calculated distinct count.

The allowed values for the settings are 0-4, the higher this value the higher the accuracy and the cost.

How does the new setting work?

Starting from the October version of Power BI desktop, you can add a setting to the source statement in a query:

= AzureDataExplorer.Contents("help", null, null, [DcountAccuracyLevel=1])

The values of the DcountAccuracyLevel are directly inserted as the second parameter of the dcount function.

The special value of -1 is translated to the use of count_distinct.

Example

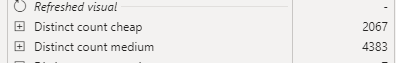

In the attached file you can see three queries using the same table.

Each query uses a different value of the setting 0,4 and -1.

The queries limit the number of rows to 100 Million.

These are the execution times as measured by the performance analyzer of three distinctcount measures from the same column in each of the three tables.

You can see that the medium version using the value 4 is much closer to the exact value which appears in the premium version using count_distinct.

Notice the difference between the execution times of each measure.

The differences are not linear.

count_distinct will return an error if used on a column with a very high # of distinct values.

Here are the values calculated against the entire table (1.5 Billion rows).

I did not attempt count_distinct because it returns an error.

The costs when refreshing both visuals are

Conclusion

You can choose between more or less accurate distinct counts with different cost for each level.

Be careful with the use of distinct_count when the table returns many millions of rows after applying all filters.