This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Today we want to write about a very interesting case that our team Prathibha Vemulapalli, Charl Roux, and I worked this very week.

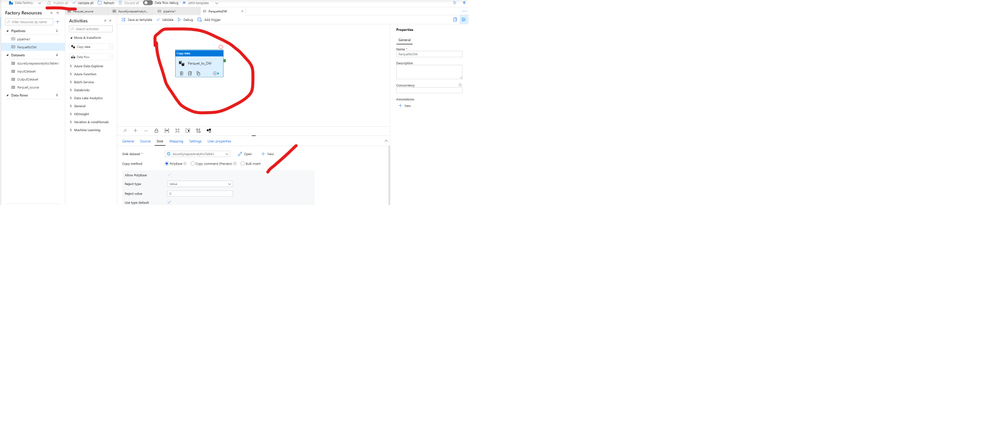

A parquet file was created with more than 100 columns to be imported on the AzureDW using Azure Data Factory. The file had diverse datatypes. So, before I go more deeply on the error and the troubleshooting process. Let me share step by step of the environment.

Put in a simple way. This environment has a parquet file as source, SQL DW as destination running on ADF.

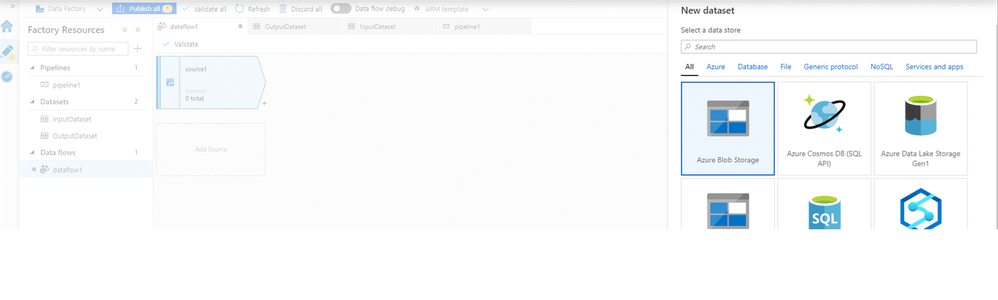

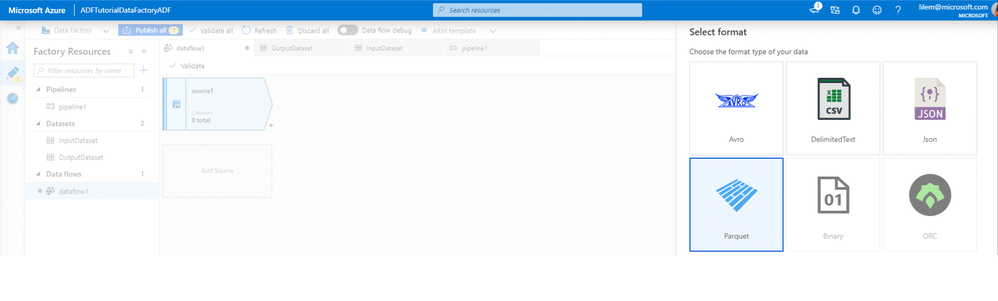

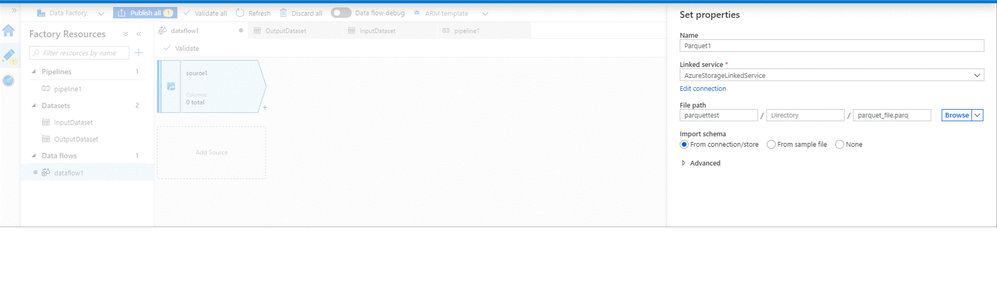

As figure 1,2 and 3 shows:

Figure 1: Define Blob Storage

Figure 2: Define Parquet file

Figure 3: Define Source file

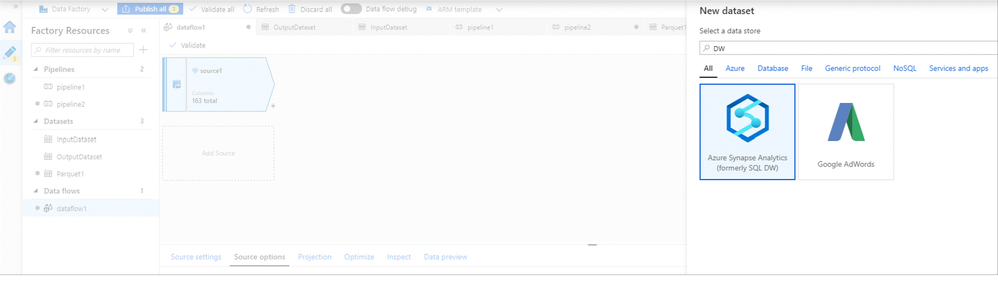

The destination is SQL DW as figure 4 shows:

Figure 4: Define data destination

Once the table was defined on the SQLDW connector. A pipeline must be created with the source (parquet), Sink (SQLDW) as figure 5 shows:

Figure 5: pipeline

Publish all changes, rename the pipeline to a suitable name.

More details here about data factory you can find here:https://docs.microsoft.com/en-us/azure/data-factory/quickstart-create-data-factory-portal

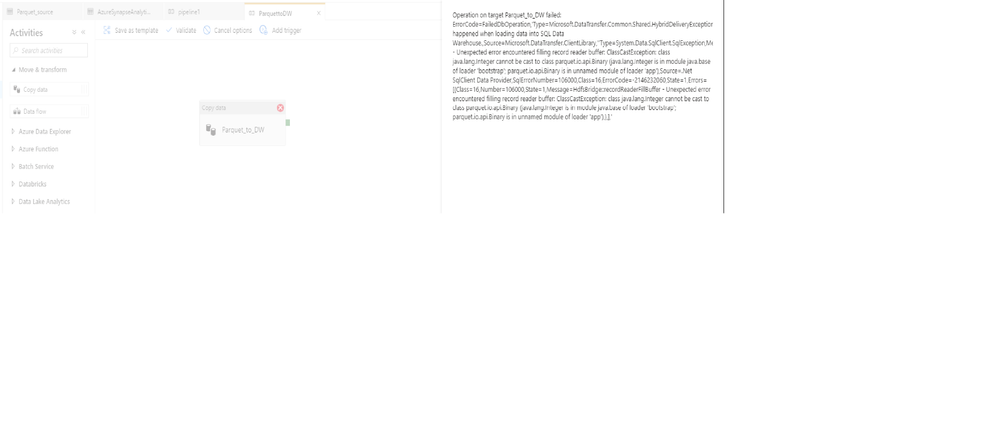

Our environment is ready to debug. Once I run the pipeline ( debug option) it will hit the condition I mentioned before:

Figure 6: ADF Cast error

The table was defined with data types like Int, DateTime, nVarchar , Float, and Real. More than 100 rows and the error was::

“Error happened when loading data into SQL Data Warehouse.,Source=Microsoft.DataTransfer.ClientLibrary,''Type=System.Data.SqlClient.SqlException,Message=HdfsBridge::recordReaderFillBuffer - Unexpected error encountered filling record reader buffer: ClassCastException: class java.lang.Integer cannot be cast to class parquet.io.api.Binary (java.lang.Integer is in module java.base of loader 'bootstrap'; parquet.io.api.Binary is in unnamed module of loader 'app'),Source=.Net SqlClient Data Provider,SqlErrorNumber=106000,Class=16,ErrorCode=-2146232060,State=1,Errors=[{Class=16,Number=106000,State=1,Message=HdfsBridge::recordReaderFillBuffer - Unexpected error encountered filling record reader buffer"

Discussing in your team this error was spotted: ClassCastException: class java.lang.Integer cannot be cast to class parquet.io.api.Binary

But which one from the 100 columns?

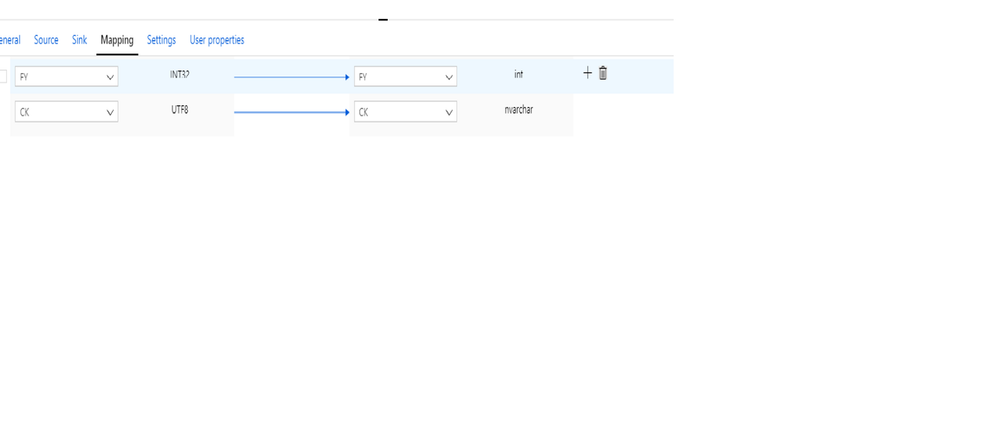

Once your main table accepts null values you can test by excluding the columns from a certain datatype. For example, try to exclude all the int columns from your transformation see if it works and so on so forth until you find the on to blame, as figure 7 shows:

Figure 7: Delete column to be imported

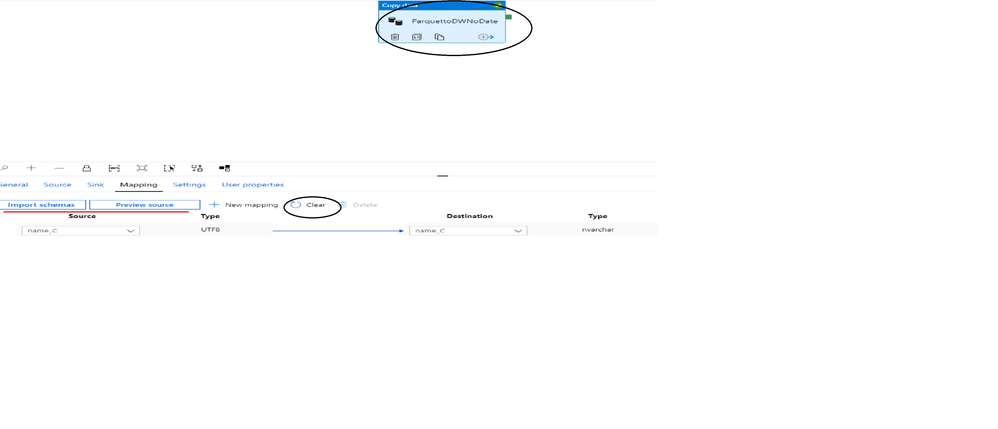

Note in ADF, once you click on the transformation you can check the schema and also preview the source data. If you changed a datatype you can clear to refresh and import the schema again as figure8 shows:

Figure 8: ADF Metadata

In our case, the suitable solution found by the customer was recreated the parquet file using INT96 instead of INT32 which raised the error mentioned on date columns.

Once you spot this error, you will need to review the data types on the table and work on it.

That was great CSS teamwork

Liliam C Leme