This post has been republished via RSS; it originally appeared at: Storage at Microsoft articles.

First published on TECHNET on May 11, 2016

Hello, Claus here again. Dan and I recently received some Samsung PM1725 NVMe devices. These have 8 PCIe lanes, so we thought we would put them in a system with 100Gbps network adapters and see how fast we could make this thing go.

We used a 4-node Dell R730XD configuration attached to a 32-port Arista DCS-7060CX-32S 100Gb switch running EOS version 4.15.3FX-7060X.1. Each node was equipped with the following hardware:

- 2x Xeon E5-2660v3 2.6GHz (10c20t)

- 256GB DRAM (16x 16GB DDR4 2133 MHz DIMM)

- 4x Samsung PM1725 3.2TB NVMe SSD (PCIe 3.0 x8 AIC)

- Dell HBA330

  - 4x Intel S3710 800GB SATA SSD

  - 12x Seagate 4TB Enterprise Capacity 3.5” SATA HDD

- 2x Mellanox ConnectX-4 100Gb (Dual Port 100Gb PCIe 3.0 x16)

  - Mellanox FW v. 12.14.2036

  - Mellanox ConnectX-4 Driver v. 1.35.14894

  - Device PSID MT_2150110033

  - Single port connected / adapter
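To put the hardware list in perspective, here is a rough back-of-envelope sketch of the raw link ceilings per node. It uses only the PCIe 3.0 signaling rate (8 GT/s per lane with 128b/130b encoding) and the Ethernet line rate, so it ignores protocol overhead and says nothing about what the devices can actually sustain. The function and constant names are ours, for illustration only.

```python
# Raw link-rate ceilings per node. These are upper bounds on the links,
# not measured or rated device throughput.

PCIE3_TRANSFERS_PER_LANE = 8e9   # PCIe 3.0: 8 GT/s per lane
PCIE3_ENCODING = 128 / 130       # 128b/130b line encoding


def pcie3_bytes_per_sec(lanes: int) -> float:
    """Raw PCIe 3.0 bandwidth in bytes/s for a link with the given lane count."""
    return lanes * PCIE3_TRANSFERS_PER_LANE * PCIE3_ENCODING / 8


def ethernet_bytes_per_sec(gbps: float) -> float:
    """Ethernet line rate in bytes/s for a port of the given speed in Gb/s."""
    return gbps * 1e9 / 8


NVME_PER_NODE = 4              # 4x PM1725 per node
NVME_LANES = 8                 # PCIe 3.0 x8 add-in cards
CONNECTED_PORTS_PER_NODE = 2   # 2 adapters, a single port connected on each

nvme_link = pcie3_bytes_per_sec(NVME_LANES)
nvme_node = nvme_link * NVME_PER_NODE
nic_node = ethernet_bytes_per_sec(100) * CONNECTED_PORTS_PER_NODE

print(f"One x8 NVMe link:      {nvme_link / 1e9:.2f} GB/s")
print(f"NVMe links per node:   {nvme_node / 1e9:.2f} GB/s")
print(f"Network ports per node: {nic_node / 1e9:.2f} GB/s")
```

On these numbers, each x8 link tops out just under 8 GB/s, and the two connected 100Gb ports give a node 25 GB/s of raw network bandwidth.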

Using VMFleet, we stood up 20 virtual machines per node, for a total of 80 virtual machines. Each virtual machine was configured with 1 vCPU. We then used VMFleet to run DISKSPD in each of the 80 virtual machines with 1 thread, issuing 512KiB sequential reads with 4 outstanding IOs.
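For readers who want to try a similar access pattern by hand, the sketch below assembles a DISKSPD command line that matches the parameters described above. This is not the command VMFleet generated for our run; the target path, the duration, and the helper name are hypothetical, and we assume the test file already exists in the virtual machine.

```python
# A minimal sketch that builds a DISKSPD argument list for the workload
# described in the post. Path, duration, and function name are assumptions.

def diskspd_args(target: str,
                 block_kib: int = 512,
                 threads: int = 1,
                 outstanding: int = 4,
                 write_pct: int = 0,
                 duration_s: int = 60) -> list[str]:
    """Return a DISKSPD command line as a list of arguments."""
    return [
        "diskspd.exe",
        f"-b{block_kib}K",    # block size: 512KiB
        f"-t{threads}",       # threads per target
        f"-o{outstanding}",   # outstanding IOs per thread per target
        f"-w{write_pct}",     # write percentage; 0 means 100% read
        f"-d{duration_s}",    # run time in seconds (assumed value)
        # No -r flag: DISKSPD issues sequential IO unless random is requested.
        target,
    ]


# Hypothetical test file inside the virtual machine.
print(" ".join(diskspd_args(r"C:\run\testfile.dat")))
```

Passing the arguments as a list keeps the sketch usable with `subprocess.run` on a Windows guest without any shell quoting concerns.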

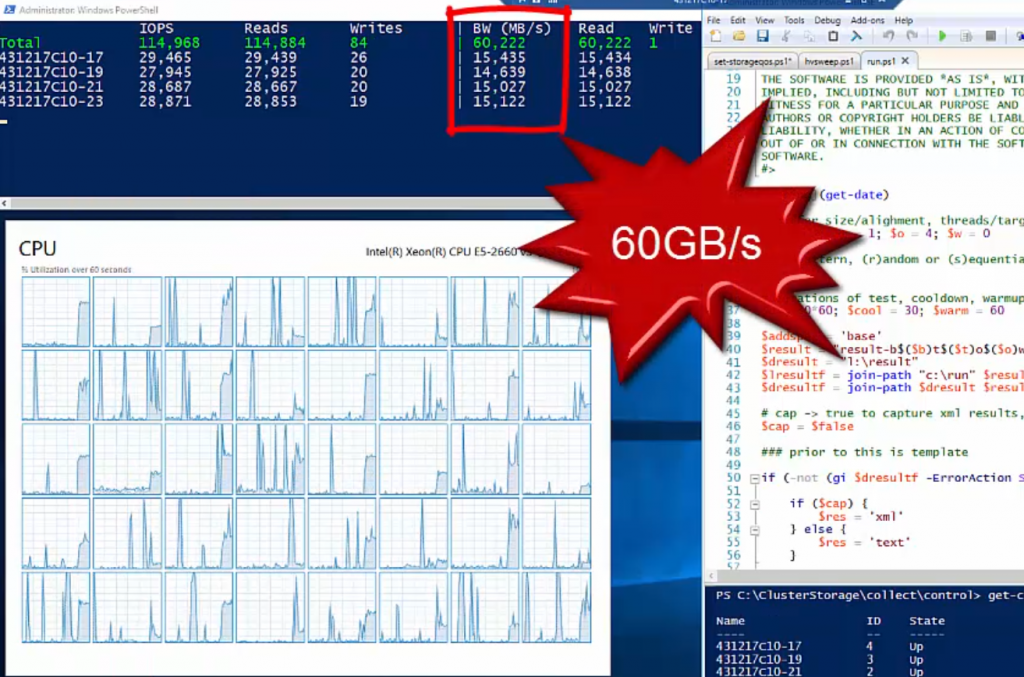

As you can see from the above screenshot, we were able to hit over 60GB/s in aggregate throughput into the virtual machines. For comparison, the total size of English Wikipedia article text is 11.5GiB compressed, so at this rate we could read all of it about 5 times per second.

On average, each virtual machine saw ~750MB/s of throughput, which is about one CD per second.
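The comparisons above are simple divisions, sketched here for anyone who wants to check them. We treat "over 60GB/s" as exactly 60 GB/s (decimal), so the results are lower bounds, and we assume a 700 MiB CD, a capacity the post itself does not state.

```python
# Arithmetic behind the throughput comparisons. The 60 GB/s figure is a
# lower bound taken from the post; the CD capacity is our assumption.

AGGREGATE_BYTES_PER_SEC = 60e9        # "over 60GB/s" aggregate
VM_COUNT = 80                         # 20 virtual machines x 4 nodes
NODE_COUNT = 4
WIKIPEDIA_BYTES = 11.5 * 2**30        # 11.5GiB compressed article text
CD_BYTES = 700 * 2**20                # assumed 700 MiB CD

per_vm = AGGREGATE_BYTES_PER_SEC / VM_COUNT
per_node = AGGREGATE_BYTES_PER_SEC / NODE_COUNT
wikipedia_reads_per_sec = AGGREGATE_BYTES_PER_SEC / WIKIPEDIA_BYTES
cds_per_sec_per_vm = per_vm / CD_BYTES

print(f"Per virtual machine: {per_vm / 1e6:.0f} MB/s")
print(f"Per node:            {per_node / 1e9:.0f} GB/s")
print(f"Wikipedia reads/s:   {wikipedia_reads_per_sec:.2f}")
print(f"CDs/s per VM:        {cds_per_sec_per_vm:.2f}")
```

The per-node figure works out to 15 GB/s, or 120 Gb/s of data delivered to the virtual machines on each node.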

You can find a recorded video of the performance run here:

We often speak about performance in IOPS, which is important to many workloads. For other workloads, such as big data and data warehousing, throughput can be an important metric. We hope that we demonstrated the technical capabilities of Storage Spaces Direct when using high-throughput hardware like the Mellanox 100Gbps NICs, the Samsung NVMe devices with 8 PCIe lanes, and the Arista 100Gbps network switch.

Let us know what you think.

Dan & Claus