This post has been republished via RSS; it originally appeared at: Windows Blog.

Since its initial release, Windows ML has powered numerous machine learning (ML) experiences on Windows. Windows ML delivers reliable, high-performance results across the breadth of Windows hardware and is designed to make ML deployment easier, so developers can focus on building innovative applications.

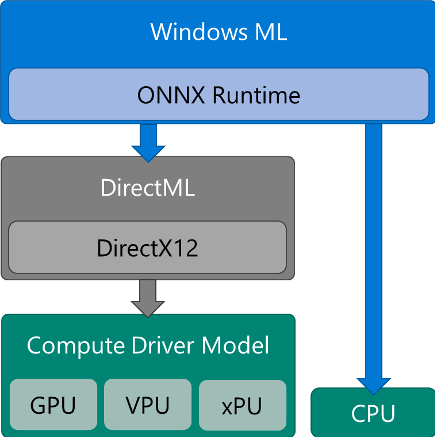

Windows ML is built upon ONNX Runtime to provide a simple, model-based, WinRT API optimized for Windows developers. This API enables you to take your ONNX model and seamlessly integrate it into your application to power ML experiences. Layered below the ONNX Runtime is the DirectML API for cross-vendor hardware acceleration. DirectML is part of the DirectX family and provides full control for real-time, performance-critical scenarios.

This end-to-end stack lets developers run inference on any Windows device, regardless of the machine's hardware configuration, all from a single, compatible codebase.

Figure 1 – The Windows AI Platform stack

Windows ML is used in a variety of real-world application scenarios. The Windows Photos app uses it to help organize your photo collection for an easier and richer browsing experience. The Windows Ink stack uses Windows ML to analyze your handwriting, converting ink strokes into text, shapes, lists and more. Adobe Premiere Pro offers a feature that crops your video to the aspect ratio of your choice while preserving the important action in each frame.

With the next release of Windows 10, we are continuing to build on this momentum and expanding support for more exciting and unique experiences. The interest and engagement from the community provided valuable feedback that allowed us to focus on what our customers need most. Today, we are pleased to share some of that feedback and how we are acting on it.

Bringing Windows ML and DirectML to More Places

Today, Windows ML is fully supported as a built-in Windows component on Windows 10 version 1809 (October 2018 Update) and newer. Developers can use the corresponding Windows Software Development Kit (SDK) and immediately begin using Windows ML in their applications. For developers who want to continue using this built-in version, we will keep updating and improving Windows ML, delivering the features and performance you need with each new Windows release.

A common piece of feedback we've heard is that developers want to ship products and applications that offer feature parity to all of their customers. In other words, developers want to use Windows ML in applications that target older versions of Windows, not just the most recent one. To support this, we are making Windows ML available as a stand-alone package that can be shipped with your application. This redistributable path enables Windows ML CPU inference on Windows 8.1 and newer, and GPU hardware acceleration on Windows 10 version 1709 and newer.

Going forward, each new update of Windows ML will have a corresponding redistributable package on GitHub, with matching new features and optimizations. Whichever option developers choose, they will receive an official Windows offering that is extensively tested, guaranteeing reliability and high performance.
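To make the support matrix described in this section concrete, here is a minimal sketch, assuming you already have the OS major, minor, and build numbers (how you query them is out of scope). The function name `windows_ml_options` and the option labels are hypothetical, not part of any Windows ML API. The thresholds are the public build numbers for Windows 10 version 1709 (16299) and version 1809 (17763); Windows 8.1 reports version 6.3.

```python
from __future__ import annotations

from typing import NamedTuple

# Public Windows 10 build numbers for the releases named in the post.
BUILD_1709 = 16299  # Windows 10 version 1709
BUILD_1809 = 17763  # Windows 10 version 1809 (October 2018 Update)


class WindowsVersion(NamedTuple):
    major: int
    minor: int
    build: int


def windows_ml_options(v: WindowsVersion) -> list[str]:
    """Hypothetical helper: list the Windows ML deployment paths the post describes for v."""
    options: list[str] = []
    win10_or_later = v.major >= 10
    # Built-in Windows ML component: Windows 10 version 1809 and newer.
    if win10_or_later and v.build >= BUILD_1809:
        options.append("built-in")
    # Redistributable package with GPU acceleration: Windows 10 version 1709 and newer.
    if win10_or_later and v.build >= BUILD_1709:
        options.append("redist-gpu")
    # Redistributable package, CPU inference only: Windows 8.1 (version 6.3) and newer.
    if (v.major, v.minor) >= (6, 3):
        options.append("redist-cpu")
    return options


if __name__ == "__main__":
    print(windows_ml_options(WindowsVersion(6, 3, 9600)))    # Windows 8.1
    print(windows_ml_options(WindowsVersion(10, 0, 16299)))  # Windows 10 1709
    print(windows_ml_options(WindowsVersion(10, 0, 17763)))  # Windows 10 1809
```

The checks run from most to least capable, so an app could prefer the built-in component when it is present and fall back to the redistributable otherwise; that preference order is an assumption of this sketch rather than guidance from the post.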

Windows ML, ONNX Runtime, and DirectML

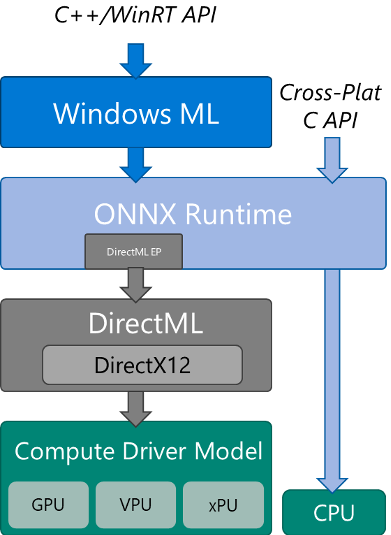

In addition to bringing Windows ML support to more versions of Windows, we are also unifying our approach with Windows ML, ONNX Runtime, and DirectML. At the core of this stack, ONNX Runtime is designed to be a cross-platform inference engine. With Windows ML and DirectML, we build around this runtime to offer a rich set of features and hardware scaling, designed for Windows and the diverse hardware ecosystem.

We understand the complexities developers face in building applications that offer a great customer experience, while also reaching their wide customer base. In order to provide developers with the right flexibility, we are bringing the Windows ML API and a DirectML execution provider to the ONNX Runtime GitHub project. Developers can now choose the API set that works best for their application scenarios and still benefit from DirectML’s high-performance and consistent hardware acceleration across the breadth of devices supported in the Windows ecosystem.
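The DirectML execution provider fits into ONNX Runtime's model of registering execution providers in priority order, with CPU execution as the fallback. The sketch below is a simplified, hypothetical model of that idea, not ONNX Runtime's actual partitioning code: each node goes to the first provider whose supported-operator set contains the node's op type, and anything left over runs on the CPU. The provider names match ONNX Runtime's identifiers (`DmlExecutionProvider`, `CPUExecutionProvider`); the `assign_nodes` function, the example graph, and the operator sets (including `MyCustomOp`) are illustrative assumptions.

```python
from __future__ import annotations

# ONNX Runtime's identifiers for the DirectML and CPU execution providers.
DIRECTML = "DmlExecutionProvider"
CPU = "CPUExecutionProvider"


def assign_nodes(
    nodes: list[tuple[str, str]],
    providers: list[tuple[str, set[str]]],
) -> dict[str, str]:
    """Hypothetical, simplified model of priority-ordered provider assignment.

    nodes:     (node_name, op_type) pairs from a model graph.
    providers: (provider_name, supported_op_types) in priority order.
    Returns a mapping of node_name -> provider_name.
    """
    assignment: dict[str, str] = {}
    for node_name, op_type in nodes:
        for provider_name, supported_ops in providers:
            if op_type in supported_ops:
                assignment[node_name] = provider_name
                break
        else:
            # No accelerated provider claimed this node, so it runs on the CPU.
            assignment[node_name] = CPU
    return assignment


if __name__ == "__main__":
    graph = [("conv1", "Conv"), ("relu1", "Relu"), ("post", "MyCustomOp")]
    # Device with a DirectML-capable GPU (operator set is illustrative only).
    print(assign_nodes(graph, [(DIRECTML, {"Conv", "Relu"})]))
    # Device without GPU acceleration: the same code path runs everything on the CPU.
    print(assign_nodes(graph, []))
```

The priority list captures the point made above: the application ships a single code path, and the hardware it lands on decides how much of the model is accelerated. Real ONNX Runtime partitions the graph into subgraphs based on each provider's reported capabilities, which this per-node loop only approximates.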

On GitHub today, the Windows ML and DirectML preview is available as source, with instructions and samples showing how to build it, along with a prebuilt NuGet package for CPU deployments.

Are you a Windows app developer who needs a friendly WinRT API that integrates easily with your other application code and is optimized for Windows devices? Windows ML is the right choice. Do you need to build an application with a single code path that also works on non-Windows devices? The ONNX Runtime cross-platform C API can provide that.

Figure 2 – Newly layered Windows AI and ONNX Runtime

Developers who already use the ONNX Runtime C API and want to try the DirectML execution provider (EP) preview can follow these steps.

Experience it for yourself

We are already making great progress on these new features.

You can get access to the preview of Windows ML and DirectML for ONNX Runtime here. We invite you to join us on GitHub and to send feedback to AskWindowsML@microsoft.com.

The official Windows ML redistributable package will be available on NuGet in May 2020.

As always, we greatly appreciate all the support from the developer community. We’ll continue to share updates as we make more progress with these upcoming features.

The post Extending the Reach of Windows ML and DirectML appeared first on Windows Blog.