This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Supervised machine learning (ML) models need labeled data, but most raw data is collected without labels. So the first step before building an ML model is to have domain experts label the raw data. To do so, we surveyed several annotation tools and came across Doccano, an easy-to-use tool for collaborative text annotation. The latest version of Doccano supports annotation for text classification, sequence labeling (Named Entity Recognition, NER), and sequence-to-sequence (machine translation, text summarization) use cases.

Getting Started

To get started, Doccano needs to be hosted somewhere all users can access it. This blog walks through the steps needed to get started with Doccano on Azure and collaboratively annotate text data for Natural Language Processing (NLP) tasks. For instructions on the one-click deployment to Azure, visit the NLP-recipes repository.

Assuming you have successfully set up the Doccano instance on Azure as an admin, here are some tips on getting started.

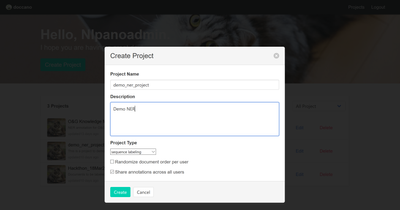

Using the admin credentials, log in and create a project with the ‘Create Project’ option. For illustration, we first create a new demo sequence labeling project by selecting 'Project Type' = 'sequence labeling'. Options to share annotations across users and to randomize the document order per user are also available.

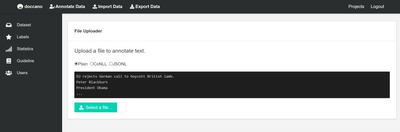

Once the project has been created, the admin should see it in the list of projects on their Dashboard. Within the newly created project, upload your documents for annotation and create labels. Importing data into Doccano is easy; it currently supports plain text, CoNLL, and JSONL formats, as shown below.

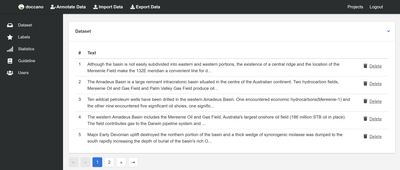

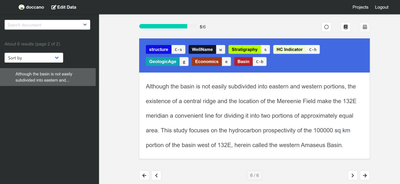
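If the raw documents are already available in Python, a JSONL file for upload can be produced with the standard library alone. The sketch below assumes Doccano's JSONL import reads each document's body from a `text` key, one JSON object per line; check the format example in your version's import dialog. The function name `write_jsonl`, the file name `documents.jsonl`, and the sample sentences are illustrative.

```python
import json


def write_jsonl(docs, path):
    """Write each document as one JSON object per line (JSONL)."""
    with open(path, "w", encoding="utf-8") as f:
        for doc in docs:
            # ensure_ascii=False keeps non-ASCII characters readable in the file
            f.write(json.dumps({"text": doc}, ensure_ascii=False) + "\n")


if __name__ == "__main__":
    # Illustrative documents; replace with your own raw text.
    docs = [
        "Well A-12 was drilled in the Delaware Basin.",
        "Production rates were reported for the second quarter.",
    ]
    write_jsonl(docs, "documents.jsonl")
```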

Once the documents to be annotated are uploaded, the admin can view or edit the data and start labeling with the ‘Annotate Data’ option.

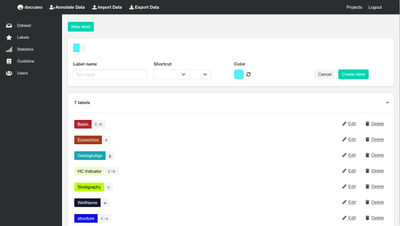

The admin also needs to create 'Labels' for annotation. Labels are color coded, and each can be given a shortcut key, such as the first letter of the entity to be tagged, as shown:

To annotate, the user selects a document that has no annotations yet, as shown below, highlights the text, and uses the listed shortcut key. For example, to tag an entity as a ‘WellName’, highlight the text to be annotated and then press the key ‘w’ or click on the entity ‘WellName’.
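When a project has many labels, first letters can collide. The helper below is a hypothetical sketch (not part of Doccano) for planning shortcut keys before creating labels: it tries each label's first letter, then its other letters, then any unused letter from a to z. The labels `Formation` and `Field` are illustrative.

```python
def assign_shortcuts(labels):
    """Map each label name to a unique single-letter shortcut key.

    Hypothetical planning helper: tries the label's own letters first
    (starting with the first letter), then any unused letter a-z.
    """
    used = set()
    keys = {}
    for label in labels:
        candidates = [c for c in label.lower() if c.isalpha()]
        candidates += [chr(c) for c in range(ord("a"), ord("z") + 1)]
        for c in candidates:
            if c not in used:
                used.add(c)
                keys[label] = c
                break
        else:
            raise ValueError(f"No free shortcut key for {label!r}")
    return keys


print(assign_shortcuts(["WellName", "Basin", "Formation", "Field"]))
```

Here `Field` falls back to `i` because `f` is already taken by `Formation`.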

Using the admin login credentials, you can set up user accounts for annotators that do not have permission to create or delete projects. Each annotator account then needs to be assigned a role in the projects where the admin has uploaded the documents for annotation.

After creating the user accounts, share the credentials with the annotators and have them log in so they can access the projects containing the documents to label.

Upon login, annotators can view only the projects mapped to them; they cannot create, edit, or delete projects, nor can they upload or download data, as shown below:

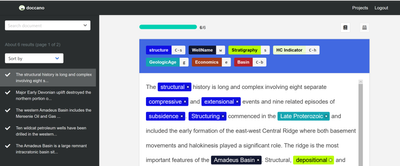
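The access rules above can be summarized as a small permission model. This is a hypothetical sketch of the behavior described in this post, not Doccano's implementation: the role names, action names, and the `assignments` mapping from (user, project) pairs to roles are all illustrative.

```python
from enum import Enum


class Role(Enum):
    ADMIN = "admin"
    ANNOTATOR = "annotator"


# Hypothetical permission table mirroring the access rules described above.
# Role and action names are illustrative, not Doccano's internal identifiers.
PERMISSIONS = {
    Role.ADMIN: {"create_project", "edit_project", "delete_project",
                 "upload_data", "download_data", "annotate"},
    Role.ANNOTATOR: {"annotate"},
}


def can(user, action, project, assignments):
    """Return True if `user` may perform `action` in `project`.

    `assignments` maps (user, project) pairs to a Role; a user with no
    assignment for a project cannot see or act on it at all.
    """
    role = assignments.get((user, project))
    return role is not None and action in PERMISSIONS[role]


assignments = {
    ("admin", "demo-ner"): Role.ADMIN,
    ("alice", "demo-ner"): Role.ANNOTATOR,
}
print(can("alice", "annotate", "demo-ner", assignments))
print(can("alice", "download_data", "demo-ner", assignments))
```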

The annotator can open their project and start annotating, using the keyboard shortcuts listed at the top of each page. For example, to tag an entity as a ‘Basin’, highlight the text to be annotated and then press ‘Ctrl+b’ or click on the entity ‘Basin’. This is how the documents look once annotated by a user.

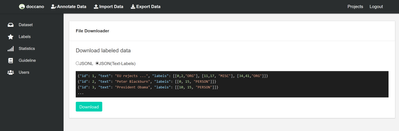

Once the domain experts have annotated the data, the admin can download the entire project with labels in JSON or JSONL format to build a machine learning model.

With a few lines of Python, as shown below, the JSONL file can be read into a Jupyter notebook and used for modeling.

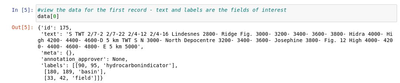
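For readers who want copyable code, here is a minimal sketch of reading a JSONL export with the standard `json` module; it is not a transcription of the screenshot. It parses one JSON object per line and skips blank lines. The file name `project_export.jsonl` is a placeholder.

```python
import json


def load_jsonl(path):
    """Read a JSONL export into a list of dicts, one per document."""
    records = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines, e.g. a trailing newline at end of file
                records.append(json.loads(line))
    return records


# Usage in a notebook cell ("project_export.jsonl" is a placeholder name):
# records = load_jsonl("project_export.jsonl")
# print(len(records), records[0].keys())
```

If pandas is already in use, `pandas.read_json(path, lines=True)` loads the same file directly into a DataFrame.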

Individual records can be reviewed as shown below:
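A quick way to review one record is to print each labeled span next to the text it covers. This sketch assumes sequence-labeling spans are exported as `[start, end, label]` triples with 0-based character offsets and an exclusive end, stored under a `labels` key; field names can differ between Doccano versions, so `labels_key` is a parameter. The sample record is illustrative.

```python
def show_entities(record, labels_key="labels"):
    """Return (label, covered text) pairs for one exported record.

    Assumes spans are stored as [start, end, label] with an exclusive end;
    pass a different labels_key if your export names the field differently.
    """
    text = record["text"]
    return [(label, text[start:end]) for start, end, label in record.get(labels_key, [])]


# Illustrative record in the assumed export layout.
record = {
    "text": "Well A-12 was drilled in the Delaware Basin.",
    "labels": [[5, 9, "WellName"], [29, 43, "Basin"]],
}
for label, span in show_entities(record):
    print(f"{label:10} {span}")
```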

Here is a sample Jupyter notebook for building a Named Entity Recognition (NER) model.

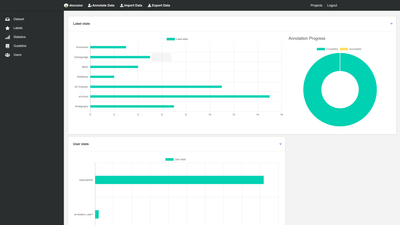
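Many NER training pipelines expect token-level tags rather than character offsets, so a common preprocessing step is converting the exported spans to BIO tags (B- for the first token of an entity, I- for the following tokens, O for everything else). This is a generic sketch, not the notebook's code: it assumes `[start, end, label]` spans with an exclusive end, and the regex tokenizer is a simplifying assumption (a real model would normally use its own tokenizer).

```python
import re

# Split into word tokens and single punctuation marks, keeping character offsets.
TOKEN_RE = re.compile(r"\w+|[^\w\s]")


def to_bio(text, spans):
    """Convert [start, end, label] character spans (exclusive end) to BIO tags."""
    tokens, tags = [], []
    for m in TOKEN_RE.finditer(text):
        tok_start, tok_end = m.start(), m.end()
        tag = "O"
        for start, end, label in spans:
            if tok_start < end and tok_end > start:  # token overlaps this span
                # The first token of a span gets B-, later tokens inside it get I-.
                tag = ("B-" if tok_start <= start else "I-") + label
                break
        tokens.append(m.group())
        tags.append(tag)
    return tokens, tags


text = "Well A-12 was drilled in the Delaware Basin."
spans = [[5, 9, "WellName"], [29, 43, "Basin"]]
for token, tag in zip(*to_bio(text, spans)):
    print(token, tag)
```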

The admin can also view label statistics, per-user annotation counts, and annotation progress, as shown below:
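Similar summaries can also be computed offline from an exported file, for example to track progress inside a notebook. This sketch counts entity mentions per label and the share of documents with at least one annotation. It assumes each record stores `[start, end, label]` spans under a `labels` key (version-dependent, hence the parameter); per-user counts are omitted because the user fields in an export vary by Doccano version. The sample records are illustrative.

```python
from collections import Counter


def label_stats(records, labels_key="labels"):
    """Summarize an export: mentions per label, annotated docs, total docs.

    Assumes each record stores spans as [start, end, label] under `labels_key`.
    """
    counts = Counter()
    annotated = 0
    for rec in records:
        spans = rec.get(labels_key, [])
        if spans:
            annotated += 1
        counts.update(label for _, _, label in spans)
    return counts, annotated, len(records)


# Illustrative records in the assumed export layout.
records = [
    {"text": "Well A-12 was drilled.", "labels": [[5, 9, "WellName"]]},
    {"text": "No entities here.", "labels": []},
]
counts, annotated, total = label_stats(records)
print(counts.most_common(), f"{annotated}/{total} documents annotated")
```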

Overall, based on our experience, Doccano is an easy-to-use tool for collaborative annotation.

References