This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Azure Stack Hub lights up IaaS capabilities in your datacenter. These range from foundational concepts such as enabling self-service, offering a marketplace of items, or enabling RBAC (all concepts we’ve explored in the Azure Stack IaaS series), all the way to enabling an Infrastructure as Code practice or building hybrid applications.

Regardless of your motivation to get started with Azure Stack Hub (regulatory, compliance, network requirements, backend systems), every customer's journey to the cloud is unique. We’ve talked about how the journey could start with existing servers, and we have captured some of the lessons learned and the questions that should be asked along the way (https://azure.microsoft.com/en-us/resources/migrate-to-azure-stack-hub-patterns-and-practices-checklists/).

Ideally, every cloud migration project would include all the scripts and templates required to have an Infrastructure as Code (IaC) approach. This would enable the management of infrastructure (networks, virtual machines, load balancers, and connection topology) in a descriptive model, using the same versioning features as DevOps teams use for source code. It would take advantage of idempotency and enable DevOps teams to test applications early in the development cycle in production-like environments.

In reality, though, most deployments on Azure Stack Hub start with small POCs, with the migration of that one VM (the I’ll-migrate-this-one-like-this-and-do-the-others-properly VM) whose process then becomes the migration process used for everything, or with multiple lift-and-shift migrations moved “as they are” in the hope of switching to Infrastructure as Code later.

While it is easier to adopt IaC at the start of a project, it’s never too late to take advantage of the IaaS capabilities exposed by Azure Stack Hub.

To facilitate this transition, we’ve come up with a series of scripts (under the name “subscription replicator”) that read the resources in an existing Azure Stack Hub user subscription and recreate the ARM templates required to deploy them. This process applies only to the ARM resources themselves (virtual networks, NSGs, VMs, resource groups, etc.), not to the data that lives in the VMs or storage accounts.
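The subscription replicator does this with its own scripts; the Python sketch below only illustrates the underlying idea of turning an inventory of existing resources into a parameterized ARM template. The inventory format, the `build_template` and `param_name` helpers, and the parameter naming scheme are hypothetical. Only the template schema URL and the ARM template expressions (`parameters()`, `resourceGroup().location`) are standard ARM.

```python
import re

# Standard ARM deployment-template schema URL; the inventory format is hypothetical.
TEMPLATE_SCHEMA = (
    "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#"
)


def param_name(resource_name: str) -> str:
    """Derive a parameter name from a resource name (non-alphanumerics become '_')."""
    return re.sub(r"[^0-9A-Za-z]", "_", resource_name) + "_name"


def build_template(inventory: list[dict]) -> dict:
    """Turn a hypothetical resource inventory into an ARM template skeleton.

    Each resource name is lifted into a parameter whose default is the original
    value, so the same template can be redeployed elsewhere with new names.
    """
    parameters = {}
    resources = []
    for res in inventory:
        p = param_name(res["name"])
        parameters[p] = {"type": "string", "defaultValue": res["name"]}
        resources.append(
            {
                "type": res["type"],
                "apiVersion": res["apiVersion"],  # copied from the inventory as-is
                "name": f"[parameters('{p}')]",
                "location": "[resourceGroup().location]",
                "properties": res.get("properties", {}),
            }
        )
    return {
        "$schema": TEMPLATE_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": parameters,
        "resources": resources,
    }
```

Lifting names into parameters is what lets the same template describe both the source and the target environment; the resource definitions stay identical and only the parameter files differ.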

We initially used this process to replicate resources across stamps, for customers who wanted to change something in the original Azure Stack Hub stamp. However, the same process can be used in a wider range of scenarios:

- Moving from an ASDK (POC) environment to a production Azure Stack Hub

- Updating ASDKs to a newer version, without losing everything created

- Creating BCDR across multiple stamps

- Starting your journey towards an Infrastructure as Code approach

Having the ARM templates required to deploy the resources could be the starting point for building a DevOps practice, including CI/CD pipelines for your infrastructure, and for building the next deployments as code.

The following example is based on a scenario where you’ve used an ASDK to build your solution. This could be a POC, or the start of a project where things were tested in the ASDK. Once that work is complete, you plan to move to an Azure Stack Hub Integrated System and want to start an IaC practice as well.

Source environment

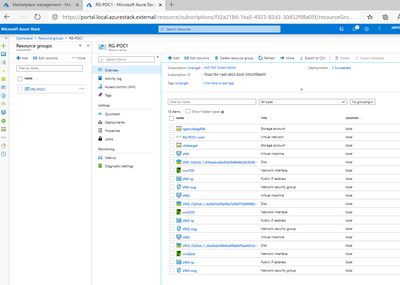

In this example, the solution consists of:

- 3 Virtual Machines of different sizes, each with various NSGs and rules

- One virtual network, which all these VMs are linked to

- One storage account used to host the boot diagnostics for the 3 VMs

- RBAC role assignments on some of the resources

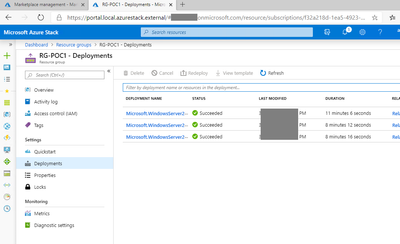

All these resources were deployed as part of 3 different deployments.

This reflects many cases where the initial deployment is done through the portal, and things are then added, changed, adjusted, and stabilized over time.

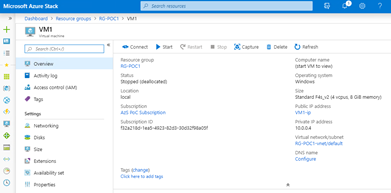

The VMs have different sizes and could have various settings, numbers of disks, or even NICs attached to them:

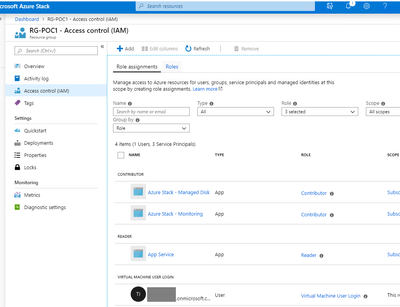

The RBAC side has a few aspects to consider:

- The users in a PoC will typically differ from those in production, so when “moving” these resources you will need to convert the role assignments accordingly.

- Custom RBAC roles will need to be populated accordingly as well: their membership will change, so when migrating you need to account for what happens to these roles.

- RBAC is not handled automatically by the scripts.

The last point is due to the complexities around roles. This article does not cover ways to replicate custom RBAC roles, but roles can be created and adapted as needed on each Azure Stack Hub stamp.

In this case, I created a new role called “Virtual Machine User Login” (the same as the Azure role with the same name; for more information, see the links below).

The role is assigned to a user at the RG level. Remember that everything is deployed in a single RG in this case, so this user has rights on all the VMs in this RG.

Links

- If you are looking to configure custom roles, a good starting point is this exercise from the operator guide workshop.

- The Azure Stack Hub Foundation Core materials (https://github.com/Azure-Samples/Azure-Stack-Hub-Foundation-Core/) are a set of resources (PowerPoint presentations, workshops, and links to videos) that aim to give Azure Stack Hub operators the foundational material required to ramp up and understand the basics of operating Azure Stack Hub.

Target environment

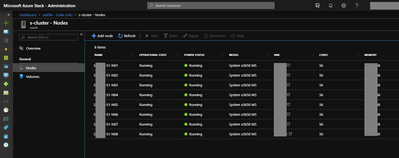

The target environment is an 8-node Azure Stack Hub Integrated System configured with Internet access. This isn’t required, as the Azure Stack Hub system could use “local” IP ranges (local to the enterprise or datacenter it resides in), but in this case it does enable certain features.

For example, the ASDK is in a different location from the Azure Stack Hub Integrated System. Access from the ASDK is outbound only, yet we can reach the target environment directly even though it’s in a different location. Of course, networking needs to be taken into consideration whenever resources are moved across environments, and adjustments made accordingly.

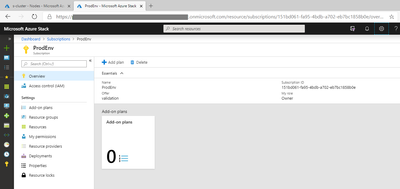

In the user portal, we’ve already created a user subscription with sufficient quotas to support the resources we plan to move. In a real migration, this would need to be properly assessed and planned in accordance with the capacity management plans for this Azure Stack Hub environment.

This subscription must exist before you run the scripts, because they use the tenant ID of the AAD directory as well as the ID of the user subscription where the resources will be created. Both are needed during the deployment, and the scripts hydrate these values when creating the ARM templates.

Note: Before starting the script, record the source and target tenant IDs and subscription IDs.
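Conceptually, hydrating templates for a new environment means replacing every occurrence of the source tenant and subscription IDs with the target ones. A minimal sketch of that substitution over parsed JSON, assuming a simple old-to-new ID mapping (the `swap_ids` helper and the GUIDs in the example are hypothetical, not part of the replicator):

```python
import re


def swap_ids(node, id_map: dict):
    """Recursively replace source tenant/subscription IDs with target IDs.

    Works on parsed JSON (dicts, lists, strings) and returns a new structure.
    Matching is case-insensitive because GUIDs can appear in either case
    inside resource IDs such as /subscriptions/<id>/resourceGroups/...
    """
    if isinstance(node, dict):
        return {key: swap_ids(value, id_map) for key, value in node.items()}
    if isinstance(node, list):
        return [swap_ids(item, id_map) for item in node]
    if isinstance(node, str):
        for old, new in id_map.items():
            # A function replacement avoids any backslash-escape handling of `new`.
            node = re.sub(re.escape(old), lambda _match: new, node, flags=re.IGNORECASE)
        return node
    return node  # numbers, booleans and None are left unchanged
```

For example, `swap_ids(params, {source_subscription_id: target_subscription_id})` rewrites a subnet reference such as `/subscriptions/<source>/resourceGroups/RG-POC1/...` to point at the target subscription while leaving every other value untouched.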

Moving the resources

ARM resources

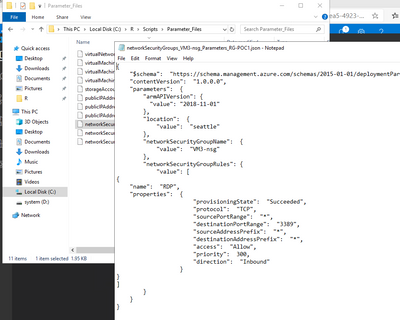

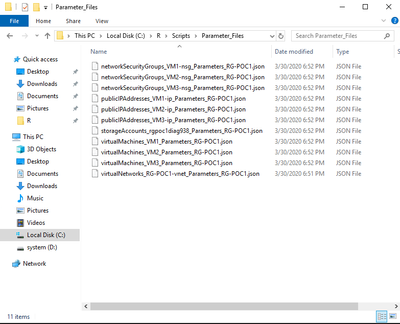

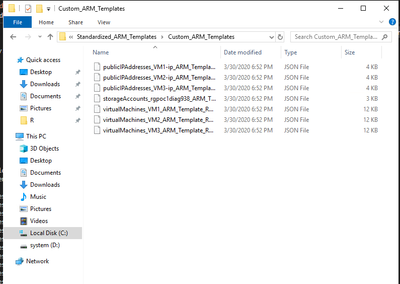

The location of the scripts, the process, and how to set up the environments are described in “Replicate resources using the Azure Stack Hub subscription replicator”. Completing those steps results in the creation of the parameter files as well as custom ARM templates where required.

These files are important because they act as a “snapshot” in time of your deployment. If you use them to build ARM templates for every deployment going forward, they become the “starting point” of your Infrastructure as Code journey.

The example given above is simple: a few virtual machines, a virtual network, and NSGs. Even so, notice how the parameters include the IP settings for each of those VMs, and how the NSGs include the configured ports.

You can easily imagine a more complex scenario, where these settings have been vetted by the networking team or follow a design that is standard in the company.

This can also be the moment to adjust these settings to reflect the naming convention of your production environment, or to configure ports required by other production applications. Since every ARM resource in the deployment is characterized through these parameters, this is also a good time to standardize and ensure the target requirements are met.
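As an illustration of standardizing parameters before deploying, the sketch below applies a production prefix to selected name parameters in an ARM parameters document (the `{"parameters": {"<name>": {"value": ...}}}` layout) and then checks the result against a naming pattern. The helper names, the `prd-` prefix, the pattern, and which parameter keys hold resource names are all assumptions; the replicator's generated files may name their parameters differently.

```python
import copy
import re


def apply_prefix(param_doc: dict, prefix: str, name_keys: list[str]) -> dict:
    """Return a copy of an ARM parameters document with `prefix` added to the
    values of the listed name parameters.

    Values that already start with the prefix are left unchanged, so running
    the function twice gives the same result.
    """
    doc = copy.deepcopy(param_doc)
    for key in name_keys:
        entry = doc["parameters"][key]  # KeyError if the parameter is missing
        if not entry["value"].startswith(prefix):
            entry["value"] = prefix + entry["value"]
    return doc


def nonconforming(param_doc: dict, name_keys: list[str], pattern: str) -> list[str]:
    """List the name parameters whose values do not fully match `pattern`."""
    return [
        key
        for key in name_keys
        if not re.fullmatch(pattern, param_doc["parameters"][key]["value"])
    ]
```

Passing the name keys explicitly, rather than guessing them from a suffix such as "name", avoids accidentally renaming values like an admin user name.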

In our scenario, we are moving from an ASDK (an isolated environment with certain restrictions) to an Azure Stack Hub Integrated System. Following the deployment steps in “Replicate resources using the Azure Stack Hub subscription replicator”, you first deploy the resource group (RG) and then start the actual deployment.

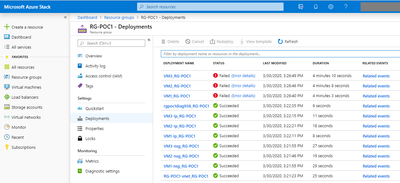

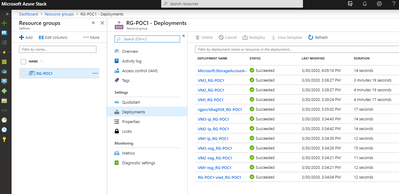

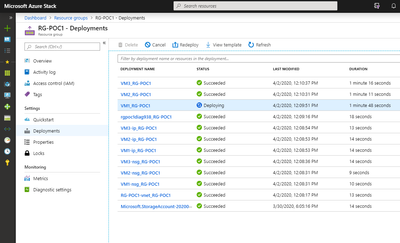

As the deployment runs, you’ll notice the resources being deployed in dependency order: first resources such as virtual networks, public IPs, storage accounts, and NSGs, and then the VMs themselves (which depend on all the others).
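ARM works out this order from each resource's `dependsOn` list, and resources that don't depend on each other can be deployed in parallel. The sketch below models that with Python's standard `graphlib` module by grouping resources into waves, where every resource in a wave depends only on resources in earlier waves. It is a simplification: `dependsOn` holds plain names here rather than the `resourceId()` expressions real templates use, and the resource names are hypothetical.

```python
from graphlib import TopologicalSorter


def deployment_waves(resources: list[dict]) -> list[list[str]]:
    """Group resources into waves that respect their dependsOn lists.

    Simplification: dependsOn contains plain resource names, whereas real ARM
    templates usually reference dependencies through resourceId() expressions.
    """
    graph = {r["name"]: set(r.get("dependsOn", [])) for r in resources}
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)
    return waves
```

For a VM that needs a NIC (which in turn needs the virtual network, NSG, and public IP) plus a boot diagnostics storage account, this yields three waves: the network and storage resources first, then the NIC, then the VM, matching the order you see in the portal.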

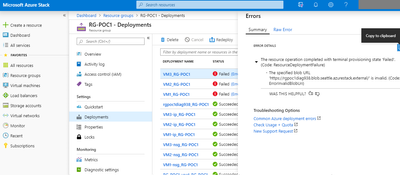

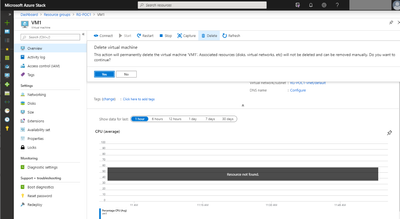

In my case, the VM creation failed the first time I ran the script.

As you can see, the first resources were deployed successfully, but not the VMs themselves. A quick check on the deployment revealed the issue:

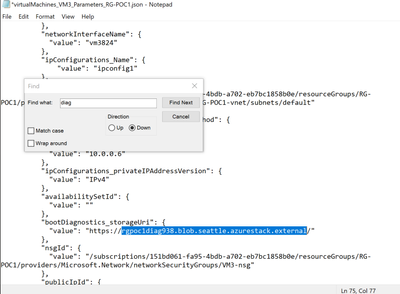

Checking the parameters file showed that the script expected a different region for that storage account. Endpoints using “azurestack.external” are specific to the ASDK and are not something you would see on an Azure Stack Hub Integrated System, where this is replaced by the system's FQDN.

Since this storage account is used only for boot diagnostics (and is shared by all 3 VMs), a quick change in the 3 parameter files (one for each VM) got the deployment back on track.
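The same fix can be scripted when several parameter files are affected. A minimal sketch, assuming the ASDK endpoints use the default `local` region and the `azurestack.external` domain (for example `https://<account>.blob.local.azurestack.external/`), with hypothetical target values (`region1`, `contoso.com`) that you would replace with your integrated system's region and FQDN. The helper names are illustrative, not part of the replicator.

```python
import re
from pathlib import Path

# ASDK endpoints end in ".local.azurestack.external" (region "local").
ASDK_SUFFIX = re.compile(r"\.local\.azurestack\.external", re.IGNORECASE)


def retarget_endpoints(text: str, region: str, fqdn: str) -> tuple[str, int]:
    """Swap the ASDK region/domain suffix for the integrated system's.

    Returns the rewritten text and the number of replacements made.
    """
    return ASDK_SUFFIX.subn(f".{region}.{fqdn}", text)


def retarget_folder(folder: str, region: str, fqdn: str) -> list[str]:
    """Rewrite every *.json file in `folder` in place; return the changed names."""
    changed = []
    for path in sorted(Path(folder).glob("*.json")):
        new_text, count = retarget_endpoints(
            path.read_text(encoding="utf-8"), region, fqdn
        )
        if count:
            path.write_text(new_text, encoding="utf-8")
            changed.append(path.name)
    return changed
```

Since `retarget_folder` edits files in place, keep a copy of the generated parameter files (or commit them to source control) before running it, so the change can be reviewed as a diff.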

Moving the data

The steps above create the ARM resources (vnets, NSGs, VMs, storage accounts, etc.) but not the actual data in those storage accounts or inside the VMs themselves. For the data, you would need either to rely on a third-party backup and recovery partner (https://azure.microsoft.com/en-us/blog/azure-stack-laas-part-two/) or to copy the VHDs from the source.

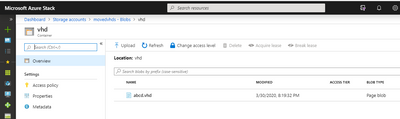

In our current scenario, it’s not an issue to turn off the VM and export the disk.

Note: Since the source VM uses a managed disk, you first need to export this disk to a VHD and then copy it (the process is similar to the one used in Azure to export and copy VHDs across regions).

Once copied, the VHD needs to be uploaded to the Azure Stack Hub Integrated System.

On this system, we’ve already run “DeployResources.ps1” as part of our deployment, which means all the VMs have already been deployed.

One option would be to remove everything and deploy it again using the same scripts. However, since the generated ARM templates use incremental deployments, deleting just the VM and changing its ARM template also works:

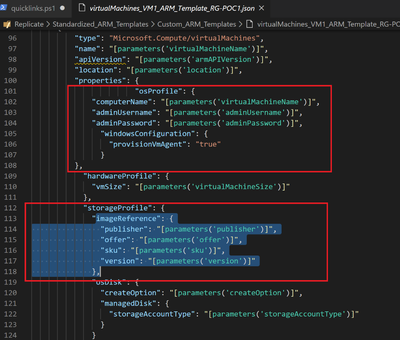

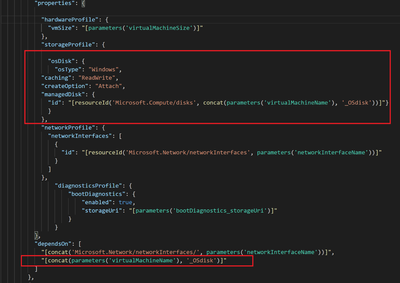
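To see why deleting only the VM is enough, the sketch below models the difference between ARM's Incremental and Complete deployment modes at the level of resource names: in Incremental mode, resources in the resource group that are not in the template are left alone, while resources in the template are created or redeployed; Complete mode would also delete the extras. This is a simplified model for illustration only (it ignores property-level changes), and the `plan` helper and resource names are hypothetical.

```python
def plan(existing: set[str], template: set[str], mode: str = "Incremental") -> dict[str, list[str]]:
    """Model which resources a resource-group deployment creates, redeploys or deletes.

    Simplified: only resource names are compared, not their properties.
    """
    if mode not in ("Incremental", "Complete"):
        raise ValueError(f"unknown deployment mode: {mode}")
    return {
        "create": sorted(template - existing),    # in the template, not in the RG
        "redeploy": sorted(template & existing),  # in both: updated to match the template
        # Only Complete mode removes resources that the template does not mention.
        "delete": sorted(existing - template) if mode == "Complete" else [],
    }
```

With VM1 deleted from the resource group, an Incremental run recreates VM1 (and its new OS disk) and redeploys the unchanged network and storage resources, which is what the deployments tab shows after the rerun.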

The ARM template used to create the VM (in this case, the “Standardized_ARM_Templates\Custom_ARM_Templates\virtualMachines_VM1_ARM_Template_RG-POC1.json” file) uses an image from the Azure Stack Hub Marketplace (here, a new Windows Server 2019 image). To change this template, we need to remove the parts that refer to this marketplace item:

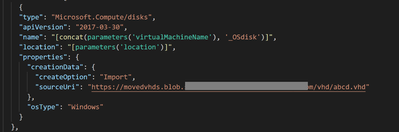

Then replace them with a new managed disk created from the existing VHD, and define the VM so that it uses that managed disk and takes a dependency on it.

Note: As you build these, try to use parameters and ARM templates as much as possible. At first, it may seem easier to change things in the portal, but having them as code will help drive the Infrastructure as Code practice.
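In that spirit, the template change can itself be scripted. The sketch below edits a parsed ARM template so that the VM attaches a managed disk imported from a VHD instead of deploying from a marketplace image: it removes `imageReference` and `osProfile` (an attached OS disk already carries its own OS configuration), sets the OS disk's `createOption` to `Attach`, and adds a `Microsoft.Compute/disks` resource with `createOption: Import`. The helper and parameter names are hypothetical, it assumes a Windows OS disk, and the disk `apiVersion` is a placeholder to check against the API profile your stamp supports.

```python
import copy

# Assumption: verify this against the API versions supported by your Azure Stack Hub stamp.
DISK_API_VERSION = "2017-03-30"


def attach_imported_os_disk(template: dict, vm_name: str,
                            disk_param: str = "osDiskName",
                            vhd_param: str = "osDiskVhdUri") -> dict:
    """Return a copy of `template` where the VM boots from a disk imported from a VHD.

    `vm_name` must equal the VM resource's "name" field as written in the template;
    `disk_param` and `vhd_param` are hypothetical parameter names.
    """
    t = copy.deepcopy(template)
    params = t.setdefault("parameters", {})
    params[disk_param] = {"type": "string"}
    params[vhd_param] = {"type": "string"}
    disk_id = f"[resourceId('Microsoft.Compute/disks', parameters('{disk_param}'))]"

    vm = next((r for r in t["resources"]
               if r["type"] == "Microsoft.Compute/virtualMachines" and r["name"] == vm_name),
              None)
    if vm is None:
        raise ValueError(f"VM resource {vm_name!r} not found in template")

    props = vm["properties"]
    props.pop("osProfile", None)  # not allowed when attaching an existing OS disk
    storage = props["storageProfile"]
    storage.pop("imageReference", None)  # drop the marketplace image
    storage["osDisk"] = {
        "osType": "Windows",
        "createOption": "Attach",
        "managedDisk": {"id": disk_id},
    }
    vm.setdefault("dependsOn", []).append(disk_id)  # create the disk before the VM

    # Managed disk created by importing the uploaded VHD.
    t["resources"].append({
        "type": "Microsoft.Compute/disks",
        "apiVersion": DISK_API_VERSION,
        "name": f"[parameters('{disk_param}')]",
        "location": "[resourceGroup().location]",
        "properties": {
            "osType": "Windows",
            "creationData": {"createOption": "Import",
                             "sourceUri": f"[parameters('{vhd_param}')]"},
        },
    })
    return t
```

The VHD URI and disk name then live in the parameter file next to the other VM settings, which keeps this one-off migration step reproducible as code.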

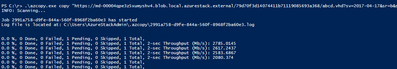

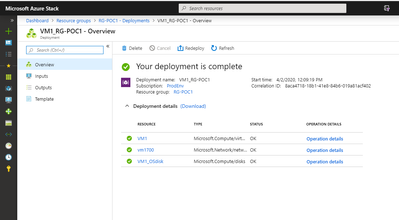

To deploy, simply rerun DeployResources.ps1, using the same password for the Windows VMs.

As you’ll notice in the deployments tab, most of the resources do not change, but VM1 gets an extra item (the managed disk), and the VM is created using that OS disk.

Once the deployment completes, you can log in to this VM using the original user name and password.

Note: Since the ASDK uses a specific set of “public IPs” (IPs NATed by the ASDK), the public IPs change when the resources are created on the new system, but the private IPs can be kept if needed.
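If you keep the private IPs, each static address must still fall inside the target subnet and stay unique. A small pre-deployment check, assuming you have collected a NIC-to-IP mapping from the parameter files (the `check_private_ips` helper, NIC names, and prefix are hypothetical):

```python
import ipaddress
from collections import Counter


def check_private_ips(nic_ips: dict[str, str], subnet_prefix: str) -> dict[str, list[str]]:
    """Report NICs whose static IP is outside the subnet, and IPs used more than once."""
    subnet = ipaddress.ip_network(subnet_prefix)
    outside = sorted(
        nic for nic, ip in nic_ips.items() if ipaddress.ip_address(ip) not in subnet
    )
    counts = Counter(nic_ips.values())
    duplicates = sorted(ip for ip, n in counts.items() if n > 1)
    return {"outside_subnet": outside, "duplicate_ips": duplicates}
```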

Once you have started this Infrastructure as Code journey, all the servers, the networking, the storage accounts, and every other part of the infrastructure can be described as code. This code can be included in a CI/CD pipeline with tests, so that when something changes (a VM is modified or a new server is added), tests run to make sure things still work as expected. Infrastructure as Code is a way of managing and provisioning resources declaratively, taking advantage of the IaaS features exposed by Azure Stack Hub in a manner consistent with Azure operations.
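As an example of such a test, the sketch below scans a parsed ARM template for NSG rules that allow inbound traffic on ports outside an approved list. A pipeline step could run it over every generated template and fail the build if anything is returned. The approved-port policy and the helper are hypothetical; the property names (`securityRules`, `direction`, `access`, `destinationPortRange`, `destinationPortRanges`) follow the ARM NSG schema.

```python
# Hypothetical policy: the only inbound ports allowed by NSG rules.
APPROVED_INBOUND_PORTS = {"22", "443", "3389"}


def nsg_violations(template: dict, approved: set[str] = APPROVED_INBOUND_PORTS) -> list[str]:
    """Return "<nsg>/<rule>: <port>" for every inbound Allow rule on an unapproved port.

    Port ranges such as "1000-2000" and the wildcard "*" are reported as-is,
    since they are not in the approved set.
    """
    violations = []
    for res in template.get("resources", []):
        if res.get("type") != "Microsoft.Network/networkSecurityGroups":
            continue
        for rule in res.get("properties", {}).get("securityRules", []):
            props = rule.get("properties", {})
            if props.get("direction") != "Inbound" or props.get("access") != "Allow":
                continue
            ports = []
            if "destinationPortRange" in props:  # single port, range, or "*"
                ports.append(props["destinationPortRange"])
            ports.extend(props.get("destinationPortRanges", []))  # list form
            violations.extend(
                f"{res['name']}/{rule['name']}: {port}" for port in ports if port not in approved
            )
    return violations
```

Keeping the policy as data (the approved set) rather than hard-coding it in the check makes it easy for the networking team to review and change through the same pull-request process as the templates.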