This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

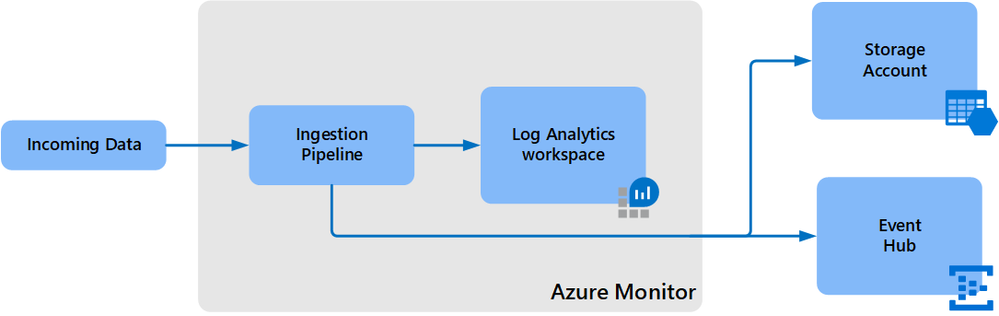

Log Analytics data export lets you export data from selected tables in your Log Analytics workspace as it reaches ingestion, and continuously export it to an Azure storage account or event hub.

Benefits

- Native capability that is designed for scale

- Long retention for auditing and compliance in storage, well beyond the 2 years supported in a workspace

- Low cost retention in storage

- Integration with Azure, third-party, and external solutions such as Data Lake and Splunk through event hub

- Near-real-time applications and alerting through event hub

How does it work?

Data export was designed as the native export path for Log Analytics data and, in some cases, can replace alternative solutions that are based on the query API and bound by its limits. Once data export rules are configured in your workspace, any new data that arrives at the Log Analytics ingestion endpoint and is targeted to the selected tables in your workspace is exported to your storage account hourly, or to event hub in near-real-time.

When exporting to storage, each table is kept under a separate container. Similarly, when exporting to event hub, each table is exported to a new event hub instance. Data export is regional and can be configured only when your workspace and destination (storage account or event hub) are located in the same region. If you need to replicate your data to other storage accounts, you can use any of the Azure Storage redundancy options.
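
The same-region requirement can be checked before a rule is submitted. The sketch below is a hypothetical pre-flight helper, not part of any Azure SDK; the function name and the way region names are normalized are assumptions made for illustration.

```python
def export_rule_problems(workspace_region, destination_region, tables):
    """Return a list of problems; an empty list means the rule looks plausible."""

    def normalize(region):
        # Treat "West Europe" and "westeurope" as the same region name.
        return region.replace(" ", "").lower()

    problems = []
    if normalize(workspace_region) != normalize(destination_region):
        problems.append("workspace and destination must be in the same region")
    if not tables:
        problems.append("at least one table must be selected")
    return problems


# Hypothetical inputs: one valid rule, one with a region mismatch.
print(export_rule_problems("West Europe", "westeurope", ["SecurityEvent", "Heartbeat"]))
print(export_rule_problems("westeurope", "eastus", ["SecurityEvent"]))
```

A check like this only catches the obvious mistakes early; the service itself remains the authority on whether a rule is accepted.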

Configuration is currently available via CLI and REST requests; support in the UI and PowerShell will be added in the near future.

When you define a data export rule, for example one that targets a storage account, no data is exported for a table that isn't currently supported. Once that table becomes supported, its data starts being exported automatically.
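
To make the shape of such a rule concrete, here is a minimal Python sketch that builds the URL and JSON body of a REST request for a rule that exports two tables to a storage account. Nothing here is taken from the post itself: the resource path, the `api-version` value, the property names (`destination`, `tableNames`, `enable`), and all resource names are assumptions based on the public Azure Resource Manager conventions, so verify them against the data export documentation before use. The sketch only builds the request; it does not send it or handle authentication.

```python
import json

# Assumed values, for illustration only.
ARM_ENDPOINT = "https://management.azure.com"
API_VERSION = "2020-08-01"


def build_export_rule_request(subscription_id, resource_group, workspace,
                              rule_name, destination_resource_id, tables):
    """Return (url, body) for a PUT request that creates a data export rule."""
    url = (
        f"{ARM_ENDPOINT}/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.OperationalInsights/workspaces/{workspace}"
        f"/dataExports/{rule_name}?api-version={API_VERSION}"
    )
    body = {
        "properties": {
            # Resource ID of the storage account (or event hub namespace).
            "destination": {"resourceId": destination_resource_id},
            # Tables to export; unsupported tables simply produce no data.
            "tableNames": list(tables),
            "enable": True,
        }
    }
    return url, body


# Hypothetical subscription, resource group, workspace, and storage account.
url, body = build_export_rule_request(
    subscription_id="00000000-0000-0000-0000-000000000000",
    resource_group="my-rg",
    workspace="my-workspace",
    rule_name="export-to-storage",
    destination_resource_id=(
        "/subscriptions/00000000-0000-0000-0000-000000000000"
        "/resourceGroups/my-rg/providers/Microsoft.Storage"
        "/storageAccounts/mystorageaccount"
    ),
    tables=["SecurityEvent", "Heartbeat"],
)
print(url)
print(json.dumps(body, indent=2))
```

The printed body can be sent with any HTTP client, together with a bearer token for Azure Resource Manager.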

Supported regions

All regions except Switzerland North, Switzerland West, and government clouds. Support for these will be added gradually.

Some points to consider

- Not all tables are currently supported in export, and we are working to add more gradually. Some tables, such as custom logs, require significant work and will take longer. A list of supported tables is available here.

- When exporting to event hub, we recommend the Standard or Dedicated SKUs. The event size limit in Basic is 256 KB, and some logs exceed it.

- Log Analytics data export writes append blobs to storage. You can export to immutable storage when time-based retention policies have the allowProtectedAppendWrites setting enabled. This allows writing new blocks to an append blob, while maintaining immutability protection and compliance. Learn more

- Azure Data Lake Storage Gen2 support for append blobs is in preview and requires registration before export configuration can be set. You need to open a support request to register the subscription where your Azure Data Lake Storage Gen2 account is located.
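
The Basic tier size limit mentioned in the list above can be estimated ahead of time for a sample of your own logs. The sketch below is a rough check under stated assumptions: it measures a single JSON-serialized record, whereas the size of the events the service actually sends may differ, and the record fields shown are made up for illustration.

```python
import json

BASIC_TIER_EVENT_LIMIT_BYTES = 256 * 1024  # the 256 KB Basic limit noted above


def exceeds_basic_limit(record: dict) -> bool:
    """Return True if the JSON-serialized record is larger than the Basic limit."""
    size_in_bytes = len(json.dumps(record).encode("utf-8"))
    return size_in_bytes > BASIC_TIER_EVENT_LIMIT_BYTES


# Hypothetical records: a short one and one with a very long message field.
small = {"TimeGenerated": "2020-10-01T00:00:00Z", "Message": "ok"}
large = {"TimeGenerated": "2020-10-01T00:00:00Z", "Message": "x" * 300_000}
print(exceeds_basic_limit(small), exceeds_basic_limit(large))
```

If a meaningful share of sampled records comes close to the limit, that supports choosing Standard or Dedicated, as recommended above.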

Next steps

- Review data export documentation for more information and configuration of the feature.

- Learn how to query and export data using a Logic App flow

- Once you have exported your data to storage, learn how to query the exported data using ADX

Please do let us know of any questions or feedback you have around the feature.