This post has been republished via RSS; it originally appeared at: AI Customer Engineering Team articles.

In a previous blog post (Video Anomaly Detection with Deep Predictive Coding Networks), I provided an overview for how to train a recurrent neural network to detect behavioral anomalies in videos.

In this post we are going to take it a step further: Let’s imagine the University of California, San Diego was so impressed with the performance of your model in detecting unusual events on a sidewalk that they want to detect anomalies in video feeds from all sorts of cameras on campus. Some of these cameras similarly monitor sidewalks, but others might observe other types of scenes, e.g. the entrance to the library or a parking lot.

How can we scale our existing solution so that we can quickly offer anomaly detection at all these other locations? Can we do better than the brute-force approach of repeating every step from the previous blog post for each of these cameras? Luckily, the Microsoft Azure Machine Learning (AML) service offers a couple of useful tools that make our lives much easier and allow us to train and deploy models much more quickly.

First, the steps for developing a model are analogous for each of the cameras: (1) video decoding, (2) data augmentation, (3) training with hyperparameter sweeps, (4) model registration. It would be nice if we could define a pipeline that automatically executes these steps in sequence for each camera. This pipeline could cache the intermediate results of pipeline steps from previous runs, to avoid redundant re-runs of pipeline steps. This is exactly what AML pipelines were built for. We can further attach a Scheduler to each pipeline, to monitor our Azure blob storage container for new training data. This scheduler then automatically triggers a pipeline run to ensure that our models stay up-to-date. For example, maybe the original training data was collected in the middle of summer, but we want to make sure that our model also works in the winter when there is snow on the ground.
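To make the caching idea concrete, here is a minimal, self-contained sketch. It is not how AML pipelines are implemented internally; `fingerprint`, `run_step`, and the in-memory `cache` are hypothetical names that I use only to show why a step whose script and inputs are unchanged does not have to run again.

```python
import hashlib
import json

# Hypothetical in-memory cache: fingerprint -> step output.
cache = {}
# Records which steps actually executed, for demonstration only.
executed = []


def fingerprint(step_name, script_version, inputs):
    """Hash everything that determines the output of a step."""
    payload = json.dumps(
        {"step": step_name, "script": script_version, "inputs": inputs},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def run_step(step_name, script_version, inputs, func):
    """Run func(inputs) unless an identical step has already been run."""
    key = fingerprint(step_name, script_version, inputs)
    if key not in cache:
        executed.append(step_name)
        cache[key] = func(inputs)
    return cache[key]


def decode(inputs):
    # Stand-in for video decoding: one "frame" name per video file.
    return [name.replace(".avi", "_frame0.tif") for name in inputs]


first = run_step("video_decoding", "v1", ["a.avi", "b.avi"], decode)
second = run_step("video_decoding", "v1", ["a.avi", "b.avi"], decode)  # reused
third = run_step("video_decoding", "v1", ["a.avi", "c.avi"], decode)   # new input
```

The second call returns the cached result without calling `decode` again, while the third call runs because its inputs differ.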

Second, we already have a trained model for anomaly detection. We can therefore try to speed things up with transfer learning: Instead of training from scratch, we load the parameters (weights) of the trained model and start training on a new dataset from there. One way to achieve this is to pull an already trained model from our Azure Machine Learning service Workspace to then train the model on the new data.

Third, in the previous blog post I did not discuss how to find hyperparameters (e.g. learning rate and learning rate decay) for our model; I simply picked some that worked. Whether these same hyperparameters will work for a different camera is far from certain. For example, one often uses smaller learning rates during transfer learning to keep the model from unlearning what it already knows. We will also want to use different hyperparameters depending on whether we perform transfer learning or train from scratch.
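To illustrate how the two hyperparameters mentioned above interact, here is a small sketch of time-based learning rate decay, the rule used by the legacy Keras optimizers. Whether train.py uses exactly this rule is an assumption on my part, and the starting values below are hypothetical numbers chosen only for illustration.

```python
def decayed_learning_rate(initial_lr, decay, iteration):
    """Time-based decay: the rate shrinks as 1 / (1 + decay * iteration)."""
    return initial_lr / (1.0 + decay * iteration)


# Hypothetical starting points: a larger rate when training from scratch,
# a smaller one when fine-tuning a model that is already trained.
scratch_lr = 1e-3
transfer_lr = 1e-4

# Learning rate after 0, 100 and 1000 updates with a decay of 0.01.
schedule = [decayed_learning_rate(scratch_lr, 1e-2, i) for i in (0, 100, 1000)]
```

With these numbers the rate halves after 100 updates and drops to about a tenth of its initial value after 1000 updates.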

To build this new, scalable solution, we will go through the following steps:

- Setting up an AML pipeline (with a DataStore scheduler)

- Performing transfer learning

- Performing hyperparameter sweeps with HyperDrive

Setting up an AML pipeline

(see pipelines_create.py)

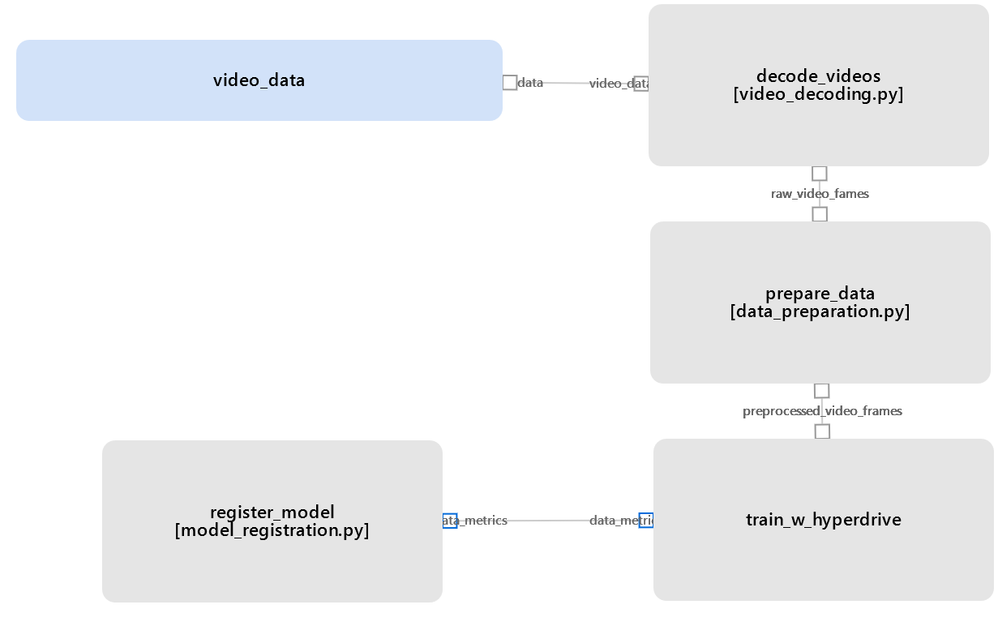

Figure 1: Screenshot of the AML pipeline in the Azure Portal.

As you can see in Figure 1, our AML pipeline for training a model contains the following steps:

- video_decoding - extract individual frames of the video and store them in separate files (e.g. tiff).

- data_prep - scale and crop images so that their size matches the size of the input layer of our model.

- train_w_hyperdrive - train the model using HyperDrive.

- register_model - register the model in the AML workspace for later deployment.

We define the first, second, and fourth of these steps (video_decoding, data_prep, and register_model) with the PythonScriptStep class. For example, video_decoding looks like this:

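    from azureml.pipeline.steps import PythonScriptStep

    # video_data, raw_data, cpu_compute_target, script_folder and
    # cpu_compute_run_config are assumed to be defined elsewhere
    # (see pipelines_create.py)
    video_decoding = PythonScriptStep(
        name='decode_videos',
        script_name="video_decoding.py",
        arguments=["--input_data", video_data, "--output_data", raw_data],
        inputs=[video_data],
        outputs=[raw_data],
        compute_target=cpu_compute_target,
        source_directory=script_folder,
        runconfig=cpu_compute_run_config,
        allow_reuse=True  # reuse earlier results when the step is re-run with the same settings
    )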

A PythonScriptStep is the most generic kind of step in AML pipelines. You can use it to run any kind of Python script (e.g. video_decoding.py). If your script accepts input and output data, you can specify those as well. Don’t forget to also pass them as script arguments, so that the script knows where to read its input and where to write its output. The PythonScriptStep also lets you configure the compute target and the Python environment in which the script is executed.
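On the script side, the arguments passed by the step have to be parsed. The following is a minimal sketch of how a script such as video_decoding.py might do that with argparse. The argument names match the step definition above, but the function `parse_args` and the example paths are my own assumptions, not the actual contents of the script.

```python
import argparse


def parse_args(argv=None):
    """Parse the arguments that the pipeline step passes to the script."""
    parser = argparse.ArgumentParser(description="Decode videos into frames")
    parser.add_argument("--input_data", required=True,
                        help="folder containing the video files")
    parser.add_argument("--output_data", required=True,
                        help="folder that receives the decoded frames")
    return parser.parse_args(argv)


# Example invocation with placeholder paths. In a pipeline run, the values
# come from the arguments list of the step instead.
args = parse_args(["--input_data", "/tmp/videos", "--output_data", "/tmp/frames"])
```

Passing `argv` explicitly keeps the function easy to test; when it is left as `None`, argparse reads the real command line.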

One of the most useful features of AML pipelines, in my opinion, is that you can attach a Scheduler to a published AML pipeline. This scheduler can poll a defined storage location at a given interval. This means that we have an opportunity to automatically retrain our model whenever new training data is available. For example, you can set up the scheduler to check your Azure Blob storage container every hour to see whether there is new training data available:

    import os
    from azureml.pipeline.core import Schedule

    schedule = Schedule.create(
        workspace=ws,
        name=pipeline_name + "_sch",
        pipeline_id=published_pipeline.id,
        experiment_name=pipeline_name,
        datastore=def_blob_store,
        wait_for_provisioning=True,
        description="Datastore scheduler for Pipeline " + pipeline_name,
        path_on_datastore=os.path.join('prednet/data/video/UCSD1/Train'),
        polling_interval=60  # in minutes, so the datastore is checked once per hour
    )

In this example, we are telling the scheduler to look at the DataStore def_blob_store (the default blob store of our AML workspace) and check the path_on_datastore for new video files.
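Conceptually, a datastore schedule boils down to asking "has anything been added or changed since I last looked?". The sketch below mimics that check on a local folder. It is only an illustration of the idea, not the mechanism AML uses; `find_new_files` and the temporary demo folder are hypothetical.

```python
import os
import tempfile
import time


def find_new_files(folder, last_check):
    """Return files in folder that were modified after the timestamp last_check."""
    new_files = []
    for root, _dirs, files in os.walk(folder):
        for name in files:
            path = os.path.join(root, name)
            if os.path.getmtime(path) > last_check:
                new_files.append(path)
    return sorted(new_files)


# Demonstration on a temporary local folder standing in for the datastore path.
demo_dir = tempfile.mkdtemp()
old_file = os.path.join(demo_dir, "old.avi")
new_file = os.path.join(demo_dir, "new.avi")
for path in (old_file, new_file):
    with open(path, "w") as handle:
        handle.write("placeholder")

# Pretend the last poll happened one hour ago, after old.avi was uploaded.
last_check = time.time() - 3600
os.utime(old_file, (last_check - 60, last_check - 60))

changed = find_new_files(demo_dir, last_check)
should_trigger = len(changed) > 0  # a new pipeline run would be started
```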

Performing transfer learning

Another trick when rolling out our solution to other cameras is to not train every model from scratch. Instead, we take an already trained model from the Azure Machine Learning service Workspace and continue training it on the data from a new camera. This can speed up the training process considerably.

For this to work, you need to register a trained model in the Model Registry of your AML service Workspace (see model_registration.py), so that you can then pull the model and use it for transfer learning. The following code shows how to pull and initialize a new model with the trained weights:

    import os

    from azureml.core import Workspace
    from azureml.core.model import Model
    from keras.models import model_from_json

    # PredNet (the custom Keras layer), config_json and layer_config are
    # assumed to be defined elsewhere in the project (see train.py)

    # connect to AML workspace
    ws = Workspace.from_config(path=config_json)

    # pull model from the model registry of the workspace
    model_root = Model.get_model_path('prednet_UCSDped1', _workspace=ws)

    # read the model architecture
    with open(os.path.join(model_root, 'model.json'), 'r') as json_file:
        model_json = json_file.read()

    # rebuild the trained model and load its weights
    trained_model = model_from_json(model_json, custom_objects={"PredNet": PredNet})
    trained_model.load_weights(os.path.join(model_root, "weights.hdf5"))

    # create instance of prednet model with the above weights and our layer_config
    prednet = PredNet(weights=trained_model.layers[1].get_weights(), **layer_config)

Now we can train our instance of PredNet as before but, instead of starting from randomly initialized weights, we start with the weights of the trained model (see train.py).

Performing hyperparameter sweeps with HyperDrive

One more thing we can do to optimize our process of scaling our video anomaly detection solution is to use HyperDrive to perform automated hyperparameter sweeps. This is useful if we want to train models on completely different training data because we don’t know whether we can use the same hyperparameters that we also used in our initial solution.

First, we define the hyperparameters that we are interested in and how to choose hyperparameters for the next run.

    from azureml.train.hyperdrive import RandomParameterSampling, choice, loguniform

    ps = RandomParameterSampling(
        {
            # […]
            '--learning_rate': loguniform(-6, -1),
            '--lr_decay': loguniform(-9, -1),
            # […]
            '--transfer_learning': choice("True", "False")
        }
    )
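The arguments of loguniform are easy to misread: they are bounds on the logarithm of the value, not on the value itself. HyperDrive documents loguniform(min_value, max_value) as drawing exp(uniform(min_value, max_value)). The sketch below reproduces that rule with the standard library; `sample_loguniform` is a hypothetical helper, not part of the SDK.

```python
import math
import random


def sample_loguniform(min_value, max_value, rng):
    """Draw exp(u), where u is uniform between min_value and max_value."""
    return math.exp(rng.uniform(min_value, max_value))


rng = random.Random(0)  # fixed seed so that the sketch is repeatable
learning_rates = [sample_loguniform(-6, -1, rng) for _ in range(1000)]

lowest_possible = math.exp(-6)   # about 0.0025
highest_possible = math.exp(-1)  # about 0.37
```

So loguniform(-6, -1) searches learning rates between roughly 0.0025 and 0.37, and spends as many trials between 0.0025 and 0.03 as between 0.03 and 0.37.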

Note how we are also including a hyperparameter called “transfer_learning.” This allows us to simultaneously evaluate whether training a model from scratch or training via transfer learning gives us an advantage.
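One practical detail: choice("True", "False") hands the training script a string, and in Python `bool("False")` evaluates to `True`, so the string has to be converted explicitly. The following is a minimal sketch of how a training script could do this. How train.py actually handles the flag is an assumption on my part, and `str_to_bool`, `parse_training_args`, and the default values are hypothetical.

```python
import argparse


def str_to_bool(value):
    """Convert the strings "True" and "False" (any case) to a bool."""
    lowered = value.strip().lower()
    if lowered == "true":
        return True
    if lowered == "false":
        return False
    raise argparse.ArgumentTypeError("expected True or False, got %r" % value)


def parse_training_args(argv=None):
    """Parse the hyperparameters that the sweep passes on the command line."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--learning_rate", type=float, default=1e-3)
    parser.add_argument("--lr_decay", type=float, default=0.0)
    parser.add_argument("--transfer_learning", type=str_to_bool, default=False)
    return parser.parse_args(argv)


args_transfer = parse_training_args(["--transfer_learning", "True"])
args_scratch = parse_training_args(["--transfer_learning", "False"])
```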

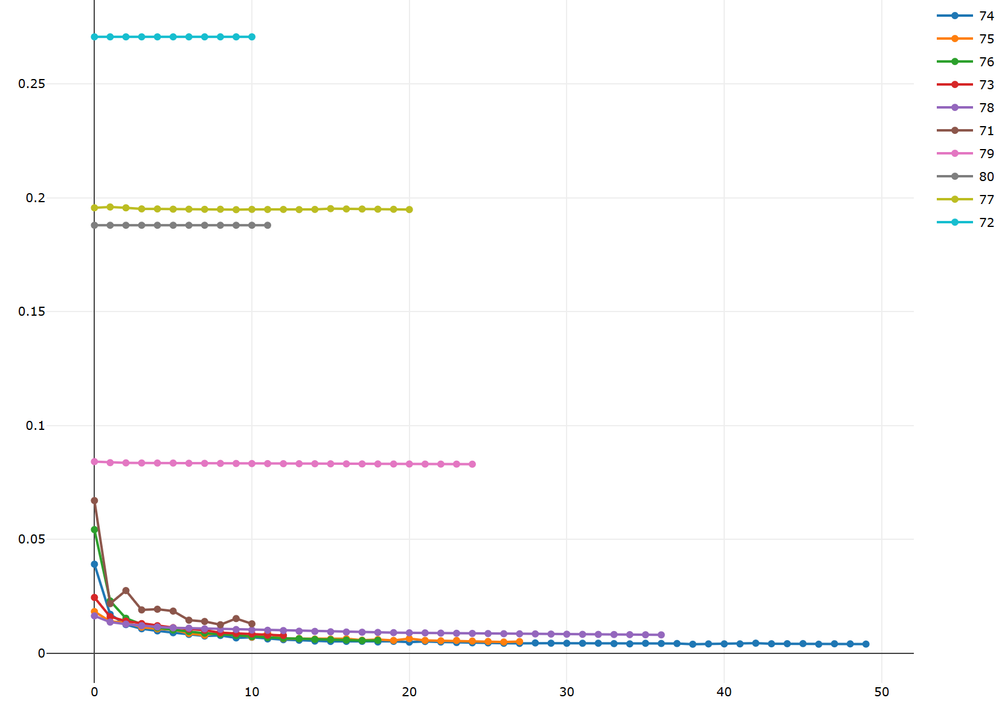

Next, we need to decide when a run should be aborted because it has become clear that its hyperparameter configuration is not going to lead to good model performance. Cancelling those unhelpful runs early keeps computing costs down. Here, we use a BanditPolicy, which is evaluated every 2 epochs. A training run is aborted when its performance (ability to predict the next video frame) falls outside the slack allowed by slack_factor relative to the best run after the same number of training epochs; with slack_factor=0.1, that means a validation loss more than roughly 10% above the best one. We wait for at least 10 epochs, because initial model performance is not a reliable predictor of performance after training is complete. Figure 2 illustrates the result of applying such a policy.

    from azureml.train.hyperdrive import BanditPolicy

    policy = BanditPolicy(evaluation_interval=2, slack_factor=0.1, delay_evaluation=10)
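To make the termination rule tangible, here is a simplified re-implementation in plain Python. It assumes a metric that should be minimized and that a run is cancelled once its value exceeds the best value times (1 + slack_factor). The exact bookkeeping inside HyperDrive may differ, and `should_terminate` and `apply_policy` are hypothetical helpers. The loss values are made up, loosely following the magnitudes in Figure 2.

```python
def should_terminate(run_metric, best_metric, slack_factor):
    """Bandit-style check for a metric that should be minimized."""
    allowed = best_metric * (1.0 + slack_factor)
    return run_metric > allowed


def apply_policy(history, best_history, slack_factor=0.1,
                 evaluation_interval=2, delay_evaluation=10):
    """Return the first epoch at which the run would be cancelled, else None."""
    for epoch in range(1, len(history) + 1):
        if epoch < delay_evaluation:
            continue  # too early to judge the run
        if epoch % evaluation_interval != 0:
            continue  # the policy is only applied every evaluation_interval epochs
        if should_terminate(history[epoch - 1], best_history[epoch - 1], slack_factor):
            return epoch
    return None


# Made-up validation losses per epoch (12 epochs each).
best_run = [0.05, 0.03, 0.02] + [0.01] * 9
bad_run = [0.30] * 3 + [0.27] * 9
close_run = [0.06, 0.04, 0.03] + [0.0105] * 9

cancelled_at = apply_policy(bad_run, best_run)
kept = apply_policy(close_run, best_run)
```

In this sketch the bad run is cancelled at epoch 10, the first evaluation after the delay, while the run that stays within 10% of the best one is allowed to continue.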

Instead of a PythonScriptStep for training, we use a HyperDriveStep for executing this step to get better resource management and logging of results.

    from azureml.pipeline.steps import HyperDriveStep
    from azureml.train.hyperdrive import HyperDriveConfig, PrimaryMetricGoal

    hdc = HyperDriveConfig(
        estimator=est,
        hyperparameter_sampling=ps,
        policy=policy,
        primary_metric_name='val_loss',
        primary_metric_goal=PrimaryMetricGoal.MINIMIZE,
        max_total_runs=10,
        max_concurrent_runs=5,
        max_duration_minutes=60*6
    )

    hd_step = HyperDriveStep(
        "train_w_hyperdrive",
        hdc,
        estimator_entry_script_arguments=[
            '--data-folder', preprocessed_data,
            ...
        ],
        inputs=[preprocessed_data],
        metrics_output=data_metrics,
        allow_reuse=True
    )

    # make sure training only starts once data preparation has finished
    hd_step.run_after(data_prep)
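Once the sweep has finished, a later step has to decide which run produced the best model. The sketch below picks the run with the lowest final val_loss from a dictionary of metrics. The layout (run ID, then metric name, then one value per epoch) is an assumption for illustration and may not match the file written to data_metrics; `best_run` and the example values are hypothetical.

```python
import json


def best_run(metrics, metric_name="val_loss"):
    """Return (run_id, value) for the run with the lowest final metric value."""
    finals = {}
    for run_id, run_metrics in metrics.items():
        values = run_metrics.get(metric_name)
        if values:  # skip runs that never logged the metric
            finals[run_id] = values[-1]
    if not finals:
        raise ValueError("no run logged %s" % metric_name)
    winner = min(finals, key=finals.get)
    return winner, finals[winner]


# Made-up metrics in the assumed layout: run id -> metric name -> values per epoch.
example = json.loads("""
{
  "run_1": {"val_loss": [0.30, 0.27]},
  "run_2": {"val_loss": [0.05, 0.02, 0.01]},
  "run_3": {}
}
""")
winner_id, winner_loss = best_run(example)
```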

Figure 2: Snapshot of run logs of a HyperDrive run in the Azure Portal. The horizontal axis shows the training epoch and the vertical axis shows the error in predicting each pixel in the next frame of the video. You can see that run #72 is cancelled after 10 epochs (at the end of the evaluation delay period) because its error of about 0.27 is much larger than the error of the best models (~0.01).

Summary

This blog post provided an overview of how to scale your video anomaly detection solution to include data from other cameras. In effect, all the user has to do is to upload training data for a new camera, and this solution then automatically trains a model to perform anomaly detection on this new camera. This is achieved by combining AML pipelines and HyperDrive.

Have you encountered similar scenarios in the past? I have encountered scenarios in many industry verticals in which anomaly detection could be useful. The following table gives a couple of examples.

| Industry | Use Case |
| --- | --- |
| Manufacturing | The model can detect accidents on the factory floor (e.g. explosions, violation of safety guidelines, injuries). |
| Transportation | This can be used to detect unusual or illegal events in crowds (e.g. traffic accidents, cars on bike paths, physical assault, injured pedestrians, illegally entering/exiting public transportation, anomalies in video of dashboard camera in car). |
| Health Care | The approach can be used for any kind of visual monitoring (e.g. hallways in hospitals, patients in bed, ultrasound). |
| Banking | The approach could be used to detect suspicious behavior (e.g. bank robbery, theft at ATM). |
| Farming | The approach can be used to detect anomalies in the behavior of ranch animals (e.g. limping, predators, thieves). |
| Education | Detecting bullying or other violence in schools or universities. |

Please be responsible when you implement such a solution. For example, take a look at the Microsoft AI principles before you deploy a solution.

The complete code for this blog post can be found on GitHub. Please let me know if you have any questions or comments!