This post has been republished via RSS; it originally appeared at: AzureCAT articles.

Co-Author: Hussein Shel

Credits: Ed Price, Azure CAT HPC Team

Goal of this Blog

The goal of this blog is to share our experiences running key Oil and Gas workloads in Azure. We have worked with multiple customers who run these workloads successfully in Azure. The cloud now has great potential in the Oil and Gas industry for optimizing business workflows that were previously limited by capacity and older hardware.

Targeted Business Workflows

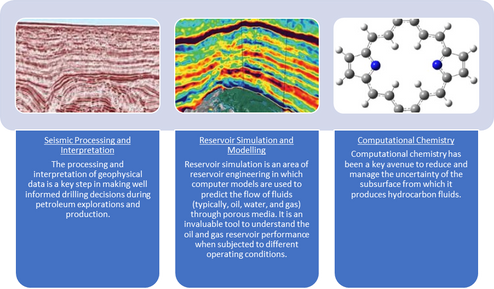

There are multiple user scenarios and workflows in Oil and Gas that use high-performance computing (HPC) to solve problems and run simulations that require massive amounts of data. The workflows that we focus on in this blog are Seismic Processing and Interpretation, Reservoir Simulation and Modeling, and Computational Chemistry. We refer back to these three workflows throughout this article. The figure in this section gives more information about them.

Running Your Oil and Gas Workload in Azure

The key advantages that the cloud brings are elasticity of resources and low infrastructure management overhead, which allow our customers to focus on their primary business. The IEEE article here (Section 1.2) presents these benefits in more detail.

Suppose you have decided to run your workload in Azure. Just as you would when planning your on-premises infrastructure, you need to make some key infrastructure choices in the cloud based on your workload’s requirements. Azure offers many choices, because each business and workload has its own unique needs. Here we lay out the Azure service architectures and offerings that we have commonly used in our projects with some key O&G customers.

In our experience, Reservoir and Seismic workflows typically have similar requirements for compute and job scheduling. However, Seismic workloads challenge the storage infrastructure with their multi-PB capacity requirements (a single seismic processing project may start with 500 TB of raw data, which requires a total of several PBs of long-term storage) and their throughput requirements (measured in hundreds of GB/s). Below we share a few options available today so you can successfully meet your goals for running your application in Azure.
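To make these storage figures concrete, the following minimal sketch estimates how long one full pass over a 500 TB raw dataset takes at different sustained aggregate throughputs, and how many storage servers a throughput target implies. It assumes decimal units (1 TB = 10^12 bytes), perfectly sustained throughput, and linear scaling across servers. The per-server figure of 2 GB/s is a hypothetical value chosen only for illustration, not a measured Azure number.

```python
import math

TB = 10**12  # bytes, decimal units (assumption)
GB = 10**9


def read_time_hours(dataset_bytes: float, throughput_bytes_per_s: float) -> float:
    """Hours needed to stream the dataset once at a sustained aggregate throughput."""
    return dataset_bytes / throughput_bytes_per_s / 3600


def servers_needed(target_bytes_per_s: float, per_server_bytes_per_s: float) -> int:
    """Storage servers required to reach the target, assuming linear scaling."""
    return math.ceil(target_bytes_per_s / per_server_bytes_per_s)


raw_data = 500 * TB  # raw data size quoted in the text

for gb_per_s in (1, 10, 100):
    hours = read_time_hours(raw_data, gb_per_s * GB)
    print(f"{gb_per_s:>4} GB/s -> {hours:7.1f} h per full pass")

# Hypothetical per-server throughput of 2 GB/s, for illustration only.
print("servers for 100 GB/s:", servers_needed(100 * GB, 2 * GB))
```

At 1 GB/s a single pass over the raw data takes close to six days, while at 100 GB/s it takes under an hour and a half, which is why aggregate throughput tends to drive the storage design for seismic processing.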

Typical Reference Deployments and Infrastructure Options

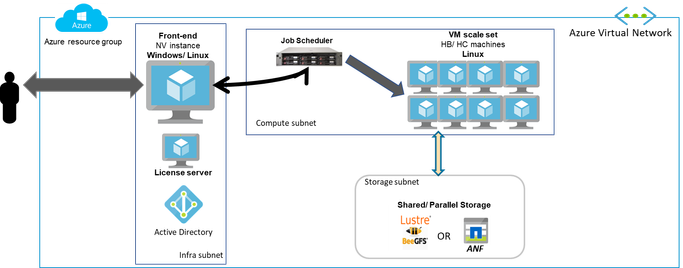

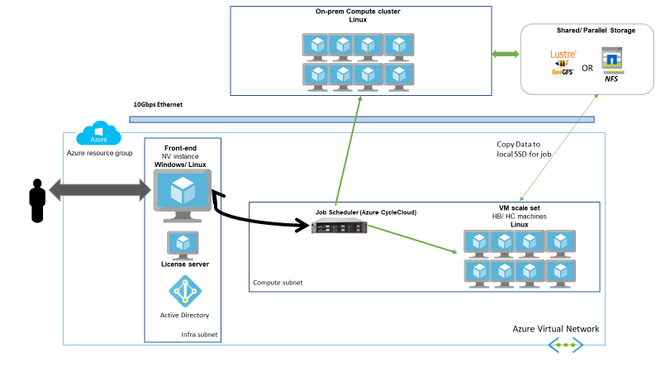

Below we are demonstrating two of the common deployment architectures we see for the O&G workloads. While it is less complex to have all your resources in the cloud (compute, storage, and visualization), it is not uncommon for our customers to have a hybrid model due to multiple business constraints.

Cloud Only

Hybrid

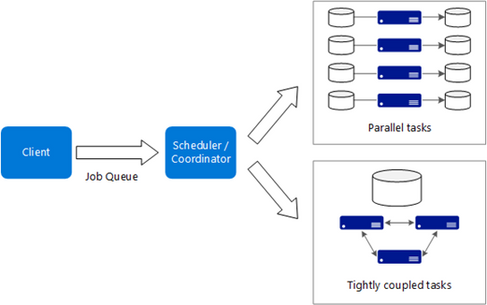

A typical HPC setup includes a front end for submitting jobs, a job scheduler or orchestrator, a compute cluster, and shared storage. Below we outline, for each of these key components, the typical infrastructure options used in the Oil & Gas workload deployments shown above.

A few important things to note:

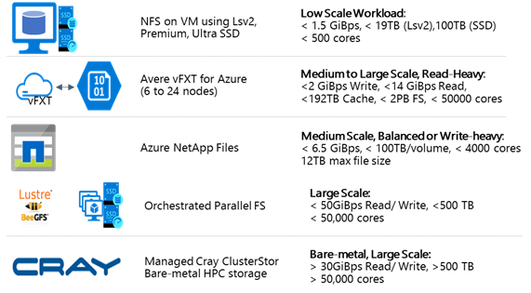

- Lustre/BeeGFS is typically used to handle the large throughput requirements of primarily seismic processing (but also reservoir simulation).

- Azure NetApp Files and local disks are typically used to handle the more latency/IOPS sensitive workloads, like seismic interpretation, model preparation, and visualization.

- Avere vFXT is also very useful in the hybrid scenario, when there is a requirement to sync data between on premises and the cloud, with a high-read I/O pattern (for example, serving up a software stack).
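The notes above can be captured as a small lookup table, for example in a deployment script that picks a storage tier per workload. This is only a sketch: the workload labels and the `suggest_storage` helper are hypothetical names introduced here, and the mapping simply transcribes the guidance in the notes rather than any official selection rule.

```python
# Guidance transcribed from the notes above; the keys are illustrative labels.
STORAGE_GUIDANCE = {
    "seismic processing": "Lustre/BeeGFS",
    "reservoir simulation": "Lustre/BeeGFS",
    "seismic interpretation": "Azure NetApp Files or local disks",
    "model preparation": "Azure NetApp Files or local disks",
    "visualization": "Azure NetApp Files or local disks",
    "hybrid read-heavy data sync": "Avere vFXT",
}


def suggest_storage(workload: str) -> str:
    """Return the storage option the notes associate with a workload label."""
    key = workload.strip().lower()
    if key not in STORAGE_GUIDANCE:
        known = ", ".join(sorted(STORAGE_GUIDANCE))
        raise ValueError(f"unknown workload {workload!r}; expected one of: {known}")
    return STORAGE_GUIDANCE[key]


print(suggest_storage("Seismic Processing"))
print(suggest_storage("visualization"))
```

Treat the result as a starting point; the right choice still depends on your own I/O pattern and capacity needs.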

Compute and Visualization Hardware

Azure offers a range of VM instances optimized for both CPU- and GPU-intensive workloads (compute and visualization) that are well suited to running Oil and Gas workloads.

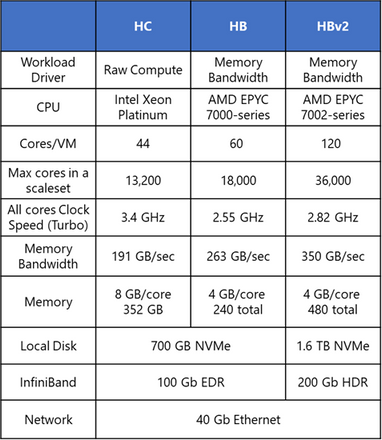

Azure is the only cloud platform that offers VM instances with InfiniBand-enabled hardware. This provides a significant performance advantage for running some reservoir simulation and seismic workloads. It narrows the gap with current on-premises infrastructure, delivering performance that is close to it or better.

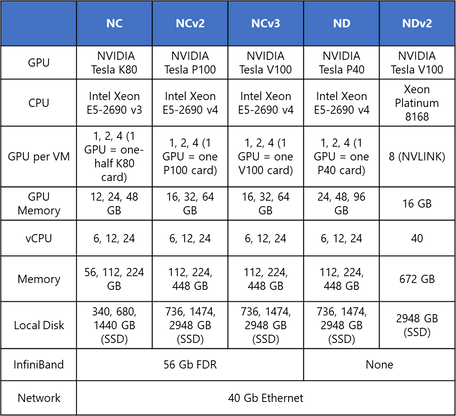

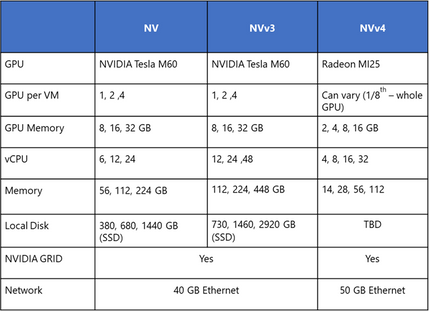

Below are some details of the HPC SKUs, available at the time of writing this blog, that we have commonly seen used in the workloads stated above. You can find the complete and most up-to-date list of the available HPC SKUs here.

CPU-enabled virtual machines

GPU-enabled virtual machines: Compute

GPU-enabled virtual machines: Visualization

HPC SKUs have been built specifically for high-performance scenarios. However, some workloads on your HPC infrastructure can run effectively on less expensive hardware, and Azure offers other SKUs that may suit them. Some commonly used compute SKUs are the E and F series. Details for the available Azure VM SKUs can be found here.

Job Scheduler

Azure offers specialized services that you can use to schedule compute-intensive work on a managed pool of virtual machines and to automatically scale compute resources to meet the needs of your jobs.

Azure Batch

Azure Batch is a managed service for running large-scale HPC applications. With Azure Batch, you configure a VM pool and upload the applications and data files. The Batch service then provisions the VMs, assigns tasks to them, runs the tasks, and monitors their progress. Batch can automatically scale the number of VMs up or down in response to the changing workload. Batch also provides job-scheduling functionality.
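The pool, task, and autoscale flow described above can be pictured with a toy model. The sketch below is deliberately not the Azure Batch API: `ToyPool` and `run_job` are hypothetical names, and the scaling rule (just enough nodes for the pending tasks, capped at a maximum) is an assumption made for illustration rather than the formula the Batch service uses.

```python
import math
from collections import deque
from dataclasses import dataclass


@dataclass
class ToyPool:
    """Stand-in for a managed VM pool; not the Azure Batch API."""
    max_nodes: int
    tasks_per_node: int = 1
    nodes: int = 0

    def autoscale(self, pending_tasks: int) -> None:
        """Resize to just enough nodes for the pending work, capped at max_nodes."""
        wanted = math.ceil(pending_tasks / self.tasks_per_node)
        self.nodes = min(self.max_nodes, wanted)


def run_job(pool: ToyPool, tasks: list[str]) -> list[int]:
    """Drain the task queue in rounds and return the pool size used in each round."""
    if pool.max_nodes < 1 or pool.tasks_per_node < 1:
        raise ValueError("pool needs at least one node and one task slot per node")
    queue = deque(tasks)
    sizes = []
    while queue:
        pool.autoscale(len(queue))  # scale to the current backlog
        sizes.append(pool.nodes)
        capacity = pool.nodes * pool.tasks_per_node
        for _ in range(min(capacity, len(queue))):
            queue.popleft()  # a real service would run and monitor the task here
    pool.autoscale(0)  # scale down once the queue is empty
    return sizes


pool = ToyPool(max_nodes=4)
print(run_job(pool, [f"task-{i}" for i in range(10)]))  # prints [4, 4, 2]
print(pool.nodes)  # prints 0
```

With ten tasks and a cap of four nodes, the toy pool runs two full rounds at four nodes, shrinks to two nodes for the remaining work, and then scales to zero.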

Azure CycleCloud

Azure CycleCloud is a tool for creating, managing, operating, and optimizing HPC and Big Compute clusters in Azure. With Azure CycleCloud, users can dynamically provision HPC clusters in Azure and orchestrate data and jobs for hybrid and cloud workflows. Azure CycleCloud provides the simplest way to manage HPC workloads on Azure using various workload managers (such as Grid Engine, HPC Pack, HTCondor, LSF, PBS Pro, Slurm, or Symphony).
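Once a cluster is running one of these workload managers, jobs are submitted the same way as on premises. As a rough illustration for Slurm, the sketch below generates a batch script that could be submitted with `sbatch`. The `slurm_script` helper, the `./reservoir_sim` executable, and the `CASE.DATA` input are hypothetical placeholders, and the node counts are arbitrary; only the `#SBATCH` directives and `srun` are standard Slurm.

```python
def slurm_script(job_name: str, nodes: int, tasks_per_node: int,
                 command: str, walltime: str = "02:00:00") -> str:
    """Build the text of a Slurm batch script for an MPI-style job."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        f"#SBATCH --time={walltime}",
        "#SBATCH --output=%x-%j.out",  # %x = job name, %j = job ID
        "",
        f"srun {command}",  # srun launches one process per allocated task
    ]
    return "\n".join(lines) + "\n"


# Hypothetical application and input file, for illustration only.
script = slurm_script("reservoir-case", nodes=4, tasks_per_node=44,
                      command="./reservoir_sim CASE.DATA")
print(script)
```

Writing the result to a file and running `sbatch` on it would queue the job; on an autoscaling cluster, the scheduler's backlog is what triggers new nodes to be provisioned.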

Storage

Below are some of the important storage options, available at the time of writing this blog, that we commonly see used in Oil and Gas workloads. Select an option based on your unique I/O and capacity requirements. You can find the complete and most up-to-date list of available HPC storage options here.

Note: While a parallel file system (PFS) can scale well beyond the sizes mentioned here, at larger scales you may want to consider the management and cost implications of maintaining a static IaaS-based cluster; in that case ClusterStor may be a good choice. You can still build larger elastic clusters on the fly fairly easily by using some of the scripts shared here.

Some Key Oil and Gas Applications that Our Customers and Partners Have Run on Azure

There is a long list of commercial and proprietary applications used in the Oil and Gas workflows mentioned above. Many of them run well on Azure today, and our customers have chosen to migrate them. We list a handful of these applications below to provide some perspective.

| Application | Workflow |
|---|---|
| Mechdyne TGX | Technical Desktop |
| Citrix Xenapp | Technical Desktop |
| HP RGS | Technical Desktop |
| VMware Horizon View | Technical Desktop |
| CGG GeoSoftware | Seismic, Reservoir, Velocity, etc. |
| Halliburton SeisSpace ProMAX | Seismic |
| Halliburton DecisionSpace Geosciences | Interpretation |
| Halliburton Nexus | Reservoir |
| Rock Flow Dynamics tNavigator | Reservoir |
| Schlumberger ECLIPSE | Reservoir |
| Schlumberger INTERSECT | Reservoir |
| Emerson Paradigm Suite | Seismic, Reservoir, etc. |
| CMG STARS | Reservoir |
| OPM | Reservoir |
| ParaView | Interpretation |
| Petrel | Interpretation |
| ResInsight | Interpretation |
| Abaqus | Finite Element Analysis |
| Gromacs | Computational Chemistry |
| LAMMPS | Computational Chemistry |
| VASP | Computational Chemistry |
| Quantum ESPRESSO | Computational Chemistry |

Test Drive

The Azure CAT HPC team has put together a framework for deploying an end-to-end (e2e) Azure environment and running your workload in it. If you would like to try running your O&G scenarios, you can use the examples here to build an environment of your choice, or just try some of the provided tutorials, which cover applications such as OPM (open source) and INTERSECT (requires a license).

Additional References

- ISV Partnership Announcements:

- Customer Partnership Announcements:

- Technical References: