This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

The Azure Data Factory team has added JSON and hierarchical data transformations to Mapping Data Flows. With this new feature, you can ingest, transform, and sink complex data types, generate schemas, and build hierarchies using JSON in data flows.

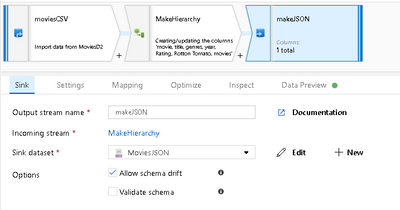

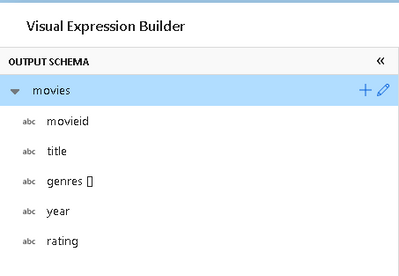

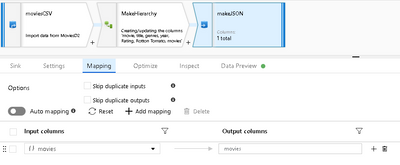

In the sample data flow above, I take the Movies text file in CSV format and generate a new complex type called "Movies" that contains each of the attributes of the incoming CSV file.

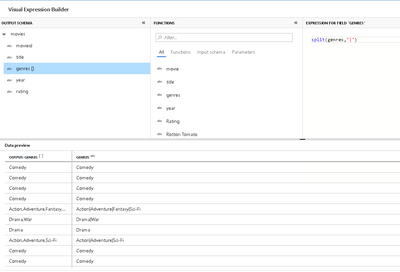

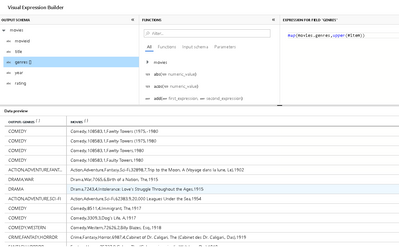

By using the Expression Builder (see above) inside a Derived Column transformation, I can define a new hierarchical structure that includes arrays. Genres is a multi-value field in the source CSV: each movie has one or more genre categories, stored as a single pipe-delimited (|) string. I can use the ADF data flow expression language to split that string on the pipe character and store each value as an array element.
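To make the shape of that transformation concrete, here is a minimal Python sketch of the same idea. It is an analogue for illustration only, not ADF expression syntax, and the sample rows and column names (`movie`, `title`, `genres`, `year`) are assumptions rather than values taken from the actual Movies file.

```python
import csv
import io

# Hypothetical sample rows; the column names are assumptions for illustration.
SAMPLE_CSV = """movie,title,genres,year
1,Toy Story,Adventure|Animation|Children,1995
2,Heat,Action|Crime|Thriller,1995
"""


def to_hierarchy(row):
    """Wrap a flat CSV row in a top-level "Movies" structure.

    The pipe-delimited genres string becomes a list, which plays the
    role of the array element in the hierarchical output.
    """
    return {
        "Movies": {
            "movie": int(row["movie"]),
            "title": row["title"],
            "year": int(row["year"]),
            "genres": row["genres"].split("|"),
        }
    }


# Read the flat rows and build one nested record per movie.
records = [to_hierarchy(r) for r in csv.DictReader(io.StringIO(SAMPLE_CSV))]
```

Each flat row becomes one nested record, with the multi-value field expanded into an array instead of a delimited string.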

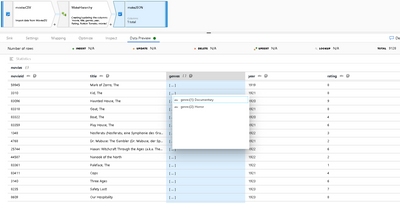

Mapping Data Flows can now show metadata for complex types, including hierarchies and arrays, in both the Inspect metadata view and Data Preview.

Now that I've transformed my flat file into a hierarchical JSON format, I can write it out as a new JSON file to my Blob storage or data lake using a JSON Sink. To create this new file, I'll map just the new complex type by selecting the top-level "Movies" hierarchy.
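As a rough picture of what mapping only the top-level hierarchy means, the Python sketch below keeps just the `Movies` structure from each record and serializes it as one JSON document per line. The record contents, the leftover flat `movie` column, and the one-document-per-line layout are all assumptions made for the example, not a description of how the JSON Sink writes files.

```python
import json

# Hypothetical record: the nested "Movies" structure plus a leftover
# flat column that should not be written to the output.
records = [
    {
        "movie": 1,
        "Movies": {
            "title": "Toy Story",
            "year": 1995,
            "genres": ["Adventure", "Animation", "Children"],
        },
    },
]


def select_top_level(record, key="Movies"):
    """Keep only the chosen top-level hierarchy, dropping other columns."""
    return {key: record[key]}


def to_json_lines(rows):
    """Serialize each selected record as one JSON document per line."""
    return "\n".join(json.dumps(select_top_level(r)) for r in rows)


output = to_json_lines(records)
```

Selecting the hierarchy by its top-level name carries every nested attribute and array along with it, so the individual fields do not need to be mapped one by one.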

JSON datasets can now also be used as sources in Data Flows. In this case, I'll read in the new JSON dataset from the example above and perform some transformations. I want to uppercase each of the genres. To do this, I can apply a single, simple expression in a Derived Column that uppercases each element in the Genres array I created previously.
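The per-element transformation can be sketched in Python as follows. Again, this is an illustrative analogue rather than the ADF expression itself, and the sample JSON document and field names are assumptions.

```python
import json

# Hypothetical JSON document shaped like the output of the earlier step.
SOURCE_JSON = (
    '{"Movies": {"title": "Toy Story", '
    '"genres": ["Adventure", "Animation", "Children"]}}'
)


def uppercase_genres(record):
    """Return a copy of the record with every genre uppercased.

    The input record is left unmodified; only the genres array inside
    the "Movies" structure is rebuilt element by element.
    """
    movies = dict(record["Movies"])
    movies["genres"] = [genre.upper() for genre in movies["genres"]]
    return {**record, "Movies": movies}


record = json.loads(SOURCE_JSON)
result = uppercase_genres(record)
```

The point is that one expression is applied to every element of the array, so there is no need to flatten the hierarchy first and rebuild it afterwards.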

We've posted a helper document on our ADF documentation site with more examples of working with hierarchies, arrays, and JSON datasets in ADF Mapping Data Flows.