This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Part 1: The Project

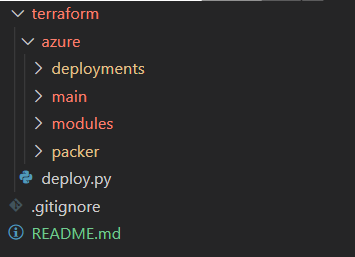

- Devops repo

- Overview of the Terraform Project

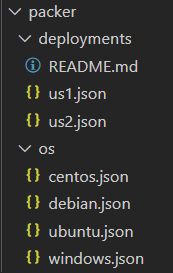

- Packer project structure

- Naming conventions

- Configuration Options

Part 2: Deploy Wrapper Overview

Part 3: Deploying the first environment

- Deploying Core Resources

- Building first Packer Image

- Deploy Squid Proxy VMs

- Build second Packer image (proxy aware)

- Deploy SQL Server / Database

- Deploy NodeJS VM's

- Deploy Nginx VM's

- Deploy Core Resources for Secondary Region (optional)

- Enabling Log Analytics (optional)

- Enable Remote State (optional)

This is an example of how you could begin to structure your terraform project to deploy the same terraform code to multiple customers, environments or regions. The project also gives you consistent naming across all the resources in a given deployment. We will not be able to cover every option in this project, but we will cover the basics to get you started. By no means should this project be deployed without modification to include security, monitoring and other key components to harden your environment.

*** Project is written in Terraform v0.12

*** Users should have a general understanding of Terraform HCL

*** This is an example / guide not a production ready template.

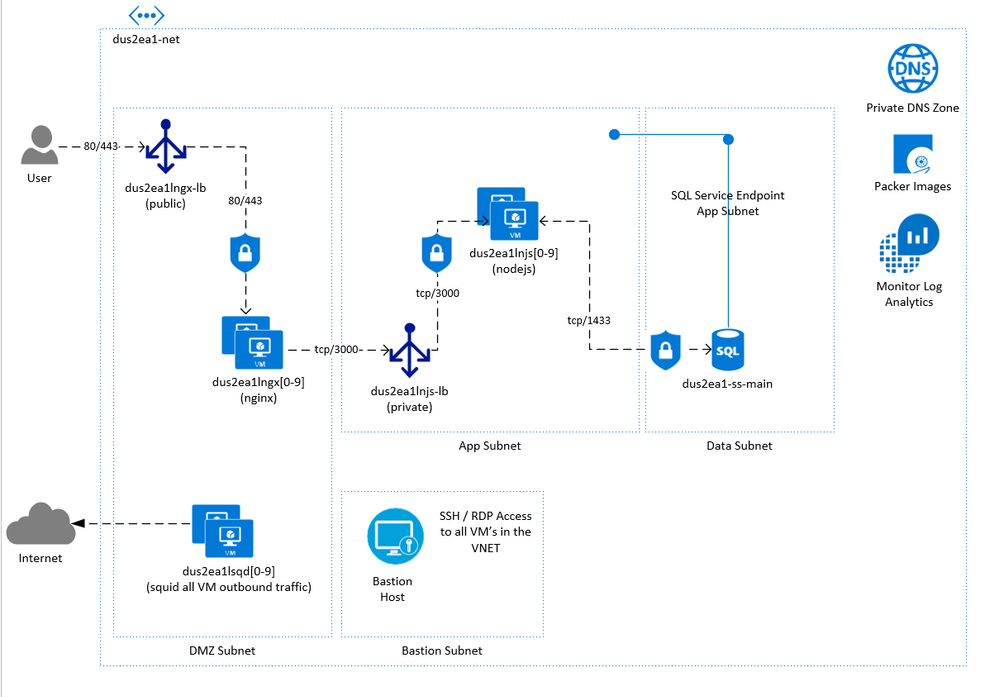

What is being built:

In this example we will deploy 2 squid proxy servers for outbound traffic, 2 nginx servers behind a public load balancer, 2 nodejs web servers behind a private load balancer and a single Azure SQL Database with service tunneling enabled. All DNS resolution goes through Azure Private DNS zones, which are configured when building the base image using Packer. The nginx servers act as the frontend and route traffic to a private LB in front of the nodejs app, which connects to the Azure SQL Server. All of this is configured on deploy through cloud-init. Remote SSH access is disabled and is only allowed via Azure Bastion.

The Project

The repo (clone)

git clone https://github.com/rohrerb/terraform-eiac.git

View the project online > https://github.com/rohrerb/terraform-eiac/

*Feel free to contribute or give feedback directly on the repo.

Overview of the Project

High Level Project:

| Level | Additional Information |
|---|---|
| terraform/azure | This level is referred to as the cloud level, which defaults to azure in deploy.py. |
| terraform/azure/deployments | Contains terraform variable files for different isolated deployments. These variable files are fed into the section (main) and drive naming, configuration… |
| terraform/azure/main | "Main" is also called a section in deploy.py. Each section gets its own terraform state file (local or remote). You can have as many sections as you need and use terraform data sources to relate resources between them. Deployment variable files are shared across all sections. For example, you could have a "main" section for everything and an "NSG" section for only NSG rules (subnet, NIC, etc). |
| terraform/azure/modules | Terraform modules which can be reused and referenced across any section. There is a marketplace with modules, or you can write your own like I did. |
| terraform/azure/packer | Contains packer deployment and os files used to build images. Deployment files are auto generated on the first terraform run of "main". |
| terraform/deploy.py | Python3 wrapper script which is used to control terraform / packer executions. |
| | In depth instructions with common deploy.py terraform / packer commands. |

Deployment:

| Level | Additional Information |
|---|---|
| azure/deployments | Contains terraform variable files for different isolated deployments. These variable files are fed into the section (main) and drive naming, configuration… |
| azure/deployments/<folder> | Each <folder> under deployments acts as a deployment or separate environment. In my example US1 is configured for USGov and US2 is configured for a US Commercial deployment. |
| azure/deployments/<folder>/deployment.tfvars | This is the variable file which is passed into the "main" section and drives the configuration for the environment. |
| azure/deployments/<folder>/secrets.tfvars | This variable file is also passed into the "main" section and drives the configuration for the environment along with deployment.tfvars, except that it is excluded from commits using .gitignore. |
| azure/deployments/<folder>/state/main | If using local state, the state file is stored in this folder. Deploy.py also keeps a 30 day rolling backup of state in this folder. If you're using remote state this folder will not exist. |
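The 30 day rolling backup mentioned in the last row can be pictured with a small sketch. This is not the code from deploy.py; the function name, the backup file naming and the pruning-by-modification-time approach are all assumptions made for illustration.

```python
import shutil
import time
from pathlib import Path
from typing import Optional

RETENTION_DAYS = 30  # matches the 30 day rolling backup described in the table


def snapshot_state(state_dir: Path) -> Optional[Path]:
    """Copy terraform.tfstate to a timestamped backup and prune old backups."""
    state_file = state_dir / "terraform.tfstate"
    if not state_file.exists():
        return None  # nothing to back up yet (first run)

    stamp = time.strftime("%Y%m%d-%H%M%S")
    # Hypothetical naming scheme; the real wrapper may name backups differently.
    backup = state_dir / f"terraform.tfstate.{stamp}.bak"
    shutil.copy(state_file, backup)  # copy() gives the backup a fresh mtime

    cutoff = time.time() - RETENTION_DAYS * 24 * 60 * 60
    for old in state_dir.glob("terraform.tfstate.*.bak"):
        if old != backup and old.stat().st_mtime < cutoff:
            old.unlink()  # drop backups older than the retention window
    return backup
```

Calling `snapshot_state(Path("azure/deployments/us2/state/main"))` before every state-changing action would keep roughly a month of restore points next to the live state file.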

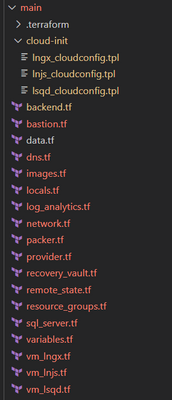

Main:

| Level | Additional Information |
|---|---|
| azure/main | "Main" is also called a section in deploy.py. Each section gets its own terraform state file (local or remote). You can have as many sections as you need and use terraform data sources to relate resources between them. Deployment variable files are shared across all sections. For example, you could have a "main" section for everything and an "NSG" section for only NSG rules (subnet, NIC, etc). |
| azure/main/.terraform | Folder which is created by terraform. Contains providers, module information as well as backend state metadata. |
| azure/main/cloud-init/<vm_type>_cloudconfig.tpl | The cloud-init folder contains vm type specific cloud-init tpl files. If no file exists for a vm type, the VM will not have a value in "custom_data". |
| azure/main/backend.tf | Target backend, which is ultimately controlled by `deploy.py`. Backend config values are added on the command line. |
| azure/main/data.tf | Contains any high level `data` terraform resources. |
| azure/main/images.tf | Holds multiple azurerm_image resources which pull packer images from a resource group. |
| azure/main/locals.tf | Local values which are used in the root module (main). |
| azure/main/network.tf | Contains network, subnet, watcher and a few other network related resources. |
| azure/main/packer.tf | Local file resource which is used to write the packer configuration files. |
| azure/main/provider.tf | Target provider resource. |
| azure/main/recovery_vault.tf | Azure Site Recovery terraform resources which are used to protect VMs in a secondary region. |
| azure/main/remote_state.tf | The project allows you to have a local state or a remote state. This file contains the resources required to host state in a blob storage account. 100% driven by a variable. |
| azure/main/sql_server.tf | Contains the resources to deploy Azure SQL Databases and related resources. |
| azure/main/resource_groups.tf | Contains multiple resource group terraform resources to hold the many resources created by this project. |
| azure/main/variables.tf | Variables used in the deployment in the root module. |
| azure/main/vm_lngx.tf | Example of a VM type instance. `vm_` is just a prefix to keep them together. In `lngx` the first character `l` stands for linux and `ngx` describes the workload the vm is running. |
| azure/main/vm_lsqd.tf | Example of a VM type instance. `vm_` is just a prefix to keep them together. In `lsqd` the first character `l` stands for linux and `sqd` describes the workload the vm is running. |

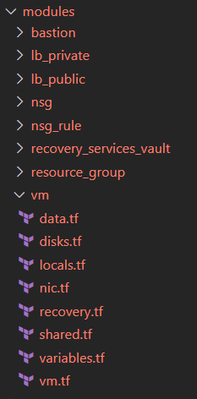

Modules:

| Level | Additional Information |
|---|---|
| azure/main/modules | This folder contains custom modules which are leveraged within the root module (main). There is a large registry of modules already built which can be pulled in. https://registry.terraform.io/browse/modules?provider=azurerm |

Packer:

| Level | Additional Information |
|---|---|
| azure/packer/deployments | This folder contains deployment.json files for each terraform deployment. These files are 100% dynamically created by azure/main/packer.tf |
| azure/packer/os | This folder contains the different os specific packer files which are used in the deploy.py script. Any changes to the provisioners will affect the base images which all VM's are created from. Specific VM type modifications should be made using cloud-init. |

Naming Conventions:

Deploying using the template will provide a consistent naming convention across your environments. The deployment.tfvars file contains several variables which will drive your resource naming.

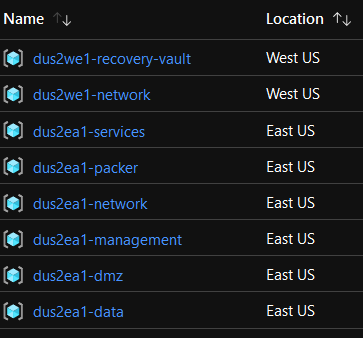

Most resources are prefixed using full environment code (above) which is made up of

environment_code + deployment_code + location_code

Example of resource groups names using the above values.
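As a rough illustration of that composition, here is a minimal Python sketch. The real naming happens in the Terraform code; the function names are hypothetical, and the split of `dus2ea1` into `d` + `us2` + `ea1` is an assumption based on the resource group names (for example `dus2ea1-dmz`) that appear in the apply output later in this post.

```python
def full_environment_code(environment_code: str, deployment_code: str, location_code: str) -> str:
    """Concatenate the three codes into the prefix used for most resources."""
    return f"{environment_code}{deployment_code}{location_code}"


def resource_group_name(prefix: str, purpose: str) -> str:
    """Append a purpose suffix (dmz, data, ...) to the full environment code."""
    return f"{prefix}-{purpose}"


# Assumed example values; the real ones live in deployment.tfvars.
prefix = full_environment_code("d", "us2", "ea1")
for purpose in ("dmz", "services", "management", "data", "network", "packer"):
    print(resource_group_name(prefix, purpose))
```

Because every name starts with the same prefix, resources from different deployments sort and filter cleanly in the portal.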

Configuration Options:

Below are some of the variable options available per deployment.tfvars

| Variable | Example Value | Required | Description |
|---|---|---|---|
| subscription_id | "7d559a72-c8b6-4d07-9ec0-5ca5b14a25e7" | Yes | Subscription which all resources will be deployed into. The user deploying must have access to this subscription. |
| enable_remote_state | false | Yes | If enabled, a new Resource Group, Storage account and Container will be created to hold the terraform state remotely. Additional steps will be required and are listed in a later blog post. |
| enable_secondary | true | No | If enabled, a few other variables are required, such as location_secondary and location_code_secondary. It will build out this skeleton deployment in a secondary region for DR purposes, which includes additional resource groups, vnet, etc. |
| location | "East US" | Yes | Region where all resources are deployed. |
| location_secondary | "West US" | No | Required if enable_secondary is enabled. This drives the region where the secondary resources are placed. |
| network_octets | "10.3" | Yes | First two network octets for the VNET. By default the VNET is configured with a /16 space. By default you get DMZ, Services, Data, Management and AzureBastion subnets. These can be changed under the subnet variable in the variables.tf file. |
| network_octets_secondary | "10.4" | No | Required if enable_secondary is enabled. First two network octets for the secondary VNET. By default the VNET is configured with a /16 space. |
| enable_recovery_services | true | No | If enabled, the resources are deployed to enable Azure Site Recovery to the secondary region. The secondary region needs to be configured to enable this. |
| enable_bastion | true | No | If enabled, the key components for Bastion Host will be added. |
| deploy_using_zones | true | No | If enabled and the region supports Zones, the VM, LB and Public IP's will be deployed across 3 zones. If disabled, the VM's will be deployed within an availability set. |
| dns_servers | ["10.3.1.6", "10.3.1.7"] | No | Private Zones are created by default but you can override by specifying your own DNS servers. |
| vm_instance_map | {} #See below | Yes | A map which is used to deploy certain VM types in this deployment. See VM Configuration Options below. |
| sql_config_map | {} | No | A map which is used to deploy 1:N Azure SQL Servers. See SQL Server Configuration Options below. |

VM Configuration Options:

Of course this project can be expanded to include Azure resources such as Web Apps, Functions, Logic Apps, etc, but out of the box it includes VM's. VM's are controlled by a single map in the deployment.tfvars.

All VM's are by default configured to use bastion host to ssh or RDP. If you wish to override you will need to add a public ip to the VM's and open the NSG.

The above vm instance map would deploy these resources in the dmz subnet and rg with the following naming convention which are derived from

`environment_code` + `deployment_code` + `location_code` + `vm_code` + `iterator`

Breakdown of the `vm_instance_map` options for each entry (vm type):

lngx = { count = 2, size = "Standard_D2s_v3", os_disk_size = 30, data_disk_count = 1, data_disk_size = 5, enable_recovery = false, enable_public_ip = false, enable_vm_diagnostics = false }

| Attribute | Example Value | Required | Description |
|---|---|---|---|
| Map Key | lngx | Yes | Map Key which maps to the `location_code` + `instance_type` of the VM |
| count | 2 | Yes | Number of VM's you want to create of this type. |
| size | Standard_D2s_v3 | Yes | Size that you want each of the VMs of this type to have. You can look up available sizes at http://www.azureinstances.info/ as well as with Azure CLI, etc. |
| os_disk_size | 30 | Yes | Size in GB of the OS Disk of this VM type. |
| data_disk_count | 1 | No | Number of Data Disks to create and attach to the VM |
| data_disk_size | 50 | No | Size in GB of the Data Disks |
| enable_public_ip | true | No | If enabled, a public ip will be created and attached to each of the VM's nic's |
| enable_vm_diagnostics | false | No | If enabled, a storage account will be created and configured for this VM type as well as enabling diagnostics for the VM. |
| enable_recovery | false | No | If enabled, the VM and all disks will be protected by Recovery Services. `enable_secondary` and `enable_recovery_services` must be enabled in the deployment as well. |
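Several of these options depend on each other, which is easy to get wrong when editing a tfvars file by hand. The sketch below is a hypothetical pre-flight check, not something the project ships; it assumes the deployment variables have already been loaded into a plain Python dict with the same keys as the tables above.

```python
def check_deployment(config: dict) -> list:
    """Return a list of human-readable problems found in a deployment config."""
    problems = []
    secondary = config.get("enable_secondary", False)
    recovery_services = config.get("enable_recovery_services", False)

    # Variables the tables mark as required once enable_secondary is on.
    if secondary:
        for key in ("location_secondary", "network_octets_secondary"):
            if not config.get(key):
                problems.append(f"{key} is required when enable_secondary is true")

    # Site Recovery replicates into the secondary region.
    if recovery_services and not secondary:
        problems.append("enable_recovery_services needs enable_secondary")

    for vm_type, vm in config.get("vm_instance_map", {}).items():
        for key in ("count", "size", "os_disk_size"):
            if key not in vm:
                problems.append(f"{vm_type}: {key} is required")
        if vm.get("enable_recovery", False) and not (secondary and recovery_services):
            problems.append(
                f"{vm_type}: enable_recovery needs enable_secondary and enable_recovery_services"
            )
    return problems
```

An empty list means the combination of switches is consistent with the rules described in the tables; anything else can be printed before running a plan.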

SQL Server Configuration Options:

Each high level map will get you a single SQL Server instance with 1:N SQL Databases.

| Attribute | Example Value | Required | Description |
|---|---|---|---|
| Map Key | myapp | Yes | Map Key which is used to derive the name of the sql resources |
| deploy | true | Yes | Switch used to control the creation of the resources. |
| admin_user | sadmin | Yes | Username for the SQL Admin user. |
| subnet_vnet_access | app = { } | No | Map list which will allow traffic from a subnet to flow to the SQL server privately. |
| firewall_rules | all = { start_ip_address = "0.0.0.0", end_ip_address = "255.255.255.255" } | No | Map list of firewall rules to add to the sql server. |
| databases | main = { edition = "Standard", size = "S0" } qa = { edition = "Standard", size = "S2" } | Yes | Map list of the databases to create under this sql server resource. The map key is the name of the database; edition and size are required. |

Deploy Wrapper Overview

If using this project you should always use the deploy.py to manipulate the terraform environment. It handles wiring up all paths to variables, state as well as the backend configurations for local and remote state. In addition the wrapper handles switching cloud and changing accounts automatically based on terraform variables in the deployment.tfvars.

If you are using Windows, please go through these steps to install Terraform on the Windows 10 Subsystem for Linux (WSL). You also need to install Packer; install it the same way you installed Terraform.

Next we need to install python3 and pip3

The wrapper has a detailed README.md that can help you with command help as well as example commands for terraform and packer.

Reviewing the Commands:

| Argument | Example Value | Required | Description |
|---|---|---|---|
| -d | us1 | Yes | Matches the deployment folders in the terraform project structure. `azure/deployments/<deployment_code>` |
| -s | main | No | "main" (default) is also called a section in the terraform project structure. `azure/main` Each section gets its own terraform state file (local or remote). You can have as many sections as you need and use terraform data sources to relate resources between them. Deployment variable files are shared across all sections. For example, you could have a "main" section for everything and an "NSG" section for only NSG rules (subnet, NIC, etc). |
| -a | plan | No | Maps to terraform commands (plan, apply, state, etc) > https://www.terraform.io/docs/commands/index.html. Plan is the default. |
| -t | module.rg-test.azurerm_resource_group.rg[0] | No | Required when certain actions are used. Use target when you want to perform an action on only a few isolated resources. https://www.terraform.io/docs/commands/plan.html#resource-targeting |
| -r | /subscriptions/7d559a72-c8b6-4d07-9ec0-5ca5b14a25e7/resourceGroups/dus2ea1-dmz | No | Required when importing resources into state. https://www.terraform.io/docs/import/index.html |
| -pu | | No | When used, the following is added to `terraform init`: `-get=true -get-plugins=true -upgrade=true`. Used when you want to upgrade the providers; however, it's best practice to pin providers in the provider.tf. |
| -us | --unlock_state 047c2e42-69b7-4006-68a5-573ad93a769a | No | If you are using remote state you may occasionally end up with a lock on the state blob. To release the lock you can use this parameter or you can release it from the azure portal. |
| --ss | | No | Default is True. Set to False if you wish to NOT take a snapshot. I do not recommend that you disable it. |
| -p | | No | Use -p to enable a Packer run |
| -po | ubuntu | Yes | Required when -p is added. Maps to an os file under `azure/packer/os/<filename>` |
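To make the argument table more concrete, here is a minimal `argparse` sketch that accepts the same short flags. It is not the parser from deploy.py: apart from `--unlock_state`, the long option names are assumptions, and the `--ss` snapshot switch is left out.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser that mirrors the short flags in the table above."""
    parser = argparse.ArgumentParser(prog="deploy.py")
    parser.add_argument("-d", "--deployment", required=True)
    parser.add_argument("-s", "--section", default="main")
    # nargs="+" lets two-word actions such as "state rm" be passed to -a.
    parser.add_argument("-a", "--action", nargs="+", default=["plan"])
    # action="append" lets -t be repeated for multiple targets.
    parser.add_argument("-t", "--target", action="append", default=[])
    parser.add_argument("-r", "--resource")
    parser.add_argument("-pu", "--plugin_update", action="store_true")
    parser.add_argument("-us", "--unlock_state")
    parser.add_argument("-p", "--packer", action="store_true")
    parser.add_argument("-po", "--packer_os")
    return parser


def parse_args(argv: list) -> argparse.Namespace:
    parser = build_parser()
    args = parser.parse_args(argv)
    if args.packer and not args.packer_os:
        parser.error("-po is required when -p is used")
    return args
```

With this shape, `parse_args(["-d", "us1"])` falls back to the `plan` action on the `main` section, which matches the defaults described in the table.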

Example Commands:

plan

deploy.py -d us1

apply

deploy.py -d us1 -a apply

apply w/target

deploy.py -d us1 -a apply -t module.rg-test.azurerm_resource_group.rg[0]

apply w/target(s)

deploy.py -d us1 -a apply -t module.rg-test.azurerm_resource_group.rg[0] -t module.rg-test.azurerm_resource_group.rg["test"]

apply w/target against a tf file.

deploy.py -d us1 -t resource_groups.tf
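When a `.tf` file name is passed as the target, the wrapper turns it into individual resource addresses (the deployment walkthrough later shows `resource_groups.tf` becoming a list of `module.rg-*` targets). How deploy.py does this is not shown in this post; the sketch below is one plausible approach using a regular expression, with a hypothetical function name.

```python
import re
from pathlib import Path

# Matches the opening line of `module "name" {` and `resource "type" "name" {` blocks.
BLOCK_RE = re.compile(
    r'^\s*(module|resource)\s+"([^"]+)"(?:\s+"([^"]+)")?\s*\{', re.MULTILINE
)


def expand_target(target: str, section_dir: Path) -> list:
    """Expand a .tf file name into the addresses declared in it."""
    if not target.endswith(".tf"):
        return [target]  # already a resource address
    text = (section_dir / target).read_text()
    addresses = []
    for kind, first, second in BLOCK_RE.findall(text):
        if kind == "module":
            addresses.append(f"module.{first}")
        elif second:
            addresses.append(f"{first}.{second}")
    return addresses
```

Each returned address can then be passed to terraform as its own `-target=` argument.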

state remove

deploy.py -d us1 -a state rm -t module.rg-test.azurerm_resource_group.rg[0]

state mv

deploy.py -d us1 -a state mv -t module.rg-test.azurerm_resource_group.rg[0] -t module.rg-test2.azurerm_resource_group.rg[0]

import

deploy.py -d us1 -a import -t module.rg-test.azurerm_resource_group.rg[0] -r /subscriptions/75406810-f3e6-42fa-97c6-e9027e0a0a45/resourceGroups/DUS1VA1-test

taint

deploy.py -d us1 -a taint -t null_resource.image

untaint

deploy.py -d us1 -a untaint -t null_resource.image

unlock a remote state file

If you receive this message you can unlock using the below command. Grab the *ID* under *Lock Info:*

deploy.py -d us1 --unlock_state 047c2e42-69b7-4006-68a5-573ad93a769a

Common Packer Commands:

build ubuntu

deploy.py -p -po ubuntu -d us1

build centos

deploy.py -p -po centos -d us1

build windows

deploy.py -p -po windows -d us1

Deploying the first environment

Deploying Core Resources:

- Open VS Code, then File > Open Folder > choose the root folder which was cloned.

- The project is set up by default with two deployments:

  - `us1` which is set up for US Government

  - `us2` which is set up for US Commercial

- We will continue with us2 as our deployment. Open the `deployment.tfvars` under the `us2` folder and plug your subscription guid into the empty quotes. (Save)

- Click Terminal > New Terminal. (If on Windows, type bash and hit enter in the terminal to enter WSL).

- Type `cd terraform` so that deploy.py is in your current directory.

- Type `./deploy.py -d us2 -pu -t resource_groups.tf -a apply` to run an apply targeting all of the resource groups. When prompted, type `yes` and press enter. Take note of the commands shown in yellow (after Running:); they are the actual commands which are run to bootstrap the environment (az, tf init/command).

    Runtime Variables:
    Cloud: azure
    Section: main
    Deployment: us2
    Action: apply
    Variables: /mnt/c/Projects/thebarn/eiac/terraform/azure/deployments/us2/deployment.tfvars
    Secrets: /mnt/c/Projects/thebarn/eiac/terraform/azure/deployments/us2/secrets.tfvars
    Section: /mnt/c/Projects/thebarn/eiac/terraform/azure/main
    Deployment: /mnt/c/Projects/thebarn/eiac/terraform/azure/deployments/us2
    Target(s): module.rg-dmz module.rg-services module.rg-management module.rg-data module.rg-network module.rg-network-secondary module.rg-packer module.rg-recovery-vault
    Running: az cloud set --name AzureCloud
    Running: az account set -s 7d559a72-xxxx-xxxx-xxxx-5ca5b14a25e7
    Start local state engine...
    Running: terraform init -reconfigure -get=true -get-plugins=true -upgrade=true -verify-plugins=true
    ...
    Terraform has been successfully initialized!
    …
    Taking state snapshot
    Running: terraform apply -target=module.rg-dmz -target=module.rg-services -target=module.rg-management -target=module.rg-data -target=module.rg-network -target=module.rg-network-secondary -target=module.rg-packer -target=module.rg-recovery-vault -state=/mnt/c/Projects/thebarn/eiac/terraform/azure/deployments/us2/state/main/terraform.tfstate -var-file=/mnt/c/Projects/thebarn/eiac/terraform/azure/deployments/us2/deployment.tfvars -var-file=/mnt/c/Projects/thebarn/eiac/terraform/azure/deployments/us2/secrets.tfvars
    ...
    Terraform will perform the following actions:

      # module.rg-data.azurerm_resource_group.rg[0] will be created
      + resource "azurerm_resource_group" "rg" {
          + id       = (known after apply)
          + location = "eastus"
          + name     = "dus2ea1-data"
          + tags     = (known after apply)
        }

      # module.rg-dmz.azurerm_resource_group.rg[0] will be created
      + resource "azurerm_resource_group" "rg" {
          + id       = (known after apply)
          + location = "eastus"
          + name     = "dus2ea1-dmz"
          + tags     = (known after apply)
        }

      # module.rg-management.azurerm_resource_group.rg[0] will be created
      + resource "azurerm_resource_group" "rg" {
          + id       = (known after apply)
          + location = "eastus"
          + name     = "dus2ea1-management"
          + tags     = (known after apply)
        }

      # module.rg-network.azurerm_resource_group.rg[0] will be created
      + resource "azurerm_resource_group" "rg" {
          + id       = (known after apply)
          + location = "eastus"
          + name     = "dus2ea1-network"
          + tags     = (known after apply)
        }

      # module.rg-packer.azurerm_resource_group.rg[0] will be created
      + resource "azurerm_resource_group" "rg" {
          + id       = (known after apply)
          + location = "eastus"
          + name     = "dus2ea1-packer"
          + tags     = (known after apply)
        }

      # module.rg-recovery-vault.azurerm_resource_group.rg[0] will be created
      + resource "azurerm_resource_group" "rg" {
          + id       = (known after apply)
          + location = "westus"
          + name     = "dus2we1-recovery-vault"
          + tags     = (known after apply)
        }

      # module.rg-services.azurerm_resource_group.rg[0] will be created
      + resource "azurerm_resource_group" "rg" {
          + id       = (known after apply)
          + location = "eastus"
          + name     = "dus2ea1-services"
          + tags     = (known after apply)
        }

    Plan: 7 to add, 0 to change, 0 to destroy.

- Type `./deploy.py -d us2 -a apply` to apply the rest of the initial resources (network, Bastion, Watcher, etc)

... Plan: 22 to add, 0 to change, 0 to destroy.
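The `Running: terraform apply ...` line in the first apply output shows the shape of the command the wrapper assembles for local state: the action, one `-target` per resource, the state path and the two variable files. A minimal sketch of that assembly is below; the function name and signature are assumptions, and only the local state case is covered.

```python
from pathlib import PurePosixPath


def terraform_command(root: PurePosixPath, deployment: str, action: str,
                      targets: list, section: str = "main") -> list:
    """Assemble a terraform command line for a deployment using local state."""
    deploy_dir = root / "azure" / "deployments" / deployment
    state_file = deploy_dir / "state" / section / "terraform.tfstate"
    cmd = ["terraform", action]
    cmd += [f"-target={t}" for t in targets]
    cmd.append(f"-state={state_file}")
    # Both variable files are passed on every run.
    cmd.append(f"-var-file={deploy_dir / 'deployment.tfvars'}")
    cmd.append(f"-var-file={deploy_dir / 'secrets.tfvars'}")
    return cmd


print(" ".join(terraform_command(
    PurePosixPath("/mnt/c/Projects/thebarn/eiac/terraform"),
    "us2", "apply", ["module.rg-dmz", "module.rg-services"])))
```

Keeping the state and variable paths derived from the deployment code is what lets the same `main` section be applied to `us1`, `us2` or any new deployment folder without editing the Terraform code.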

Building First Packer Image:

Next we need to build our first image, we will focus on only Ubuntu. This first image will be solely used to build out the `lsqd` VM's which will be our proxy (squid) VM's. During our 2nd apply the packer configuration for us2 was written to the packer deployment folder.

*The first time you deploy packer it will require you to authenticate twice. Once the image is built it will land in the `dus2ea1-packer` resource group.

./deploy.py -p -po ubuntu -d us2

You will see the following output on the first build because squid is not running on a vm in the vnet.

Once the build is completed you will see the following output:

Building Squid Proxy VMs:

Let's build out the `lsqd` VM's using the Ubuntu image we just built.

- By default all linux boxes are set up to use an ssh key which is pulled from `~/.ssh/id_rsa.pub`. To override this path, create a `secrets.tfvars` in the deployment folder and paste `ssh_key_path = "<path to key>"`. If you need to create a new key, run `ssh-keygen`.

- Open `deployment.tfvars` and change the count for `lsqd` to `2`.

- Run `./deploy.py -d us2 -a apply`

- Type `yes` to create the resources for the squid box.

Building the Second Packer Image which is proxy aware:

Next we need to build our second image. The second image will run the `packer/scripts/set_proxy.sh` script which will check if the proxy servers are online and make the necessary proxy changes.

Run `./deploy.py -p -po ubuntu -d us2`

Take note of the output from the build which will include…

Building Azure SQL Server and DB:

- Open `deployment.tfvars` and set `sql_config_map.myapp.deploy` to `true`. The sql server will be deployed with a single database and vnet support.

- Run `./deploy.py -d us2 -a apply`

- Take note that the `password` is passed from a resource in terraform. Because we are passing it into cloud-init at another step in plain text, it's VERY important that it's changed.

Terraform will perform the following actions:

Building NodeJS VMs:

Let's build out the nodejs servers. Make sure the 2nd packer image exists in the Packer resource group.

- Open `deployment.tfvars` and change the count for `lnjs` to `2`. The nodejs servers will be deployed with only a private ip and put behind a private load balancer.

- Run `./deploy.py -d us2 -a apply`

- The `lnjs` vm will be created using the packer ubuntu image we created in a previous step. The VM itself will be bootstrapped by a cloud-init script which will install nodejs and pm2. The cloud-init scripts are under the cloud-init folder. Take note that `sql_sa_password` and `sql_dns` are passed from the sql_server module, which is why we created it first. This is done to simplify the example; please do not pass credentials as plain text through cloud-init in production.

cloud-init for lnjs vm with simple node site:

    #cloud-config
    package_upgrade: true
    packages:
    write_files:
      - owner: ${admin_username}:${admin_username}
      - path: /home/${admin_username}/myapp/index.js
        content: |
          var express = require('express')
          var app = express()
          var os = require('os');
          app.get('/', function (req, res) {
            var Connection = require('tedious').Connection;
            var config = {
              server: '${sql_dns}', //update me
              authentication: {
                type: 'default',
                options: {
                  userName: 'sa', //update me
                  password: '${sql_sa_password}' //update me
                }
              }
            };
            var connection = new Connection(config);
            connection.on('connect', function (err) {
              if (err) {
                console.log(err);
              } else {
                console.log("Connected");
                executeStatement();
              }
            });
            var Request = require('tedious').Request;
            var TYPES = require('tedious').TYPES;
            function executeStatement() {
              request = new Request("SELECT @@VERSION;", function (err) {
                if (err) {
                  console.log(err);
                }
              });
              var result = 'Hello World from host1 ' + os.hostname() + '! \n';
              request.on('row', function (columns) {
                columns.forEach(function (column) {
                  if (column.value === null) {
                    console.log('NULL');
                  } else {
                    result += column.value + " ";
                  }
                });
                res.send(result);
                result = "";
              });
              connection.execSql(request);
            }
          });
          app.listen(3000, function () {
            console.log('Hello world app listening on port 3000!')
          })
    runcmd:
      - sudo curl -sL https://deb.nodesource.com/setup_13.x | sudo bash -
      - sudo apt install nodejs -y
      - sudo npm config set proxy http://${proxy}:3128/
      - sudo npm config set https-proxy http://${proxy}:3128/
      - cd "/home/${admin_username}/myapp"
      - sudo npm install express -y
      - sudo npm install tedious -y
      - sudo npm install -g pm2 -y
      - sudo pm2 start /home/${admin_username}/myapp/index.js
      - sudo pm2 startup

Building Nginx VMs:

Let's build out the nginx servers.

- Open `deployment.tfvars` and change the count for `lngx` to `2`. The lngx servers will be deployed with only a private ip and put behind a public load balancer.

- Run `./deploy.py -d us2 -a apply`

- The `lngx` vm will be created using the packer ubuntu image we created in a previous step. The VM itself will be bootstrapped by a cloud-init script which will install nginx. The cloud-init scripts are under the cloud-init folder. Take note that the `njs_lb_dns` is passed from the `lnjs` module which we created in the previous step.

cloud-init example:

    #cloud-config
    package_upgrade: true
    packages:
      - nginx
    write_files:
      - owner: www-data:www-data
      - path: /etc/nginx/sites-available/default
        content: |
          server {
            listen 80;
            location / {
              proxy_pass http://${njs_lb_dns}:3000;
              proxy_http_version 1.1;
              proxy_set_header Upgrade $http_upgrade;
              proxy_set_header Connection keep-alive;
              proxy_set_header Host $host;
              proxy_cache_bypass $http_upgrade;
            }
          }
    runcmd:
      - service nginx restart

Enabling the secondary region (optional):

For the next apply let's enable the secondary region, which will deploy another vnet and create peerings on both ends. Open `deployment.tfvars` and change `enable_secondary = true`.

Run `./deploy.py -d us2 -a apply`

If you get an error, just run the command again. Occasionally a resource creation is attempted before its resource group is created.

Enable Log Analytics (optional):

You can enable log analytics for your deployment by opening `deployment.tfvars` and changing/adding `enable_log_analytics = true`.

Run `./deploy.py -d us2 -a apply`

Any VM's you have will get an extension added which will auto install the OMS agent and configure it to the log analytics instance.

Enable Remote State (optional):

So far your state has been local only, and can be found under deployments/<deployment code>/state. State should be checked into source control, but it should be encrypted first as it may contain sensitive keys from resources. The other option is to store the state in a blob storage account by following the steps below. This can be a bit tricky, as we need to play a bit of chicken and egg.

You can enable remote state for your deployment by:

- Open `deploy.py` and change REMOTE_STATE_BYPASS = True

- Open `deployment.tfvars` and change/add `enable_remote_state = true`

- Run and apply `./deploy.py -d us2 -a apply -t remote_state.tf` to deploy the remote state core resources.

- Look in the output for the following command and run it once the above apply is complete.

    Run the following command after the next apply:
    az storage blob upload -f/mnt/c/Projects/terraform-eiac/terraform/azure/deployments/us2/state/main/terraform.tfstate -c main -n terraform.tfstate

- Open `deploy.py` and change REMOTE_STATE_BYPASS = False

- Open `deploy.py` and change UPLOAD_STATE_TO_REMOTE = True to auto upload the local state file to the remote container on the next plan

- Run a plan `./deploy.py -d us2` which should show the following in the output

    Start remote state engine... Will take a few seconds...
    Uploading State file to Remote State Container...

- Change UPLOAD_STATE_TO_REMOTE = False and run `./deploy.py -d us2`. View the terminal output and verify that state is still using the remote engine. Every state modification will now result in a blob snapshot, which will allow you to easily roll back.

- Once you have verified that remote state is being used, you can back up the state folder under the deployment folder and then remove it.
