This post has been republished via RSS; it originally appeared at: Networking Blog articles.

Overview

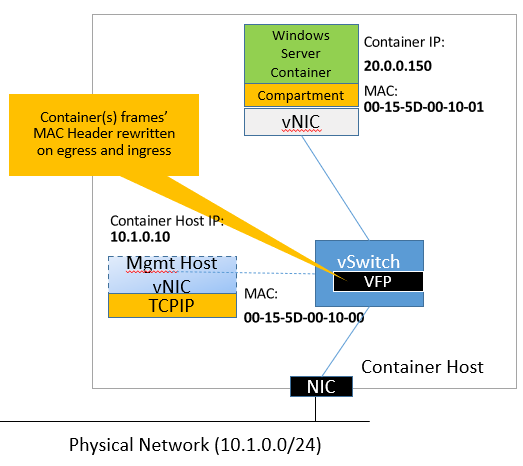

Containers attached to an l2bridge network are directly connected to the physical network through an external Hyper-V switch. L2bridge networks can be configured with the same IP subnet as the container host, with IPs from the physical network assigned statically. L2bridge networks can also be configured with a custom IP subnet by using an HNS host endpoint that is configured as a gateway.

In l2bridge, all container frames have the same MAC address as the host because of a Layer-2 address translation (MAC rewrite) operation on ingress and egress. For larger, cross-host container deployments, this reduces the stress on switches, which would otherwise have to learn the MAC addresses of sometimes short-lived containers. When container hosts are virtualized, this has the additional advantage that MAC address spoofing does not need to be enabled on the container hosts' VM NICs for container traffic to reach destinations outside of their host.
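To make the MAC rewrite concrete, here is a minimal Python model of the idea. It is a sketch, not the actual vSwitch implementation: on egress the container's source MAC is replaced with the host MAC, and on ingress the destination MAC is restored by looking up the local container that owns the destination IP. The `Frame` type, the helper names and all MAC/IP values are hypothetical.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Frame:
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str

# Hypothetical addresses, for illustration only.
HOST_MAC = "00-15-5d-00-00-01"
LOCAL_CONTAINERS = {  # container IP -> container vNIC MAC
    "10.244.3.10": "00-15-5d-aa-00-0a",
    "10.244.3.11": "00-15-5d-aa-00-0b",
}

def egress(frame: Frame) -> Frame:
    """Frame leaving the host: replace a local container's source MAC with the host MAC."""
    if frame.src_ip in LOCAL_CONTAINERS:
        return replace(frame, src_mac=HOST_MAC)
    return frame

def ingress(frame: Frame) -> Frame:
    """Frame addressed to the host MAC: restore the MAC of the container owning dst_ip."""
    if frame.dst_mac == HOST_MAC and frame.dst_ip in LOCAL_CONTAINERS:
        return replace(frame, dst_mac=LOCAL_CONTAINERS[frame.dst_ip])
    return frame

out = egress(Frame("00-15-5d-aa-00-0a", "00-11-22-33-44-55", "10.244.3.10", "10.244.2.20"))
print(out.src_mac)  # 00-15-5d-00-00-01: the physical switch only ever learns the host MAC
```

Because only the host MAC appears on the wire, switches keep a single table entry per host no matter how many containers come and go.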

There are several networking scenarios that are essential to successfully containerize and connect a distributed set of services, such as:

- Outbound connectivity (Internet access)

- DNS resolution

- Container name resolution

- Host to container connectivity (and vice versa)

- Container to container connectivity (local)

- Container to container connectivity (remote)

- Binding container ports to host ports

We will show all of the above on l2bridge and briefly touch on some more advanced use cases:

- Creating an HNS container load balancer

- Defining and applying network access control lists (ACLs) to container endpoints

- Attaching multiple NICs to a single container

Prerequisites

To follow along, two Windows Server machines (Windows Server, version 1809 or later) are required, each with:

- Containers feature and container runtime (e.g. Docker) installed

- HNS PowerShell helper module

To achieve this, run the following commands on the machines:

Creating an L2bridge network

Many of the policies needed to set up l2bridge are conveniently exposed through Docker’s libnetwork driver on Windows.

For example, an l2bridge network of name “winl2bridge” with subnet 10.244.3.0/24 can be created as follows:
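As a hedged sketch, the Python helper below assembles the `docker network create` argument list for this network from the options described in the table below. The helper name `l2bridge_create_args` is hypothetical, the DNS server 192.0.2.53 is a documentation-range placeholder you would replace with your own, and the NAT exception ranges are the examples given in the table.

```python
def l2bridge_create_args(name, subnet, gateway, dns_servers, nat_exceptions):
    """Build a `docker network create` argv for an l2bridge network (hypothetical helper)."""
    return [
        "docker", "network", "create",
        "-d", "l2bridge",
        f"--subnet={subnet}",
        f"--gateway={gateway}",
        "-o", "com.docker.network.windowsshim.dnsservers=" + ",".join(dns_servers),
        "-o", "com.docker.network.windowsshim.enable_outboundnat=true",
        "-o", "com.docker.network.windowsshim.outboundnat_exceptions=" + ",".join(nat_exceptions),
        name,
    ]

args = l2bridge_create_args(
    "winl2bridge", "10.244.3.0/24", "10.244.3.1",
    dns_servers=["192.0.2.53"],  # placeholder DNS server
    nat_exceptions=["10.244.0.0/16", "10.10.0.0/24", "10.127.130.36/30"],
)
print(" ".join(args))
```

Keeping the arguments as a list (rather than one string) lets them be passed straight to `subprocess.run` on a container host without shell quoting issues.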

The available options for network creation are documented in two separate locations, but here is a table breaking down all of the arguments used:

| Name | Description |
|---|---|
| -d | Type of driver to use for network creation |
| --subnet | Subnet range to use for the network, in CIDR notation |
| -o com.docker.network.windowsshim.dnsservers | List of DNS servers to assign to containers |
| --gateway | IPv4 gateway of the assigned subnet |
| -o com.docker.network.windowsshim.enable_outboundnat | Apply the outbound NAT HNS policy to container vNICs/endpoints. Traffic from the container will be SNAT’ed to the host IP. If the container subnet is not routable, this policy is needed for containers to reach destinations outside of their own subnet. |
| -o com.docker.network.windowsshim.outboundnat_exceptions | List of destination IP ranges, in CIDR notation, for which NAT is skipped. This will typically include the container subnet (e.g. 10.244.0.0/16), the load balancer subnet (e.g. 10.10.0.0/24), and a range for the container hosts (e.g. 10.127.130.36/30). |
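The interplay of enable_outboundnat and outboundnat_exceptions boils down to a prefix match on the destination address. The sketch below only mimics that decision using Python's `ipaddress` module and the example exception ranges from the table; it is not HNS code, and `is_snatted` is a hypothetical name.

```python
import ipaddress

# Example exception list from the table above.
NAT_EXCEPTIONS = [
    ipaddress.ip_network(p)
    for p in ("10.244.0.0/16", "10.10.0.0/24", "10.127.130.36/30")
]

def is_snatted(dst_ip: str) -> bool:
    """True if outbound traffic to dst_ip would be SNAT'ed to the host IP."""
    addr = ipaddress.ip_address(dst_ip)
    return not any(addr in net for net in NAT_EXCEPTIONS)

for dst in ("10.244.2.15", "10.10.0.10", "10.127.130.38", "203.0.113.7"):
    print(dst, "SNAT to host IP" if is_snatted(dst) else "sent with container IP")
```

Container-to-container, container-to-load-balancer and container-to-host traffic keeps the container's own source IP, while everything else (here the documentation address 203.0.113.7 standing in for an Internet destination) leaves with the host IP.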

IMPORTANT: Usually, l2bridge requires that the specified gateway (“10.244.3.1”) exists somewhere in the network infrastructure and that it provides proper routing for our designated prefix. We will show an alternative approach in which we create an HNS endpoint on the host from scratch and configure it to act as the gateway.

NOTE: You may see a network blip for a few seconds while the vSwitch is being created for the first l2bridge network.

TIP: You can create multiple l2bridge networks on top of a single vSwitch, “consuming” only one NIC. It is even possible to isolate the networks by VLAN using the -o com.docker.network.windowsshim.vlanid flag.
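Assuming the vlanid option takes a plain 802.1Q VLAN ID as its value, the extra option for each VLAN-isolated network could be generated as in this sketch. The helper name and the network names and VLAN IDs in the usage loop are hypothetical; the range check reflects the 802.1Q standard, where the 12-bit IDs 0 and 4095 are reserved, leaving 1 through 4094 usable.

```python
VLAN_OPTION = "com.docker.network.windowsshim.vlanid"

def vlan_opts(vlan_id: int) -> list:
    """Return the extra `-o` option that tags an l2bridge network with a VLAN ID."""
    # 802.1Q VLAN IDs are 12 bits wide; 0 and 4095 are reserved.
    if not 1 <= vlan_id <= 4094:
        raise ValueError(f"invalid 802.1Q VLAN ID: {vlan_id}")
    return ["-o", f"{VLAN_OPTION}={vlan_id}"]

# Two networks sharing one vSwitch/NIC but isolated by VLAN (hypothetical IDs).
for name, vlan in (("winl2bridge_a", 10), ("winl2bridge_b", 20)):
    print(name, vlan_opts(vlan))
```

Validating the ID up front catches typos before Docker or HNS is ever invoked.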

Next, we will enable forwarding on the host vNIC and set up a host endpoint to act as a quasi-gateway for the containers.

NOTE: The last netsh command above would not be needed if we supplied a proper gateway that exists in the network infrastructure at network creation. Since we created a host endpoint to use in place of a gateway, we need to add a static ARP entry with a dummy MAC so that traffic is able to leave our host without being stuck waiting for an ARP probe to resolve this gateway IP.
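The reasoning behind the static ARP entry can be illustrated with a toy neighbor cache. This is a simplified Python model, not how the Windows networking stack is implemented: a packet whose next hop has no resolved MAC is held until ARP completes, which stalls traffic if nothing answers for the gateway IP, whereas a static entry lets the frame leave immediately. The class name and the dummy MAC are hypothetical.

```python
from collections import defaultdict

class NeighborCache:
    """Toy ARP cache: packets to an unresolved next hop are held, not sent."""

    def __init__(self):
        self.entries = {}                 # next-hop IP -> MAC
        self.pending = defaultdict(list)  # next-hop IP -> packets awaiting ARP

    def add_static(self, ip: str, mac: str) -> None:
        self.entries[ip] = mac

    def send(self, next_hop: str, packet: str):
        mac = self.entries.get(next_hop)
        if mac is None:
            # No entry: the packet waits for an ARP reply that may never come.
            self.pending[next_hop].append(packet)
            return None
        return mac, packet  # the frame can leave with this destination MAC

cache = NeighborCache()
print(cache.send("10.244.3.1", "pkt1"))               # None: stuck waiting on ARP
cache.add_static("10.244.3.1", "00-11-22-33-44-55")  # hypothetical dummy MAC
print(cache.send("10.244.3.1", "pkt2"))               # ('00-11-22-33-44-55', 'pkt2')
```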

This is all that is needed to set up a local l2bridge container network with working outbound connectivity, DNS resolution, and, of course, container-to-container and container-to-host connectivity.

Multi-host Deployment

One of the most compelling reasons for using l2bridge is the ability to connect containers not only on the local machine, but also with remote machines to form a network. For communication across container hosts, one needs to plumb static routes so that each host knows where a given container lives.

For demonstration, assume there are two container hosts (Host “A” and Host “B”) with IPs 10.127.132.38 and 10.127.132.36 and container subnets 10.244.2.0/24 and 10.244.3.0/24, respectively.

To connect containers across the two hosts, the following commands need to be executed on host A:

Similarly, the following needs to be executed on host B:

Now l2bridge containers running both locally and on remote hosts can communicate with each other.
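The routing requirement generalizes to any number of hosts: each host needs one route per remote host, sending that host's container subnet to the host's IP, so N hosts need N-1 routes each, and the container subnets must not overlap. The Python sketch below only derives the (destination prefix, next hop) pairs for the two example hosts and checks for overlaps; it does not program any routes, and the helper names are hypothetical.

```python
import ipaddress
from itertools import combinations

# Container hosts from the example above: host IP and container subnet.
HOSTS = {
    "A": ("10.127.132.38", "10.244.2.0/24"),
    "B": ("10.127.132.36", "10.244.3.0/24"),
}

def check_no_overlap() -> None:
    """Static routing by subnet only works if container subnets are disjoint."""
    nets = [ipaddress.ip_network(subnet) for _, subnet in HOSTS.values()]
    for a, b in combinations(nets, 2):
        if a.overlaps(b):
            raise ValueError(f"container subnets overlap: {a} and {b}")

def routes_for(host: str) -> list:
    """(destination prefix, next hop) pairs `host` needs to reach remote containers."""
    return [(subnet, ip) for name, (ip, subnet) in sorted(HOSTS.items()) if name != host]

check_no_overlap()
for h in HOSTS:
    print(h, routes_for(h))
```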

TIP: On public cloud platforms, these routes also need to be added to the cloud network's route table so that the underlying cloud network knows how to forward packets with container IPs to the correct destination. For instance, on Azure, user-defined routes of type “virtual appliance” would need to be added to the Azure virtual network. If host A and host B were VMs provisioned in an Azure resource group “$Rg”, this could be done by issuing the following az commands:
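As a sketch of what those az commands look like, the Python snippet below generates one `az network route-table route create` command per container subnet, with next-hop type VirtualAppliance pointing at the owning host. It assumes a route table (hypothetically named containerRoutes) already exists and is associated with the subnet of the host VMs; "myResourceGroup" stands in for $Rg, and the route names are hypothetical.

```python
import shlex

# Container hosts from the example: host IP and container subnet.
HOSTS = {
    "hostA": ("10.127.132.38", "10.244.2.0/24"),
    "hostB": ("10.127.132.36", "10.244.3.0/24"),
}

def az_route_commands(resource_group: str, route_table: str) -> list:
    """One 'virtual appliance' user-defined route per container subnet."""
    cmds = []
    for name, (host_ip, subnet) in HOSTS.items():
        argv = [
            "az", "network", "route-table", "route", "create",
            "--resource-group", resource_group,
            "--route-table-name", route_table,
            "--name", f"{name}-containers",
            "--address-prefix", subnet,
            "--next-hop-type", "VirtualAppliance",
            "--next-hop-ip-address", host_ip,
        ]
        cmds.append(shlex.join(argv))  # quote safely for a POSIX shell
    return cmds

for cmd in az_route_commands("myResourceGroup", "containerRoutes"):
    print(cmd)
```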

Starting l2bridge containers

Once all static routes have been added and the l2bridge network has been created on each host, it is simple to spin up containers and attach them to the l2bridge network.

For example, to spin up two IIS containers with IDs “c1” and “c2” on the container subnet with gateway “10.244.3.1”:


Here is a video demonstrating all the connectivity paths available after launching the containers:

Publishing container ports to host ports

One way to expose containerized applications and make them more accessible is to map container ports to external ports on the host.

For example, to map TCP container port 80 to host port 8080, assuming the container's endpoint has ID “448c0e22-a413-4882-95b5-2d59091c11b8”, an ELB policy can be applied as follows:
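Conceptually, the port mapping is a destination NAT: traffic arriving at host port 8080/TCP is rewritten to port 80 on the container endpoint. The Python sketch below models only that lookup and is not the HNS ELB policy itself; the host IP and the endpoint's IP are assumed values, since the post gives only the endpoint ID and the port numbers.

```python
# Hypothetical values: the post gives only the endpoint ID and the port numbers.
HOST_IP = "10.127.132.36"        # assumed container host IP
ENDPOINT_ID = "448c0e22-a413-4882-95b5-2d59091c11b8"
ENDPOINT_IP = "10.244.3.10"      # assumed IP of that endpoint

# (protocol, host port) -> (endpoint ID, endpoint IP, container port)
PORT_MAP = {("TCP", 8080): (ENDPOINT_ID, ENDPOINT_IP, 80)}

def translate_inbound(proto: str, dst_ip: str, dst_port: int):
    """Rewrite a destination of host:port to container:port, if a mapping exists."""
    if dst_ip != HOST_IP:
        return None
    target = PORT_MAP.get((proto, dst_port))
    if target is None:
        return None  # no mapping: this policy does not forward the packet
    _, ip, port = target
    return ip, port

print(translate_inbound("TCP", HOST_IP, 8080))  # ('10.244.3.10', 80)
```

The mapping is keyed on protocol as well as port, so UDP traffic to 8080 would not be forwarded by this TCP rule.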

Here is a video demonstrating how to apply the policy to bind a TCP container port to a host port and access it:

Advanced: Setting up Load Balancers

The ability to distribute traffic across multiple containerized backends using a load balancer leads to higher scalability and reliability of applications.

For example, creating a load balancer with frontend virtual IP (VIP) 10.10.0.10:8090 on host A (IP 10.127.130.36) and backend DIPs of all local containers can be achieved as follows:
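To illustrate what the load balancer does with a flow, the Python sketch below picks a backend DIP by hashing the flow's 5-tuple, so every packet of one connection reaches the same container while different connections spread across backends. Hash-based selection is a common generic technique used here for illustration, not necessarily the algorithm HNS uses; the backend DIPs and backend port 80 are assumptions.

```python
import hashlib

VIP = ("10.10.0.10", 8090)                            # frontend from the example
DIPS = ["10.244.3.10", "10.244.3.11", "10.244.3.12"]  # hypothetical backend DIPs
BACKEND_PORT = 80                                     # assumed container port

def pick_backend(proto: str, src_ip: str, src_port: int, dst_ip: str, dst_port: int):
    """Map a flow to one DIP by hashing its 5-tuple; None if not addressed to the VIP."""
    if (dst_ip, dst_port) != VIP:
        return None
    key = f"{proto}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(DIPS)
    return DIPS[index], BACKEND_PORT

# Each flow is pinned to one backend; different source ports spread across DIPs.
for port in (50000, 50001, 50002):
    print(port, pick_backend("TCP", "10.127.130.36", port, "10.10.0.10", 8090))
```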

Finally, for the load balancer to be accessible from inside the containers, we also need to add two encapsulation rules for every endpoint that needs to access the load balancer:

Here is a video showing how to create the load balancer and access it using its frontend VIP "10.10.0.10" from host and container:

Advanced: Setting up ACLs

What if instead of making applications more available, one needs to restrict traffic between containers? l2bridge networks are ideally suited for network access control lists (ACLs) that define policies which limit network access to only those workloads that are explicitly permitted.

For example, to allow inbound network access to TCP port 80 from IP 10.244.3.75 and block all other inbound traffic to container with endpoint “448c0e22-a413-4882-95b5-2d59091c11b8”:
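The intended effect of this ACL pair can be modeled as first-match evaluation in priority order: a narrow Allow rule for TCP port 80 from 10.244.3.75, followed by a catch-all Block. The Python sketch below is a model of that logic, not the HNS ACL schema; the rule fields, the priority values, and the "lower value wins" ordering are assumptions of the model.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

@dataclass
class InboundRule:
    priority: int                        # model assumption: lower value is evaluated first
    action: str                          # "Allow" or "Block"
    protocol: Optional[str] = None       # None matches any protocol
    remote_prefix: Optional[str] = None  # None matches any source address
    local_port: Optional[int] = None     # None matches any destination port

RULES = [
    InboundRule(200, "Allow", "TCP", "10.244.3.75/32", 80),
    InboundRule(300, "Block"),  # catch-all: block every other inbound flow
]

def evaluate(src_ip: str, protocol: str, dst_port: int) -> str:
    """Return the action of the first matching rule in priority order."""
    for rule in sorted(RULES, key=lambda r: r.priority):
        if rule.protocol is not None and rule.protocol != protocol:
            continue
        if rule.remote_prefix is not None and (
            ipaddress.ip_address(src_ip) not in ipaddress.ip_network(rule.remote_prefix)
        ):
            continue
        if rule.local_port is not None and rule.local_port != dst_port:
            continue
        return rule.action
    return "Block"  # model assumption: unmatched traffic is dropped

print(evaluate("10.244.3.75", "TCP", 80))  # Allow
print(evaluate("10.244.3.76", "TCP", 80))  # Block
```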

Here is a video showing the ACL policy in action and how to apply it:

Access control lists and Windows firewalling are a deep and complex topic. HNS supports more granular capabilities for network micro-segmentation and for governing traffic flows than shown above. Most of these enhancements are available via Tigera’s Calico for Windows product and will be documented incrementally.

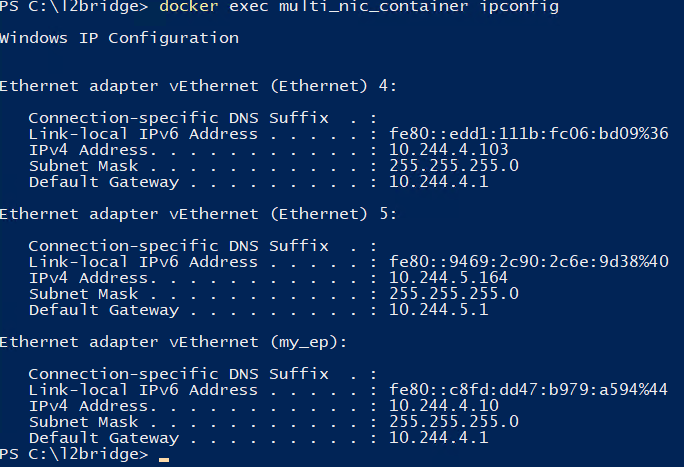

Advanced: Multi-NIC containers

Attaching multiple vNICs to a single container addresses various traffic segregation and operational concerns. For example, assume there are two VLAN-isolated l2bridge networks called “winl2bridge_4096” and “winl2bridge_4097”:

Attaching a container to both networks can be done as follows:

To add more vNICs, we can create HNS endpoints under a given network and attach them to the container’s network compartment. For example, to add another NIC in network “winl2bridge_4096”:

After executing all of the above, the container now has three vNICs ready to use (two in “winl2bridge_4096” and one in “winl2bridge_4097”). Each endpoint can have its own policies and configuration, tailored to the needs of the application and business.

Summary

We have covered several supported capabilities of l2bridge container networking, including:

- Cross-host container communication (not possible via WinNAT)

- Logical separation of networks by VLANs

- Micro-segmentation using ACLs

- Load balancers

- Binding container ports to host ports

- Attaching multiple network adapters to containers

L2bridge networks require upfront configuration to set up correctly, but offer many useful features as well as enhanced performance and control over the container network. It is always recommended to leverage orchestrators such as Kubernetes, which use CNI plugins to streamline and automate many of these configuration tasks while still rewarding advanced users with a similar level of configurability. All of the HNS APIs used above, and many more, are also open source in a Golang shim (see hcsshim).

As always, thanks for reading and please let us know about your scenarios or questions in the comments section below!