This post has been republished via RSS; it originally appeared at: AI Customer Engineering Team articles.

The NLP Recipes Team

Text summarization is a common problem in Natural Language Processing (NLP). Huge volumes of new text are generated every day across channels such as news, social media, and tracking systems, and automatic text summarization has become essential for digesting and understanding that content. News agencies, for example, use such models to generate headlines and titles for news articles, which saves a great deal of human effort. More broadly, automated text summarization powers business scenarios in a wide range of industry verticals, including media and entertainment, retail, technology, and financial services (for example, robo-advisors).

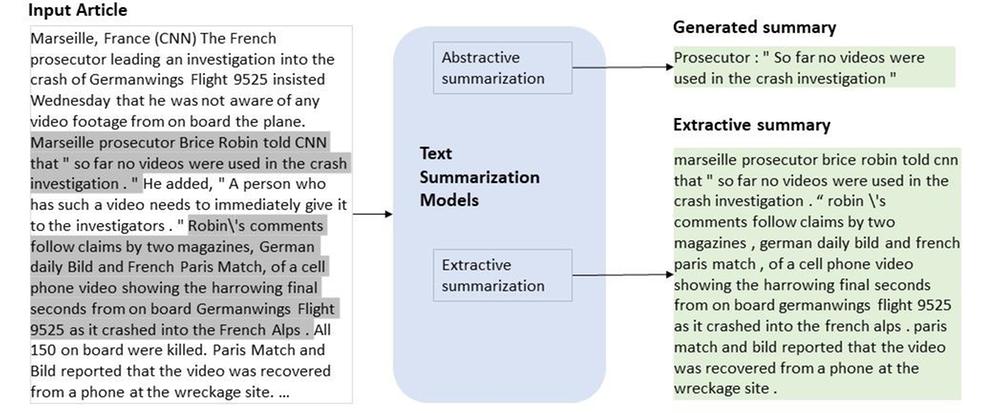

The goal of text summarization is to produce a concise and accurate summary of a document while preserving its key information. Text summarization methods are either extractive or abstractive. Extractive models select (extract) existing key chunks or sentences from the document. Abstractive models generate new sequences of words (or sentences) that describe or summarize the input document.
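To make the extractive idea concrete, here is a minimal, hypothetical sketch that is unrelated to the models in NLP-recipes. It scores each sentence by the average document frequency of its non-stopword tokens and keeps the top `k` sentences, copied verbatim, in their original order. Learned extractive models such as BERTSum replace this word-count heuristic with scores predicted by a neural network. The sentence splitter and stopword list are simplifying assumptions.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "on", "for",
             "is", "are", "was", "were", "it", "that", "this", "with", "as", "by"}


def split_sentences(text):
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def tokenize(sentence):
    """Lowercased word tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z0-9']+", sentence.lower()) if w not in STOPWORDS]


def extractive_summary(text, k=2):
    """Return the k highest-scoring sentences, kept in document order."""
    sentences = split_sentences(text)
    freqs = Counter(w for s in sentences for w in tokenize(s))

    def score(sentence):
        tokens = tokenize(sentence)
        # Average (not total) frequency, so long sentences are not automatically favored.
        return sum(freqs[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:k])]


doc = ("The storm hit the coast on Monday. The storm flooded coast roads. "
       "Officials met. Power returned later.")
print(extractive_summary(doc, k=2))
```

Because every output sentence is copied from the input, an extractive summary can never contain wording that the source does not; that is exactly the property abstractive models give up in exchange for more fluent, compressed output.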

In our newest release of NLP-recipes, we have included utilities and notebook examples for both extractive and abstractive summarization methods. The available models are:

- UniLM: UniLM is a state-of-the-art model developed by Microsoft Research Asia (MSRA). The model is pre-trained on a large unlabeled natural language corpus (English Wikipedia and BookCorpus) and can be fine-tuned on different types of labeled data for various NLP tasks like text classification and abstractive summarization.

Supported models: unilm-large-cased and unilm-base-cased.

- BERTSum: BERTSum is an encoder architecture designed for text summarization. It can be used together with different decoders to support both extractive and abstractive summarization.

Supported models: bert-base-uncased (extractive and abstractive) and distilbert-base-uncased (extractive).
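As a rough illustration of how BERTSum feeds a multi-sentence document to its encoder, the sketch below follows the input format described in the original BERTSum paper. It places a [CLS] token before each sentence and a [SEP] token after it, and alternates segment ids between 0 and 1 from one sentence to the next (interval segment embeddings). The encoder output at each [CLS] position then represents that sentence, and the extractive variant scores those vectors. The function name is hypothetical and not part of the NLP-recipes API. Whitespace splitting stands in for the real WordPiece tokenizer, and a real pipeline would also truncate inputs to BERT's 512-token limit.

```python
def bertsum_inputs(sentences):
    """Build BERTSum-style tokens, interval segment ids, and [CLS] positions.

    Hypothetical helper for illustration only; lowercasing mimics an
    uncased model, and str.split() replaces a real subword tokenizer.
    """
    tokens, segment_ids, cls_positions = [], [], []
    for i, sentence in enumerate(sentences):
        # Remember where this sentence's [CLS] token lands in the flat sequence.
        cls_positions.append(len(tokens))
        piece = ["[CLS]"] + sentence.lower().split() + ["[SEP]"]
        tokens.extend(piece)
        # Interval segment embeddings: even sentences get 0, odd sentences get 1.
        segment_ids.extend([i % 2] * len(piece))
    return tokens, segment_ids, cls_positions


tokens, segments, cls = bertsum_inputs(["The storm hit.", "Roads flooded."])
print(tokens)
print(segments)
print(cls)
```

An extractive head then only needs to gather the encoder outputs at `cls_positions` and predict one keep/drop score per sentence.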

Figure 1: Sample outputs. The sample generated (abstractive) summary is the output of a fine-tuned “unilm-base-cased” model, and the sample extractive summary is the output of a fine-tuned “distilbert-base-uncased” model; both models were fine-tuned on the CNN/Daily Mail dataset.

All model implementations support distributed training and multi-GPU inference. For abstractive summarization, we also support mixed-precision training and inference. Please also check out our Azure Machine Learning distributed training example for extractive summarization in the repository.

Informativeness, fluency, and succinctness are the three aspects used to evaluate the quality of a summary. To evaluate a summary quantitatively, ROUGE scores are commonly used. ROUGE is a standard family of metrics that measures the overlap (for example, shared n-grams or the longest common subsequence) between machine-generated text and human-written reference text. Because setting up the environment to run ROUGE evaluation is not straightforward, we have included utilities and an example notebook that show how to set up the evaluation environment and how to compute the metrics.
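To show what these scores measure, here is a simplified, pure-Python sketch of ROUGE-N and ROUGE-L F1. It is not the official ROUGE-1.5.5 Perl package or the evaluation utilities shipped with NLP-recipes. It tokenizes on whitespace and skips stemming and other preprocessing, so its numbers will not exactly match official ROUGE results.

```python
from collections import Counter


def _ngrams(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def _f1(overlap, candidate_total, reference_total):
    """Harmonic mean of precision and recall; 0.0 when there is no overlap."""
    if overlap == 0 or candidate_total == 0 or reference_total == 0:
        return 0.0
    precision = overlap / candidate_total
    recall = overlap / reference_total
    return 2 * precision * recall / (precision + recall)


def rouge_n(candidate, reference, n=1):
    """ROUGE-N F1 using clipped n-gram counts (Counter '&' takes the minimum)."""
    cand = _ngrams(candidate.lower().split(), n)
    ref = _ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())
    return _f1(overlap, sum(cand.values()), sum(ref.values()))


def _lcs_length(a, b):
    """Longest common subsequence length via row-by-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference):
    """ROUGE-L F1 based on the longest common subsequence of tokens."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    return _f1(_lcs_length(cand, ref), len(cand), len(ref))


candidate = "the cat sat on the mat"
reference = "the cat is on the mat"
print(rouge_n(candidate, reference, 1))  # 5 of 6 unigrams match
print(rouge_n(candidate, reference, 2))  # 3 of 5 bigrams match
print(rouge_l(candidate, reference))     # LCS "the cat on the mat" has 5 tokens
```

ROUGE-1 and ROUGE-2 reward word- and phrase-level overlap, while ROUGE-L credits in-order matches even when they are not contiguous, which is why papers usually report all three.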

In our notebook examples, we use the CNN/Daily Mail dataset. To apply the models to your own dataset, please consult the cnndm.py script, which shows how the CNN/Daily Mail data is prepared for the models.
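Datasets of (document, reference summary) pairs do not come with the per-sentence 0/1 labels that extractive training needs. A common way to derive them, used in the original BERTSum work, is a greedy oracle: repeatedly add the document sentence that most increases ROUGE-1 + ROUGE-2 against the reference, and stop when no sentence helps or a size limit is reached. The sketch below is a hypothetical helper, not the code in cnndm.py. It assumes documents are already split into sentences and uses a simplified whitespace-token ROUGE F1; the default of three sentences is also an assumption.

```python
from collections import Counter


def _ngrams(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def _rouge_f1(cand_tokens, ref_tokens, n):
    """Simplified ROUGE-N F1 between two token lists."""
    cand, ref = _ngrams(cand_tokens, n), _ngrams(ref_tokens, n)
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def greedy_oracle_labels(doc_sentences, summary, max_sentences=3):
    """Return one 0/1 extractive label per document sentence (hypothetical helper)."""
    ref = summary.lower().split()
    sent_tokens = [s.lower().split() for s in doc_sentences]
    selected, best_score = [], 0.0
    for _ in range(max_sentences):
        best_idx = None
        for i in range(len(sent_tokens)):
            if i in selected:
                continue
            # Score the candidate extract with sentences kept in document order.
            cand = [t for j in sorted(selected + [i]) for t in sent_tokens[j]]
            score = _rouge_f1(cand, ref, 1) + _rouge_f1(cand, ref, 2)
            if score > best_score:
                best_score, best_idx = score, i
        if best_idx is None:  # no remaining sentence improves the score
            break
        selected.append(best_idx)
    return [1 if i in selected else 0 for i in range(len(doc_sentences))]


doc = ["the storm hit the coast", "officials praised volunteers", "schools reopen on friday"]
summary = "the storm hit the coast and schools reopen friday"
print(greedy_oracle_labels(doc, summary))
```

The early stop matters: adding sentences beyond the point where ROUGE stops improving would label padding as "summary-worthy" and blur the training signal.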

For more information, please visit https://github.com/microsoft/nlp-recipes.

To submit a feature request, please open an issue at https://github.com/microsoft/nlp-recipes/issues.