This post has been republished via RSS; it originally appeared at: Azure Compute articles.

Azure Accelerated Networking is now supported on the modern Azure HPC VM sizes HB, HC, and HBv2. Accelerated Networking enables Single Root I/O Virtualization (SR-IOV) for a VM’s SmartNIC, resulting in throughput of up to 30 gigabits/sec along with lower and more consistent latencies.

Note that this enhanced Ethernet capability does not replace the dedicated InfiniBand network included with these modern HPC VM types. Instead, Accelerated Networking enhances the functions that the dedicated Ethernet interface has always performed, such as loading OS images, connecting to Azure Storage resources, and communicating with other resources in a customer’s VNET.

When enabling Accelerated Networking on supported Azure HPC VMs it is important to understand the changes you will see within the VM and what those changes may mean for your HPC workload:

A new network interface:

Because the front-end Ethernet NIC is now SR-IOV enabled, it shows up as a new network interface. For example, the following screenshot is from an Azure HBv2 VM instance.

Figure 1: "mlx5_0" is the Ethernet interface, and "mlx5_1" is the InfiniBand interface.

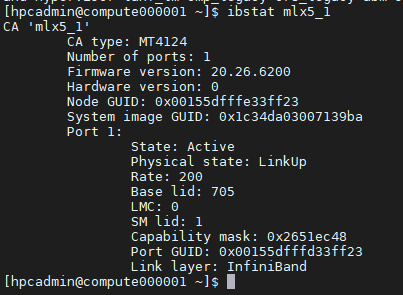

Figure 2: InfiniBand interface status (mlx5_1)

Impact of additional network interface:

Because InfiniBand device assignment is asynchronous, the device order in the VM can be random: some VMs may get "mlx5_0" as the InfiniBand interface, whereas others may get "mlx5_1".

However, the device naming can be made deterministic by using the udev rule proposed by rdma-core.

With the above udev rule, the interfaces receive persistent names; the examples later in this post use ibP57247p0s2 for the InfiniBand interface.

Changes to MPI launcher scripts:

Most MPIs do not need any changes to MPI launcher scripts. However, certain MPI libraries, especially those using UCX without a Libfabric layer, may need the InfiniBand device to be specified explicitly because of the additional network interface introduced with Accelerated Networking.

For example, OpenMPI using UCX (pml=ucx) fails by default as it tries to use the first interface (i.e. the Accelerated Networking NIC).

This can be addressed by explicitly specifying the correct interface (UCX_NET_DEVICES=ibP57247p0s2:1).
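On VMs where the device order varies, the correct interface can also be looked up at run time instead of being hard-coded. The sketch below assumes the usual Linux sysfs layout, in which each RDMA device under /sys/class/infiniband exposes a ports/<n>/link_layer file containing either "InfiniBand" or "Ethernet". The function name find_infiniband_devices is purely illustrative.

```python
from pathlib import Path


def find_infiniband_devices(sysfs_root="/sys/class/infiniband"):
    """Return sorted (device, port) pairs whose link layer is InfiniBand.

    Assumes the layout <sysfs_root>/<device>/ports/<port>/link_layer.
    """
    root = Path(sysfs_root)
    found = []
    if not root.is_dir():
        return found  # no RDMA devices visible on this host
    for device in root.iterdir():
        ports = device / "ports"
        if not ports.is_dir():
            continue
        for port in ports.iterdir():
            link_layer = port / "link_layer"
            # Skip Ethernet ports such as the Accelerated Networking NIC
            if link_layer.is_file() and link_layer.read_text().strip() == "InfiniBand":
                found.append((device.name, int(port.name)))
    return sorted(found)
```

Each returned pair can be joined as "device:port" (for example with f"{device}:{port}") to produce the value format that UCX_NET_DEVICES uses.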

Below are the environment parameters for specifying the interface names for various MPI libraries:

MVAPICH2: MV2_IBA_HCA=ibP57247p0s2

OpenMPI & HPC-X: UCX_NET_DEVICES=ibP57247p0s2:1

IntelMPI (mlx provider): UCX_NET_DEVICES=ibP57247p0s2:1
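The settings above can be gathered into a small helper so that a launcher script picks the right variable for each MPI library. This is a minimal sketch: the dictionary keys and the function name ib_env_for are illustrative labels rather than identifiers from any MPI library, while the variable names and value formats are taken from the list above.

```python
# Environment variable each MPI library reads to select the InfiniBand device,
# as listed above. The dictionary keys are illustrative labels only.
DEVICE_ENV_VAR = {
    "mvapich2": "MV2_IBA_HCA",
    "openmpi": "UCX_NET_DEVICES",
    "hpcx": "UCX_NET_DEVICES",
    "intelmpi-mlx": "UCX_NET_DEVICES",
}


def ib_env_for(mpi, device, port=1):
    """Return the {variable: value} mapping that pins `mpi` to `device`."""
    var = DEVICE_ENV_VAR[mpi.lower()]
    # UCX takes "device:port"; MV2_IBA_HCA takes the bare device name.
    value = f"{device}:{port}" if var == "UCX_NET_DEVICES" else device
    return {var: value}
```

A launcher could merge the result into its environment, for example {**os.environ, **ib_env_for("openmpi", "ibP57247p0s2")}, and pass that as the env argument of subprocess.run when starting the MPI job.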