This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

At Ignite we announced the public preview of Azure Synapse data explorer. To complement existing SQL and Apache Spark runtime engines, the new data explorer runtime engine is optimized for efficient log analytics using powerful indexing technology to automatically index free-text and semi-structured data commonly found in telemetry data, such as logs, time series, events, and more.

We discussed multiple use cases is our announcement blog. In this one we are going to discuss about the popular use case of industrial IoT Analytics solutions by taking the example of two fictional companies in automotive, Adatum and Contoso.

Alice & IoT

Alice, a production manager at Adatum, receives a complaint regarding the quality of a batch of steering components shipped to Contoso Inc (purchase order 300000004). So the only thing she has so far is a Purchase order.

The information needed to investigate the issue is scattered across multiple systems, with no straightforward way to combine it or analyze it. There is purchase order data in an SAP manufacturing execution system with the production schedule and the actual telemetry data from the production line.

Luckily Adatum built its data estate on .

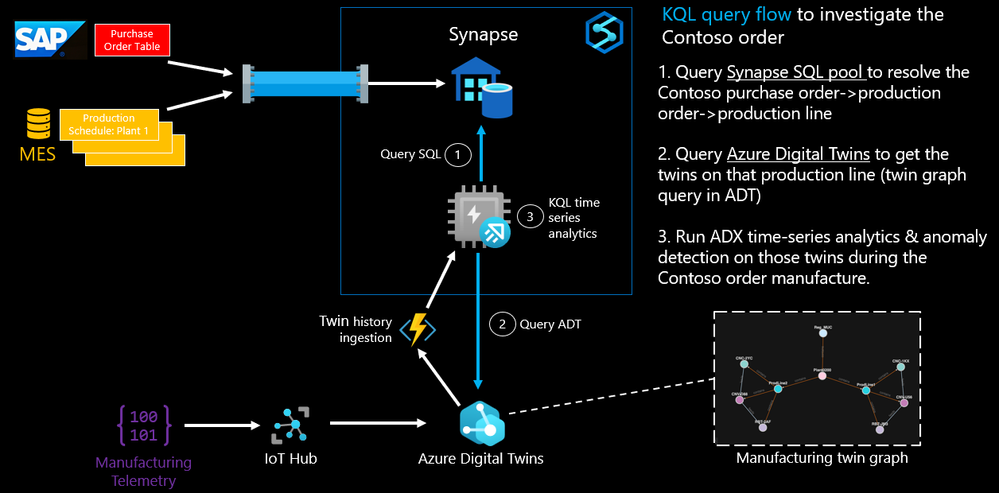

The system which Adatum built is based on the following rough architecture:

On this schema you see two data integration flows:

- The data integration using Synapse pipelines for Production Order and schedule data

- The data integration of the telemetry data of the Production line via IoTHub, Azure Digital Twins into Data Explorer

Azure Synapse data explorer can federate queries across multiple Azure Synapse analytics engines. This is possible because of plugins like sql_request or azure_digital_twins_query_request.

In this specific scenario the engine executes a SQL request to get purchase order data (plant id, line id & production date). Based on the plant and line id it queries the Azure Digital Twins to get the twin identifier of the production line components. Finally, it joins that data with the actual telemetry data stored in Azure Synapse data explorer.

The KQL query flow can be found on GitHub: KQL queries to contextualize timeseries data (github.com).

With those components in place Alice was able to quickly spot the anomaly in the telemetry data and resolved the complaint.

Conclusion

With the help of Azure Synapse Analytics, Adatum was able to federate a complex query across multiple engines. The KQL query reached out to data exported from SAP and used it to get the result of an Azure Digital Twins query. Finally, the timeseries data was used to detect anomalies quickly.

Next steps