This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

This article reviews a common pattern of streaming data (i.e. real-time message ingestion) in Synapse dedicated pool. It opens a discussion on the simple standard way to implement this, as well as the challenges and drawbacks. It then presents an alternate solution which enables optimal performance and greatly reduces maintenance tasks when using clustered column store indexes. This is aimed at developers, DBAs, architects, and anyone who works with streams of data that are captured in real-time.

Scenario description

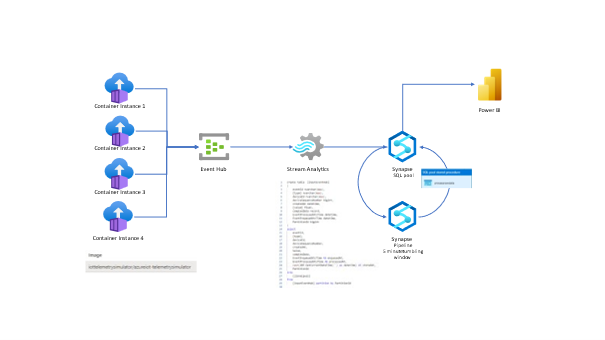

Description of this scenario is as follow: we have messages produced by sensors (simulated by Azure container instances) sent to an Event Hub, then processed by an ASA job which redirects its output into a Synapse table named “rawdata”. At this stage there are two options:

- query directly “rawdata” table.

- use “rawdata” as a staging table, then build a pipeline that process the data every ten minutes and store it in a final fact table. It consists of an UPSERT statement to ensure existing records are updated (in case they are resent), otherwise inserts them into a final fact table named “rawdata_fact”.

General solution Design Architecture

Building blocks of the current solution can be found on the following link

This sample deploys within one resource group:

- Container instances simulating IoT devices sending their temperature and amount of CO2.

- Event Hub ingesting messages in real time.

- Stream Analytics job preprocessing messages and redirect them into Synapse SQL pool.

How to handle the data ingested

In case ASA job loads data directly in the fact table and assuming it has a clustered column store index, what happens is:

- no delta store compression can take place as the table is constantly inserted

- simultaneous COPY INTO statements issued by ASA job can potentially trigger new delta stores creation, hence reduce the performance and/or require index maintenance tasks.

See illustration below which shows this:

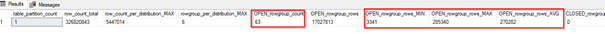

In case ASA job loads the telemetry data into a staging table named "rawdata" then upsert the fact table, we can see that what happens is the following:

- there is one delta store per distribution where the insertions land, and no closed rowgroup hence all others are compressed rowgroups.

- the tuple mover at each compute node ensures that once the delta stores get filled up they are converted in compressed rowgroups.

See illustration below which shows this:

This task is constituted of:

- A simple pipeline with a stored procedure activity.

- A tumbling windows trigger that runs the pipeline every 10 minutes.

- a stored procedure scheduled to update the fact from the staging:

Then we can a simple view on to of both tables so we have the latest data as well as the processed one:

And monitor in near real time the average temperature and CO2 values:

Takeaways

We can stream data directly from Stream Analytics into a Synapse dedicated pool table. We can avoid the clustered store index maintenance by using an intermediate table and a scheduled pipeline.