This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

For decades, companies have relied on Microsoft and SAP software to run their most mission-critical operations. Today we’re excited to share the upcoming public preview of SAP Change Data Capture (CDC) in Azure Data Factory (ADF), launching June 30, 2022. This new data connector streamlines access to SAP data within core Azure services like Azure Synapse Analytics and Azure Machine Learning.

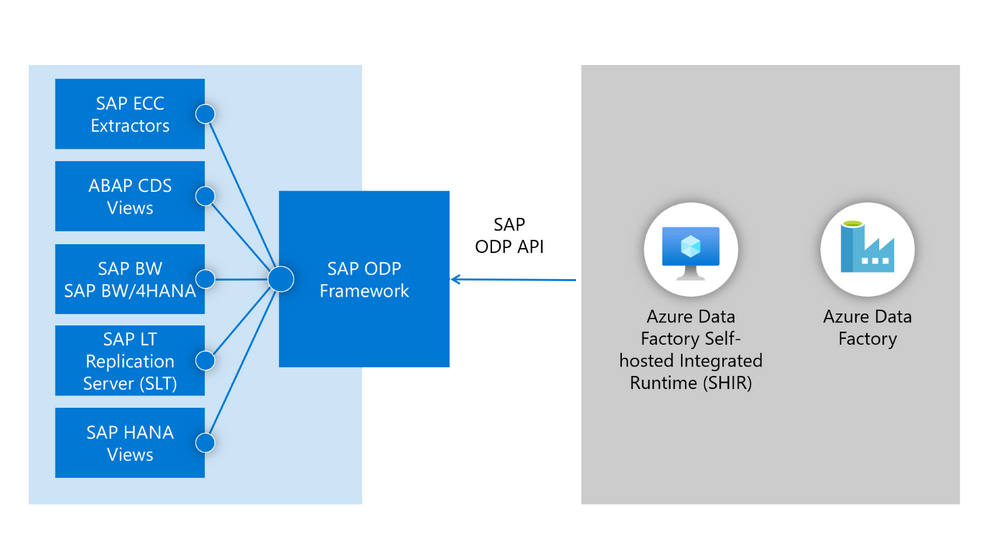

The new SAP CDC connector leverages the SAP Operational Data Provisioning (ODP) framework, an established best practice for data integration within SAP landscapes. ODP provides access to a wide range of sources across all major SAP applications and comes with built-in CDC capabilities.

Sign up today: Watch this new feature in action and find out how to join the public preview in our free launch webinar.

Background

For many of our customers, SAP systems are critical to their business operations. As organizations mature and move from purely descriptive analytics toward predictive and prescriptive analytics, they want to combine their SAP data with non-SAP data in Azure, where they can use advanced data integration and analytics capabilities to generate timely business insights. ADF is a data integration (ETL/ELT) Platform as a Service (PaaS), and it currently offers six connectors for SAP data integration.

These connectors can only extract data in batches, where each batch treats old and new data equally without identifying data changes (“batch mode”). This extraction mode isn’t optimal for large data sets that change often, such as tables with millions or even billions of records. Keeping your copied SAP data fresh by frequently extracting it in full is expensive and inefficient.

There’s a manual, limited workaround to extract mostly new or updated records, but it requires a column with timestamps or monotonically increasing values, plus continuously tracking the highest value seen since the last extraction (“watermarking”). Unfortunately, some tables have no column that can be used for watermarking, and this process can’t detect deleted records.
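
The watermarking workaround can be sketched in a few lines of Python. This is a minimal illustration, not ADF or SAP code: the `Row` type, its `changed_at` column, and the `extract_since` helper are hypothetical. It shows why deletions slip through: a deleted row simply disappears from the source, so no filter on the watermark column can ever return it.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Row:
    key: int
    value: str
    changed_at: datetime  # hypothetical "last changed" timestamp column


def extract_since(table: list[Row], watermark: datetime) -> tuple[list[Row], datetime]:
    """Return rows changed after the watermark and the new high watermark."""
    changed = [row for row in table if row.changed_at > watermark]
    # Track the highest value seen so far; keep the old watermark if nothing changed.
    new_watermark = max((row.changed_at for row in changed), default=watermark)
    return changed, new_watermark


# First run: both rows are newer than the starting watermark.
source = [Row(1, "a", datetime(2022, 6, 2)), Row(2, "b", datetime(2022, 6, 3))]
first_batch, wm1 = extract_since(source, datetime(2022, 6, 1))

# In the source, row 1 is updated and row 2 is deleted.
source = [Row(1, "a2", datetime(2022, 6, 4))]
second_batch, wm2 = extract_since(source, wm1)
# second_batch contains only the update to row 1; nothing signals that row 2 is gone.
```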

Our customers have been asking for a new connector that can extract only data changes (inserts/updates/deletes = “deltas”) using the CDC capabilities provided by SAP systems (“CDC mode”). To meet this need, we’ve built a new SAP CDC connector leveraging the SAP ODP framework. This new connector can connect to all SAP systems that support ODP, such as R/3, ECC, S/4HANA, BW, and BW/4HANA, either directly at the application layer or indirectly through an SAP Landscape Transformation (SLT) replication server acting as a proxy. The connector can extract SAP data fully or incrementally, covering not only physical tables but also logical objects created on top of those tables, such as ABAP Core Data Services (CDS) views, without watermarking.

How does it work?

Our new SAP CDC connector can extract various data source (“provider”) types, such as:

- SAP extractors, originally built to extract data from SAP ECC and load it into SAP BW

- ABAP CDS views, the new data extraction standard for SAP S/4HANA

- InfoProviders and InfoObjects in SAP BW or BW/4HANA

- SAP application tables, when using SLT replication server as a proxy

These providers run on SAP systems and convert full or incremental data into data packages in the Operational Delta Queue (ODQ), where they can be consumed by ADF pipelines using the SAP CDC connector (“subscriber”).
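
To make the provider/subscriber relationship concrete, here is a toy in-memory model of a delta queue. It is a sketch under loud assumptions, not the SAP ODQ API or the ADF connector: the `DeltaQueue` class, the per-subscriber cursor, and the `op` field are hypothetical, and a real ODQ also handles initial full loads for new subscriptions, request confirmation, and retention. What it illustrates is that each subscriber independently receives only the packages it has not yet consumed.

```python
from dataclasses import dataclass, field


@dataclass
class DeltaQueue:
    """Toy model: a provider appends packages; each subscriber reads only
    the packages it has not consumed yet (hypothetical, not SAP's API)."""
    packages: list[list[dict]] = field(default_factory=list)
    cursors: dict[str, int] = field(default_factory=dict)

    def publish(self, package: list[dict]) -> None:
        # Provider side: append a new data package to the queue.
        self.packages.append(package)

    def fetch(self, subscriber: str) -> list[dict]:
        # Subscriber side: return every record after this subscriber's cursor,
        # then advance the cursor so the same records aren't delivered twice.
        start = self.cursors.get(subscriber, 0)
        pending = [record for package in self.packages[start:] for record in package]
        self.cursors[subscriber] = len(self.packages)
        return pending


queue = DeltaQueue()
queue.publish([{"key": 1, "op": "I"}, {"key": 2, "op": "I"}])  # initial load
first = queue.fetch("adf_pipeline")        # both inserts
queue.publish([{"key": 2, "op": "D"}])                          # later delta
second = queue.fetch("adf_pipeline")       # only the deletion
third = queue.fetch("adf_pipeline")        # nothing new
other = queue.fetch("another_subscriber")  # all three records, tracked independently
```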

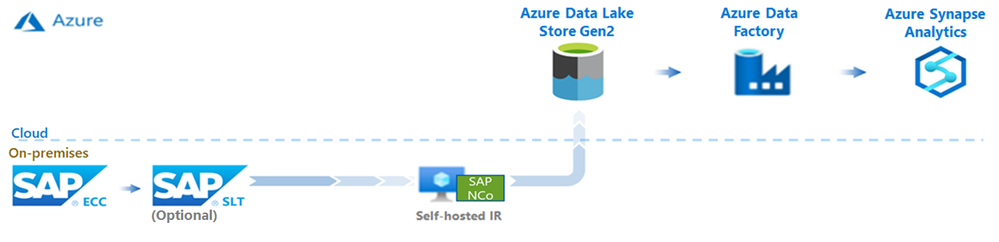

You can run the ADF copy activity with the SAP CDC connector on a self-hosted integration runtime (SHIR) to extract raw SAP data and load it into any destination, such as Azure Blob Storage or Azure Data Lake Storage (ADLS) Gen2, in CSV or Parquet format, which archives all historical changes. You can then run the ADF data flow activity on an Azure Databricks/Apache Spark cluster (Azure IR) to transform the raw SAP data, merge all changes, and load the result into any destination, such as Azure SQL Database or Azure Synapse Analytics, which in effect replicates your SAP data.
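
The “merge all changes” step amounts to replaying change records against a keyed target in order. The sketch below assumes each change record carries a hypothetical sequence number (`seq`), a `key`, an operation flag (`I`, `U`, or `D`), and the new row `data`; the actual columns produced by the SAP CDC connector may differ, and an ADF data flow would perform this at scale rather than in a Python dict.

```python
from typing import Iterable


def merge_changes(target: dict[int, dict], changes: Iterable[dict]) -> dict[int, dict]:
    """Apply insert/update/delete records, in sequence order, to a keyed target."""
    merged = dict(target)  # shallow copy so the input target is left untouched
    for change in sorted(changes, key=lambda c: c["seq"]):
        key = change["key"]
        if change["op"] in ("I", "U"):
            merged[key] = change["data"]  # upsert the latest row image
        elif change["op"] == "D":
            merged.pop(key, None)         # deletes are applied, unlike with watermarking
        else:
            raise ValueError(f"unknown operation {change['op']!r}")
    return merged


target = {1: {"name": "old"}, 2: {"name": "keep"}}
changes = [
    {"seq": 2, "key": 1, "op": "D", "data": None},
    {"seq": 1, "key": 1, "op": "U", "data": {"name": "new"}},
    {"seq": 3, "key": 3, "op": "I", "data": {"name": "added"}},
]
result = merge_changes(target, changes)
```

Sorting by sequence first matters: row 1 is updated and then deleted, so it must be absent from `result`, while row 3 is inserted and row 2 is untouched.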

If you load the merged result into ADLS Gen2 in Delta format (Delta Lake/Lakehouse), you can query it using an Azure Synapse serverless SQL pool or Apache Spark pool to produce snapshots of your SAP data as of any specified point in the past (“time travel”). ADF pipelines containing these copy and data flow activities can be auto-generated from ADF templates and run frequently with ADF tumbling window triggers, replicating SAP data into Azure with low latency and without watermarking.
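
Tumbling window triggers fire on a series of fixed-size, non-overlapping, contiguous time intervals. The sketch below shows how such windows partition a time range; the `tumbling_windows` helper is hypothetical and only illustrates the scheduling idea, not the ADF trigger definition itself. It emits only complete windows, on the assumption that a run starts once its window has elapsed.

```python
from datetime import datetime, timedelta


def tumbling_windows(start: datetime, end: datetime,
                     size: timedelta) -> list[tuple[datetime, datetime]]:
    """Split [start, end) into fixed-size, non-overlapping, contiguous windows."""
    if size <= timedelta(0):
        raise ValueError("window size must be positive")
    windows = []
    window_start = start
    # Only emit windows that fit completely before `end`.
    while window_start + size <= end:
        windows.append((window_start, window_start + size))
        window_start += size
    return windows


start = datetime(2022, 6, 30, 0, 0)
end = datetime(2022, 6, 30, 1, 0)
windows = tumbling_windows(start, end, timedelta(minutes=15))
```

With a 15-minute window size, one hour yields four back-to-back pipeline runs. Because the SAP CDC connector tracks deltas through the ODQ rather than a watermark column, each run can pick up whatever changed since the previous run.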

Our new SAP CDC solution in ADF, including the SAP CDC connector and data replication templates, will be released for public preview on June 30, 2022.

To learn more about this new solution and how to join the public preview, please register (for free) and attend the launch webinar on June 30, 2022.
